
GPT-5 is OpenAI’s fifth-generation large language model released on August 7, 2025, featuring unified reasoning and multimodal capabilities with a 400K token context window, 45% fewer hallucinations, and advanced agentic task execution. It represents a major architectural advancement combining reasoning-first design with real-time adaptive routing between fast and deep-thinking modes.


GPT-5 is OpenAI’s fifth-generation large language model, officially released on August 7, 2025, representing a fundamental architectural shift in how AI systems approach reasoning, multimodal processing, and task execution. Unlike its predecessors, GPT-5 unifies advanced reasoning capabilities with non-reasoning functionality into a single, adaptive system that automatically routes queries between fast processing and deep-thinking modes based on complexity. The model features a 400,000 token context window, enabling it to process entire books, lengthy meeting transcripts, and large code repositories without losing contextual coherence. Most significantly, GPT-5 produces approximately 45% fewer hallucinations than GPT-4o and uses 50-80% fewer output tokens than o3 on comparable tasks, making it substantially more accurate and cost-effective for enterprise and consumer applications. This represents a watershed moment in generative AI development, as GPT-5 moves beyond being merely “a better chatbot” to functioning as a genuine reasoning engine capable of complex multi-step problem-solving, agentic task execution, and sophisticated multimodal understanding across text, images, and video.

The journey to GPT-5 spans seven years of incremental and revolutionary advances in large language model architecture and training methodology. The original GPT (Generative Pre-trained Transformer) models, introduced by OpenAI starting in 2018, demonstrated that scaling transformer architectures on massive text datasets could yield surprisingly coherent language generation. GPT-2 (2019) attracted widespread attention for generating coherent multi-paragraph text, while GPT-3 (2020), with its 175 billion parameters, cemented large language models as a transformative AI technology. However, these early models suffered from significant limitations: they hallucinated frequently, struggled with complex reasoning, and required separate specialized models for different tasks. GPT-4 (2023) introduced multimodal capabilities and improved reasoning, but users still had to switch manually between different model variants. The intermediate GPT-4.5 (Orion) model, released in February 2025, served as a transitional bridge that scaled up conventional pre-training, while OpenAI’s specialized o1 and o3 models developed the reasoning-first approach. This progression culminated in GPT-5, which synthesizes these lines of work into a unified architecture that eliminates the need for model switching while dramatically improving accuracy and reasoning depth. According to industry analysis, over 78% of enterprises now use AI-driven content monitoring tools, making GPT-5’s improved accuracy particularly valuable for brand tracking and citation monitoring across AI platforms.

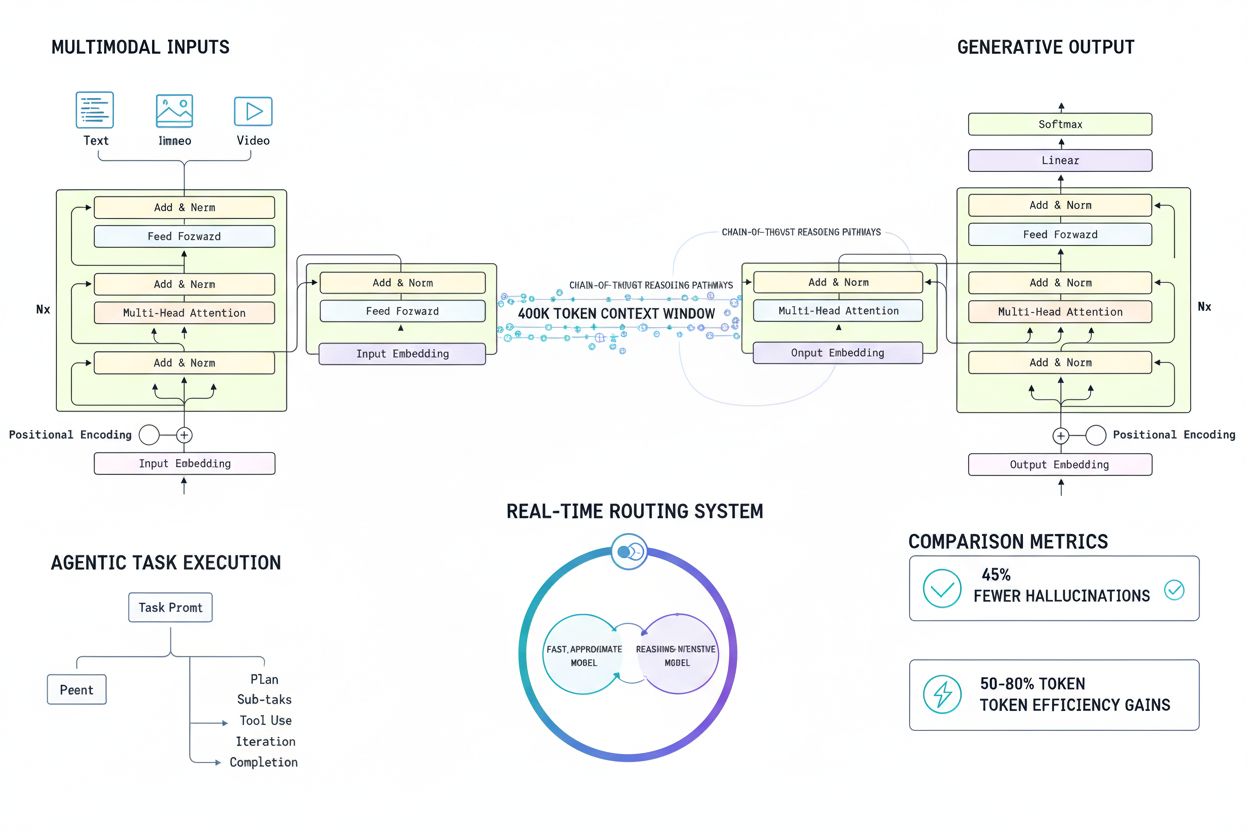

GPT-5’s architecture represents a departure from traditional transformer-only designs by incorporating a real-time adaptive routing system that functions as an intelligent traffic controller for incoming queries. When a user submits a prompt, the routing system analyzes the query’s complexity and automatically directs it to either a fast, high-throughput model for straightforward requests or a “thinking” model for complex reasoning tasks requiring multi-step logic. This unified approach eliminates the computational waste of previous systems where users had to choose between speed and reasoning depth. The model’s 400,000 token context window is approximately 3.1 times larger than GPT-4o’s ~128,000 tokens, giving it substantially more room for long-form content. Each GPT-5 variant (gpt-5, gpt-5-mini, gpt-5-nano, and gpt-5-chat) runs on the same unified architecture but is optimized for different performance-cost trade-offs. The gpt-5 variant, designed for maximum reasoning capability, has a September 30, 2024 knowledge cutoff, while gpt-5-mini and gpt-5-nano have May 30, 2024 cutoffs but offer significantly faster inference. Under the hood, GPT-5 integrates chain-of-thought reasoning natively, allowing the model to break complex problems into intermediate steps before generating final answers. OpenAI has not published lower-level details such as changes to self-attention or positional encoding, so claims that such changes explain GPT-5’s better handling of long-range dependencies remain speculative.
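The routing idea can be illustrated with a deliberately simple sketch. OpenAI has not published how GPT-5’s router decides, so everything below is hypothetical: the cue words in `REASONING_CUES`, the length bonus, and the `threshold` are invented for illustration, and a production router would be a learned classifier rather than keyword matching. The sketch only shows the shape of the decision: score the query, then send it down a “fast” or “thinking” path.

```python
import re
from dataclasses import dataclass

# Hypothetical cue phrases that hint at multi-step reasoning. OpenAI has not
# published how GPT-5's router decides; a real router would be a learned model.
REASONING_CUES = re.compile(r"\b(prove|derive|step by step|debug|compare|plan|why)\b")


@dataclass
class Route:
    path: str     # "fast" or "thinking"
    score: float  # the toy complexity score behind the decision


def route_query(prompt: str, threshold: float = 1.0) -> Route:
    """Pick a fast or thinking path for a prompt using a toy complexity score."""
    text = prompt.lower()
    # One point per reasoning cue found in the prompt.
    score = float(len(REASONING_CUES.findall(text)))
    # Up to one extra point for long prompts (one point per 2,000 characters, capped).
    score += min(len(prompt) / 2000, 1.0)
    # Half a point when the prompt asks several questions at once.
    if text.count("?") > 1:
        score += 0.5
    path = "thinking" if score >= threshold else "fast"
    return Route(path=path, score=score)


print(route_query("What is the capital of France?").path)  # fast
print(route_query("Prove step by step that the sum of two even numbers is even.").path)  # thinking
```

Word-boundary matching is used so that, for example, “explanation” does not count as the cue “plan”.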

| Feature | GPT-5 | GPT-4o | GPT-5 Pro | o3 | Claude 3.5 Sonnet |
|---|---|---|---|---|---|
| Context Window | 400K tokens | ~128K tokens | 400K tokens | 200K tokens | 200K tokens |
| Hallucination Rate (vs. GPT-4o) | 45% reduction | Baseline | 50%+ reduction | 40% reduction | 35% reduction |
| Token Efficiency | 50-80% fewer tokens | Baseline | 60-80% fewer | 45% fewer | 40% fewer |
| Multimodal Support | Text/Vision/Video | Text/Vision/Voice | Enhanced multimodal | Text/Vision | Text/Vision |
| Reasoning Capability | Unified adaptive | Baseline | Deep reasoning | Advanced reasoning | Strong reasoning |
| Real-time Routing | Yes (automatic) | No | Yes (enhanced) | No | No |
| Input Cost (per 1M tokens) | $1.25 | $2.50 | $3.00+ | $2.00 | $3.00 |
| Output Cost (per 1M tokens) | $10.00 | $10.00 | $15.00+ | $8.00 | $15.00 |
| Release Date | Aug 7, 2025 | May 2024 | Aug 7, 2025 | Apr 2025 | June 2024 |
| Best Use Case | Complex workflows | General purpose | Enterprise reasoning | Scientific problems | Long-form analysis |

GPT-5’s multimodal architecture represents a significant leap in how AI systems integrate different data types. The model excels across visual reasoning, spatial understanding, and scientific reasoning benchmarks, demonstrating superior performance compared to previous generations. Unlike earlier systems that treated text, image, and video processing as separate tasks, GPT-5 moves between modalities without requiring explicit mode switching or separate API calls. The vision capabilities are particularly noteworthy: GPT-5 can generate complex front-end UI code from minimal prompting, analyze intricate diagrams and technical drawings, and perform sophisticated image-based reasoning tasks. In one independent evaluation covering 80+ real-world vision tasks, GPT-5 ranked #1 and outperformed specialized vision models in many scenarios. The video understanding capabilities enable GPT-5 to analyze temporal sequences, understand narrative flow, and extract information from video content with contextual awareness. This multimodal integration is particularly valuable for enterprise applications where documents contain mixed content types, for instance analyzing financial reports with embedded charts, reviewing technical documentation with diagrams, or processing medical records with imaging data. The improved multilingual support extends these capabilities across languages, with GPT-5 handling dozens of major languages fluently while maintaining reasoning quality across linguistic boundaries. For brand monitoring applications, these multimodal capabilities mean that AmICited can track brand mentions not just in text-based AI responses but also in image descriptions, video transcripts, and cross-modal reasoning outputs.
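To make “no separate API calls” concrete, here is a hedged sketch of one request that carries both a text question and an image, using the Responses API shape of OpenAI’s official Python SDK (`client.responses.create` with `input_text` and `input_image` content parts). The model name `gpt-5`, the helper names, and the example URL are placeholders chosen for this sketch; field names should be checked against the current API reference before use. Only `ask_about_image` needs the `openai` package and an `OPENAI_API_KEY`; the payload helpers run on their own.

```python
import base64


def image_bytes_to_data_url(data: bytes, mime: str = "image/png") -> str:
    """Encode raw image bytes as a base64 data URL so the image can be sent inline."""
    return f"data:{mime};base64,{base64.b64encode(data).decode('ascii')}"


def build_multimodal_input(question: str, image_url: str) -> list[dict]:
    """One user turn mixing text and an image, shaped like the Responses API `input`."""
    return [
        {
            "role": "user",
            "content": [
                {"type": "input_text", "text": question},
                {"type": "input_image", "image_url": image_url},
            ],
        }
    ]


def ask_about_image(question: str, image_url: str, model: str = "gpt-5") -> str:
    """Send the mixed request; needs the `openai` package and an OPENAI_API_KEY."""
    from openai import OpenAI  # imported lazily so the helpers above work without the SDK

    client = OpenAI()
    response = client.responses.create(
        model=model, input=build_multimodal_input(question, image_url)
    )
    return response.output_text
```

For a monitoring use case, a call such as `ask_about_image("Which brand logos appear in this screenshot?", "https://example.com/screenshot.png")` would return the model’s text answer; local screenshots can be passed through `image_bytes_to_data_url` instead of a public URL.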

GPT-5’s reasoning architecture fundamentally transforms how the model approaches complex problems by implementing native chain-of-thought processing that breaks multi-step tasks into intermediate reasoning steps. When processing a complex query, GPT-5 doesn’t attempt to jump directly to the answer; instead, it first works through intermediate reasoning steps, which OpenAI exposes to users only in summarized form rather than as a full raw trace. This approach, inspired by the o1 and o3 models, dramatically improves accuracy on tasks requiring mathematical reasoning, logical deduction, and multi-stage problem-solving. The real-time routing system determines when to activate this deep reasoning mode: simple factual queries bypass the reasoning pipeline for speed, while complex queries automatically trigger the thinking model. Reported figures suggest that this adaptive approach reduces latency by approximately 60% for straightforward queries while maintaining reasoning quality for complex tasks. The chain-of-thought capability is particularly valuable for professional applications: lawyers can use GPT-5 to analyze complex legal documents with explicit reasoning about precedent and interpretation, engineers can leverage it for debugging large codebases with step-by-step logic, and researchers can employ it for literature synthesis with transparent reasoning about connections between papers. The model’s ability to sustain reasoning across long contexts means it can maintain logical consistency across its 400,000-token context window, something previous models struggled with. For instance, GPT-5 can analyze an entire research paper, maintain awareness of all cited sources, and generate conclusions that logically follow from the evidence presented, a task where earlier models frequently contradicted themselves or lost track of earlier information.
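How much routing saves overall depends on the traffic mix, not only on how fast the fast path is. The arithmetic below makes that explicit: average latency is a weighted mix of the two paths, and the saving is measured against sending everything through the thinking path. The latencies and the 70% share of simple queries are made-up numbers for illustration, not measurements of GPT-5.

```python
def expected_latency(simple_share: float, fast_s: float, thinking_s: float) -> float:
    """Mean latency when simple queries take the fast path and the rest take the thinking path."""
    if not 0.0 <= simple_share <= 1.0:
        raise ValueError("simple_share must be between 0 and 1")
    return simple_share * fast_s + (1.0 - simple_share) * thinking_s


def latency_saving(simple_share: float, fast_s: float, thinking_s: float) -> float:
    """Fractional saving versus sending every query through the thinking path."""
    return 1.0 - expected_latency(simple_share, fast_s, thinking_s) / thinking_s


# Hypothetical numbers: 70% simple queries, 2 s on the fast path, 10 s when thinking.
print(round(expected_latency(0.7, 2.0, 10.0), 2))  # 4.4
print(round(latency_saving(0.7, 2.0, 10.0), 2))    # 0.56
```

With these assumed numbers the average saving is 56%; shifting the mix toward complex queries shrinks it, so any headline latency figure should be read against the query mix it was measured on.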

GPT-5’s 45% reduction in hallucinations represents one of its most significant practical improvements, achieved through multiple complementary techniques. The model’s expanded context window enables better information retention, reducing the likelihood of contradictions or fabricated details. The improved training methodology incorporating reinforcement learning from human feedback (RLHF) and supervised fine-tuning (SFT) on high-quality datasets has refined the model’s ability to distinguish between confident and uncertain predictions. Most importantly, the native chain-of-thought reasoning allows GPT-5 to catch logical inconsistencies before generating final outputs—if intermediate reasoning steps contradict each other, the model can recognize and correct this before producing the response. Independent research from the NIH documented marked reductions in hallucination rates across medical reasoning tasks, with GPT-5 demonstrating significantly higher factual accuracy than GPT-4o on domain-specific queries. The token efficiency improvements (50-80% fewer tokens for equivalent outputs) contribute to accuracy by reducing the model’s tendency to pad responses with filler content. For brand monitoring and citation tracking, these accuracy improvements are transformative: when GPT-5 cites a brand or source, there’s substantially higher confidence that the citation is accurate and contextually appropriate. Research from Profound reveals that citation drift (changes in source selection across AI platforms) can vary by up to 60%, making GPT-5’s improved consistency particularly valuable for organizations tracking their brand visibility in AI responses. The model’s ability to maintain factual accuracy across long documents means that AmICited’s monitoring of brand mentions in AI-generated content becomes more reliable and actionable.
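The idea of catching inconsistent intermediate steps before a final answer can be illustrated outside the model. The sketch below is not how GPT-5 works internally, which OpenAI has not published; it is an external checker of the kind an application might run over a model’s worked arithmetic. The `Step` structure and `check_trace` function are hypothetical: each step names its result, its operation, its operands (numbers or earlier step names), and the value the trace claims, and the checker recomputes each step locally.

```python
import operator
from dataclasses import dataclass

OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul, "/": operator.truediv}


@dataclass
class Step:
    name: str       # label for this intermediate result, e.g. "subtotal"
    op: str         # one of + - * /
    args: tuple     # two operands: numbers, or names of earlier steps
    claimed: float  # value the reasoning trace asserts for this step


def check_trace(steps: list[Step], tol: float = 1e-9) -> list[str]:
    """Recompute each step and return the names of steps whose claimed value is wrong."""
    values: dict[str, float] = {}
    wrong = []
    for step in steps:
        left, right = (values[a] if isinstance(a, str) else a for a in step.args)
        actual = OPS[step.op](left, right)
        if abs(actual - step.claimed) > tol:
            wrong.append(step.name)
        # Later steps are checked against the trace's own earlier claims, so an
        # early slip is reported once rather than at every step that reuses it.
        values[step.name] = step.claimed
    return wrong


trace = [
    Step("subtotal", "*", (3, 40), 120),        # 3 seats at $40
    Step("total", "+", ("subtotal", 15), 145),  # plus a $15 fee; claimed total is wrong
]
print(check_trace(trace))  # ['total']
```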

GPT-5’s agentic capabilities represent a fundamental shift from passive text generation to active task execution. The model can now function as an autonomous agent capable of planning multi-step workflows, calling external APIs, making decisions based on real-time information, and executing complex business processes. This is enabled by native tool-calling functionality that allows GPT-5 to interact directly with external systems—CRMs, databases, productivity suites, and custom APIs—without requiring intermediate processing layers. The agentic reasoning in GPT-5 goes beyond simple function calling: the model can understand task context, break complex objectives into subtasks, handle errors and edge cases, and adapt its approach based on intermediate results. For example, a GPT-5 agent could autonomously manage a customer support workflow: receiving a ticket, analyzing the issue, retrieving relevant documentation, drafting a response, and escalating to human support if needed—all while maintaining context and reasoning about the best approach at each step. The real-time routing system is particularly important for agentic applications: routine tasks execute quickly through the fast model, while complex decisions automatically route to the thinking model. This architecture enables cost-effective automation where organizations only pay for deep reasoning when genuinely needed. According to OpenAI’s benchmarks, GPT-5 shows significant gains in instruction following and agentic tool use, the capabilities that enable it to function reliably as an autonomous agent. For enterprise applications, this means GPT-5 can power sophisticated AI agents that handle customer service, content moderation, data analysis, and workflow automation with minimal human intervention.
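The customer-support example follows a common agent pattern: the model picks an action, the runtime executes the named tool, and the result is fed back until the model produces a final answer. The sketch below shows only that control loop. The tools (`search_docs`, `escalate`), the ticket ID `T-1`, and `scripted_model` are hypothetical stand-ins; in a real deployment the model decision would come from GPT-5’s tool-calling API, which this sketch does not reproduce.

```python
from typing import Callable


# Hypothetical tools for the support-ticket example. A real deployment would
# query a documentation index and a ticketing system instead.
def search_docs(query: str) -> str:
    knowledge_base = {"password": "Reset it under Settings > Security > Reset password."}
    for keyword, answer in knowledge_base.items():
        if keyword in query.lower():
            return answer
    return ""  # nothing relevant found


def escalate(ticket_id: str, reason: str) -> str:
    return f"Ticket {ticket_id} escalated to a human: {reason}"


TOOLS: dict[str, Callable[..., str]] = {"search_docs": search_docs, "escalate": escalate}


def scripted_model(history: list[dict]) -> dict:
    """Stand-in for the LLM: chooses the next action from the conversation so far."""
    last = history[-1]
    if last["role"] == "user":
        return {"tool": "search_docs", "arguments": {"query": last["content"]}}
    if last["name"] == "search_docs" and last["content"]:
        return {"final": "Suggested reply: " + last["content"]}
    if last["name"] == "search_docs":
        return {"tool": "escalate",
                "arguments": {"ticket_id": "T-1", "reason": "no matching documentation"}}
    return {"final": last["content"]}  # report the escalation


def run_agent(ticket: str, model=scripted_model, max_steps: int = 5):
    """Loop: ask the model for an action, run the named tool, feed the result back."""
    history = [{"role": "user", "content": ticket}]
    for _ in range(max_steps):
        action = model(history)
        if "final" in action:
            return action["final"], history
        result = TOOLS[action["tool"]](**action["arguments"])
        history.append({"role": "tool", "name": action["tool"], "content": result})
    raise RuntimeError("agent did not finish within max_steps")


print(run_agent("I forgot my password")[0])
print(run_agent("My invoice is wrong")[0])
```

The `max_steps` cap is a deliberate design choice: an agent loop should always have a hard stop so a confused model cannot call tools indefinitely.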

GPT-5 pricing is structured to accommodate different use cases and budget constraints through its variant-based approach. The gpt-5 variant costs $1.25 per million input tokens and $10.00 per million output tokens, a 50% reduction in input costs compared to GPT-4o ($2.50) with equivalent output pricing. The gpt-5-mini variant offers substantial savings at $0.25 and $2.00 respectively, making it accessible for high-volume applications where reasoning depth isn’t critical. The gpt-5-nano variant, at $0.05 and $0.40, targets ultra-low-latency embedded applications. For users requiring maximum reasoning capability, GPT-5 Pro offers extended context windows and priority access at premium pricing. Availability spans multiple channels: ChatGPT users (free and paid tiers) have automatic access to GPT-5 as the default model, with GPT-5 Pro available to ChatGPT Pro subscribers. API users can access all variants through the OpenAI Platform or the OpenAI Python SDK, enabling integration into custom applications. The GitHub Models Playground provides a free testing environment for developers exploring GPT-5 capabilities. Deployment flexibility is a key advantage: organizations can use GPT-5 through ChatGPT’s web interface for interactive use, integrate it via API for production applications, or deploy it through platforms like Botpress for building AI agents without coding. The prompt caching feature offers a 90% discount on cached input tokens, enabling significant cost savings for applications that repeatedly process the same documents or knowledge bases. For brand monitoring applications, this pricing structure means organizations can cost-effectively track their brand mentions across multiple AI platforms using GPT-5’s improved accuracy without prohibitive expenses.
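The 90% caching discount matters most when the same document is re-sent on every request. The estimator below applies the gpt-5 list prices quoted above to a hypothetical monitoring job. It assumes the discount applies to the whole cached portion of the prompt and ignores any cache-eligibility rules (such as minimum prompt length), which should be checked in OpenAI’s pricing documentation. `Price`, `GPT5`, and `estimate_cost` are names made up for this sketch; other variants can be added with their own rates.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Price:
    input_per_m: float             # USD per 1M uncached input tokens
    output_per_m: float            # USD per 1M output tokens
    cached_discount: float = 0.90  # cached input billed at 10% of the input rate


GPT5 = Price(input_per_m=1.25, output_per_m=10.00)  # gpt-5 list prices quoted above


def estimate_cost(price: Price, input_tokens: int, output_tokens: int,
                  cached_tokens: int = 0) -> float:
    """Estimated USD cost of one request; cached_tokens is the cached share of input_tokens."""
    if not 0 <= cached_tokens <= input_tokens:
        raise ValueError("cached_tokens must be between 0 and input_tokens")
    uncached = input_tokens - cached_tokens
    effective_input = uncached + cached_tokens * (1 - price.cached_discount)
    return (effective_input * price.input_per_m + output_tokens * price.output_per_m) / 1_000_000


# Hypothetical monitoring job: 1,000 requests, each re-sending a 40K-token
# knowledge base plus 10K new tokens, and generating 2K output tokens.
with_cache = 1_000 * estimate_cost(GPT5, 50_000, 2_000, cached_tokens=40_000)
without_cache = 1_000 * estimate_cost(GPT5, 50_000, 2_000)
print(f"${with_cache:.2f} with caching vs ${without_cache:.2f} without")
```

In this made-up workload, caching cuts the bill from $82.50 to $37.50; note that output tokens ($20.00 of each total) are unaffected by caching.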

GPT-5’s release has profound implications for AI monitoring platforms like AmICited that track brand and domain appearances in AI-generated responses. The model’s 45% reduction in hallucinations means that brand citations in GPT-5 responses are substantially more reliable and accurate than in previous models. The expanded 400K token context window enables GPT-5 to maintain consistency across longer documents, reducing the citation drift phenomenon where AI models cite different sources when processing the same information in different contexts. Research indicates that citation patterns can shift by up to 60% across different AI platforms, but GPT-5’s improved consistency should reduce this variability. The real-time routing system has monitoring implications: simple brand mentions route through the fast model, while complex reasoning about brands or products routes through the thinking model, potentially affecting how brands are discussed in different contexts. The multimodal capabilities expand monitoring scope beyond text: brands mentioned in image descriptions, video transcripts, and cross-modal reasoning now require tracking. For organizations using AmICited to monitor their brand visibility, GPT-5 represents both an opportunity and a challenge: the opportunity is that GPT-5’s improved accuracy means more reliable brand mention data, but the challenge is that GPT-5’s different architecture may change citation patterns compared to GPT-4o. The agentic capabilities introduce new monitoring dimensions: as GPT-5 agents autonomously execute tasks, they may cite brands or domains in their reasoning processes, creating new touchpoints for brand visibility tracking. The model’s native tool-calling means GPT-5 agents might directly access brand websites or APIs, creating new opportunities for tracking how AI systems interact with brand digital properties.
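The text does not define how citation drift is measured. One simple, hypothetical way to quantify it is to run the same prompt twice (or on two platforms), reduce the cited URLs to domains, and report 1 minus the Jaccard similarity of the two domain sets. This is an illustrative metric chosen for this sketch, not Profound’s or AmICited’s methodology, and the URLs below are placeholders.

```python
from urllib.parse import urlparse


def cited_domains(urls: list[str]) -> set[str]:
    """Reduce full cited URLs (with scheme) to lower-case hostnames without 'www.'."""
    domains = set()
    for url in urls:
        host = urlparse(url).hostname or ""  # hostname is None for scheme-less strings
        if host.startswith("www."):
            host = host[len("www."):]
        if host:
            domains.add(host)
    return domains


def citation_drift(run_a: list[str], run_b: list[str]) -> float:
    """1 - Jaccard similarity of cited domains: 0.0 = same sources, 1.0 = no overlap."""
    a, b = cited_domains(run_a), cited_domains(run_b)
    if not a and not b:
        return 0.0
    return 1.0 - len(a & b) / len(a | b)


monday = ["https://www.example.com/pricing", "https://docs.python.org/3/"]
tuesday = ["https://example.com/blog", "https://en.wikipedia.org/wiki/GPT-4"]
print(round(citation_drift(monday, tuesday), 3))  # 0.667
```

Comparing at the domain level is a design choice: it treats a brand cited via a different page on the same site as stable visibility, which is usually what brand tracking cares about.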

GPT-5 represents a waypoint rather than a destination in the evolution of large language models, with clear trajectories for future development already visible. OpenAI has indicated that GPT-5.2 (released in late 2025) brings significant improvements in general intelligence, long-context understanding, agentic tool-calling, and vision capabilities, suggesting that the core architecture will continue evolving. The reasoning-first design philosophy pioneered by o1 and o3 models will likely become increasingly central to future LLM development, with more models adopting explicit chain-of-thought processing and adaptive routing. Industry trends suggest that model specialization will increase: while GPT-5 is a generalist model, future development may see specialized variants optimized for specific domains (legal, medical, scientific) or specific modalities (vision-focused, audio-focused). The efficiency improvements in GPT-5 (50-80% fewer tokens) will likely accelerate, driven by competition and environmental concerns about AI’s computational footprint. Multimodal integration will deepen, with future models potentially incorporating audio, structured data, and real-time information streams alongside text, images, and video. For brand monitoring and AI citation tracking, the strategic implication is that organizations must continuously adapt their monitoring strategies as AI models evolve. The citation drift phenomenon may change as models improve, potentially creating more stable brand mention patterns or introducing new variability as models gain new capabilities. The agentic capabilities will likely expand, creating new channels through which brands are mentioned or referenced in AI systems. Organizations should view GPT-5 not as a stable target for monitoring but as a dynamic system that will continue evolving, requiring adaptive monitoring strategies that can accommodate architectural changes and capability improvements. 
The competitive landscape will intensify as other organizations (Anthropic, Google, Meta) release competing models with similar or superior capabilities, potentially fragmenting the AI response landscape and making comprehensive brand monitoring increasingly important.

Unified Architecture: GPT-5 combines reasoning and non-reasoning capabilities in a single model with real-time adaptive routing, eliminating the need to manually switch between specialized models for different task types.

Context Window Advantage: The 400K token context window enables processing of entire books, lengthy transcripts, and large codebases without losing contextual coherence or consistency.

Hallucination Reduction: 45% fewer hallucinations compared to GPT-4o, achieved through improved training, chain-of-thought reasoning, and better contextual understanding across long documents.

Token Efficiency: 50-80% fewer output tokens than o3 for comparable results, reducing both latency and API costs while maintaining or improving response quality.

Multimodal Integration: Seamless processing of text, images, and video without separate models, with superior performance on visual reasoning and spatial understanding tasks.

Agentic Capabilities: Native tool-calling and autonomous task execution enable GPT-5 to function as an independent agent for workflow automation and complex business processes.

Real-time Routing: Automatic decision-making between fast processing for simple queries and deep reasoning for complex tasks, optimizing both speed and accuracy.

Variant Flexibility: Four model variants (gpt-5, gpt-5-mini, gpt-5-nano, gpt-5-chat) enable cost-effective deployment across different use cases and performance requirements.

Brand Monitoring Reliability: Improved accuracy and consistency make GPT-5 responses more reliable for tracking brand citations and monitoring brand visibility in AI-generated content.

Deployment Options: Available through ChatGPT, OpenAI API, Python SDK, and no-code platforms like Botpress, enabling integration across consumer and enterprise applications.

GPT-5 stands as a watershed moment in AI development, representing not merely an incremental improvement but a fundamental architectural shift in how large language models approach reasoning, multimodal processing, and task execution. The model’s unified architecture, 45% reduction in hallucinations, 400K token context window, and native agentic capabilities collectively address the primary limitations of previous generations. For organizations tracking brand visibility and citations in AI-generated responses, GPT-5’s improved accuracy and consistency make it an essential component of comprehensive AI monitoring strategies. As the AI landscape continues evolving with competing models and new capabilities, understanding GPT-5’s architecture, capabilities, and implications becomes increasingly critical for businesses seeking to maintain visibility and control over their brand presence in AI systems.

GPT-5 introduces a unified architecture that combines reasoning and non-reasoning capabilities in a single system, whereas GPT-4o users had to switch to separate o-series models for deeper reasoning. GPT-5 features a 400K token context window (compared to GPT-4o's ~128K), uses 50-80% fewer output tokens than o3 on comparable tasks, and demonstrates approximately 45% fewer hallucinations than GPT-4o. The real-time routing system in GPT-5 automatically selects between fast and deep-thinking modes based on query complexity, eliminating manual model switching.
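The 128K versus 400K difference is easiest to see as a budget check: does a document, plus room for the answer, fit? The sketch below uses a rough 4-characters-per-token heuristic; exact counts need the model’s tokenizer (OpenAI’s `tiktoken` library provides this for its models). It also assumes, per OpenAI’s launch notes for the API, that gpt-5’s 400K total splits into at most 272K input tokens plus output; treat those limits and the 8K output reservation as assumptions to verify against current model documentation.

```python
import math

# (max input tokens, total context tokens). The gpt-5 split reflects OpenAI's
# launch notes for the API; verify against the current model documentation.
LIMITS = {
    "gpt-4o": (128_000, 128_000),
    "gpt-5": (272_000, 400_000),
}


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough count; ~4 characters per token is a common rule of thumb for English."""
    return math.ceil(len(text) / chars_per_token)


def fits(model: str, prompt_tokens: int, reserved_output: int = 8_000) -> bool:
    """True if the prompt plus the output budget fits the model's limits."""
    max_input, total = LIMITS[model]
    return prompt_tokens <= max_input and prompt_tokens + reserved_output <= total


book = "x" * 1_000_000          # stand-in for a ~1M-character manuscript
tokens = estimate_tokens(book)  # 250,000 under the 4-chars-per-token assumption
print(tokens, fits("gpt-4o", tokens), fits("gpt-5", tokens))  # 250000 False True
```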

GPT-5 achieves a 45% reduction in hallucinations through improved chain-of-thought reasoning, better contextual understanding, and enhanced training with reinforcement learning from human feedback (RLHF). The model's unified architecture allows it to break complex problems into smaller reasoning steps before generating final outputs, and its expanded context window enables better retention of earlier information without contradictions. Additionally, GPT-5 integrates reasoning-first design principles from models like o1 and o3, which prioritize multi-step logical processes over direct prediction.

GPT-5 comes in four variants: gpt-5 (best for deep reasoning with 400K context), gpt-5-mini (faster, lower-cost option), gpt-5-nano (ultra-fast for real-time applications), and gpt-5-chat (optimized for conversational use). Choose gpt-5 for complex multi-step workflows and research tasks, gpt-5-mini for balanced performance and cost, gpt-5-nano for embedded systems or latency-sensitive applications, and gpt-5-chat for interactive dialogue. All variants share the same unified architecture but are tuned for different performance-cost trade-offs.
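The variant guidance above can be written as a small decision function. The priority order (reasoning needs first, then conversation, then latency, with gpt-5-mini as the default) is an assumption of this sketch rather than an OpenAI recommendation, and `Workload` and `pick_variant` are hypothetical names. The chat variant is published in OpenAI’s API as `gpt-5-chat-latest`, so the returned names may need mapping to exact model IDs.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    multi_step_reasoning: bool = False  # research, complex workflows
    conversational: bool = False        # interactive dialogue
    latency_sensitive: bool = False     # embedded or real-time use


def pick_variant(w: Workload) -> str:
    """Apply the variant guidance, checking the most demanding need first."""
    if w.multi_step_reasoning:
        return "gpt-5"
    if w.conversational:
        return "gpt-5-chat"  # API model ID is "gpt-5-chat-latest"
    if w.latency_sensitive:
        return "gpt-5-nano"
    return "gpt-5-mini"  # balanced default for cost and capability


print(pick_variant(Workload(latency_sensitive=True)))  # gpt-5-nano
```

In practice this is a starting point; measured quality and cost on your own prompts should decide the final choice.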

GPT-5 features a unified multimodal architecture that seamlessly processes text, images, and video inputs without requiring separate models or mode switching. The model excels across visual reasoning, spatial understanding, and scientific reasoning benchmarks. Its improved vision capabilities allow it to handle complex front-end UI generation with minimal prompting and perform sophisticated image analysis. The multimodal integration is particularly valuable for tasks requiring cross-modal reasoning, such as analyzing documents with embedded images or generating code based on visual mockups.

GPT-5's real-time routing system is an adaptive mechanism that automatically decides whether to answer queries instantly using a fast, high-throughput model or route them to a 'thinking' model for complex reasoning. This eliminates the need for users to manually select between different models based on task complexity. The router analyzes incoming queries and determines the optimal processing path, reducing API costs while maintaining reasoning quality for complex tasks. This architecture represents a significant shift from previous approaches where users had to choose between speed and reasoning depth.

GPT-5's improved accuracy and reduced hallucinations make it more reliable for brand monitoring and citation tracking across AI platforms. With 45% fewer hallucinations and better contextual understanding, GPT-5 provides more accurate brand mentions and source citations in AI-generated responses. The expanded 400K token context window allows GPT-5 to maintain consistency across longer documents and conversations, reducing citation drift. For platforms like AmICited that track brand appearances in AI responses, GPT-5's enhanced reasoning and accuracy mean more reliable data for monitoring how brands are cited across ChatGPT, Perplexity, Google AI Overviews, and Claude.

GPT-5 pricing varies by variant: gpt-5 costs $1.25 per million input tokens and $10.00 per million output tokens, gpt-5-mini costs $0.25 and $2.00 respectively, and gpt-5-nano costs $0.05 and $0.40. For comparison, GPT-4o costs $2.50 and $10.00, while o3 costs $2.00 and $8.00 following OpenAI's June 2025 price cut. GPT-5 Pro offers extended context windows and priority access at higher rates. The pricing structure allows developers to optimize costs by selecting the appropriate variant for their specific use case, with gpt-5-mini offering the best balance of capability and affordability for most applications.
