How Do I Correct Misinformation in AI Responses?

Learn effective methods to identify, verify, and correct inaccurate information in AI-generated answers from ChatGPT, Perplexity, and other AI systems.

Inference is the process by which a trained AI model generates outputs, predictions, or conclusions from new input data by applying patterns and knowledge learned during training. It represents the operational phase where AI systems apply their learned intelligence to real-world problems in production environments.


Inference is the process by which a trained artificial intelligence model generates outputs, predictions, or conclusions from new input data by applying patterns and knowledge learned during the training phase. In the context of AI systems, inference represents the operational phase where machine learning models transition from the laboratory into production environments to solve real-world problems. When you interact with ChatGPT, Perplexity, Google AI Overviews, or Claude, you are experiencing AI inference in action—the model is taking your input and generating intelligent responses based on patterns it learned from massive training datasets. Inference is fundamentally different from training; while training teaches the model what to do, inference is where the model actually does it, applying its learned knowledge to data it has never encountered before.

The distinction between AI training and AI inference is critical to understanding how modern artificial intelligence systems operate. During the training phase, data scientists feed enormous curated datasets into neural networks, allowing the model to learn patterns, relationships, and decision-making rules through iterative optimization. This process is computationally intensive, often requiring weeks or months of processing on specialized hardware like GPUs and TPUs. Once training is complete and the model has converged on its final weights and parameters, the model enters the inference phase. At this point, the model is frozen (it no longer learns from new data) and instead applies its learned patterns to generate predictions or outputs on previously unseen inputs. According to research from IBM and Oracle, inference is where the true business value of AI is realized, as it enables organizations to deploy AI capabilities at scale across production systems. The AI inference market was valued at USD 106.15 billion in 2025 and is projected to grow to USD 254.98 billion by 2030, reflecting the explosive demand for inference capabilities across industries.
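To make the split concrete, here is a minimal Python sketch, assuming a toy one-feature linear model rather than a neural network: `train` runs the iterative, compute-heavy optimization once, and `infer` only reads the resulting parameters. The function names and hyperparameters (`lr`, `epochs`) are illustrative choices, not part of any framework.

```python
from typing import List, Tuple

Params = Tuple[float, float]  # (weight, bias) of the toy model y = w * x + b

def train(xs: List[float], ys: List[float], lr: float = 0.01, epochs: int = 2000) -> Params:
    """Training phase: learn w and b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)

def infer(params: Params, x: float) -> float:
    """Inference phase: the parameters are frozen; nothing is updated here."""
    w, b = params
    return w * x + b

# Toy training data drawn from y = 3x + 1
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [3 * x + 1 for x in xs]

params = train(xs, ys)                 # expensive, done up front
print(round(infer(params, 10.0), 2))   # x = 10 never appeared in training
```

Running it should print a value very close to 31.0. Each later call to `infer` costs one multiply and one add, which mirrors why a single inference request is cheap compared with training.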

AI inference operates through a multi-stage process that transforms raw input data into intelligent outputs. When a user submits a query to a large language model like ChatGPT, the inference pipeline begins with input encoding, where text is converted into numerical tokens that the neural network can process. The model then enters the prefill phase, where all input tokens are processed in parallel through every layer of the neural network, allowing the model to capture context and relationships within the user’s query. This phase is computationally heavy but necessary for comprehension. Following the prefill phase, the model enters the decode phase, where it generates output tokens sequentially, one at a time, with each new token depending on all previous tokens in the sequence. This sequential generation is what creates the characteristic streaming effect users see when interacting with AI chatbots. Finally, the output conversion stage transforms the predicted tokens back into human-readable text, images, or other formats. Each stage adds to the delay a user perceives, and real-time applications need the first tokens to appear almost immediately, which makes inference latency optimization a critical concern for AI service providers.
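The four stages can be traced in a deliberately tiny Python sketch. Assumptions: the "model" is a character-level bigram table built from a one-line corpus, not a neural network, and the prefill step simply stores the prompt tokens as the cached context that decoding extends. All names (`encode`, `prefill`, `decode`, `detokenize`, `generate`) are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterator, List

# Hypothetical toy "trained model": next-character counts learned from a tiny corpus
CORPUS = "the cat sat on the mat. the cat ate."
STOI: Dict[str, int] = {ch: i for i, ch in enumerate(sorted(set(CORPUS)))}
ITOS: Dict[int, str] = {i: ch for ch, i in STOI.items()}
BIGRAMS: Dict[int, Counter] = defaultdict(Counter)
for prev, nxt in zip(CORPUS, CORPUS[1:]):
    BIGRAMS[STOI[prev]][STOI[nxt]] += 1

def encode(text: str) -> List[int]:
    """Stage 1, input encoding: text -> token ids (raises KeyError for unknown characters)."""
    return [STOI[ch] for ch in text]

def prefill(tokens: List[int]) -> List[int]:
    """Stage 2, prefill: take in the whole prompt at once and build the cached context."""
    return list(tokens)

def decode(cache: List[int], max_new_tokens: int) -> Iterator[int]:
    """Stage 3, decode: emit one token at a time, each conditioned on what came before."""
    for _ in range(max_new_tokens):
        counts = BIGRAMS.get(cache[-1])
        if not counts:
            break  # the toy model has no continuation for this token
        token = counts.most_common(1)[0][0]  # greedy choice
        cache.append(token)
        yield token  # yielding per token is what makes streaming possible

def detokenize(tokens: List[int]) -> str:
    """Stage 4, output conversion: token ids -> text."""
    return "".join(ITOS[t] for t in tokens)

def generate(prompt: str, max_new_tokens: int = 10) -> str:
    cache = prefill(encode(prompt))
    return detokenize(list(decode(cache, max_new_tokens)))

print(generate("the c", max_new_tokens=2))  # -> "at"
```

A real LLM replaces the bigram lookup with a forward pass through the network, and its prefill cache holds attention keys and values rather than raw token ids, but the control flow is the same shape.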

Organizations deploying AI systems must choose between three primary inference architectures, each optimized for different use cases and performance requirements. Batch inference processes large volumes of data offline at scheduled intervals, making it ideal for scenarios where real-time responses are not required, such as generating daily analytics dashboards, processing weekly risk assessments, or running nightly recommendation updates. This approach is highly efficient and cost-effective because it can process thousands of predictions simultaneously, amortizing computational costs across many requests. Online inference, also called dynamic inference, generates predictions instantly upon request with minimal latency, making it essential for interactive applications like chatbots, search engines, and real-time fraud detection systems. Online inference requires sophisticated infrastructure to maintain low latency and high availability, often employing caching strategies and model optimization techniques to ensure responses arrive within milliseconds. Streaming inference continuously processes data flowing from sensors, IoT devices, or real-time data pipelines, making predictions on each data point as it arrives. This type powers applications like predictive maintenance systems that monitor industrial equipment, autonomous vehicles that process sensor data in real-time, and smart city systems that analyze traffic patterns continuously. Each inference type demands different architectural considerations, hardware requirements, and optimization strategies.
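The three patterns differ mainly in how inputs reach the model. The Python sketch below assumes a hypothetical `model` function that accepts a list of inputs, so batch inference can amortize one call over many records while online and streaming inference call it per request or per event; the scoring rule itself is a placeholder.

```python
from typing import Iterable, Iterator, List, Tuple

def model(xs: List[float]) -> List[float]:
    """Stand-in for a trained model: maps each input to a hypothetical risk score in [0, 1]."""
    return [min(1.0, max(0.0, x / 100.0)) for x in xs]

def batch_inference(records: List[float], batch_size: int = 4) -> List[float]:
    """Batch: score a stored dataset offline, one chunk (one model call) at a time."""
    scores: List[float] = []
    for i in range(0, len(records), batch_size):
        scores.extend(model(records[i:i + batch_size]))
    return scores

def online_inference(request: float) -> float:
    """Online: score a single request immediately, as a chatbot or fraud check would."""
    return model([request])[0]

def streaming_inference(events: Iterable[float], threshold: float = 0.8) -> Iterator[Tuple[float, float]]:
    """Streaming: score each event from a continuous feed and emit alerts as they happen."""
    for event in events:
        score = model([event])[0]
        if score >= threshold:
            yield event, score

print(batch_inference([10, 50, 90, 120, 30]))               # nightly job over stored records
print(online_inference(85))                                 # one user-facing request
for alert in streaming_inference(iter([10, 85, 40, 95])):   # e.g. sensor readings
    print("alert:", alert)
```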

| Aspect | Batch Inference | Online Inference | Streaming Inference |
|---|---|---|---|
| Latency Requirement | Seconds to minutes | Milliseconds | Real-time (sub-second) |
| Data Processing | Large datasets offline | Single requests on-demand | Continuous data flow |
| Use Cases | Analytics, reporting, recommendations | Chatbots, search, fraud detection | IoT monitoring, autonomous systems |
| Cost Efficiency | High (amortized across many predictions) | Medium (requires always-on infrastructure) | Medium to High (depends on data volume) |
| Scalability | Excellent (processes in bulk) | Good (requires load balancing) | Excellent (distributed processing) |
| Model Optimization Priority | Throughput | Latency and throughput balance | Latency and accuracy balance |
| Hardware Requirements | Standard GPUs/CPUs | High-performance GPUs/TPUs | Specialized edge hardware or distributed systems |

Inference optimization has become a critical discipline as organizations seek to deploy AI models more efficiently and cost-effectively. Quantization is one of the most impactful optimization techniques, reducing the numerical precision of model weights from standard 32-bit floating-point numbers to 8-bit or even 4-bit integers. Because an 8-bit weight takes a quarter of the storage of a 32-bit one and a 4-bit weight an eighth, this can decrease model size by roughly 75-90% while maintaining 95-99% of the original accuracy, resulting in faster inference and lower memory requirements. Model pruning removes non-critical neurons, connections, or entire layers from the neural network, eliminating redundant parameters that don’t significantly contribute to predictions. Research shows that pruning can reduce model complexity by 50-80% without substantial accuracy loss. Knowledge distillation trains a smaller, faster “student” model to mimic the behavior of a larger, more accurate “teacher” model, enabling deployment on resource-constrained devices while maintaining reasonable performance. Batch processing optimization groups multiple inference requests together to maximize GPU utilization and throughput. Key-value (KV) caching stores the attention keys and values already computed for earlier tokens, so that during the decode phase of language model inference each new token does not force those computations to be repeated for the whole sequence. According to NVIDIA research, combining multiple optimization techniques can achieve 10x performance improvements while reducing infrastructure costs by 60-70%. These optimizations are essential for deploying inference at scale, particularly for organizations running thousands of concurrent inference requests.
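Two of these techniques reduce to short arithmetic. The Python sketch below shows symmetric per-tensor int8 quantization (each weight stored as an integer in [-127, 127] plus one shared float scale) and unstructured magnitude pruning (zeroing the smallest-magnitude weights). Both are common textbook variants chosen for illustration; production toolkits use more elaborate schemes such as per-channel scales and calibration data, and the helper names are hypothetical.

```python
from typing import List, Tuple

def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric int8 quantization: each weight w is approximated by scale * q."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights for computation."""
    return [scale * v for v in q]

def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Zero out the `sparsity` fraction of weights with the smallest absolute values."""
    k = int(len(weights) * sparsity)
    smallest = sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k]
    pruned = list(weights)
    for i in smallest:
        pruned[i] = 0.0
    return pruned

weights = [0.82, -0.05, 0.31, -1.27, 0.004, 0.66]

q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(q, f"scale={scale:.4f}", f"max error={max_error:.4f}")
# Storage: 1 byte per int8 weight vs 4 bytes per float32 weight -> 75% smaller (ignoring the scale)
print(magnitude_prune(weights, sparsity=0.5))  # -> [0.82, 0.0, 0.0, -1.27, 0.0, 0.66]
```

Rounding to the nearest integer bounds the per-weight error at half the scale, which is why accuracy usually degrades only slightly; pruning, by contrast, saves compute only when the runtime can exploit the zeros.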

Hardware acceleration is fundamental to achieving the latency and throughput requirements of modern AI inference workloads. Graphics Processing Units (GPUs) remain the most widely deployed inference accelerators due to their parallel processing architecture, which is naturally suited to the matrix operations that dominate neural network computations. NVIDIA GPUs power the majority of large language model inference deployments globally, with their specialized CUDA cores enabling massive parallelism. Tensor Processing Units (TPUs), developed by Google, are custom-designed ASICs optimized specifically for neural network operations, offering superior performance-per-watt compared to general-purpose GPUs for certain workloads. Field-Programmable Gate Arrays (FPGAs) provide customizable hardware that can be reprogrammed for specific inference tasks, offering flexibility for specialized applications. Application-Specific Integrated Circuits (ASICs) like Google’s TPU or Cerebras’ WSE-3 are engineered for particular inference workloads, delivering exceptional performance but with limited flexibility. The choice of hardware depends on multiple factors: the model architecture, required latency, throughput demands, power constraints, and total cost of ownership. For edge inference on mobile devices or IoT sensors, specialized edge accelerators and neural processing units (NPUs) enable efficient inference with minimal power consumption. The global shift toward AI factories—highly optimized infrastructure designed to manufacture intelligence at scale—has driven massive investments in inference hardware, with enterprises deploying thousands of GPUs and TPUs in data centers to meet surging demand for AI services.

Generative AI systems like ChatGPT, Claude, and Perplexity rely entirely on inference to generate human-like text, code, images, and other content. When you submit a prompt to these systems, the inference process begins by tokenizing your input into numerical representations that the neural network can process. The model then executes the prefill phase, processing all your input tokens in parallel to build a representation of your request, including context, intent, and nuance. Following this, the model enters the decode phase, where it generates output tokens sequentially, predicting each next token based on all previous tokens and the patterns learned from its training data. This token-by-token generation is why you see streaming text appearing in real time when using these services. The inference process must balance multiple competing objectives: generating accurate, coherent, and contextually appropriate responses while maintaining low latency to keep users engaged. Speculative decoding, an advanced inference optimization technique, lets a smaller draft model propose several future tokens that the larger model then verifies in a single forward pass, keeping the ones it agrees with; because the large model can confirm several tokens per pass instead of producing one, latency drops significantly. The scale of inference for large language models is staggering: OpenAI’s ChatGPT processes millions of inference requests daily, each generating hundreds or thousands of tokens, requiring massive computational infrastructure and sophisticated optimization strategies to remain economically viable.
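The accept/reject logic of speculative decoding can be sketched in Python under simplifying assumptions: greedy decoding, where a drafted token is kept only if it matches the large model's own greedy choice, and two hypothetical toy "models" that are plain functions from a token sequence to the next token. Real systems verify all drafted tokens in one batched forward pass and, when sampling, use a probabilistic acceptance rule instead of exact matching.

```python
from typing import Callable, List

NextToken = Callable[[List[int]], int]  # greedy next-token function of a "model"

def target_model(seq: List[int]) -> int:
    """Hypothetical large model: an arbitrary deterministic rule for illustration."""
    return (sum(seq) * 7 + len(seq)) % 10

def draft_model(seq: List[int]) -> int:
    """Hypothetical small model: agrees with the target except at every third position."""
    guess = target_model(seq)
    return (guess + 1) % 10 if len(seq) % 3 == 0 else guess

def greedy_decode(model: NextToken, prompt: List[int], n: int) -> List[int]:
    """Baseline: one large-model call per generated token."""
    seq = list(prompt)
    for _ in range(n):
        seq.append(model(seq))
    return seq[len(prompt):]

def speculative_decode(target: NextToken, draft: NextToken, prompt: List[int], n: int, k: int = 4) -> List[int]:
    seq = list(prompt)
    while len(seq) - len(prompt) < n:
        # 1. The cheap draft model proposes k tokens autoregressively.
        proposal: List[int] = []
        for _ in range(k):
            proposal.append(draft(seq + proposal))
        # 2. The target model checks them in order (a single parallel pass in real systems).
        accepted: List[int] = []
        for token in proposal:
            expected = target(seq + accepted)
            accepted.append(expected)  # the target's token is kept whether or not it matched
            if token != expected:
                break  # first mismatch: discard the rest of the draft
        else:
            accepted.append(target(seq + accepted))  # all drafts accepted: one bonus token
        seq.extend(accepted)
    return seq[len(prompt):len(prompt) + n]

prompt = [1, 2, 3]
print(speculative_decode(target_model, draft_model, prompt, 12))
print(greedy_decode(target_model, prompt, 12))  # identical output
```

Because every kept token equals the large model's greedy choice, the output matches plain greedy decoding exactly; the speedup comes from the large model confirming up to `k` tokens per verification pass instead of producing one.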

For organizations concerned with their brand presence and content citation in AI-generated responses, inference monitoring has become increasingly important. When AI systems like Perplexity, Google AI Overviews, or Claude generate responses, they perform inference on their trained models to produce outputs that may reference or cite your domain, brand, or content. Understanding how inference systems work helps organizations optimize their content strategy to ensure proper representation in AI-generated responses. AmICited specializes in monitoring where brands and domains appear in AI inference outputs across multiple platforms, providing visibility into how AI systems cite and reference your content. This monitoring is crucial because inference systems may generate responses that include or exclude your brand based on training data quality, relevance signals, and model optimization choices. Organizations can use inference monitoring data to understand which content is being cited, how frequently their brand appears in AI responses, and whether their domain is being properly attributed. This intelligence enables data-driven decisions about content optimization, SEO strategy, and brand positioning in the emerging AI-driven search landscape. As inference becomes the primary interface through which users discover information, tracking your presence in AI-generated outputs is as important as traditional search engine optimization.
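As a rough illustration of the kind of metric such monitoring produces, the Python sketch below computes, per platform, the share of collected AI responses that mention a given domain or brand name. It is a hypothetical example, not AmICited's method or API: the input format (a dict of platform name to response texts), the simple case-insensitive word-boundary match, and the sample data are all assumptions.

```python
import re
from typing import Dict, List

def mention_rate(responses: Dict[str, List[str]], domain: str, brand: str) -> Dict[str, float]:
    """Fraction of responses per platform that mention the domain or the brand name."""
    pattern = re.compile(rf"\b(?:{re.escape(domain)}|{re.escape(brand)})\b", re.IGNORECASE)
    rates: Dict[str, float] = {}
    for platform, texts in responses.items():
        hits = sum(1 for text in texts if pattern.search(text))
        rates[platform] = hits / len(texts) if texts else 0.0
    return rates

# Hypothetical responses collected for the same set of prompts
collected = {
    "platform_a": ["According to example.com, inference is ...", "No source given."],
    "platform_b": ["Example Co reports ...", "See EXAMPLE.COM", "Unrelated answer."],
}
print(mention_rate(collected, domain="example.com", brand="Example Co"))
```

String matching only detects mentions; judging whether an attribution is accurate or positive needs more than a regular expression.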

Deploying inference systems at scale presents numerous technical, operational, and strategic challenges that organizations must address. Latency management remains a persistent challenge, as users expect sub-second responses from interactive AI applications, yet complex models with billions of parameters require significant computation time. Throughput optimization is equally critical—organizations must serve thousands or millions of concurrent inference requests while maintaining acceptable latency and accuracy. Model drift occurs when inference performance degrades over time as real-world data distributions shift away from training data, requiring continuous monitoring and periodic model retraining. Interpretability and explainability become increasingly important as AI inference systems make decisions affecting users, requiring organizations to understand and explain how models arrive at specific predictions. Regulatory compliance presents growing challenges, with regulations like the EU AI Act imposing requirements for transparency, bias detection, and human oversight in AI inference systems. Data quality remains fundamental—inference systems can only be as good as the data they were trained on, and poor training data leads to biased, inaccurate, or harmful inference outputs. Infrastructure costs can be substantial, with large-scale inference deployments requiring significant investments in GPUs, TPUs, networking, and cooling infrastructure. Talent scarcity means that organizations struggle to find engineers and data scientists with expertise in inference optimization, model deployment, and MLOps, driving up hiring costs and slowing deployment timelines.
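Monitoring for drift usually means comparing the distribution of production inputs with the data the model was trained on. One widely used statistic for this is the Population Stability Index (PSI); the Python sketch below computes it for a single numeric feature, assuming equal-width bins derived from the training sample and a small floor (`eps`) to avoid taking the log of zero. The thresholds in the comment are a common rule of thumb, not a standard.

```python
import math
from typing import List, Sequence

def psi(expected: Sequence[float], actual: Sequence[float], bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index: sum over bins of (a - e) * ln(a / e)."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant training feature

    def bin_fractions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # out-of-range values go to the edge bins
        return [max(c / len(values), eps) for c in counts]

    e, a = bin_fractions(expected), bin_fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

training = [i / 100 for i in range(1000)]        # feature values seen during training
production_ok = [x + 0.05 for x in training]     # nearly the same distribution
production_shifted = [x + 5 for x in training]   # inputs have drifted
# Rule of thumb: < 0.1 stable, 0.1-0.25 worth watching, > 0.25 significant drift
print(round(psi(training, production_ok), 3), round(psi(training, production_shifted), 3))
```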

The future of AI inference is rapidly evolving in several transformative directions that will reshape how organizations deploy and utilize AI systems. Edge inference (running inference on local devices rather than in cloud data centers) is accelerating, driven by advances in model compression, specialized edge hardware, and privacy concerns. This shift will enable real-time AI capabilities on smartphones, IoT devices, and autonomous systems without relying on cloud connectivity. Multimodal inference, where models process and generate text, images, audio, and video simultaneously, is becoming increasingly common, requiring new optimization strategies and hardware considerations. Reasoning models that perform multi-step inference to solve complex problems are emerging, with systems like OpenAI’s o1 demonstrating that model performance can scale with the computation spent at inference time (longer reasoning and more generated tokens), not just with model size. Disaggregated serving architectures, in which separate hardware clusters handle the prefill and decode phases, are gaining adoption because the two phases have different computational patterns and benefit from different resource allocations. Speculative decoding and other advanced inference techniques are becoming standard practice, enabling 2-3x latency reductions. Inference at the edge combined with federated learning will enable organizations to deploy AI capabilities locally while maintaining privacy and reducing bandwidth requirements. The AI inference market is expected to grow at a 19.2% CAGR through 2030, driven by increasing enterprise adoption, new use cases, and the economic imperative to optimize inference costs. As inference becomes the dominant workload in AI infrastructure, optimization techniques, specialized hardware, and inference-specific software frameworks will become increasingly sophisticated and essential for competitive advantage.

AI training is the process of teaching a model to recognize patterns using large datasets, while AI inference is when that trained model applies what it learned to generate predictions or outputs on new data. Training is computationally intensive and happens up front (and again whenever the model is retrained), whereas inference is typically faster and less resource-intensive per request, and occurs continuously in production environments. Think of training as studying for an exam and inference as taking the exam itself.

Inference latency—the time it takes for a model to generate an output—is critical for user experience and real-time applications. Low-latency inference enables instant responses in chatbots, real-time translation, autonomous vehicles, and fraud detection systems. High latency can make applications unusable for time-sensitive tasks. Enterprises optimize latency through techniques like quantization, model pruning, and specialized hardware like GPUs and TPUs to meet service level agreements.
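Latency targets are usually stated as percentiles rather than averages, because a service level agreement is about the slow tail of requests. The Python sketch below times a callable with `time.perf_counter` and reports p50/p95/p99 using `statistics.quantiles`; the placeholder workload and the 50 ms budget are hypothetical stand-ins for a real model call and a real SLA.

```python
import statistics
import time
from typing import Callable, Dict

def measure_latency(infer: Callable[[], object], runs: int = 200, warmup: int = 10) -> Dict[str, float]:
    """Time repeated inference calls and summarize them as latency percentiles (ms)."""
    for _ in range(warmup):
        infer()  # warm caches so one-time setup cost does not skew the numbers
    samples_ms = []
    for _ in range(runs):
        start = time.perf_counter()
        infer()
        samples_ms.append((time.perf_counter() - start) * 1000.0)
    cuts = statistics.quantiles(samples_ms, n=100)  # 99 cut points: cuts[k - 1] is the k-th percentile
    return {"p50_ms": cuts[49], "p95_ms": cuts[94], "p99_ms": cuts[98]}

def placeholder_model() -> int:
    """Hypothetical stand-in for a model call: a small CPU-bound computation."""
    return sum(i * i for i in range(10_000))

stats = measure_latency(placeholder_model)
SLA_P95_MS = 50.0  # hypothetical latency budget
print(stats, "meets SLA" if stats["p95_ms"] <= SLA_P95_MS else "violates SLA")
```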

The three primary types are batch inference (processing large datasets offline), online inference (generating predictions instantly upon request), and streaming inference (continuously processing data from sensors or IoT devices). Batch inference suits scenarios like daily analytics dashboards, online inference powers chatbots and search engines, and streaming inference enables real-time monitoring systems. Each type has different latency requirements and use cases.

Quantization reduces the numerical precision of model weights from 32-bit to 8-bit or lower, significantly reducing model size and computational requirements while largely preserving accuracy. Pruning removes non-critical neurons or connections from the neural network and can reduce model complexity by 50-80% without substantial accuracy loss. Both techniques lower inference latency and hardware costs, and these optimization methods are essential for deploying models on edge devices and mobile platforms.

Inference is the core mechanism enabling generative AI systems to produce text, images, and code. When you prompt ChatGPT, the inference process tokenizes your input, processes it through the trained neural network layers, and generates output tokens one at a time. The prefill phase processes all input tokens simultaneously, while the decode phase generates output sequentially. This inference capability is what makes large language models responsive and practical for real-world applications.

Inference monitoring tracks how AI models perform in production, including accuracy, latency, and output quality. Platforms like AmICited monitor where brands and domains appear in AI-generated responses across systems like ChatGPT, Perplexity, and Google AI Overviews. Understanding inference behavior helps organizations ensure their content is properly cited and represented when AI systems generate outputs that reference their domains or brand information.

Common inference accelerators include GPUs (Graphics Processing Units) for parallel processing, TPUs (Tensor Processing Units) optimized for neural networks, FPGAs (Field-Programmable Gate Arrays) for customizable workloads, and ASICs (Application-Specific Integrated Circuits) designed for specific tasks. GPUs are most widely used due to their balance of performance and cost, while TPUs excel at large-scale inference. The choice depends on throughput requirements, latency constraints, and budget considerations.

The global AI inference market was valued at USD 106.15 billion in 2025 and is projected to reach USD 254.98 billion by 2030, representing a compound annual growth rate (CAGR) of 19.2%. This rapid growth reflects increasing enterprise adoption of AI applications, with 78% of organizations using AI in 2024, up from 55% in 2023. The expansion is driven by demand for real-time AI applications across industries including healthcare, finance, retail, and autonomous systems.
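The growth rate follows directly from the two market figures. As a quick check in Python, compound annual growth rate is (end / start)^(1 / years) - 1, here applied to the 2025 and 2030 values over five years.

```python
start_usd_bn = 106.15  # market size in 2025 (USD billions)
end_usd_bn = 254.98    # projected market size in 2030 (USD billions)
years = 2030 - 2025

cagr = (end_usd_bn / start_usd_bn) ** (1 / years) - 1
print(f"CAGR = {cagr:.1%}")  # -> CAGR = 19.2%

# Sanity check: compounding the start value at that rate recovers the 2030 projection
print(round(start_usd_bn * (1 + cagr) ** years, 2))
```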

Start tracking how AI chatbots mention your brand across ChatGPT, Perplexity, and other platforms. Get actionable insights to improve your AI presence.

Learn effective methods to identify, verify, and correct inaccurate information in AI-generated answers from ChatGPT, Perplexity, and other AI systems.

Model parameters are learnable variables in AI models that determine behavior. Understand weights, biases, and how parameters impact AI model performance and tr...

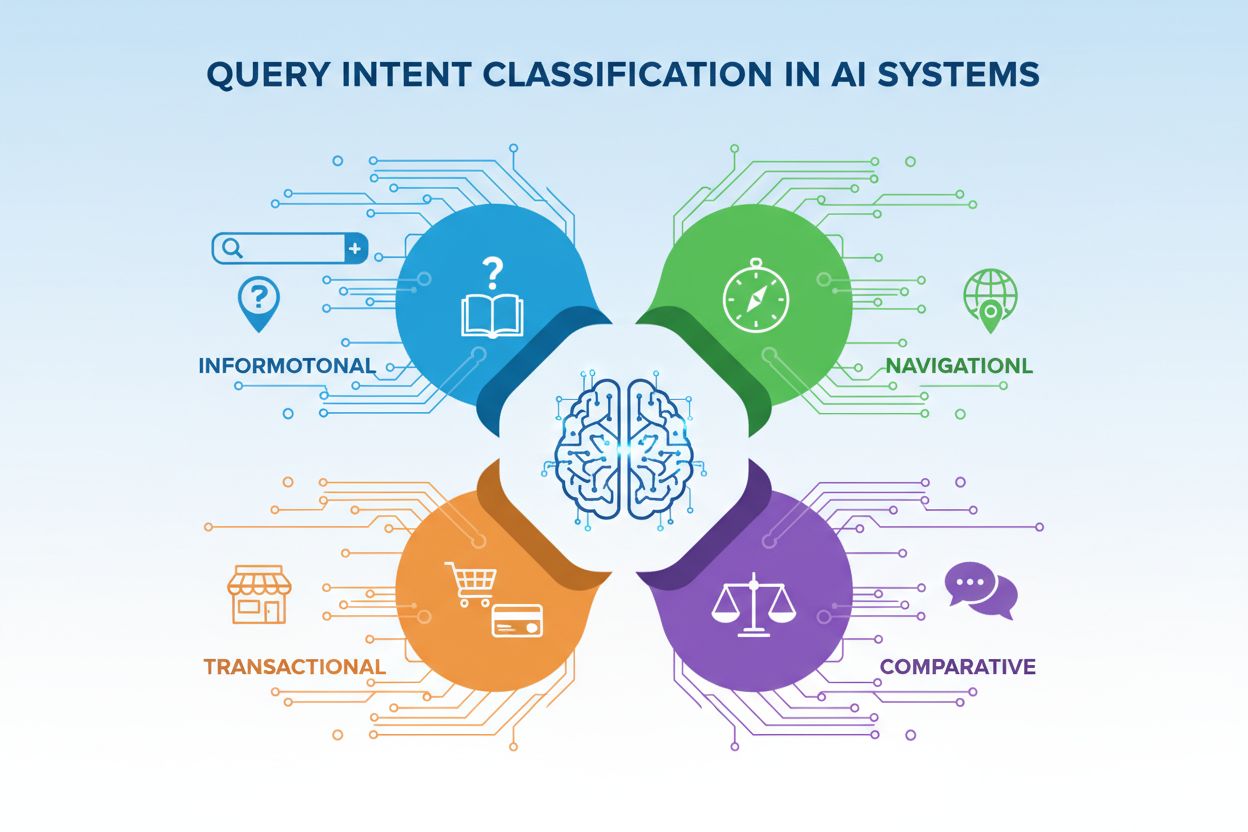

Learn about Query Intent Classification - how AI systems categorize user queries by intent (informational, navigational, transactional, comparative). Understand...

Cookie Consent

We use cookies to enhance your browsing experience and analyze our traffic. See our privacy policy.