Technical SEO

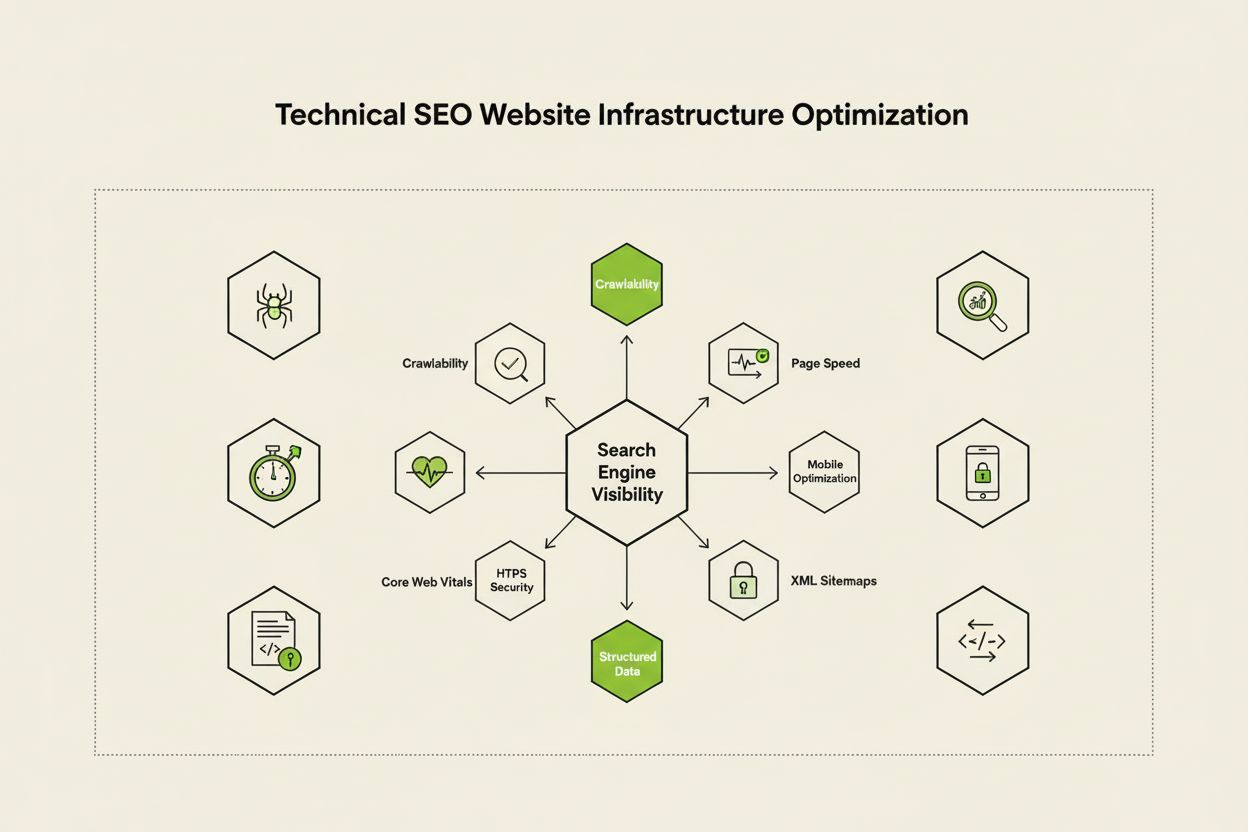

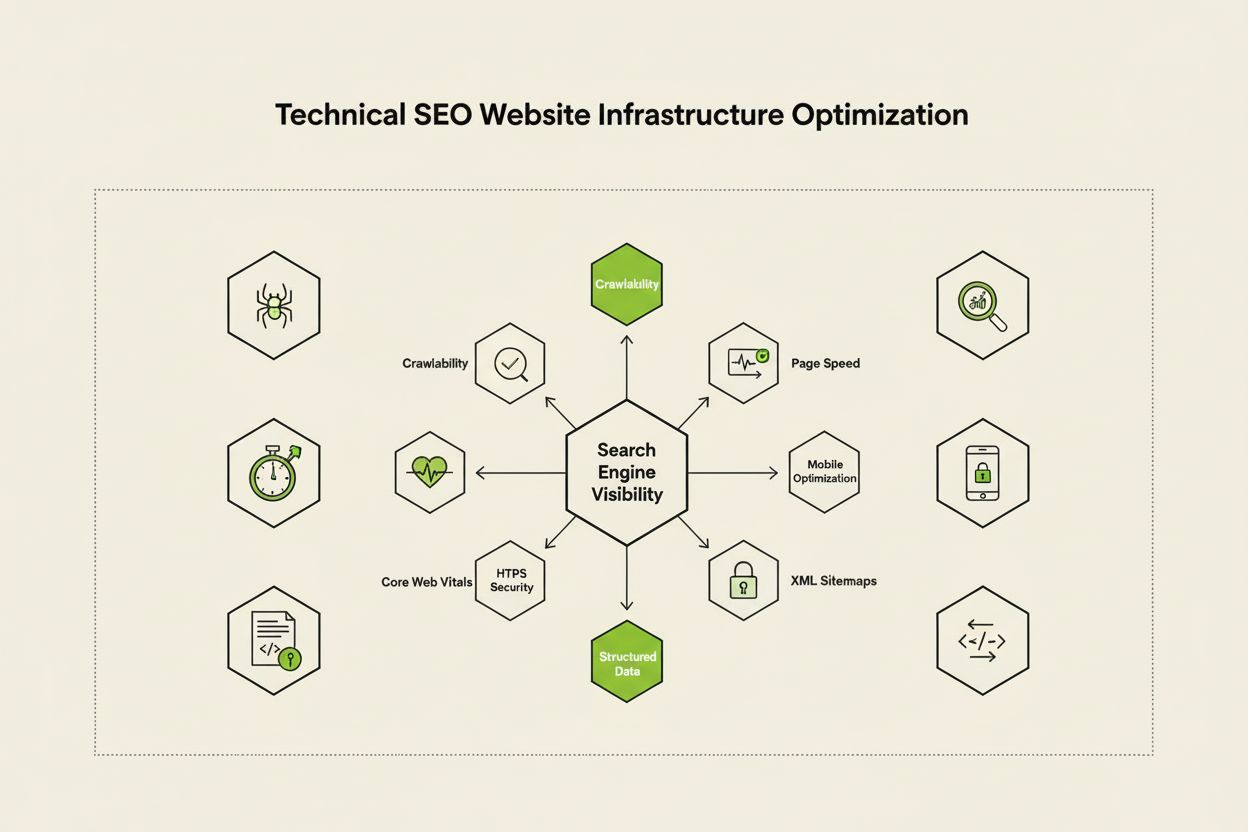

Technical SEO optimizes website infrastructure for search engine crawling, indexing, and ranking. Learn crawlability, Core Web Vitals, mobile optimization, and ...

JavaScript SEO is the process of optimizing JavaScript-rendered websites to ensure search engines can effectively crawl, render, and index content. It encompasses best practices for making JavaScript-powered web applications discoverable and rankable in search results while maintaining optimal performance and user experience.


JavaScript SEO is the specialized practice of optimizing JavaScript-rendered websites to ensure search engines can effectively crawl, render, and index content. It encompasses a comprehensive set of technical strategies, best practices, and implementation methods designed to make JavaScript-powered web applications fully discoverable and rankable in search results. Unlike traditional HTML-based websites where content is immediately available in the server response, JavaScript-rendered content requires additional processing steps that can significantly impact how search engines understand and rank your pages. The discipline combines technical SEO expertise with an understanding of how modern web frameworks like React, Vue, and Angular interact with search engine crawlers. JavaScript SEO has become increasingly critical as 98.7% of websites now incorporate some level of JavaScript, making it essential knowledge for any SEO professional working with contemporary web technologies.

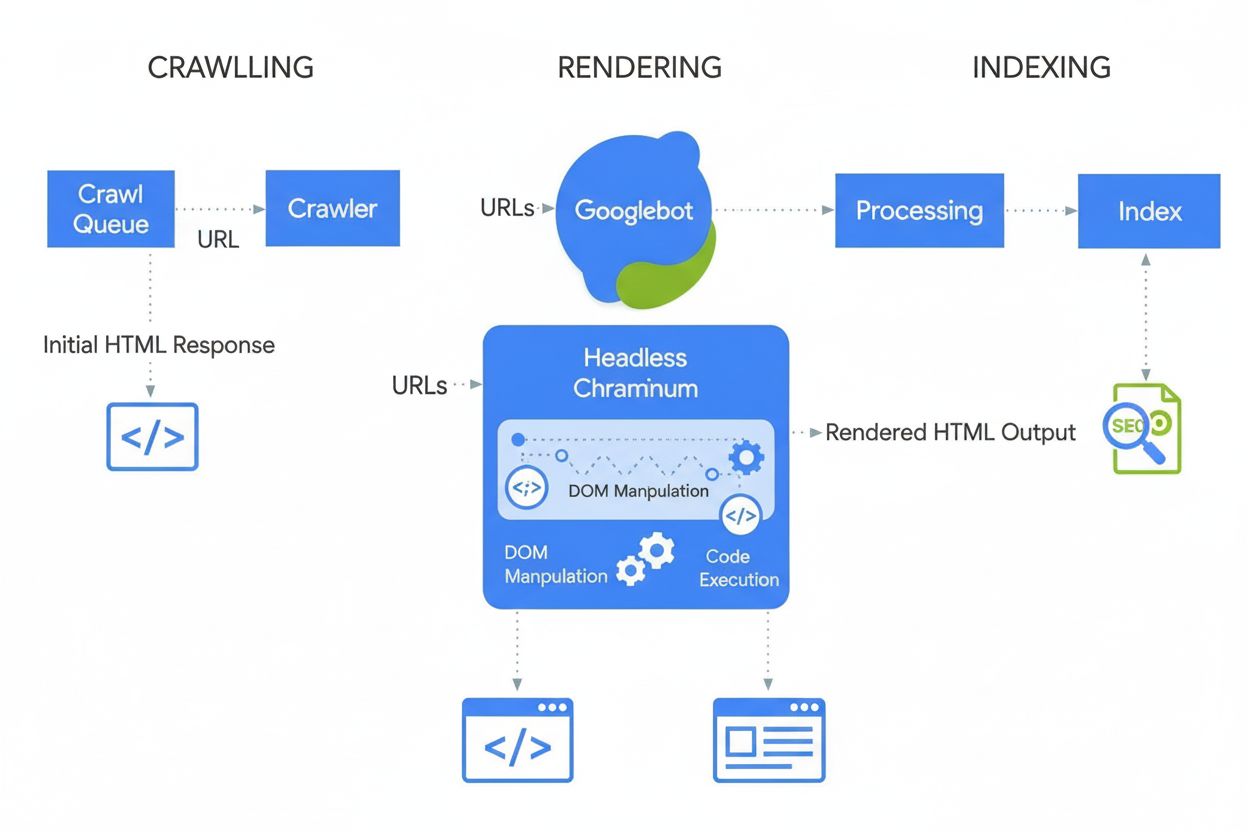

The rise of JavaScript frameworks has fundamentally transformed how websites are built and how search engines must process them. In the early days of the web, Googlebot simply parsed HTML responses from servers, making SEO straightforward—content in the HTML was indexed. However, as developers embraced client-side rendering to create more interactive and dynamic user experiences, search engines faced a critical challenge: content was no longer present in the initial HTML response but was instead generated by JavaScript execution in the browser. This shift created a significant gap between what users saw and what search engines could initially access. Google responded by developing headless Chromium rendering capabilities, allowing Googlebot to execute JavaScript and process the rendered DOM. However, this rendering process is resource-intensive—approximately 100 times more expensive than simply parsing HTML—which means Google cannot render every page immediately. This resource constraint created the concept of a render budget, where pages are queued for rendering based on their expected importance and search traffic potential. Understanding this evolution is crucial because it explains why JavaScript SEO is not optional but rather a fundamental component of modern technical SEO strategy.

Google’s approach to JavaScript-rendered content follows a sophisticated three-phase process that fundamentally differs from traditional HTML crawling. In the crawling phase, Googlebot requests a URL and receives the initial HTML response. It immediately parses this response to extract links and check for indexing directives like robots meta tags and noindex declarations. Critically, if a page contains a noindex tag in the initial HTML, Google will not proceed to render it—this is a key distinction that many SEOs overlook. Simultaneously, the URL is queued for the rendering phase, where Web Rendering Service (WRS) uses headless Chromium to execute JavaScript, build the DOM, and generate the fully rendered HTML. This rendering step can take seconds or longer depending on JavaScript complexity, and pages may wait in the render queue for extended periods if Google’s resources are constrained. Finally, in the indexing phase, Google processes the rendered HTML to extract content, links, and metadata for inclusion in the search index. The critical insight here is that Google indexes based on rendered HTML, not the initial response HTML—meaning JavaScript can completely change what gets indexed. This three-phase process explains why JavaScript sites often experience slower indexing, why render delays matter, and why comparing response HTML to rendered HTML is essential for diagnosing JavaScript SEO issues.
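Because a noindex directive in the initial HTML stops the pipeline before rendering, it can be worth checking the raw server response for it. The following is a minimal sketch, not a definitive implementation: the function name `hasNoindexInResponse` is hypothetical, it uses simple regular expressions rather than a full HTML parser, and it does not inspect the `X-Robots-Tag` HTTP header.

```typescript
// Hypothetical helper: reports whether the initial (response) HTML carries a
// robots "noindex" directive aimed at all crawlers or at Googlebot.
// Assumption: regex matching is good enough for an audit sketch; a real tool
// would use an HTML parser.
function hasNoindexInResponse(responseHtml: string): boolean {
  const metaTags = responseHtml.match(/<meta\b[^>]*>/gi) || [];
  for (const tag of metaTags) {
    const name = /\bname\s*=\s*["']?([^"'\s>]+)/i.exec(tag);
    const content = /\bcontent\s*=\s*["']([^"']*)["']/i.exec(tag);
    if (!name || !content) continue;

    const target = name[1].toLowerCase();
    if (target !== "robots" && target !== "googlebot") continue;

    // "none" is shorthand for "noindex, nofollow".
    const directives = content[1].toLowerCase().split(",").map((d) => d.trim());
    if (directives.indexOf("noindex") !== -1 || directives.indexOf("none") !== -1) {
      return true;
    }
  }
  return false;
}
```

Running this against the response HTML (what "View Source" shows) rather than the rendered DOM matters here, since a noindex that JavaScript later removes would still have been seen first.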

| Rendering Method | How It Works | SEO Advantages | SEO Disadvantages | Best For |
|---|---|---|---|---|
| Server-Side Rendering (SSR) | Content fully rendered on server before delivery to client | Content immediately available in initial HTML; fast indexing; no render delays; supports all crawlers | Higher server load; slower Time to First Byte (TTFB); complex implementation | SEO-critical sites, ecommerce, content-heavy sites, news publishers |
| Client-Side Rendering (CSR) | Server sends minimal HTML; JavaScript renders content in browser | Reduced server load; better scalability; faster page transitions for users | Delayed indexing; requires rendering; invisible to LLM crawlers; slower initial load; consumes crawl budget | Web applications, dashboards, content behind login, non-SEO-dependent sites |
| Dynamic Rendering | Server detects crawlers and serves pre-rendered HTML; users get CSR | Content immediately available to crawlers; balances bot and user experience; easier than SSR | Complex setup; tool dependency; potential cloaking risks; requires bot detection; temporary solution | Large JavaScript-heavy sites, SPAs needing search visibility, transitional solution |
| Static Site Generation (SSG) | Content pre-rendered at build time; served as static HTML | Fastest performance; optimal SEO; no rendering delays; excellent Core Web Vitals | Limited dynamic content; rebuild required for updates; not suitable for real-time data | Blogs, documentation, marketing sites, content that changes infrequently |

JavaScript-rendered websites present several technical obstacles that directly impact SEO performance and search visibility. The most fundamental challenge is rendering delay—since rendering is resource-intensive, Google may delay rendering pages for hours or even days, meaning your content won’t be indexed immediately after publication. This is particularly problematic for time-sensitive content like news articles or product launches. Another critical issue is soft 404 errors, which occur when single-page applications return a 200 HTTP status code even for non-existent pages, confusing search engines about which pages should be indexed. JavaScript-induced changes to critical elements represent another major obstacle: when JavaScript modifies titles, canonical tags, meta robots directives, or internal links after the initial HTML response, search engines may index incorrect versions or miss important SEO signals. The crawl budget consumption problem is particularly severe for large sites—JavaScript files are large and resource-intensive, meaning Google spends more resources rendering fewer pages, limiting how deeply it can crawl your site. Additionally, LLM crawlers and AI search tools don’t execute JavaScript, making JavaScript-only content invisible to emerging AI search platforms like Perplexity, Claude, and others. Statistics show that 31.9% of SEOs aren’t sure how to determine if a website is significantly JavaScript-dependent, and 30.9% aren’t comfortable investigating JavaScript-caused SEO issues, highlighting the knowledge gap in the industry.

Optimizing JavaScript-rendered content requires a multi-faceted approach that addresses both technical implementation and strategic decision-making. The first and most important best practice is to include essential content in the initial HTML response—titles, meta descriptions, canonical tags, and critical body content should be present in the server response before JavaScript executes. This ensures search engines get a complete first impression of your page and don’t have to wait for rendering to understand what the page is about. Avoid blocking JavaScript files in robots.txt, as this prevents Google from rendering your pages properly; instead, allow access to all JavaScript resources needed for rendering. Implement proper HTTP status codes—use 404 for non-existent pages and 301 redirects for moved content rather than relying on JavaScript to handle these scenarios. For single-page applications, use the History API instead of URL fragments to ensure each view has a unique, crawlable URL; fragments like #/products are unreliable for search engines. Minimize and defer non-critical JavaScript to reduce rendering time and improve Core Web Vitals—use code splitting to load only necessary JavaScript on each page. Implement lazy loading for images using the native loading="lazy" attribute rather than JavaScript-based solutions, allowing search engines to discover images without rendering. Use content hashing in JavaScript filenames (e.g., main.2a846fa617c3361f.js) so Google knows when code has changed and needs to be re-fetched. Test your implementation thoroughly using Google Search Console’s URL Inspection Tool, Screaming Frog with rendering enabled, or Sitebulb’s Response vs Render report to compare initial HTML with rendered HTML and identify discrepancies.
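The status-code advice above can be sketched as a small server-side decision function. This is an illustrative sketch under stated assumptions: `knownPaths`, `movedPaths`, and `decideStatus` are hypothetical names, and a real site would derive its route tables from its router or CMS rather than hard-coding them.

```typescript
// Possible outcomes for a requested path.
type RouteDecision =
  | { status: 200 }
  | { status: 301; location: string }
  | { status: 404 };

// Hypothetical route tables, hard-coded only for illustration.
const knownPaths: string[] = ["/", "/products", "/products/blue-widget"];
const movedPaths: { [oldPath: string]: string } = { "/old-products": "/products" };

// Decide the HTTP status on the server, before any JavaScript runs, so that
// unknown URLs return a real 404 instead of a 200 shell (a soft 404).
function decideStatus(path: string): RouteDecision {
  // Treat "/products/" and "/products" as the same path.
  const normalized = path.length > 1 ? path.replace(/\/+$/, "") || "/" : path;

  if (Object.prototype.hasOwnProperty.call(movedPaths, normalized)) {
    return { status: 301, location: movedPaths[normalized] };
  }
  if (knownPaths.indexOf(normalized) !== -1) {
    return { status: 200 };
  }
  return { status: 404 };
}
```

Whatever web framework handles the request would then set the response status and `Location` header from the returned value before sending the application shell.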

Choosing the right rendering approach is one of the most consequential decisions for JavaScript SEO. Server-Side Rendering (SSR) is the gold standard for SEO-critical websites because content is fully rendered on the server before delivery, eliminating rendering delays and ensuring all crawlers can access content. Frameworks like Next.js and Nuxt.js make SSR implementation more accessible for modern development teams. However, SSR requires more server resources and can result in slower Time to First Byte (TTFB), which impacts user experience. Client-Side Rendering (CSR) is appropriate for web applications where SEO is not the primary concern, such as dashboards, tools behind login walls, or internal applications. CSR reduces server load and allows for highly interactive user experiences, but it creates indexing delays and makes content invisible to LLM crawlers. Dynamic rendering serves as a pragmatic middle ground: it detects search engine crawlers and serves them pre-rendered HTML while users receive the interactive CSR experience. Tools like Prerender.io handle this automatically, but Google explicitly states this is a temporary solution and recommends moving toward SSR long-term. Static Site Generation (SSG) is optimal for content that doesn’t change frequently—content is pre-rendered at build time and served as static HTML, providing the best performance and SEO characteristics. The decision should be based on your site’s SEO priorities, technical resources, and content update frequency. Data shows that 60% of SEOs now use JavaScript crawlers for audits, indicating growing awareness that rendering must be considered in technical SEO analysis.
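The crawler-detection step in dynamic rendering can be illustrated with a simple user-agent check. This is a rough sketch, not how any particular prerendering service works: the token list is a short, non-exhaustive assumption, and `isKnownCrawler` and `chooseRenderMode` are hypothetical names. User-agent strings can be spoofed, so production setups often verify crawlers by other means as well.

```typescript
// Illustrative, non-exhaustive list of lowercase user-agent tokens (assumption).
const CRAWLER_TOKENS: string[] = ["googlebot", "bingbot", "duckduckbot", "yandex", "baiduspider"];

// Returns true when the user-agent string contains a known crawler token.
function isKnownCrawler(userAgent: string | undefined): boolean {
  if (!userAgent) return false;
  const ua = userAgent.toLowerCase();
  return CRAWLER_TOKENS.some((token) => ua.indexOf(token) !== -1);
}

type RenderMode = "prerendered-html" | "client-side-app";

// Crawlers get pre-rendered HTML; everyone else gets the client-side app.
// Both versions should carry the same content to avoid cloaking concerns.
function chooseRenderMode(userAgent: string | undefined): RenderMode {
  return isKnownCrawler(userAgent) ? "prerendered-html" : "client-side-app";
}
```

The extra branch per request is part of why the table above lists bot detection and cloaking risk among the disadvantages of this approach.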

Effective JavaScript SEO requires ongoing monitoring of specific metrics and indicators that reveal how search engines interact with your JavaScript-rendered content. The response vs. rendered HTML comparison is fundamental: using tools like Sitebulb’s Response vs Render report, you can identify exactly what JavaScript changes on your pages, including modifications to titles, meta descriptions, canonical tags, internal links, and robots directives. Statistics reveal that 18.26% of JavaScript crawls have H1 tags only in rendered HTML (not in the initial response), and critically, 4.60% of JavaScript audits show noindex tags only in response HTML. That is a nightmare scenario: Google sees the noindex, never renders the page, and content you want indexed stays out of the index. Google Search Console does not report a render queue directly, so render budget pressure has to be inferred; in the Page indexing report (formerly the Coverage report), a growing number of URLs that are crawled or discovered but not yet indexed can be a hint that rendering is lagging. Core Web Vitals are particularly important for JavaScript sites because JavaScript execution directly impacts Largest Contentful Paint (LCP), Interaction to Next Paint (INP, which replaced First Input Delay as a Core Web Vital in March 2024), and Cumulative Layout Shift (CLS). Monitor indexing latency, meaning how long after publication your content appears in Google’s index, as JavaScript sites typically experience longer delays than HTML sites. Track crawl efficiency by comparing the number of pages crawled versus the total pages on your site; JavaScript sites often have lower crawl efficiency due to resource constraints. Use Google Search Console’s URL Inspection Tool to verify that critical content appears in the rendered HTML Google processes, not just in the initial response.
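The response vs. rendered comparison can be approximated in a few lines once both HTML snapshots are available as strings. The sketch below is a simplified illustration and not how Sitebulb or any other tool works internally: `extractSignals` and `diffSignals` are hypothetical names, and the regular expressions assume common attribute order (`rel` before `href`, `name` before `content`).

```typescript
// The SEO-critical elements compared between the two snapshots.
interface SeoSignals {
  title: string | null;
  canonical: string | null;
  robots: string | null;
  h1: string | null;
}

// Returns the first capture group of the pattern, trimmed, or null.
function firstMatch(html: string, pattern: RegExp): string | null {
  const m = pattern.exec(html);
  return m ? m[1].trim() : null;
}

// Assumption: regexes stand in for a real HTML parser in this sketch.
function extractSignals(html: string): SeoSignals {
  return {
    title: firstMatch(html, /<title[^>]*>([\s\S]*?)<\/title>/i),
    canonical: firstMatch(html, /<link[^>]*rel=["']canonical["'][^>]*href=["']([^"']*)["']/i),
    robots: firstMatch(html, /<meta[^>]*name=["']robots["'][^>]*content=["']([^"']*)["']/i),
    h1: firstMatch(html, /<h1[^>]*>([\s\S]*?)<\/h1>/i),
  };
}

// Lists which signals differ between response HTML and rendered HTML.
function diffSignals(responseHtml: string, renderedHtml: string): string[] {
  const before = extractSignals(responseHtml);
  const after = extractSignals(renderedHtml);
  const keys: (keyof SeoSignals)[] = ["title", "canonical", "robots", "h1"];
  return keys.filter((key) => before[key] !== after[key]);
}
```

An empty result means JavaScript left these four elements unchanged; any listed key is a candidate for moving into the server response.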

The emergence of AI-powered search platforms like Perplexity, ChatGPT, Claude, and Google AI Overviews has created a new dimension to JavaScript SEO that extends beyond traditional search engines. Most LLM crawlers do not execute JavaScript—they consume raw HTML and DOM content as it appears in the initial server response. This means that if your critical content, product information, or brand messaging only appears after JavaScript execution, it is completely invisible to AI search tools. This creates a dual visibility problem: content that’s invisible to LLM crawlers won’t be cited in AI responses, and users searching through AI platforms won’t discover your content. For AmICited users monitoring brand and domain appearances in AI responses, this is particularly critical—if your JavaScript-rendered content isn’t accessible to LLM crawlers, you won’t appear in AI citations at all. The solution is to ensure that essential content is present in the initial HTML response, making it accessible to both traditional search engines and AI crawlers. This is why Server-Side Rendering or Dynamic Rendering becomes even more important in the age of AI search—you need your content visible not just to Googlebot but to the growing ecosystem of AI search tools that don’t execute JavaScript.
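One rough way to estimate what a crawler that does not execute JavaScript can read is to strip scripts and tags from the initial HTML and look for key phrases in what remains. This is a minimal sketch under simplifying assumptions: `visibleTextWithoutJs` and `missingFromResponse` are hypothetical names, HTML entities are not decoded, and actual LLM crawlers may extract text differently.

```typescript
// Approximates the text available in raw HTML without running JavaScript.
function visibleTextWithoutJs(responseHtml: string): string {
  return responseHtml
    // Drop elements whose contents are code or inert markup, not page text.
    .replace(/<(script|style|template)\b[\s\S]*?<\/\1>/gi, " ")
    // Drop all remaining tags, keeping the text between them.
    .replace(/<[^>]+>/g, " ")
    .replace(/\s+/g, " ")
    .trim();
}

// Returns the phrases that do NOT appear in the raw HTML text.
function missingFromResponse(responseHtml: string, phrases: string[]): string[] {
  const text = visibleTextWithoutJs(responseHtml).toLowerCase();
  return phrases.filter((phrase) => text.indexOf(phrase.toLowerCase()) === -1);
}
```

For example, a client-rendered shell that only contains `<div id="root"></div>` plus a script bundle would report every key phrase as missing, while a server-rendered version of the same page would report none.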

The landscape of JavaScript SEO continues to evolve as both search engines and web technologies advance. Google has made significant investments in improving JavaScript rendering capabilities, moving from a two-phase process (crawl and index) to a three-phase process (crawl, render, and index) that better handles modern web applications. However, rendering remains resource-constrained, and there’s no indication that Google will render every page immediately or that render budgets will disappear. The industry is seeing a shift toward hybrid rendering approaches where critical content is server-rendered while interactive elements are client-rendered, balancing SEO needs with user experience. Web Components and Shadow DOM are becoming more prevalent, requiring SEOs to understand how these technologies interact with search engine rendering. The rise of AI search is creating new pressure to ensure content is accessible without JavaScript execution, potentially driving adoption of SSR and SSG approaches. Core Web Vitals continue to be a ranking factor, and JavaScript’s impact on these metrics means performance optimization is inseparable from JavaScript SEO. Industry data shows that only 10.6% of SEOs perfectly understand how Google crawls, renders, and indexes JavaScript, indicating significant room for education and skill development. As JavaScript frameworks become more sophisticated and AI search platforms proliferate, JavaScript SEO expertise will become increasingly valuable and essential for competitive organic visibility.

JavaScript SEO has evolved from a niche technical concern to a fundamental component of modern search engine optimization. With 98.7% of websites incorporating JavaScript and 88% of SEOs regularly encountering JavaScript-dependent sites, the ability to optimize JavaScript-rendered content is no longer optional; it is essential. The complexity of the three-phase rendering pipeline, the resource constraints of render budgets, and the emergence of AI search platforms have created a multifaceted challenge that requires both technical knowledge and strategic decision-making. The statistics are sobering: 41.6% of SEOs haven’t read Google’s JavaScript documentation, 31.9% aren’t sure how to identify JavaScript-dependent sites, and 30.9% aren’t comfortable investigating JavaScript-caused issues. Yet the impact is significant: 4.60% of JavaScript audits show critical issues like noindex tags only in response HTML that prevent indexing entirely. The path forward requires investment in education, adoption of appropriate rendering strategies, and implementation of best practices that ensure content is accessible to both search engines and AI crawlers. Whether through Server-Side Rendering, Dynamic Rendering, or careful optimization of Client-Side Rendering, the goal remains constant: make your JavaScript-powered content fully discoverable, indexable, and visible across all search platforms, from traditional Google Search to emerging AI search tools. For organizations using AmICited to monitor brand visibility in AI responses, JavaScript SEO becomes even more critical, as unoptimized JavaScript-rendered content will be invisible to LLM crawlers and won’t generate citations in AI search results.

Yes, Google does render and index JavaScript content using headless Chromium. However, rendering is resource-intensive and deferred until Google has available resources. Google processes pages in three phases: crawling, rendering, and indexing. Pages marked with noindex tags are not rendered, and rendering delays can slow down indexing. Most importantly, the rendered HTML—not the initial response HTML—is what Google uses for indexing decisions.

According to 2024 data, 98.7% of websites now have some level of JavaScript reliance. Additionally, 62.3% of developers use JavaScript as their primary programming language, with 88% of SEOs dealing with JavaScript-dependent sites either sometimes or all the time. This widespread adoption makes JavaScript SEO knowledge essential for modern SEO professionals.

Key challenges include rendering delays that slow indexing, resource-intensive processing that consumes crawl budget, potential soft 404 errors in single-page applications, and JavaScript-induced changes to critical elements like titles, canonicals, and meta robots tags. Additionally, most LLM crawlers and AI search tools don't execute JavaScript, making content invisible to AI-powered search platforms if it only appears post-render.

Response HTML is the initial HTML sent from the server (what you see in 'View Source'). Rendered HTML is the final DOM after JavaScript execution (what you see in browser Inspector). JavaScript can significantly modify the DOM by injecting content, changing meta tags, rewriting titles, and adding or removing links. Search engines index based on rendered HTML, not response HTML.

Server-Side Rendering (SSR) is optimal for SEO as content is fully rendered on the server before delivery. Client-Side Rendering (CSR) requires search engines to render pages, causing delays and indexing issues. Dynamic rendering serves pre-rendered HTML to crawlers while users get CSR, but Google recommends it only as a temporary solution. Choose based on your site's SEO priorities and technical resources.

Use Google Search Console's URL Inspection Tool: inspect the URL, click 'Test live URL,' then open 'View tested page' and select the 'HTML' tab to see the rendered HTML Google processed. Alternatively, use tools like Screaming Frog with rendering enabled, Sitebulb's Response vs Render report, or Chrome DevTools to compare initial HTML with rendered DOM and identify JavaScript-related issues.

A render budget is the amount of resources Google allocates to rendering pages on your site. Google prioritizes rendering for pages expected to receive more search traffic. JavaScript-heavy sites with lower priority may experience significant rendering delays, slowing indexing. This is why optimizing JavaScript to reduce rendering time and ensuring critical content is in the initial HTML response is crucial for SEO performance.

Most LLM crawlers and AI-powered search tools (like Perplexity, Claude, and others) don't execute JavaScript—they consume raw HTML. If your critical content only appears after JavaScript execution, it's invisible to both Google's initial crawl and AI search platforms. This makes JavaScript SEO essential not just for traditional search but for emerging AI search visibility and citation opportunities.

Start tracking how AI chatbots mention your brand across ChatGPT, Perplexity, and other platforms. Get actionable insights to improve your AI presence.

Technical SEO optimizes website infrastructure for search engine crawling, indexing, and ranking. Learn crawlability, Core Web Vitals, mobile optimization, and ...

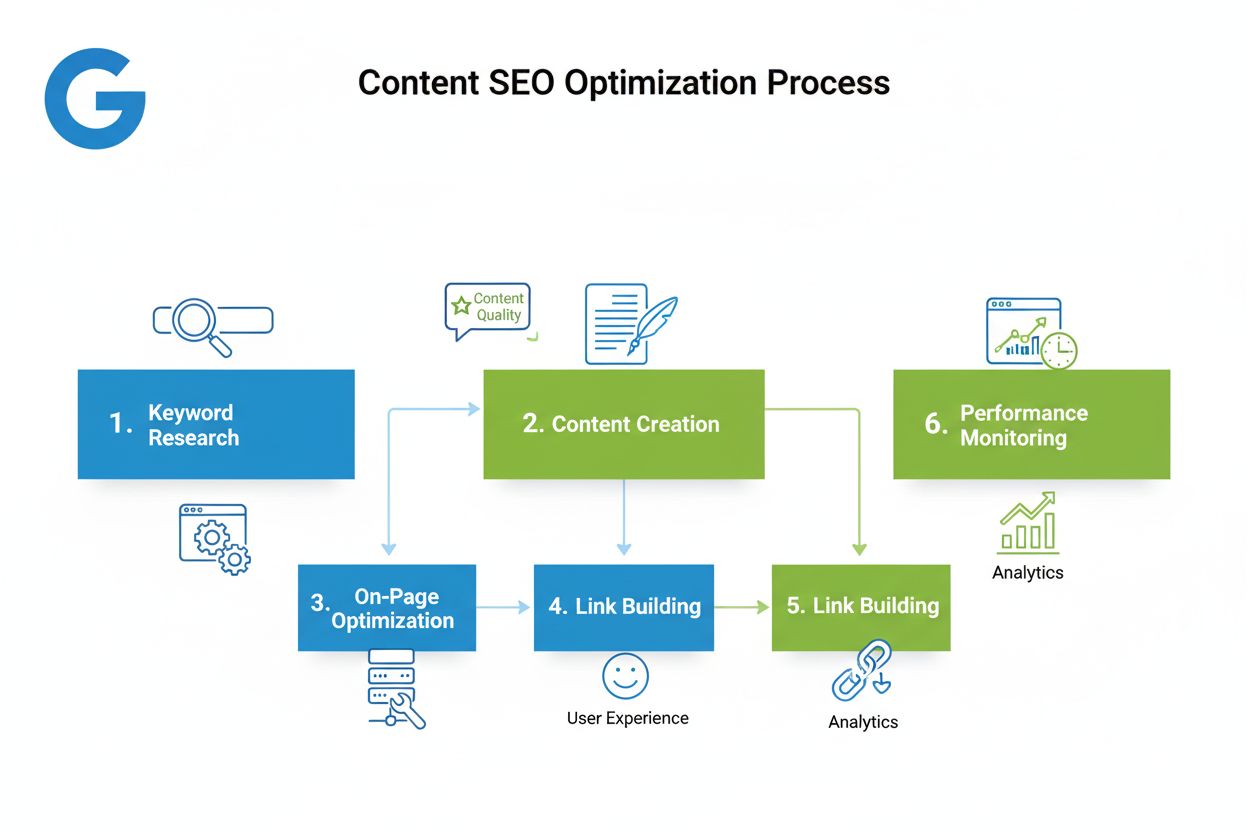

Content SEO is the strategic creation and optimization of high-quality content to improve search engine rankings and organic visibility. Learn how to optimize c...

Image SEO optimizes images for search visibility through alt text, file names, compression, and structured data. Learn how to improve rankings in Google Images ...

Cookie Consent

We use cookies to enhance your browsing experience and analyze our traffic. See our privacy policy.