What is Large Language Model Optimization (LLMO)? Complete Guide

Learn what LLMO is, how it works, and why it matters for AI visibility. Discover optimization techniques to get your brand mentioned in ChatGPT, Perplexity, and...

Large Language Model Optimization (LLMO) is the practice of optimizing content, website structure, and brand presence to appear in AI-generated responses from conversational AI tools like ChatGPT, Claude, Perplexity, and Google Gemini. Unlike traditional SEO, which focuses on search rankings, LLMO aims to get brands mentioned, cited, and recommended within LLM responses to improve visibility and authority in AI-powered discovery.

Large Language Model Optimization (LLMO) is the strategic practice of optimizing content, website architecture, and brand presence to achieve visibility and citations within AI-generated responses from conversational AI systems. Unlike traditional Search Engine Optimization (SEO), which focuses on ranking websites in search engine results pages, LLMO targets the emerging ecosystem of Large Language Models like ChatGPT, Claude, Perplexity, and Google Gemini. The primary goal of LLMO is not necessarily to drive clicks to your website, but rather to ensure your brand is mentioned, recommended, and cited when users interact with these AI systems. This represents a fundamental shift in how digital visibility is achieved—from optimizing for algorithmic ranking to optimizing for AI-driven brand recognition and authority. As AI search usage explodes globally, with ChatGPT processing 3+ billion prompts monthly and Google AI Overviews appearing in 13%+ of searches, LLMO has become essential for maintaining competitive visibility in the AI-first discovery landscape.

The emergence of Large Language Models has fundamentally transformed how people search for and discover information online. Historically, search was dominated by keyword-based queries on Google, Bing, and other traditional search engines. Users would type a query, receive a list of ranked results, and click through to websites. This model created a clear incentive structure: rank higher, get more clicks, drive more traffic. However, the introduction of conversational AI systems has disrupted this paradigm entirely. Instead of browsing through multiple links, users now ask natural language questions to AI assistants and receive synthesized, direct answers. This shift has profound implications for digital marketing and brand visibility.

Research from Semrush reveals that AI search visitors convert 4.4x better than traditional organic search visitors, and LLM traffic channels are projected to drive as much business value as traditional search by 2027. This isn’t merely a marginal trend—it represents a wholesale transformation of the search landscape. According to Adobe Analytics, generative AI traffic to U.S. retail websites grew by 1,200% between July 2024 and February 2025, with the 2024 holiday season alone seeing a 1,300% increase in AI search referrals. Simultaneously, Google’s search market share dropped below 90% in October 2024 for the first time since March 2015, signaling that alternative discovery channels are capturing meaningful market share. The Digital Bloom 2025 AI Citation Report, analyzing 680+ million citations, found that ChatGPT processes 3+ billion prompts monthly, Perplexity indexes 200+ billion URLs, and Google AI Overviews appear in 13%+ of searches. These metrics underscore why LLMO has transitioned from a speculative concept to an operational necessity for brands seeking sustained visibility.

The distinction between parametric knowledge (what LLMs learned during training) and retrieved knowledge (real-time information fetched via Retrieval Augmented Generation or RAG) is critical to understanding LLMO. Approximately 60% of ChatGPT queries are answered purely from parametric knowledge without triggering web search, meaning that entities mentioned frequently across authoritative sources during training develop stronger neural representations and are more likely to be recalled. For the remaining 40% of queries requiring real-time information, LLMs use hybrid retrieval systems combining semantic search with keyword matching, achieving 48% improvement over single-method approaches. This dual-pathway architecture means LLMO strategies must address both training data dominance and real-time retrieval optimization.

| Strategy | Primary Focus | Target Platforms | Key Ranking Signals | Citation Mechanism | Measurement |
|---|---|---|---|---|---|
| LLMO | Brand mentions in AI responses | ChatGPT, Claude, Perplexity, Gemini | Information gain, entity optimization, semantic depth | Direct citations in conversational responses | Brand mentions, share of voice, citation frequency |
| SEO | Organic search rankings | Google, Bing, Yahoo | Backlinks, keywords, technical signals | Ranked position in SERPs | Keyword rankings, organic traffic, CTR |
| AEO | Google AI Overviews appearance | Google Search results | Featured snippets, structured data, E-E-A-T | Inclusion in AI-generated summaries | AIO appearance rate, featured snippet position |
| GEO | Multi-platform AI visibility | All AI answer engines | Cross-platform authority, content structure | Citations across diverse AI platforms | Cross-platform mention frequency, domain diversity |
| Entity SEO | Knowledge Graph recognition | Google Knowledge Graph, Wikidata | Entity consistency, schema markup, cross-platform mentions | Knowledge Panel appearance | Knowledge Panel visibility, entity recognition |

LLMO success rests on five interconnected pillars, each addressing different aspects of how Large Language Models discover, evaluate, and cite content. Understanding and implementing these pillars systematically increases the probability that your brand will be selected as a source in AI-generated responses.

Information gain refers to the degree to which your content provides original, unique insights that LLMs haven’t encountered elsewhere in their training data or retrieval systems. LLMs prioritize content that offers novel perspectives, proprietary data, or synthesized insights over content that merely repackages existing information. A Princeton University study on Generative Engine Optimization found that content including quotes, statistics, and links to credible data sources is mentioned 30-40% more often in LLMs compared to baseline unoptimized content. This makes information gain one of the few LLMO tactics whose effect on visibility has been quantified.

Creating content with strong information gain requires moving beyond surface-level coverage. Instead of writing “10 Top SEO Tips,” consider publishing “How We Increased Organic Traffic 300% Using Unconventional SEO Tactics Competitors Ignore.” The latter demonstrates original methodology, proprietary insights, and unique value. Practical approaches to building information gain include: (1) Conducting original research or surveys that generate proprietary data; (2) Publishing case studies with specific metrics and real-world outcomes; (3) Sharing contrarian viewpoints backed by data and reasoning; (4) Providing frameworks or methodologies that others haven’t published; and (5) Synthesizing multiple sources into novel insights that add analytical depth. When LLMs encounter content rich with original data points, statistics, and expert quotations, they recognize it as authoritative and citable—making it significantly more likely to appear in AI-generated responses.

Entity optimization is the process of ensuring that Large Language Models and search engines clearly understand who you are, what you do, and what topics you’re authoritative about. An entity is any person, place, brand, or concept that AI systems can recognize and connect to other entities in their knowledge graphs. For your brand, entity optimization means creating a clear “identity card” that AI systems can reference. This involves several interconnected tactics: (1) Implementing schema markup (Organization, Person, Product, Service schema) to explicitly define your entity; (2) Claiming and optimizing your Google Knowledge Panel to ensure accurate brand information; (3) Creating or updating Wikidata entries with essential properties like label, description, founded date, headquarters, and website; and (4) Building consistent citations across authoritative platforms like Wikipedia, LinkedIn, Crunchbase, and industry directories.

Research shows that brands mentioned on 4+ platforms are 2.8x more likely to appear in ChatGPT responses than those with limited cross-platform presence. This multiplier effect occurs because LLMs recognize entity consistency across diverse sources as a signal of legitimacy and importance. When your brand name, description, and associated topics appear consistently across multiple authoritative platforms, LLMs develop stronger entity associations and are more likely to recall and recommend your brand. Additionally, using the sameAs property in schema markup to link your website to Wikidata, Wikipedia, LinkedIn, and other authoritative sources strengthens entity recognition. The goal is for your brand to become a recognized entity in the AI’s semantic network—not just a website, but a distinct, well-understood actor in your industry.
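As a rough illustration of the schema markup and `sameAs` linking described above, the following sketch builds a schema.org Organization object as JSON-LD. The helper name `organization_jsonld` and every value passed to it (brand name, URLs, the Wikidata ID) are hypothetical placeholders, not taken from any real entity.

```python
import json


def organization_jsonld(name, url, description, same_as):
    """Build a schema.org Organization object and return it as a JSON-LD string."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "description": description,
        # sameAs lists profiles on other platforms that describe the same entity.
        "sameAs": list(same_as),
    }
    return json.dumps(data, indent=2)


# All values below are placeholders for illustration only.
markup = organization_jsonld(
    name="Example Co",
    url="https://www.example.com",
    description="Example Co makes hypothetical widgets.",
    same_as=[
        "https://www.wikidata.org/wiki/Q0000000",
        "https://www.linkedin.com/company/example-co",
    ],
)

# JSON-LD is usually embedded in the page inside a script tag of this type.
print(f'<script type="application/ld+json">\n{markup}\n</script>')
```

Keeping the name and description identical to what appears on the linked profiles is the point of the exercise: the markup only helps if it agrees with the other sources.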

Structured content refers to how information is organized and formatted to make it easy for both humans and AI systems to understand and extract. Research by AirOps analyzing ChatGPT citations found that ChatGPT cites content with sequential heading structure (H1 > H2 > H3) nearly three times more often than poorly structured content. Furthermore, almost 80% of articles cited in ChatGPT results include at least one section with a list, whereas only 28.6% of Google’s top results contain lists. Most strikingly, pages cited by ChatGPT have an average of almost 14 list sections—more than 17 times as many list sections as average pages ranked in Google SERPs. This data reveals a fundamental truth: LLMs strongly prefer content that is logically organized with clear signposts.

Practical implementation of structured content for LLMO includes: (1) Using descriptive, question-based headings that mirror how users actually search (e.g., “How to Optimize Meta Descriptions for AI Search” rather than “Tips”); (2) Creating comparison tables for complex topics to present information side-by-side; (3) Weaving FAQ blocks throughout content rather than relegating them to the end—FAQ schema is more than twice as common in LLM-cited content; (4) Using numbered lists for processes and step-by-step guides; (5) Maintaining optimal paragraph length of 40-60 words for easy AI chunking; and (6) Including definition lists for industry terms formatted as “[Term] is [definition].” Each section should be self-contained and comprehensible when extracted as an independent chunk, allowing LLMs to cite specific sections without requiring readers to view the entire article.
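Two of the guidelines above (sequential headings and 40-60 word paragraphs) are simple enough to check automatically. The sketch below is one possible check, assuming the content is Markdown with `#`-style headings and blank lines between blocks. The function name `check_structure` is hypothetical, and lists or tables would be counted as ordinary paragraphs in this simplified version.

```python
import re


def check_structure(markdown_text, min_words=40, max_words=60):
    """Report skipped heading levels and paragraphs outside the word range."""
    issues = []
    previous_level = 0
    # Treat each blank-line-separated block as either a heading or a paragraph.
    blocks = [b.strip() for b in markdown_text.split("\n\n") if b.strip()]
    for block in blocks:
        heading = re.match(r"^(#{1,6})\s+(.*)$", block)
        if heading:
            level = len(heading.group(1))
            # Going from H1 straight to H3 (or starting below H1) skips a level.
            if level > previous_level + 1:
                issues.append(f"heading skips a level: {heading.group(2)!r}")
            previous_level = level
            continue
        words = len(block.split())
        if not min_words <= words <= max_words:
            issues.append(f"paragraph has {words} words: {block[:30]!r}")
    return issues


sample = "# Guide\n\n### Details\n\n" + " ".join(["word"] * 50)
for issue in check_structure(sample):
    print(issue)
```

The word range is a parameter rather than a constant so the same check can be rerun with different thresholds.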

Clarity and attribution refers to writing content that is both easy to understand and properly sourced. A GEO study by Princeton University and IIT Delhi found that adding quotes, citations, and links to sources are the most effective ways to improve LLM visibility. This makes intuitive sense: when LLMs generate responses, they need to quickly extract key facts and understand source credibility. Content that is written clearly and cites authoritative sources makes this process easier, increasing citation probability. Practical tactics include: (1) Writing concise, factual paragraphs (around 2-3 sentences) with frontloaded key information; (2) Including proper citations and outbound links to industry studies, government data, academic research, and expert opinions; (3) Using formatting that aids clarity—bolding key terms, using numbered lists, creating summary boxes, and including transition words; and (4) Providing verifiable data points with clear attribution.

The available data points in the same direction: content with high readability scores and clear source attribution tends to receive more LLM citations. In one reported comparison, an article with 10,000+ words and a Flesch score of 55 received 187 total citations (72 from ChatGPT), while similar content under 4,000 words with lower readability received only 3 citations. Because the two articles differ in both length and readability, the comparison cannot isolate either factor, but it suggests that clarity and proper sourcing are fundamental to LLMO success. When you cite authoritative sources, you’re not just building credibility with human readers; you’re signaling to LLMs that your content is grounded in verifiable information and worthy of citation.
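For readers unfamiliar with the Flesch score mentioned above, the standard Flesch Reading Ease formula is 206.835 − 1.015 × (words per sentence) − 84.6 × (syllables per word). The sketch below applies it to pre-computed counts; the function name is hypothetical, and counting syllables reliably is left out because it requires a dictionary or a heuristic.

```python
def flesch_reading_ease(total_words, total_sentences, total_syllables):
    """Return the Flesch Reading Ease score; higher scores mean easier text."""
    if total_words <= 0 or total_sentences <= 0:
        raise ValueError("word and sentence counts must be positive")
    words_per_sentence = total_words / total_sentences
    syllables_per_word = total_syllables / total_words
    return 206.835 - 1.015 * words_per_sentence - 84.6 * syllables_per_word


# Illustrative counts only: 100 words, 5 sentences, 150 syllables.
score = flesch_reading_ease(100, 5, 150)
print(round(score, 1))
```

Shorter sentences and shorter words both raise the score, which is why the concise, frontloaded paragraphs recommended earlier tend to score well.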

Authoritativeness in the LLMO context refers to how often your brand is mentioned and cited across the web, particularly on high-authority platforms that LLMs commonly reference. Research analyzing 7,000+ citations found that brand search volume has a 0.334 correlation with AI visibility, the strongest predictor identified. A coefficient of 0.334 is a moderate association rather than proof of causation, but it suggests that brands people search for by name are more likely to be mentioned by LLMs. Building authoritativeness requires a multi-channel approach: (1) Getting referenced on high-authority sites like industry publications, news outlets, and authoritative forums; (2) Earning unlinked brand mentions through journalist outreach, HARO (Help a Reporter Out) responses, and industry participation; (3) Publishing consistently within core topic clusters to build semantic footprint; and (4) Engaging authentically on platforms like Reddit and Quora where LLMs frequently source information.

The data on platform-specific citation patterns is revealing: Reddit dominates Perplexity citations at 46.7%, Wikipedia represents 47.9% of ChatGPT citations, and Google AI Overviews cite at least one top-10 organic result in 93.67% of responses. This means that building presence on these platforms directly impacts LLMO visibility. Additionally, 65% of AI bot hits target content published within the past year, and 79% target content updated within 2 years, indicating that content recency is critical. Building authoritativeness is therefore an ongoing process requiring consistent content publication, active community engagement, and strategic media outreach to ensure your brand maintains visibility across the platforms that LLMs reference.

Understanding the technical mechanisms by which Large Language Models retrieve and rank sources is essential for effective LLMO strategy. LLMs operate through two distinct knowledge pathways: parametric knowledge (learned during training) and retrieved knowledge (accessed in real-time via RAG systems). For parametric knowledge, entities mentioned frequently across authoritative sources during training develop stronger neural representations, making them more likely to be recalled. Approximately 22% of training data for major AI models comes from Wikipedia, explaining why Wikipedia dominance is so pronounced in ChatGPT citations.

For retrieved knowledge, LLMs use hybrid retrieval systems combining semantic search (dense vector embeddings) with keyword matching (BM25 algorithm) using Reciprocal Rank Fusion. This hybrid approach delivers 48% improvement over single-method retrieval. The process works as follows: (1) Query Encoding—user queries are converted to vector embeddings; (2) Hybrid Retrieval—semantic and keyword methods are combined; (3) Reranking—cross-encoder models evaluate query-document pairs jointly, improving NDCG@10 by 28%; and (4) Generation—top 5-10 retrieved chunks are injected into the LLM prompt as context. Content chunking strategy significantly impacts retrieval success: page-level chunking achieves 0.648 accuracy with the lowest variance, meaning content should be structured so individual paragraphs (200-500 words) can stand alone as citable units.
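The fusion step in the hybrid retrieval described above can be sketched in a few lines. Reciprocal Rank Fusion scores each document as the sum of 1 / (k + rank) over every ranked list it appears in. The constant k = 60 is a commonly used default and an assumption here, since the source does not say which value any platform uses; the document IDs and both rankings are made up for illustration.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings, k=60):
    """Fuse ranked lists of document IDs; score(d) = sum of 1 / (k + rank)."""
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties are broken by ID so the output is stable.
    return sorted(scores, key=lambda d: (-scores[d], d))


semantic = ["doc_a", "doc_b", "doc_c"]  # hypothetical dense-embedding ranking
keyword = ["doc_b", "doc_d", "doc_a"]   # hypothetical BM25 ranking

fused = reciprocal_rank_fusion([semantic, keyword])
print(fused)  # ['doc_b', 'doc_a', 'doc_d', 'doc_c']
```

Documents that rank well in both lists (`doc_b`, `doc_a`) rise to the top, which is why content that matches a query both semantically and by exact terms is favored under this scheme.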

Different LLM platforms use distinct architectures and citation patterns, requiring tailored optimization approaches. ChatGPT relies heavily on parametric knowledge from training data; when it does search the web, 87% of SearchGPT citations match Bing’s top 10 results. This means that Bing ranking and Wikipedia presence are critical for ChatGPT visibility. Perplexity uses real-time retrieval against 200+ billion indexed URLs, with Reddit dominating at 46.7% of citations, making authentic Reddit engagement essential. Google AI Overviews maintain the strongest correlation with traditional search rankings, citing at least one top-10 organic result in 93.67% of responses, meaning strong SEO performance directly supports AIO visibility. Claude uses a Brave Search backend with Constitutional AI preferences for trustworthy sources, requiring focus on trust signals and factual accuracy. Understanding these platform-specific patterns is critical because only 11% of domains are cited by both ChatGPT and Perplexity, indicating that cross-platform optimization requires distinct strategies.

Measuring LLMO effectiveness requires moving beyond traditional SEO metrics to focus on AI-specific visibility indicators. The primary KPIs include: (1) Brand mention frequency: how often your brand appears in LLM responses across platforms, tracked monthly; (2) Share of Voice: your percentage of mentions versus competitors, with top brands capturing approximately 15% and enterprise leaders reaching 25-30%; (3) Citation sentiment: whether mentions are positive, negative, or neutral, with a benchmark of more than 70% positive sentiment; (4) AI referral traffic: visitors from ChatGPT, Perplexity, and other LLMs, which convert 4.4x better than traditional organic traffic; (5) Topical authority expansion: the breadth of topics LLMs associate with your brand; and (6) Citation drift: month-to-month volatility in citations, where 40-60% variation is considered normal and reflects platform volatility rather than a change in your content.

Tools like Semrush AI Toolkit, Profound, Peec AI, and LLMrefs provide dashboards to track these metrics across multiple platforms. Enterprise-level tools like Profound track 240M+ ChatGPT citations with competitive benchmarking, while mid-market options like Peec AI (€89-€499/month) offer clean interfaces with prompt-level reporting. Budget options like Otterly.AI and Scrunch AI provide freemium tiers for smaller organizations. The key is establishing baseline metrics, setting monthly improvement targets, and iterating based on platform-specific performance data.
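To make the Share of Voice KPI concrete, here is a minimal sketch of how it could be computed from a batch of collected AI responses. It assumes a simple case-insensitive substring match and counts each brand at most once per response; commercial tools likely use more careful entity matching. The function name, brand names, and sample responses are all hypothetical.

```python
from collections import Counter


def share_of_voice(responses, brands):
    """Return each brand's percentage of all brand mentions across responses."""
    counts = Counter()
    for text in responses:
        lowered = text.lower()
        for brand in brands:
            # Count a brand at most once per response, however often it recurs.
            if brand.lower() in lowered:
                counts[brand] += 1
    total = sum(counts.values())
    return {b: (100.0 * counts[b] / total if total else 0.0) for b in brands}


# Made-up responses to the same prompt set, for illustration only.
responses = [
    "Acme and Globex are popular choices.",
    "Many teams start with Acme.",
    "Consider Globex or Initech.",
    "Acme is often recommended.",
]
sov = share_of_voice(responses, ["Acme", "Globex", "Initech"])
print(sov)
```

Running the same prompt set each month and comparing the resulting percentages gives a baseline against which improvement targets can be set.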

The LLMO landscape is evolving rapidly, with several emerging trends shaping the future of AI-driven brand visibility. First, LLM platforms are becoming increasingly specialized—ChatGPT focuses on conversational depth, Perplexity emphasizes real-time retrieval, and Google AI Overviews integrate with traditional search. This specialization means that one-size-fits-all LLMO strategies will become obsolete, requiring brands to develop platform-specific optimization approaches. Second, citation tracking and measurement are maturing—what was once intuition-based is becoming data-driven, with tools providing granular insights into citation patterns, sentiment, and competitive positioning. Third, brand-building activities are becoming directly measurable in AI visibility, as brand search volume emerges as the strongest predictor of LLM citations. This represents a fundamental shift: traditional PR and brand marketing are no longer disconnected from technical SEO—they directly impact AI visibility.

Fourth, content freshness and recency are becoming increasingly critical—65% of AI bot hits target content published within the past year, and 79% target content updated within 2 years. This means that static, evergreen content strategies may underperform in the LLMO era, requiring more frequent updates and continuous optimization. Fifth, entity optimization and semantic richness are replacing keyword density as the primary content optimization focus. Brands that build strong semantic footprints across multiple topics will capture more diverse citation opportunities. Finally, cross-platform presence is becoming non-negotiable—only 11% of domains are cited by both ChatGPT and Perplexity, meaning brands must optimize for multiple platforms simultaneously rather than focusing on a single channel.

The strategic implication is clear: LLMO is not a one-time optimization project but an ongoing discipline requiring continuous monitoring, platform-specific strategy refinement, and content iteration. Organizations that treat LLMO as a core marketing function—equivalent to SEO or paid search—will capture disproportionate share of voice in AI-generated responses. Those that treat it as a peripheral tactic will find themselves increasingly invisible in the AI-first discovery landscape. As LLM traffic channels are projected to drive as much business value as traditional search by 2027, the time to build LLMO competency is now, not later.

Traditional SEO focuses on optimizing content to rank higher in search engine results pages (SERPs) through keyword targeting, backlinks, and technical optimization. LLMO, by contrast, targets conversational AI systems and aims to get your brand mentioned and cited within AI-generated responses. While SEO is about driving clicks from search results, LLMO is about building brand awareness and authority within AI conversations. Both strategies are complementary—strong SEO performance often correlates with better LLMO visibility, but they require different optimization approaches. LLMO emphasizes information gain, entity optimization, and semantic richness over traditional keyword density.

The five pillars of LLMO are: (1) Information Gain: providing unique, original insights that LLMs haven't seen elsewhere; (2) Entity Optimization: helping AI systems recognize and connect your brand to relevant topics and concepts; (3) Structured and Semantic Content: organizing information with clear headings, lists, and logical flow for easy AI extraction; (4) Clarity and Attribution: writing concise, factual content with proper citations and sources; and (5) Authoritativeness and Mentions: building brand authority through mentions on high-authority platforms and consistent cross-web presence. Research on Generative Engine Optimization found that content with quotes, statistics, and links to credible sources is mentioned 30-40% more often than unoptimized content.

Brand search volume is the strongest predictor of LLM citations identified so far, with a correlation coefficient of 0.334, stronger than traditional backlinks. The correlation is moderate and does not by itself establish causation, but it indicates that brands people search for by name are more likely to appear in LLM responses. Frequent branded searches suggest that a brand is recognized and relevant, and AI systems tend to reflect that recognition. This can create a virtuous cycle: increased brand awareness leads to more branded searches, which is associated with greater LLM visibility, which further boosts brand awareness. Building brand search volume through digital PR, content marketing, and community engagement is therefore a critical LLMO strategy.

Different LLM platforms use distinct retrieval and ranking mechanisms. ChatGPT relies heavily on parametric knowledge from training data, with Wikipedia representing approximately 22% of major LLM training data and 47.9% of ChatGPT citations. Perplexity uses real-time retrieval against 200+ billion indexed URLs, with Reddit dominating at 46.7% of citations. Google AI Overviews maintain the strongest correlation with traditional search rankings, citing at least one top-10 organic result in 93.67% of responses. Claude uses a Brave Search backend with Constitutional AI preferences for trustworthy sources. Understanding these platform-specific patterns is essential: only 11% of domains are cited by both ChatGPT and Perplexity, meaning cross-platform optimization requires tailored strategies for each system.

E-E-A-T (Experience, Expertise, Authoritativeness, and Trustworthiness) is fundamental to LLMO success. While E-E-A-T is Google's framework for evaluating content quality, LLMs also recognize these signals through different mechanisms. Experience is demonstrated through firsthand insights and real-world examples. Expertise is shown through depth of knowledge and comprehensive coverage. Authoritativeness comes from consistent mentions on high-authority platforms and recognized entity status. Trustworthiness is built through accurate, well-sourced content and transparent authorship. LLMs evaluate E-E-A-T signals linguistically and contextually rather than through backlinks, meaning content that demonstrates genuine expertise and authority across multiple dimensions is more likely to be cited in AI responses.

LLMO success is measured through several key performance indicators: (1) Brand mention frequency—how often your brand appears in LLM responses across platforms; (2) Share of Voice—your percentage of mentions versus competitors in your industry; (3) Citation sentiment—whether mentions are positive, negative, or neutral; (4) AI referral traffic—visitors coming from ChatGPT, Perplexity, and other LLMs, which convert 4.4x better than traditional organic traffic; (5) Topical authority expansion—the breadth of topics LLMs associate with your brand; and (6) Citation drift—monthly volatility in citations (40-60% normal variation). Tools like Semrush AI Toolkit, Profound, and Peec AI provide dashboards to track these metrics across multiple platforms simultaneously.

Research analyzing 30+ million citations reveals that comparative listicles are the highest-performing format at 32.5% of all AI citations, followed by how-to guides, FAQ/Q&A formats, and product/service descriptions. Content that directly answers questions in the opening paragraph performs better than content that builds to answers gradually. Optimal paragraph length is 40-60 words for easy AI extraction and chunking. Sections with 100-150 words between headings show the highest citation rates. Content updated within the past 3 months is twice as likely to be cited as older content. Articles over 2,900 words are 59% more likely to be cited than those under 800 words. Including statistics increases visibility by 22%, while adding quotations boosts visibility by 37%.

While structured data (schema markup) doesn't directly influence LLM retrieval the way it does traditional search, it indirectly supports LLMO by improving how search engines and knowledge graphs understand your content. Well-implemented schema markup helps establish your entity in Google's Knowledge Graph, which many LLMs reference during training and retrieval. Priority schema types for LLMO include Organization, Person, Article/BlogPosting, HowTo, FAQPage, and Product schema. Comparison tables with proper HTML markup show 47% higher AI citation rates. FAQPage schema is more than twice as common in LLM-cited content as in traditional Google SERPs. The key is that schema clarifies content structure and entity relationships for the search engines and knowledge graphs that LLMs draw on, making your content more likely to be selected for citation in AI responses.

Start tracking how AI chatbots mention your brand across ChatGPT, Perplexity, and other platforms. Get actionable insights to improve your AI presence.

Learn what LLMO is, how it works, and why it matters for AI visibility. Discover optimization techniques to get your brand mentioned in ChatGPT, Perplexity, and...

Learn how to optimize your content for AI training data inclusion. Discover best practices for making your website discoverable by ChatGPT, Gemini, Perplexity, ...

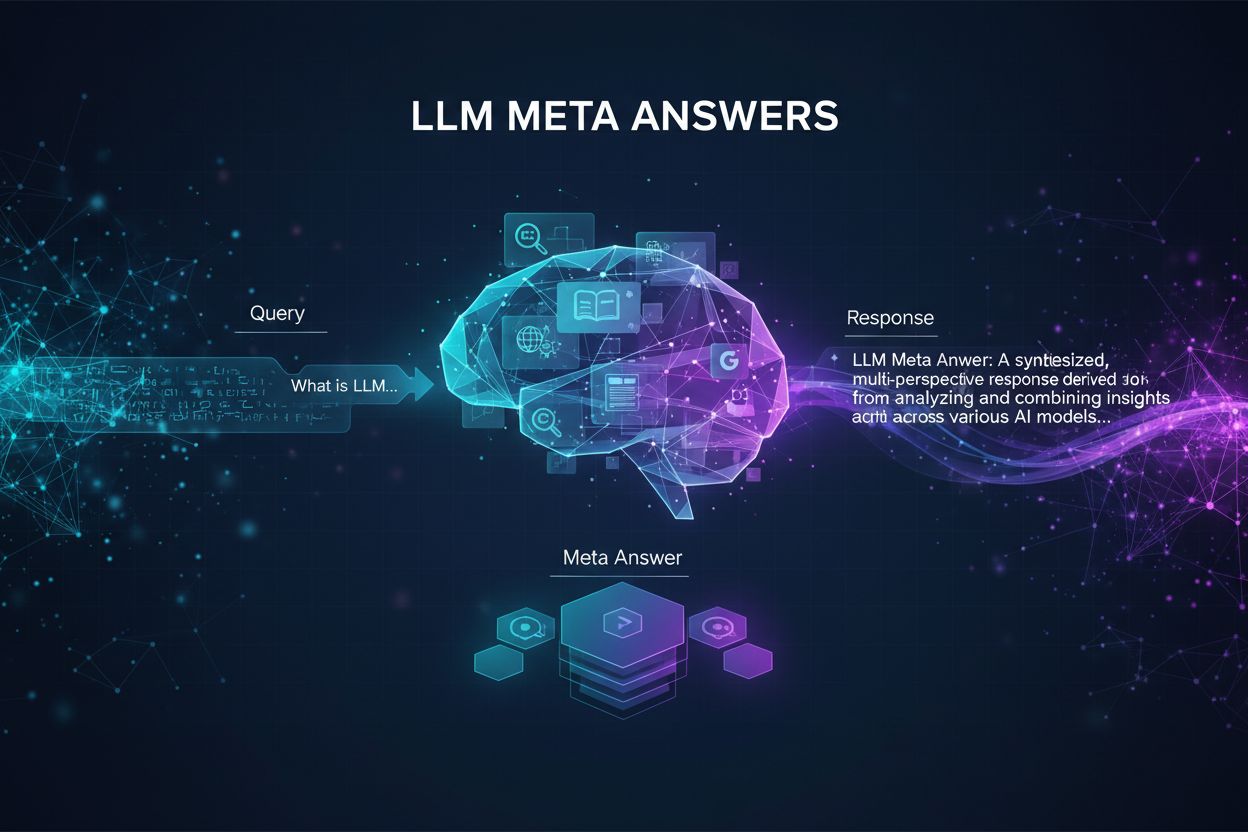

Learn what LLM Meta Answers are and how to optimize your content for visibility in AI-generated responses from ChatGPT, Perplexity, and Google AI Overviews. Dis...

Cookie Consent

We use cookies to enhance your browsing experience and analyze our traffic. See our privacy policy.