Meta AI Optimization: Facebook and Instagram's AI Assistant

Discover how Meta AI optimization transforms Facebook and Instagram advertising with AI-powered automation, real-time bidding, and intelligent audience targetin...

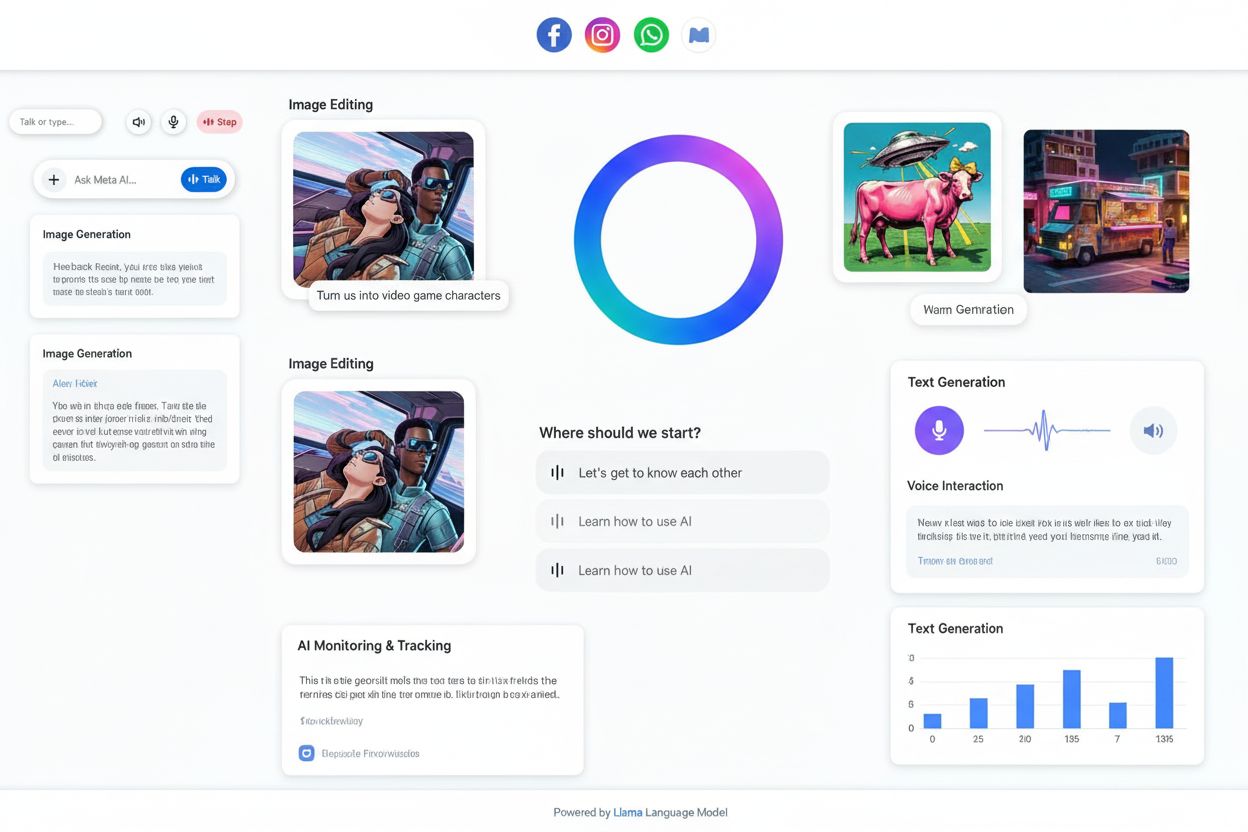

Meta AI is Meta’s multimodal artificial intelligence assistant integrated across Facebook, Instagram, WhatsApp, and Messenger, powered by the open-source Llama language model. It provides conversational assistance, text and image generation, voice interactions, and personalized recommendations to over 1 billion monthly active users globally.

Meta AI is Meta’s multimodal artificial intelligence assistant, integrated across the company’s social platforms and messaging applications. First introduced in September 2023 and expanded to more countries through 2024, it is designed as a personal assistant offering conversational help, content generation, image editing, and voice interactions. Unlike standalone AI chatbots, Meta AI is embedded directly into Facebook, Instagram, WhatsApp, and Messenger, making it accessible to over 1 billion monthly active users worldwide. The assistant is free to use, which reflects Meta’s strategy of broadening access to AI across its social ecosystem. Meta AI is powered by Llama, Meta’s open-source large language model family, which handles natural language understanding and generation across multiple languages and modalities.

Meta’s work in artificial intelligence extends back more than a decade, with the company’s AI research laboratory (formerly Facebook Artificial Intelligence Research, founded in 2013) contributing numerous advances in machine learning and computer vision. The arrival of Meta AI as a consumer-facing product, first introduced in September 2023, marked a pivotal moment in the company’s evolution, positioning AI not as a separate tool but as an integral component of how billions of people communicate and create content daily. This integration strategy differs from that of competitors like OpenAI and Google, which initially offered AI as standalone applications before gradually integrating it into existing platforms. Meta AI reached 1 billion monthly active users by May 2025, a milestone that underscores the power of distribution through existing social networks. The platform’s rapid growth reflects both the ubiquity of Meta’s platforms and the increasing normalization of AI assistance in everyday digital interactions. Meta’s investment in open-source AI through Llama has also positioned the company as a democratizing force in AI development, contrasting with more proprietary approaches taken by competitors.

Meta AI encompasses a comprehensive suite of capabilities that extend far beyond simple text-based conversation. The platform excels at text generation, enabling users to draft emails, write creative content, brainstorm ideas, and receive detailed explanations on complex topics. Image generation and editing represent another cornerstone feature, allowing users to create original images from text descriptions, edit existing photos by adding or removing elements, modify backgrounds, and even animate static images into short videos. The voice interaction capability enables natural, conversational exchanges where users can speak to Meta AI and receive spoken responses, with options to select from celebrity voices including John Cena, Kristen Bell, and Awkwafina. Personalization is deeply embedded into Meta AI’s architecture—the system learns user preferences, remembers personal details shared in conversations, and draws on engagement history across Meta platforms to provide contextually relevant responses. The search and web integration feature allows Meta AI to access current information and provide up-to-date recommendations, distinguishing it from earlier language models with knowledge cutoffs. Additionally, Meta AI Studio enables users and businesses to create custom AI chatbots without programming knowledge, extending the platform’s utility beyond Meta’s own assistant.

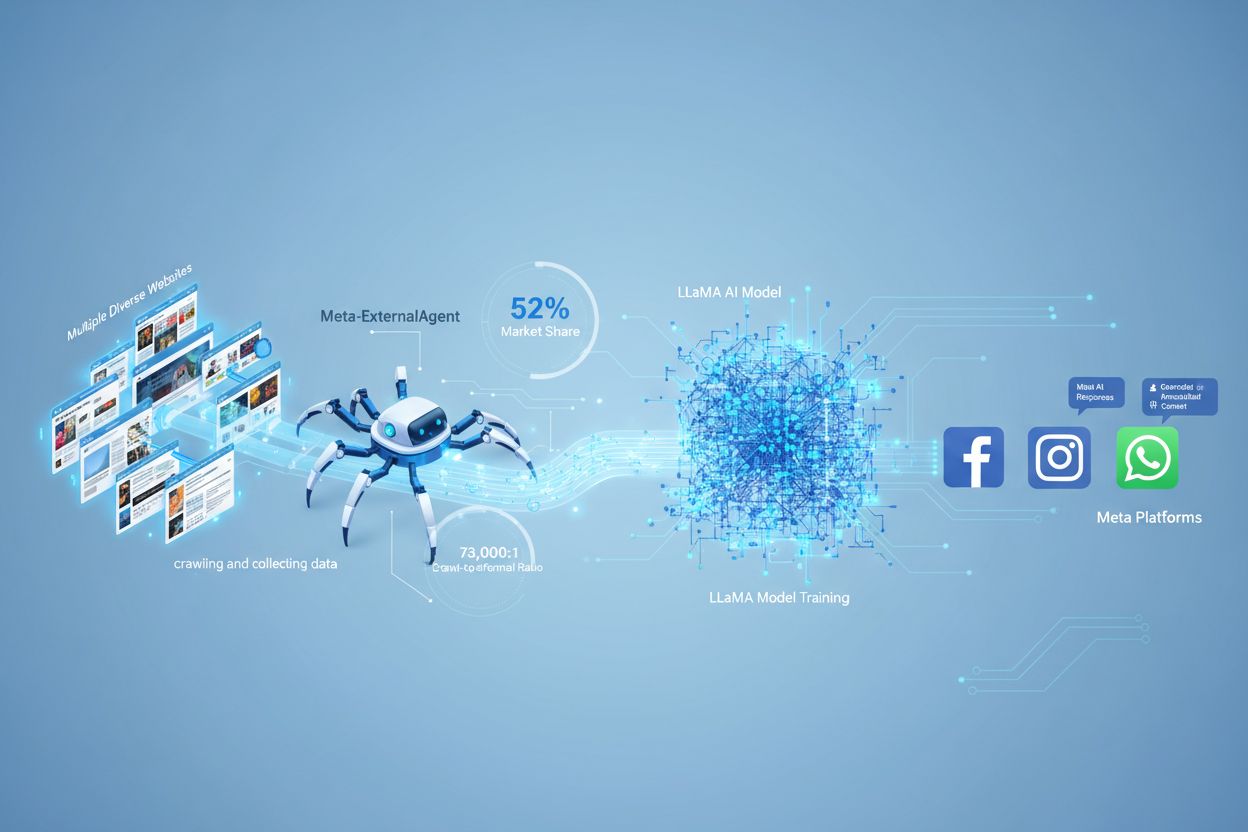

The technological backbone of Meta AI is Llama (Large Language Model Meta AI), Meta’s family of open-source large language models, which are among the most capable publicly available AI systems. Llama 4, the latest iteration released in 2025, introduces native multimodality, meaning the model is trained on text and images together rather than treating them as separate inputs. This design supports a more sophisticated understanding of visual content and more coherent generation of text-image combinations. Llama 4 Maverick, a mixture-of-experts variant with 17 billion active parameters and 128 experts, has been reported by Meta to perform comparably to or better than proprietary models like GPT-4o and Gemini 2.0 Flash on multimodal benchmarks. Instruction tuning helps Llama models follow user directives while maintaining safety guardrails. Meta’s decision to release Llama as open-source software has significant implications for the AI ecosystem, enabling researchers, developers, and organizations worldwide to build applications on top of these models. Llama 4 models also support very long context windows (up to 10 million tokens for Llama 4 Scout, according to Meta), allowing the AI to process much longer documents and conversations, which enhances its utility for complex tasks requiring sustained context.
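The difference between "active parameters" and the number of experts can be illustrated with a toy mixture-of-experts layer. The following is a minimal sketch in plain Python, not Meta's implementation: the class name `ToyMoELayer`, the tiny dimensions, the random weights, and the top-1 routing are all assumptions chosen for illustration.

```python
import math
import random


def softmax(scores):
    """Convert raw router scores into probabilities."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


class ToyMoELayer:
    """Hypothetical mixture-of-experts layer with top-k routing."""

    def __init__(self, num_experts, dim, top_k=1, seed=0):
        rng = random.Random(seed)
        self.dim = dim
        self.top_k = top_k
        # Router: one row of weights per expert, used to score each token.
        self.router = [[rng.gauss(0, 1) for _ in range(dim)]
                       for _ in range(num_experts)]
        # Each expert is a small dim x dim weight matrix.
        self.experts = [[[rng.gauss(0, 0.1) for _ in range(dim)]
                         for _ in range(dim)]
                        for _ in range(num_experts)]

    def forward(self, token):
        """Route one token vector through its top-k experts only."""
        probs = softmax(matvec(self.router, token))
        ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
        chosen = ranked[:self.top_k]
        norm = sum(probs[i] for i in chosen)
        output = [0.0] * self.dim
        for i in chosen:
            expert_out = matvec(self.experts[i], token)
            weight = probs[i] / norm
            output = [o + weight * e for o, e in zip(output, expert_out)]
        return output, chosen

    def parameter_counts(self):
        """Return (total, active-per-token) expert parameters, router excluded."""
        per_expert = self.dim * self.dim
        return len(self.experts) * per_expert, self.top_k * per_expert


layer = ToyMoELayer(num_experts=128, dim=8, top_k=1)
output, chosen = layer.forward([1.0] * 8)
total, active = layer.parameter_counts()
print(f"experts used for this token: {chosen}")
print(f"expert parameters: {total} total, {active} active per token")
```

In this toy setup only one of the 128 experts runs for each token, so the parameters used per token are a small fraction of the total. The same idea explains how a model can hold many experts while keeping its active parameter count, and therefore its per-token compute, comparatively low.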

Meta AI is not confined to a single application but rather woven throughout Meta’s entire product portfolio, creating a unified AI experience across multiple touchpoints. Within Facebook, users encounter Meta AI through search functions, content recommendations, and the ability to summon the assistant in comments and posts. On Instagram, Meta AI assists with caption suggestions, image editing, and direct messaging, helping creators optimize their content. WhatsApp integration enables users to invoke Meta AI in group chats and individual conversations by typing ‘@Meta AI’, providing assistance without leaving the messaging interface. Messenger offers similar functionality with dedicated Meta AI chat threads and contextual assistance. The Ray-Ban Meta smart glasses represent a frontier application, where users can activate Meta AI with voice commands like “Hey Meta, start live AI” to get real-time assistance based on what they see through the glasses’ cameras. The Meta AI app, launched as a standalone application in 2025, serves as a hub for all Meta AI interactions, featuring a Discover feed where users can explore and share AI-generated content and prompts. This multi-platform approach ensures that Meta AI is available wherever users already spend their time, reducing friction and increasing adoption compared to standalone AI applications.

| Feature | Meta AI | ChatGPT | Google Gemini | Claude |
|---|---|---|---|---|
| Primary Access Method | Integrated into Meta apps + standalone app | Web, mobile apps, API | Web, mobile apps, Google services | Web, mobile apps, API |
| Base Model | Llama 4 (open-source) | GPT-4o (proprietary) | Gemini 2.0 (proprietary) | Claude 3.5 (proprietary) |
| Multimodal Capabilities | Native text + image processing | Text + image + voice | Text + image + video | Text + image |
| Monthly Active Users | 1+ billion | 200+ million | 500+ million | 50+ million |
| Cost | Free | Free + Premium ($20/month) | Free + Premium ($20/month) | Free + Premium ($20/month) |
| Voice Interaction | Yes (multiple celebrity voices) | Yes (limited voices) | Yes (limited voices) | No |
| Image Generation | Yes (integrated) | Yes (DALL-E 3) | Yes (Imagen 3) | No |
| Geographic Availability | 60+ countries (not EU) | 180+ countries | 180+ countries | 180+ countries |
| Data Privacy Approach | Uses Meta platform data for personalization | Minimal data retention | Integrated with Google services | Privacy-focused |
| Open-Source Model | Yes (Llama available) | No | No | No |
| Real-Time Web Search | Yes | Yes (with Plus) | Yes | Yes |

The emergence of Meta AI as a dominant force in the AI landscape carries significant implications for businesses, marketers, and content creators. With over 1 billion monthly active users, Meta AI represents an enormous distribution channel for brand visibility and customer engagement. Companies must now consider how their content, products, and services appear in AI-generated responses within Meta’s ecosystem, similar to how they optimize for search engines and social media algorithms. The personalization engine powering Meta AI means that different users receive different responses based on their profiles and engagement history, creating opportunities for targeted AI-driven marketing but also raising questions about algorithmic bias and information fragmentation. For content creators, Meta AI’s image generation and editing capabilities democratize professional-grade content creation, enabling individuals to produce high-quality visual assets without specialized skills or expensive software. The integration of Meta AI into Ray-Ban smart glasses opens new frontiers for augmented reality applications, where AI assistance becomes contextually aware of the user’s physical environment. Businesses are beginning to recognize that AI monitoring and brand tracking across platforms like Meta AI is as critical as traditional search engine optimization, with platforms like AmICited enabling organizations to track where their brands appear in AI-generated responses.
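Tracking where a brand appears in AI-generated responses can be approximated with a simple tally. The sketch below assumes the responses have already been collected by hand as plain text; it does not call any Meta AI interface. The function names and the brand names ("Acme Running", "Globex", "Initech") are hypothetical placeholders.

```python
import re
from collections import Counter


def count_brand_mentions(responses, brands):
    """Count how many saved responses mention each brand at least once."""
    counts = Counter({brand: 0 for brand in brands})
    for text in responses:
        for brand in brands:
            # Whole-word, case-insensitive match on the brand name.
            pattern = r"\b" + re.escape(brand) + r"\b"
            if re.search(pattern, text, flags=re.IGNORECASE):
                counts[brand] += 1
    return counts


def share_of_responses(counts, total_responses):
    """Fraction of responses that mention each brand."""
    if total_responses == 0:
        return {brand: 0.0 for brand in counts}
    return {brand: n / total_responses for brand, n in counts.items()}


# Hypothetical responses copied from an assistant for the same query.
saved_responses = [
    "For trail shoes, Acme Running and Globex are popular choices.",
    "Many runners like acme running shoes for long distances.",
    "Consider visiting a local store to try several pairs.",
]
brands = ["Acme Running", "Globex", "Initech"]

counts = count_brand_mentions(saved_responses, brands)
for brand, share in share_of_responses(counts, len(saved_responses)).items():
    print(f"{brand}: mentioned in {share:.0%} of responses")
```

Because responses are personalized, a tally like this is only meaningful when the same queries are repeated across several accounts and dates. A dedicated monitoring platform would handle that sampling systematically.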

For users seeking to maximize the value of Meta AI, several best practices emerge from early adoption patterns. Prompt engineering—crafting specific, detailed requests—yields significantly better results than vague queries, whether for text generation, image creation, or problem-solving. Users can enhance personalization by explicitly telling Meta AI about their preferences, interests, and constraints, allowing the system to tailor responses more precisely. For image generation, providing detailed descriptions of style, mood, lighting, and composition produces higher-quality outputs than generic prompts. Leveraging Meta AI’s integration across platforms means users can start a conversation on one platform and continue it on another, creating seamless workflows. Businesses implementing Meta AI Studio to create custom chatbots should focus on clear personality definition, accurate knowledge bases, and regular testing to ensure the AI represents their brand appropriately. For brand monitoring, organizations should regularly check how Meta AI responds to queries related to their industry, competitors, and products, using tools like AmICited to systematically track visibility. Privacy-conscious users should review their Accounts Center settings to understand what data Meta AI can access and adjust permissions accordingly, though complete data isolation is not possible while using Meta’s services.
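The advice on image prompts can be made concrete with a small helper that assembles a detailed description from its parts. This is a minimal sketch: the `ImagePrompt` class and its field names are hypothetical, and the resulting text is simply something a user could paste into the assistant, not a call to any Meta interface.

```python
from dataclasses import dataclass


@dataclass
class ImagePrompt:
    """Hypothetical container for the details a good image prompt includes."""
    subject: str
    style: str = ""
    mood: str = ""
    lighting: str = ""
    composition: str = ""

    def render(self) -> str:
        """Join the subject with every detail that was actually provided."""
        parts = [self.subject]
        details = (
            ("style", self.style),
            ("mood", self.mood),
            ("lighting", self.lighting),
            ("composition", self.composition),
        )
        for label, value in details:
            if value:
                parts.append(f"{label}: {value}")
        return ", ".join(parts)


vague = ImagePrompt("a lighthouse")
detailed = ImagePrompt(
    subject="a lighthouse on a rocky cliff",
    style="watercolor",
    mood="calm",
    lighting="golden hour",
    composition="wide shot with the sea in the foreground",
)

print(vague.render())
print(detailed.render())
```

The second prompt spells out the choices the first leaves to chance, which is the practical difference between a generic request and a specific one.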

While Meta AI offers impressive capabilities, important limitations and platform-specific considerations warrant attention. The system is not available in the European Union due to regulatory requirements under the EU AI Act, which mandates detailed transparency about training data and model capabilities—requirements Meta has been reluctant to meet given its history with data privacy concerns. Hallucinations, where the AI generates plausible-sounding but factually incorrect information, remain a challenge, particularly for queries requiring current information or specialized expertise. The integration with Meta’s data ecosystem means that Meta AI’s responses are influenced by user data across all Meta platforms, raising privacy concerns for users who prefer data minimization. Image generation quality varies based on prompt specificity and complexity, with some requests producing inconsistent or lower-quality results compared to specialized image generation tools like Midjourney or DALL-E. The voice interaction feature currently supports only a limited set of languages and accents, though this is expanding. For businesses, the lack of API access to Meta AI (unlike ChatGPT or Claude) limits integration possibilities, though Meta is gradually expanding developer access. The Discover feed feature, while innovative, raises questions about content moderation and the potential spread of AI-generated misinformation if not properly managed.

The trajectory of Meta AI suggests several important developments on the horizon. Meta has publicly stated its ambition to make Meta AI the world’s most widely used AI assistant by the end of 2025, a goal that appears increasingly achievable given current adoption rates. The expansion of Ray-Ban smart glasses integration represents a significant frontier, with Meta investing heavily in augmented reality and wearable AI, positioning Meta AI as a contextually aware assistant that understands the user’s physical environment. Llama model improvements will continue, with Meta committing to releasing increasingly capable open-source models that rival or exceed proprietary alternatives, potentially reshaping the competitive landscape. The integration with Meta’s metaverse ambitions suggests that Meta AI will play a central role in virtual and augmented reality experiences, serving as an intelligent guide through immersive digital environments. Regulatory evolution will likely force Meta to enhance transparency around data usage and model training, particularly as the EU AI Act’s requirements become clearer and other jurisdictions implement similar regulations. The competitive pressure from other tech giants integrating AI into their platforms means Meta must continuously innovate to maintain its advantage, particularly in areas like real-time information access and specialized domain expertise. For organizations monitoring brand visibility, the importance of AI monitoring platforms like AmICited will only increase as Meta AI becomes a primary channel through which consumers discover information about products and services.

Meta AI represents a fundamental shift in how artificial intelligence is integrated into everyday digital experiences, moving beyond standalone applications to become an invisible layer within the platforms billions of people already use. The platform’s rapid achievement of 1 billion monthly active users demonstrates the power of distribution through existing networks and the growing acceptance of AI assistance in social contexts. For organizations and individuals, understanding Meta AI is increasingly essential—not merely as a tool to use, but as a critical channel for brand visibility, customer engagement, and information discovery. The platform’s foundation in open-source Llama models signals Meta’s commitment to democratizing AI technology, contrasting with more proprietary approaches and potentially reshaping the competitive landscape. As Meta AI continues to evolve, particularly through integration with augmented reality glasses and metaverse experiences, its influence on how people interact with information and each other will only deepen. For businesses, the emergence of AI monitoring as a distinct discipline reflects the reality that brand visibility in AI-generated responses is now as important as search engine rankings. The intersection of Meta AI with platforms like AmICited that track AI citations and mentions creates new opportunities for understanding and optimizing brand presence in the AI-driven information ecosystem that is rapidly becoming the primary way people discover and evaluate products, services, and information.

Meta AI is deeply integrated into Meta's social platforms (Facebook, Instagram, WhatsApp, Messenger) and focuses on personalized, contextual assistance within those ecosystems, while ChatGPT is a standalone conversational AI accessible via web and apps. Meta AI uses the open-source Llama model and emphasizes social integration and content creation, whereas ChatGPT prioritizes general-purpose conversation and research capabilities. Meta AI is free and available to over 1 billion users, while ChatGPT offers both free and premium tiers with different feature sets.

Meta AI draws on information you've shared across Meta platforms, including your profile details, content preferences, and engagement history, to personalize responses and recommendations. The system can also remember details you explicitly tell it about yourself to provide more relevant assistance. Meta's privacy policy allows users to control data usage through their Accounts Center, though complete opt-out options are limited in some regions, particularly the EU where stricter AI regulations apply.

Yes, Meta AI can generate, edit, and animate images and videos based on text prompts. Users can create images from descriptions, edit existing images by adding or removing elements, change backgrounds, and convert generated images into short videos. The image generation feature is powered by Meta's advanced multimodal capabilities and is available across the Meta AI app, web interface, and integrated into social platforms.

Llama (Large Language Model Meta AI) is Meta's open-source family of large language models that form the foundation of Meta AI. The latest version, Llama 4, features native multimodality, allowing it to process both text and images simultaneously. Llama models are trained on vast amounts of data to understand and generate human-like text, answer questions, and hold conversations. Meta makes Llama available to developers and researchers, democratizing access to advanced AI technology.

Meta AI is available in over 60 countries and supports 8+ languages including English, French, German, Hindi, Italian, Portuguese, and Spanish. However, it is not currently available in the European Union due to regulatory requirements under the EU AI Act. The service is widely available in North America, South America, Africa, Asia, and Oceania, with Meta continuously expanding geographic availability.

Meta AI is accessible through multiple channels: integrated into Facebook, Instagram, WhatsApp, and Messenger apps; via the standalone Meta AI app for iOS and Android; through the web at meta.ai; and on Ray-Ban Meta smart glasses with voice commands. You can summon Meta AI in chats by typing '@' followed by 'Meta AI,' or access it through dedicated search and chat interfaces within each platform.

Meta AI is increasingly important for brand monitoring as it appears in AI-generated responses across Meta's ecosystem. Platforms like AmICited track where brands and domains are mentioned in AI responses, and Meta AI represents a significant channel for such visibility. Understanding Meta AI's integration helps brands optimize their presence in AI-driven search and recommendation systems, similar to monitoring appearances in ChatGPT, Perplexity, and Google AI Overviews.

You cannot completely disable Meta AI across Meta's platforms, but you can mute notifications from the assistant on Instagram, WhatsApp, and Messenger. Muting options allow you to silence Meta AI messages for specific durations. For more granular control, users can adjust privacy settings through their Accounts Center to limit data sharing and personalization, though this may reduce the quality of personalized recommendations.
