Multimodal AI Search: Optimizing for Image and Voice Queries

Master multimodal AI search optimization. Learn how to optimize images and voice queries for AI-powered search results, featuring strategies for GPT-4o, Gemini,...

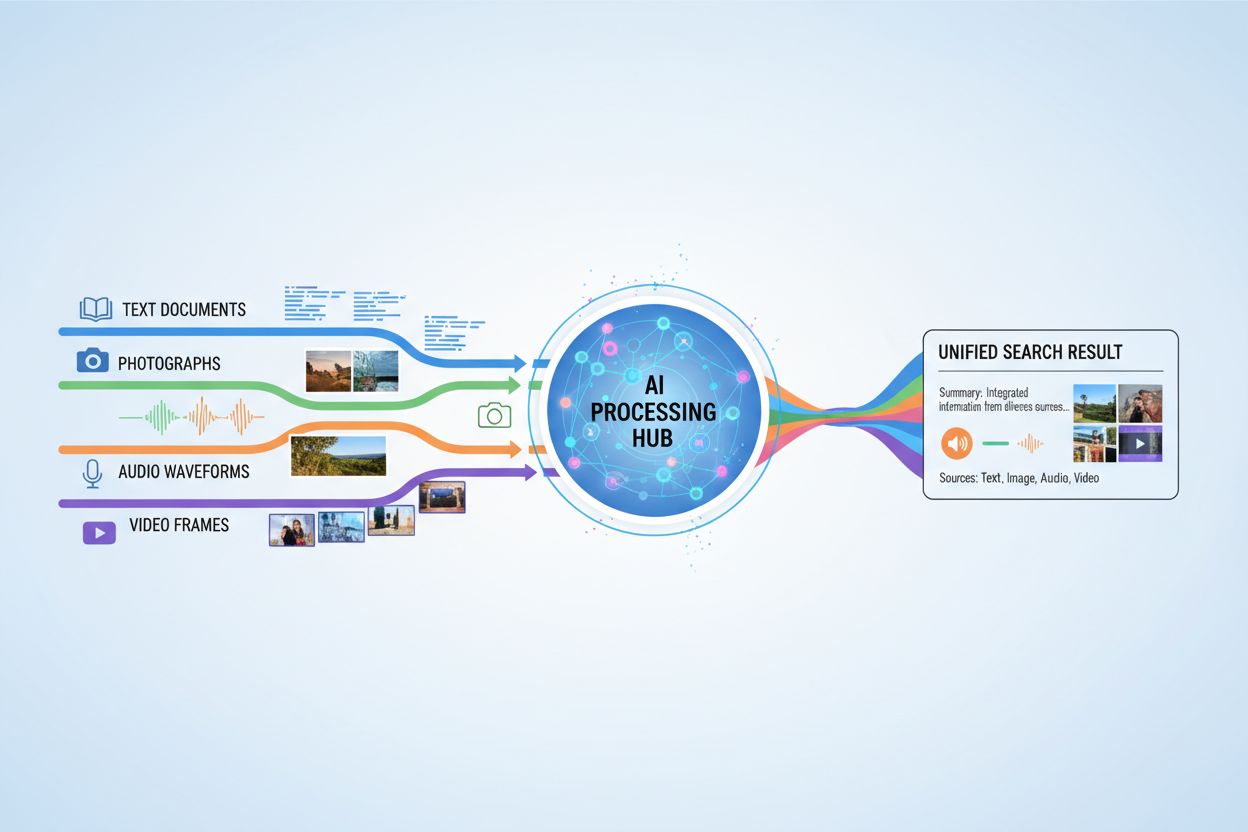

AI systems that process and respond to queries involving text, images, audio, and video simultaneously, enabling more comprehensive understanding and context-aware responses across multiple data types.

Multimodal AI search refers to artificial intelligence systems that process and integrate information from multiple data types or modalities—such as text, images, audio, and video—simultaneously to deliver more comprehensive and contextually relevant results. Unlike unimodal AI, which relies on a single type of input (for example, text-only search engines), multimodal systems leverage the complementary strengths of different data formats to achieve deeper understanding and more accurate outcomes. This approach mirrors human cognition, where we naturally combine visual, auditory, and textual information to comprehend our environment. By processing diverse input types together, multimodal AI search systems can capture nuances and relationships that would be invisible to single-modality approaches.

Multimodal AI search operates through sophisticated fusion techniques that combine information from different modalities at various processing stages. The system first extracts features from each modality independently, then strategically merges these representations to create a unified understanding. The timing and method of fusion significantly impact performance, as illustrated in the following comparison:

| Fusion Type | When Applied | Advantages | Disadvantages |
|---|---|---|---|
| Early Fusion | Input stage | Captures low-level correlations | Less robust with misaligned data |
| Mid Fusion | Intermediate processing stages | Balanced approach | More complex |
| Late Fusion | Output level | Modular design | Reduced context cohesiveness |

Early fusion combines raw data immediately, capturing fine-grained interactions but struggling with misaligned inputs. Mid-fusion applies fusion during intermediate processing stages, offering a balanced compromise between complexity and performance. Late fusion operates at the output level, allowing independent modality processing but potentially losing important cross-modal context. The choice of fusion strategy depends on the specific application requirements and the nature of the data being processed.
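The contrast between early and late fusion can be made concrete with a toy example. This is only a minimal sketch: it assumes each modality has already been reduced to a small feature vector, and it stands in for a trained model with a hypothetical linear scorer (`linear_score`) whose weights are made up for illustration.

```python
from typing import Sequence


def linear_score(features: Sequence[float], weights: Sequence[float]) -> float:
    """Toy stand-in for a trained model: a plain dot product."""
    if len(features) != len(weights):
        raise ValueError("features and weights must have the same length")
    return sum(f * w for f, w in zip(features, weights))


def early_fusion(text_feat: Sequence[float], image_feat: Sequence[float],
                 joint_weights: Sequence[float]) -> float:
    """Concatenate the modality features first, then score them with one joint model."""
    fused = list(text_feat) + list(image_feat)
    return linear_score(fused, joint_weights)


def late_fusion(text_feat: Sequence[float], image_feat: Sequence[float],
                text_weights: Sequence[float], image_weights: Sequence[float],
                mix: float = 0.5) -> float:
    """Score each modality independently, then blend the two outputs."""
    text_score = linear_score(text_feat, text_weights)
    image_score = linear_score(image_feat, image_weights)
    return mix * text_score + (1.0 - mix) * image_score


# Hypothetical feature vectors and weights, chosen only for illustration.
text_feat = [1.0, 0.0]
image_feat = [0.5, 2.0]

early = early_fusion(text_feat, image_feat, joint_weights=[0.2, 0.1, 0.4, 0.3])
late = late_fusion(text_feat, image_feat,
                   text_weights=[1.0, 1.0], image_weights=[0.0, 0.5])
```

In the early variant a single model sees both modalities at once, so it can in principle learn interactions between them. In the late variant each scorer can be swapped out on its own, which is the modularity the table refers to. Mid fusion would sit between the two by merging intermediate representations rather than raw inputs or final scores.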

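Several key technologies power modern multimodal AI search systems and enable them to process and integrate diverse data types. These include embedding models, which convert each modality into numerical representations that capture semantic meaning, and vector databases, which store those embeddings in a shared space where items of different types can be compared.

Together, these technologies allow a system to model complex relationships between different types of information.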

Multimodal AI search has transformative applications across numerous industries and domains. In healthcare, systems analyze medical images alongside patient records and clinical notes to improve diagnostic accuracy and treatment recommendations. E-commerce platforms use multimodal search to enable customers to find products by combining text descriptions with visual references or even sketches. Autonomous vehicles rely on multimodal fusion of camera feeds, radar data, and sensor inputs to navigate safely and make real-time decisions. Content moderation systems combine image recognition, text analysis, and audio processing to identify harmful content more effectively than single-modality approaches. Additionally, multimodal search enhances accessibility by allowing users to search using their preferred input method—voice, image, or text—while the system understands the intent across all formats.

Multimodal AI search delivers substantial benefits that justify its increased complexity and computational requirements. Improved accuracy results from leveraging complementary information sources, reducing errors that single-modality systems might make. Enhanced contextual understanding emerges when visual, textual, and auditory information combine to provide richer semantic meaning. Superior user experience is achieved through more intuitive search interfaces that accept diverse input types and deliver more relevant results. Cross-domain learning becomes possible as knowledge from one modality can inform understanding in another, enabling transfer learning across different data types. Increased robustness means the system maintains performance even when one modality is degraded or unavailable, as other modalities can compensate for missing information.
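The robustness point can be illustrated with a small sketch. It assumes a late-fusion setup in which each modality produces its own relevance score, and it uses a hypothetical helper, `fuse_available`, that renormalizes the weights over whichever modalities are present. The modality names, scores, and weights are invented for the example.

```python
from typing import Dict, Optional


def fuse_available(scores: Dict[str, Optional[float]],
                   weights: Dict[str, float]) -> float:
    """Weighted average over the modalities that actually produced a score.

    A score of None marks a modality as degraded or unavailable; its weight
    is dropped and the remaining weights are renormalized.
    """
    available = {m: s for m, s in scores.items() if s is not None}
    if not available:
        raise ValueError("no modality produced a score")
    total_weight = sum(weights[m] for m in available)
    if total_weight <= 0:
        raise ValueError("available modalities must have positive total weight")
    return sum(weights[m] * s for m, s in available.items()) / total_weight


# Hypothetical per-modality weights and relevance scores.
weights = {"text": 0.5, "image": 0.3, "audio": 0.2}

full = fuse_available({"text": 0.8, "image": 0.6, "audio": 0.4}, weights)
degraded = fuse_available({"text": 0.8, "image": 0.6, "audio": None}, weights)
```

When the audio score is missing, the result is still a valid weighted average of text and image, so the system keeps producing a ranking instead of failing outright.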

Despite its advantages, multimodal AI search faces significant technical and practical challenges. Data alignment and synchronization remain difficult, because different modalities often have different temporal characteristics and quality levels that must be carefully managed. Computational complexity increases substantially when multiple data streams are processed simultaneously, which demands significant compute resources and specialized hardware. Bias and fairness concerns emerge when training data contains imbalances across modalities or when certain groups are underrepresented in specific data types. Privacy and security become more complex with multiple data streams, which enlarge the attack surface for potential breaches and require careful handling of sensitive information. Finally, training effective multimodal systems demands substantially larger and more diverse datasets than unimodal alternatives, and these can be expensive and time-consuming to acquire and annotate.
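As one illustration of the alignment problem, the sketch below pairs video frames with audio feature windows that were sampled at a different rate. It is a simplified nearest-timestamp approach under assumed conditions (both streams share a clock and the audio timestamps are sorted); the function names and sample timestamps are hypothetical.

```python
from bisect import bisect_left
from typing import List, Sequence


def nearest_index(sorted_times: Sequence[float], t: float) -> int:
    """Index of the timestamp in sorted_times closest to t (ties go to the earlier one)."""
    if not sorted_times:
        raise ValueError("sorted_times must not be empty")
    pos = bisect_left(sorted_times, t)
    if pos == 0:
        return 0
    if pos == len(sorted_times):
        return len(sorted_times) - 1
    before, after = sorted_times[pos - 1], sorted_times[pos]
    return pos - 1 if (t - before) <= (after - t) else pos


def align_audio_to_frames(frame_times: Sequence[float],
                          audio_times: Sequence[float]) -> List[int]:
    """For each video frame, pick the index of the nearest audio window."""
    return [nearest_index(audio_times, t) for t in frame_times]


# Hypothetical timestamps in seconds: video at 2 frames per second,
# audio features every 0.25 seconds.
frame_times = [0.0, 0.5, 1.0]
audio_times = [0.0, 0.25, 0.5, 0.75, 1.0]

pairs = align_audio_to_frames(frame_times, audio_times)
```

Real pipelines usually also have to handle clock drift, dropped samples, and differing quality between streams, none of which this sketch attempts.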

Multimodal AI search is closely tied to AI monitoring and citation tracking, particularly as AI systems increasingly generate answers that reference or synthesize information from multiple sources. Platforms like AmICited.com focus on monitoring how AI systems cite and attribute information to original sources, supporting transparency and accountability in AI-generated responses. Similarly, FlowHunt.io tracks AI content generation and helps organizations understand how their branded content is being processed and referenced by multimodal AI systems. As multimodal AI search becomes more prevalent, tracking how these systems cite brands, products, and original sources becomes crucial for businesses that want to understand their visibility in AI-generated results. This monitoring helps organizations verify that their content is accurately represented and properly attributed when multimodal AI systems synthesize information across text, images, and other modalities.

The future of multimodal AI search points toward increasingly unified integration of diverse data types, moving beyond current fusion approaches toward more holistic models that treat all modalities as inherently interconnected. Real-time processing capabilities will expand, enabling multimodal search to operate on live video streams, continuous audio, and dynamic text simultaneously with minimal latency. Advanced data augmentation techniques will address current data scarcity by synthetically generating multimodal training examples that remain semantically consistent across modalities. Emerging developments include foundation models trained on vast multimodal datasets that can be efficiently adapted to specific tasks, neuromorphic computing approaches that more closely mimic biological multimodal processing, and federated multimodal learning that enables training across distributed data sources while preserving privacy. These advances should make multimodal AI search more accessible, more efficient, and better able to handle complex real-world scenarios.

Unimodal AI systems process only one type of data input, such as text-only search engines. Multimodal AI systems, by contrast, process and integrate multiple data types—text, images, audio, and video—simultaneously, enabling deeper understanding and more accurate results by leveraging the complementary strengths of different data formats.

Multimodal AI search improves accuracy by combining complementary information sources that capture nuances and relationships invisible to single-modality approaches. When visual, textual, and auditory information combine, the system achieves richer semantic understanding and can make more informed decisions based on multiple perspectives of the same information.

Key challenges include data alignment and synchronization across different modalities, substantial computational complexity, bias and fairness concerns when training data is imbalanced, privacy and security issues with multiple data streams, and massive data requirements for effective training. Each modality has different temporal characteristics and quality levels that must be carefully managed.

Healthcare benefits from analyzing medical images with patient records and clinical notes. E-commerce uses multimodal search for visual product discovery. Autonomous vehicles rely on multimodal fusion of cameras, radar, and sensors. Content moderation combines image, text, and audio analysis. Customer service systems leverage multiple input types for better support, and accessibility applications allow users to search using their preferred input method.

Embedding models convert different modalities into numerical representations that capture semantic meaning. Vector databases store these embeddings in a shared mathematical space where relationships between different data types can be measured and compared. This allows the system to find connections between text, images, audio, and video by comparing their positions in this common semantic space.
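The shared-space idea can be sketched with cosine similarity over a tiny in-memory index. This is an illustration only: the three-dimensional vectors are hand-written stand-ins for what an embedding model would produce, the item names are hypothetical, and a plain dictionary takes the place of a real vector database.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either vector is all zeros."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return sum(x * y for x, y in zip(a, b)) / (norm_a * norm_b)


def search(query_vec: Sequence[float], index: Dict[str, Sequence[float]],
           top_k: int = 2) -> List[Tuple[str, float]]:
    """Rank every indexed item by similarity to the query and keep the best top_k."""
    scored = [(item_id, cosine_similarity(query_vec, vec))
              for item_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Hypothetical embeddings for items of different modalities in one shared space.
index = {
    "photo_of_red_sneakers.jpg": [0.9, 0.1, 0.0],
    "product_text_red_sneakers": [0.8, 0.2, 0.1],
    "podcast_clip_on_gardening.mp3": [0.0, 0.1, 0.9],
}

# Hypothetical embedding of a text or voice query about red sneakers.
query = [1.0, 0.0, 0.0]
results = search(query, index, top_k=2)
```

Because the image and the product text sit close together in the space, both rank above the unrelated audio clip for the same query, regardless of which modality each item came from. A production system would typically replace the linear scan with an approximate nearest-neighbor index.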

Multimodal AI systems handle multiple sensitive data types—recorded conversations, facial recognition data, written communication, and medical images—which increases privacy risks. The combination of different modalities creates more opportunities for data breaches and requires strict compliance with regulations like GDPR and CCPA. Organizations must implement robust security measures to protect user identity and sensitive information across all modalities.

Platforms like AmICited.com monitor how AI systems cite and attribute information to original sources, ensuring transparency in AI-generated responses. Organizations can track their visibility in multimodal AI search results, verify that their content is accurately represented, and confirm proper attribution when AI systems synthesize information across text, images, and other modalities.

The future includes unified models that process all modalities as inherently interconnected, real-time processing of live video and audio streams, advanced data augmentation techniques to address data scarcity, foundation models trained on vast multimodal datasets, neuromorphic computing approaches mimicking biological processing, and federated learning that preserves privacy while training across distributed sources.

