Quality Rater Guidelines

Learn about Google's Quality Rater Guidelines, the evaluation framework used by 16,000+ raters to assess search quality, E-E-A-T signals, and how they influence...

Needs Met Rating is Google’s evaluation metric that assesses how well search results satisfy a user’s query intent and information needs. It uses a five-level scale ranging from ‘Fully Meets’ to ‘Fails to Meet’ to measure the helpfulness and relevance of search results for mobile users.


Needs Met Rating is Google’s standardized evaluation metric that measures how effectively search results satisfy a user’s query intent and information requirements. Introduced in Google’s Search Quality Evaluator Guidelines, this rating system uses a five-level scale to assess the helpfulness and relevance of search results specifically for mobile users. The metric represents a fundamental shift in how search quality is evaluated—moving beyond simple keyword matching to focus on whether results genuinely fulfill what users are actually seeking. In the context of modern search and AI monitoring, Needs Met Rating has become increasingly important as search engines and AI platforms compete to deliver the most satisfying user experiences. The rating system acknowledges that user satisfaction depends not only on content relevance but also on factors like page quality, freshness, mobile usability, and the comprehensiveness of information provided.

Google’s Needs Met Rating system comprises five distinct levels, each representing a different degree of user satisfaction and query fulfillment. Understanding these levels is essential for content creators, SEO professionals, and anyone involved in search quality evaluation. The Fully Meets (FullyM) rating represents the highest satisfaction level, applying only to specific queries where almost all users would be immediately and completely satisfied by the result without needing to view other search results. Examples include branded queries like “Amazon” or “Apple iPhone,” where users have a clear intent to reach a specific website. The Highly Meets (HM) rating indicates results that are very helpful for many or most users, though some may still wish to see additional information. These results demonstrate strong relevance, reliability, and often include up-to-date information that directly addresses the user’s primary need.

The Moderately Meets (MM) rating applies to results that are helpful for many users or very helpful for some users, yet many or some users may still desire additional information. This level often applies to broad queries where different users may have varying information needs, or where the content is relevant but not comprehensive. The Slightly Meets (SM) rating describes results that are helpful for fewer users, with a weak or unsatisfying connection between the query and result. Many or most users would wish to see additional results when encountering Slightly Meets content. Finally, the Fails to Meet (FailsM) rating represents results that completely fail to satisfy user needs, where all or almost all users would seek additional information. This category includes irrelevant results, factually incorrect information, pages that don’t load, and content that is offensive or inappropriate.
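As a rough illustration, the five levels can be modeled as an ordered scale. The sketch below is an assumption made for clarity, not Google's internal representation: the numeric values and the helper name `users_want_more_results` are hypothetical, while the level names, abbreviations, and "who still wants more results" wording come from the descriptions above.

```python
from enum import IntEnum


class NeedsMet(IntEnum):
    """The five Needs Met levels, ordered from least to most satisfying.

    The integer values are an assumption used only to make levels comparable.
    """
    FAILS_TO_MEET = 0
    SLIGHTLY_MEETS = 1
    MODERATELY_MEETS = 2
    HIGHLY_MEETS = 3
    FULLY_MEETS = 4


# Abbreviations as used in the text above.
ABBREVIATIONS = {
    NeedsMet.FULLY_MEETS: "FullyM",
    NeedsMet.HIGHLY_MEETS: "HM",
    NeedsMet.MODERATELY_MEETS: "MM",
    NeedsMet.SLIGHTLY_MEETS: "SM",
    NeedsMet.FAILS_TO_MEET: "FailsM",
}


def users_want_more_results(rating: NeedsMet) -> str:
    """Return the share of users who would still want to see other results."""
    return {
        NeedsMet.FULLY_MEETS: "almost none",
        NeedsMet.HIGHLY_MEETS: "some",
        NeedsMet.MODERATELY_MEETS: "many or some",
        NeedsMet.SLIGHTLY_MEETS: "many or most",
        NeedsMet.FAILS_TO_MEET: "all or almost all",
    }[rating]
```

Using an ordered enum makes comparisons such as `NeedsMet.HIGHLY_MEETS > NeedsMet.SLIGHTLY_MEETS` read naturally, which is convenient when sorting or averaging judgments.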

The Needs Met Rating system emerged from Google’s recognition that traditional search quality metrics were insufficient for evaluating modern search results. Google’s rater guidelines were used internally for years before the company published the full Search Quality Evaluator Guidelines in 2015, and early evaluation focused primarily on relevance and authority. As search behavior evolved and mobile usage exploded, it became clear that relevance alone didn’t guarantee user satisfaction. In October 2020, Google released a significantly updated version of its Search Quality Evaluator Guidelines with multiple enhancements to the Needs Met category, reflecting the platform’s intensified focus on mobile user experience. This update emphasized that Needs Met evaluation should specifically consider mobile user needs and how helpful and satisfying results are for mobile users. The evolution of this metric reflects a broader industry trend: industry estimates put the share of search queries that originate from mobile devices at over 60%, which makes mobile-first evaluation critical.

The development of Needs Met Rating also coincided with Google’s shift toward understanding user intent more deeply. Rather than simply matching keywords, Google began investing heavily in natural language processing and semantic understanding to determine what users actually wanted when they performed searches. This philosophical shift transformed how search quality is measured and evaluated. The metric has become increasingly relevant in the context of AI search platforms like Perplexity, ChatGPT, and Google AI Overviews, which must also evaluate whether their generated responses satisfy user queries effectively. For organizations monitoring their brand presence across these platforms, understanding Needs Met Rating principles helps assess how well AI systems cite and reference their content when answering user queries.

| Metric | Focus Area | Evaluation Basis | Scale/Levels | Primary Use |
|---|---|---|---|---|
| Needs Met Rating | Query-result satisfaction | User intent fulfillment | 5 levels (Fully Meets to Fails to Meet) | Assesses how well results answer specific queries |
| Page Quality Rating | Overall page excellence | Page purpose achievement | 9 levels (Lowest to Highest) | Evaluates standalone page quality independent of query |
| E-E-A-T | Content authority | Experience, Expertise, Authoritativeness, Trustworthiness | Qualitative assessment | Measures creator credibility and content reliability |
| Click-Through Rate (CTR) | User engagement | Actual user clicks | Percentage metric | Indicates real-world user interest in results |
| Dwell Time | User satisfaction | Time spent on page | Duration metric | Measures engagement depth after clicking |
| Bounce Rate | Content relevance | Immediate exit behavior | Percentage metric | Indicates whether content matched user expectations |

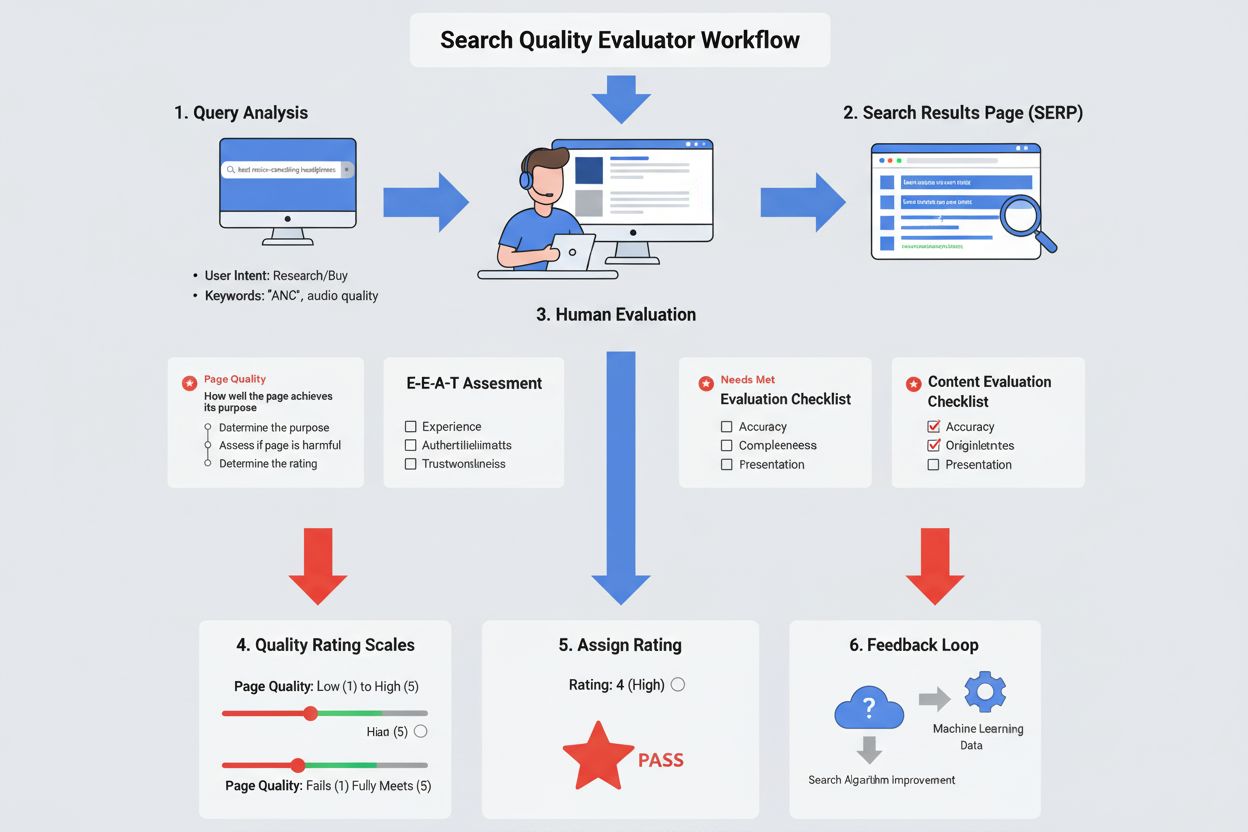

The Needs Met Rating system operates through a structured evaluation process involving human quality raters who assess search results according to Google’s detailed guidelines. When a rater evaluates a search result, they must consider multiple dimensions simultaneously: the clarity of the user’s search intent, the relevance of the result to that intent, the quality of the landing page, and the comprehensiveness of the information provided. The rater examines both the snippet displayed in search results and the actual landing page content, as both contribute to the overall Needs Met assessment. This dual evaluation recognizes that users make initial decisions based on search snippets, but their ultimate satisfaction depends on the full page experience.

Query classification is central to how raters apply the scale, because different query types carry different satisfaction requirements. Queries are commonly categorized as navigational or branded (seeking a specific website or page), informational (seeking knowledge), transactional (seeking to complete an action), or local (seeking a physical place to visit); Google’s guidelines label these Website, Know, Do, and Visit-in-person queries. Each category has different satisfaction thresholds and requirements. For instance, a branded query like “Facebook login” can achieve Fully Meets status more easily because the user’s intent is unambiguous. Conversely, broad informational queries like “knitting” cannot typically achieve Fully Meets status because different users may seek different types of information: some want to learn techniques, others want to find knitting supplies, and still others want community resources. The guidelines account for these variations through detailed guidance that helps raters make consistent, fair evaluations across a very large number of search results.

While Google explicitly states that Needs Met Rating does not directly affect individual webpage rankings, the underlying principles profoundly influence search algorithm performance and content strategy. The ratings serve as a feedback mechanism that helps Google engineers understand whether their ranking algorithms are producing satisfying results. When quality raters identify patterns—such as consistently low Needs Met ratings for a particular type of query—Google engineers use this information to refine algorithms and improve result quality. This indirect influence means that optimizing for Needs Met principles is essential for long-term SEO success. Content that consistently receives high Needs Met ratings signals to Google that the algorithm is working correctly, reinforcing the ranking factors that produced those results.
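The feedback loop described above, in which consistently low ratings for one type of query point engineers toward a problem, can be sketched as a simple aggregation. Everything in this example is hypothetical: the sample judgments, the 0 to 4 numeric scale, the `threshold` default, and the function names are assumptions for illustration, not Google's actual tooling or data.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical rater judgments as (query_type, score) pairs,
# where 0 stands for Fails to Meet and 4 for Fully Meets.
ratings = [
    ("branded", 4), ("branded", 4), ("branded", 3),
    ("informational", 2), ("informational", 3), ("informational", 2),
    ("transactional", 1), ("transactional", 1), ("transactional", 2),
]


def mean_score_by_query_type(rows):
    """Group scores by query type and return the mean score for each type."""
    grouped = defaultdict(list)
    for query_type, score in rows:
        grouped[query_type].append(score)
    return {query_type: mean(scores) for query_type, scores in grouped.items()}


def weak_query_types(rows, threshold=2.0):
    """Return, in alphabetical order, query types whose mean score is below the threshold."""
    averages = mean_score_by_query_type(rows)
    return sorted(qt for qt, avg in averages.items() if avg < threshold)


if __name__ == "__main__":
    print(mean_score_by_query_type(ratings))
    print(weak_query_types(ratings))
```

With this sample data only the transactional group averages below 2.0, so it is the one category flagged for a closer look. The point of the sketch is that ratings are read in aggregate, across many queries, rather than applied to any single page.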

For businesses and content creators, understanding Needs Met Rating principles translates into practical SEO strategy. The metric emphasizes that keyword targeting alone is insufficient: content must genuinely solve user problems. Rater scores for individual pages are not published, so site owners cannot look up a page’s Needs Met rating; what they can do is build in the traits the guidelines reward. Sites that focus on user intent and comprehensive content tend to see improved organic traffic and engagement. Pages that satisfy intent well typically offer comprehensive information addressing multiple aspects of the query, a clear structure that makes information quick to find, and mobile-optimized design. Such pages also tend to show lower bounce rates and stronger engagement, which suggests that users find the content genuinely helpful. This creates a virtuous cycle where satisfied users spend more time on pages, generate positive engagement signals, and are more likely to return or share content.

The emergence of AI search platforms has expanded the relevance of Needs Met Rating principles beyond traditional Google Search. Platforms like Perplexity, ChatGPT, Google AI Overviews, and Claude must evaluate whether their generated responses satisfy user queries effectively. These AI systems face unique challenges in meeting user needs because they synthesize information from multiple sources and must determine which sources to cite and how to present information. For organizations monitoring their brand presence through platforms like AmICited, understanding Needs Met Rating principles helps assess how well AI systems cite and reference their content when answering user queries. When an AI system generates a response that cites your brand or content, the quality of that citation and the comprehensiveness of the response both relate to Needs Met principles.

Different AI platforms approach Needs Met differently based on their architecture and design philosophy. ChatGPT focuses on conversational satisfaction, where users can ask follow-up questions to refine their information needs. Perplexity emphasizes source citation and transparency, showing users exactly which sources contributed to answers. Google AI Overviews integrate AI-generated summaries directly into traditional search results, requiring seamless integration with existing Needs Met evaluation frameworks. Claude prioritizes accuracy and nuance, often acknowledging uncertainty when information is incomplete. For content creators and brand managers, this means optimizing content for Needs Met principles requires understanding how different AI platforms evaluate and present information. Content that clearly addresses specific user needs, provides authoritative information, and includes proper context for citations performs better across all these platforms.

Achieving high Needs Met ratings requires a systematic approach to content creation and optimization that prioritizes user satisfaction above all else. The first critical step is conducting thorough keyword research that goes beyond search volume and competition metrics to understand the actual intent behind queries. For each target keyword, content creators should ask: What is the user actually trying to accomplish? What information would completely satisfy their need? What follow-up questions might they have? This intent-focused research forms the foundation for creating content that achieves high Needs Met ratings. Once intent is understood, content should be structured comprehensively to address all aspects of the user’s need, not just the primary query. For example, a page targeting “best budget smartphones” should include detailed specifications, price comparisons, user reviews, and purchasing options—not just a list of phones.

Mobile optimization is non-negotiable for achieving high Needs Met ratings, given Google’s explicit focus on mobile user needs. This extends beyond responsive design to include fast loading times, intuitive navigation, and content that’s easily scannable on small screens. Research shows that pages with load times exceeding three seconds experience significantly higher bounce rates, directly impacting Needs Met satisfaction. Content freshness matters significantly for certain query types, particularly news-related, trend-based, and time-sensitive queries. Pages addressing these topics should be regularly updated to reflect current information. Additionally, page quality factors like E-E-A-T (Experience, Expertise, Authoritativeness, and Trustworthiness) directly influence Needs Met ratings. Content should clearly demonstrate author expertise, cite authoritative sources, and build trust through transparent information about content creators and their qualifications. Reducing intrusive elements like excessive ads, pop-ups, and auto-playing videos improves user experience and Needs Met satisfaction.
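The checks in this paragraph can be turned into a small self-audit. The sketch below is a hypothetical checklist, not a Google tool or an official scoring method: the `PageSnapshot` fields, the `audit` function, and the 90-day freshness window are assumptions, and only the three-second load threshold is taken from the text above.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class PageSnapshot:
    """Hypothetical measurements collected for one page."""
    url: str
    load_time_seconds: float
    last_updated: date
    is_time_sensitive: bool
    has_intrusive_popups: bool
    has_autoplay_video: bool


def audit(page, today, max_load_seconds=3.0, max_age_days=90):
    """Return a list of issues likely to hurt user satisfaction on this page."""
    issues = []
    if page.load_time_seconds > max_load_seconds:
        issues.append("slow load")
    # Freshness is only checked for news-like or otherwise time-sensitive pages.
    if page.is_time_sensitive and (today - page.last_updated).days > max_age_days:
        issues.append("stale content")
    if page.has_intrusive_popups:
        issues.append("intrusive pop-ups")
    if page.has_autoplay_video:
        issues.append("auto-playing video")
    return issues


if __name__ == "__main__":
    page = PageSnapshot(
        url="https://example.com/best-budget-smartphones",
        load_time_seconds=4.2,
        last_updated=date(2024, 1, 1),
        is_time_sensitive=True,
        has_intrusive_popups=False,
        has_autoplay_video=True,
    )
    print(audit(page, today=date(2024, 6, 1)))
```

A flat list of named issues keeps the output easy to act on; the thresholds are parameters because a reasonable freshness window varies by topic.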

The future of Needs Met Rating is inextricably linked to the evolution of search technology and user expectations. As AI search platforms become increasingly sophisticated, the definition of what constitutes “meeting user needs” will likely expand and evolve. Current trends suggest that Needs Met evaluation will increasingly incorporate factors like source transparency, citation accuracy, and the ability to provide nuanced, context-aware responses. For AI monitoring platforms like AmICited, this evolution means developing more sophisticated metrics for assessing how well AI systems cite and attribute information to original sources. The rise of generative AI has introduced new challenges to Needs Met evaluation: when an AI system synthesizes information from multiple sources, how should satisfaction be measured? Does the user need to see the original sources, or is a well-synthesized summary sufficient? These questions will shape how Needs Met Rating principles evolve.

Industry experts predict that Needs Met Rating will become increasingly important as search engines compete on user satisfaction rather than just relevance. Google’s continued investment in AI-powered search features, including AI Overviews (which grew out of the Search Generative Experience, or SGE, experiment), suggests that the company views Needs Met principles as central to future search quality. The metric will likely become more granular, with separate evaluation frameworks for different query types, content formats, and user contexts. Additionally, as voice search, visual search, and other emerging search modalities grow, Needs Met Rating frameworks will need to adapt to evaluate satisfaction across these diverse interaction patterns. For organizations, this means that understanding and optimizing for Needs Met principles today positions them well for future search landscape changes. Content that genuinely satisfies user needs, provides authoritative information, and demonstrates clear expertise will remain valuable regardless of how search technology evolves.

The integration of Needs Met Rating principles with AI monitoring and brand tracking represents a significant opportunity for organizations. As AI systems become primary information sources for many users, ensuring that your brand and content appear in AI-generated responses—and that they appear in ways that satisfy user needs—becomes critical. This requires not only traditional SEO optimization but also understanding how AI systems evaluate and cite sources. Companies that proactively optimize their content for Needs Met principles while monitoring their presence across AI platforms will gain competitive advantages in the emerging search landscape. The future belongs to organizations that recognize that user satisfaction, not just visibility, is the ultimate measure of search success.

Needs Met Rating serves as a critical evaluation metric that helps Google assess whether search results fulfill user intent and satisfy information needs. The metric is used by human quality raters to evaluate search results across various query types, providing feedback that helps Google improve its ranking algorithms. While ratings don't directly affect individual webpage rankings, they measure how well Google's algorithms perform across a broad range of searches, enabling continuous refinement of search quality.

The five levels represent a satisfaction spectrum: Fully Meets means almost all users are immediately satisfied without needing additional results; Highly Meets indicates very helpful results for most users, though some may seek more information; Moderately Meets provides helpful content for many users but some desire additional results; Slightly Meets offers limited helpfulness with weak query-result connection; Fails to Meet completely fails to satisfy user needs. Each level reflects different degrees of query fulfillment and user satisfaction with the search result.

Google explicitly states that Needs Met ratings do not directly affect how individual webpages rank in search results. Instead, these ratings are used to measure how well Google's search algorithms perform overall. However, the underlying principles of Needs Met—understanding user intent, providing relevant content, and ensuring page quality—are fundamental to SEO success and do influence ranking factors indirectly through algorithm improvements.

Needs Met Rating and Page Quality are related but distinct concepts. While Needs Met evaluates how well a result answers a specific query, Page Quality assesses how well a page achieves its intended purpose regardless of the query. A high-quality page may receive a low Needs Met rating if it doesn't match the user's search intent, and conversely, a lower-quality page might receive a higher Needs Met rating if it perfectly answers the specific query. Both factors contribute to overall search result effectiveness.

While Needs Met Rating originated with Google's Search Quality Evaluator Guidelines, the principles apply across all AI search platforms. These platforms must evaluate whether their generated responses satisfy user queries effectively. For AI monitoring platforms like AmICited, tracking Needs Met principles helps assess how well AI systems cite and reference sources when answering user queries, ensuring that AI-generated content meets user information needs with proper attribution.

Content creators should focus on understanding search intent, creating comprehensive content that addresses all aspects of user queries, ensuring mobile-friendly design, maintaining content freshness for time-sensitive topics, and improving overall page quality. Additionally, reducing intrusive ads and pop-ups, optimizing page load speed, and providing accurate, authoritative information from credible sources all contribute to better Needs Met ratings. The key is thinking from the user's perspective and delivering exactly what they're searching for.

Branded queries like 'Amazon' typically have clear intent and can achieve Fully Meets ratings more easily since users want to reach a specific website. Informational queries like 'how to knit' may be harder to fully satisfy since different users seek different types of information. Transactional queries require results that facilitate actions like purchases or bookings. Each query type has different satisfaction thresholds, and understanding these distinctions helps content creators tailor their approach to match user expectations for their specific query category.
