
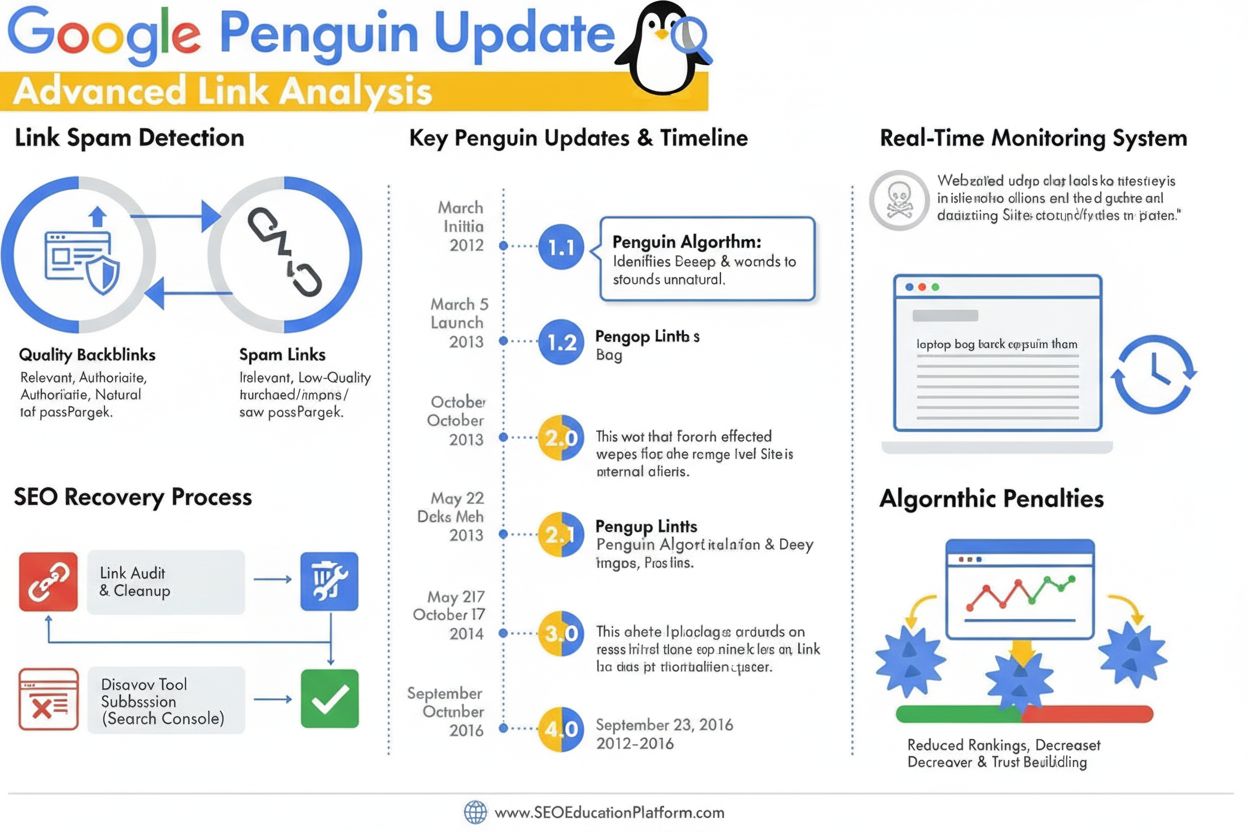

The Penguin Update is a Google algorithm designed to combat link spam and manipulative link-building practices by identifying and devaluing low-quality, unnatural backlinks. First launched in April 2012, it evolved into a real-time algorithm component in 2016 that continuously monitors and adjusts rankings based on backlink quality and link scheme patterns.


The Penguin Update is a Google algorithm specifically designed to combat link spam and manipulative link-building practices by identifying, devaluing, and penalizing websites with unnatural or low-quality backlink profiles. First officially announced by Matt Cutts on April 24, 2012, the Penguin algorithm represented Google’s aggressive response to the growing problem of black-hat SEO techniques that artificially inflated search rankings through purchased links, link exchanges, and other deceptive practices. The algorithm’s primary objective is to ensure that natural, authoritative, and relevant links are rewarded while manipulative and spammy links are downgraded or ignored entirely. Unlike content-focused updates such as Panda, which targets low-quality content, Penguin specifically evaluates the quality and authenticity of a website’s incoming link profile, making it a critical component of Google’s ranking system for over a decade.

The Penguin Update emerged during a critical period in search engine optimization when link volume played a disproportionately large role in determining search rankings. Before Penguin’s launch, webmasters could artificially boost their rankings by acquiring large quantities of low-quality links from link farms, private blog networks, and other spam sources. As a result, low-quality websites and content appeared in prominent positions in Google’s results despite lacking genuine authority or relevance. Matt Cutts, then head of Google’s webspam team, explained at the SMX Advanced 2012 conference that “We look at it something designed to tackle low-quality content. It started out with Panda, and then we noticed that there was still a lot of spam and Penguin was designed to tackle that.” According to Google, the initial Penguin launch affected about 3.1% of English-language queries, making it one of the most impactful algorithm updates in Google’s history. The scale of that impact signaled to the SEO industry that Google was taking a firm stance against manipulative link practices and would keep evolving its algorithms to maintain search quality.

The Penguin algorithm analyzes backlink profiles to identify unnatural linking patterns. Google has not published its exact signals, but the factors most often cited include the authority and relevance of linking domains, the anchor text used in links, the velocity of link acquisition, and the contextual relevance between the linking site and the target site. Penguin specifically targets link schemes, a broad range of manipulative practices that includes purchased links, reciprocal link exchanges, links from private blog networks (PBNs), links from low-quality directories, and links with keyword-stuffed anchor text. Because even legitimate websites occasionally receive some low-quality links, the algorithm weighs the overall balance of high-quality and low-quality links in a backlink profile; when spam links make up a disproportionately high share, Penguin can adjust the site’s rankings. Penguin evaluates only the incoming links pointing to a domain; it does not analyze the site’s outgoing links.
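Because Google has not published Penguin’s signals or thresholds, the following is only an illustrative audit heuristic, not Penguin itself. It sketches how an SEO might summarize two of the factors described above, anchor-text concentration and the share of links from domains already flagged as low quality, for a backlink export. The `Backlink` record, the sample data, and the 30% review threshold are hypothetical assumptions chosen for the example.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Backlink:
    # Hypothetical backlink record, e.g. one row of a third-party backlink export
    source_domain: str
    anchor_text: str


def anchor_profile(links, money_keywords):
    """Return the share of links whose anchor text exactly matches a target
    keyword, plus the three most common anchors. Illustrative heuristic only."""
    anchors = [link.anchor_text.strip().lower() for link in links]
    keywords = {k.lower() for k in money_keywords}
    exact = sum(1 for a in anchors if a in keywords)
    share = exact / len(anchors) if anchors else 0.0
    return share, Counter(anchors).most_common(3)


def spam_ratio(links, flagged_domains):
    """Share of backlinks that come from domains the auditor has already flagged."""
    if not links:
        return 0.0
    flagged = sum(1 for link in links if link.source_domain in flagged_domains)
    return flagged / len(links)


if __name__ == "__main__":
    links = [
        Backlink("news.example.org", "Acme Widgets"),
        Backlink("blog.example.net", "this review"),
        Backlink("cheap-links.example", "buy blue widgets"),
        Backlink("pbn-site.example", "buy blue widgets"),
        Backlink("directory.example", "buy blue widgets"),
    ]
    share, top = anchor_profile(links, ["buy blue widgets"])
    ratio = spam_ratio(links, {"cheap-links.example", "pbn-site.example"})
    # Hypothetical review threshold: flag the profile if either share exceeds 30%
    needs_review = share > 0.3 or ratio > 0.3
    print(f"exact-match anchors: {share:.0%}, flagged-domain links: {ratio:.0%}, review: {needs_review}")
    print("most common anchor:", top[0])
```

A real audit would still require reviewing each flagged domain by hand; the numbers only help decide where to look first.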

The Penguin Update evolved through multiple iterations after its initial launch, with the most transformative change arriving in September 2016 with the release of Penguin 4.0. Before that milestone, Penguin ran on a batch schedule: Google periodically announced refreshes that re-evaluated websites’ backlink profiles and adjusted rankings accordingly. Penguin 1.1 (May 2012) and Penguin 1.2 (October 2012) were data refreshes that allowed previously penalized sites to recover if they had cleaned up their link profiles. Penguin 2.0 (May 2013) was a more technically advanced version that affected approximately 2.3% of English queries and was the first iteration to look beyond the homepage and top-level category pages, examining deeper site structures for evidence of link spam. Penguin 2.1 (October 2013) and Penguin 3.0 (October 2014) continued this evolution with incremental improvements to detection.

Penguin 4.0 fundamentally changed how the algorithm operates by integrating it into Google’s core algorithm as a real-time component. Websites are now evaluated continuously, and ranking adjustments take effect shortly after Google recrawls and reindexes the affected pages, rather than waiting for a scheduled refresh. Penguin 4.0 also became more granular: instead of demoting an entire site, it devalues spam, so spammy links are typically ignored rather than causing site-wide ranking drops.

| Assessment Factor | High-Quality Links | Low-Quality/Spam Links | Penguin Detection |
|---|---|---|---|
| Link Source Authority | Established, trusted domains with strong domain authority | New domains, low DA sites, or known spam networks | Analyzes domain history, trust signals, and topical relevance |
| Anchor Text | Natural, varied, contextually relevant phrases | Exact-match keywords, repetitive, over-optimized | Flags unnatural anchor text patterns and keyword stuffing |
| Link Velocity | Gradual, organic acquisition over time | Sudden spikes or unnatural patterns | Monitors acquisition speed and consistency |
| Contextual Relevance | Links from topically related, authoritative sources | Links from unrelated or low-quality sources | Evaluates semantic relevance between linking and target sites |
| Link Placement | Editorial links within content, natural context | Footer links, sidebar links, or link farm placements | Assesses link placement and contextual integration |
| Linking Site Quality | High-quality content, legitimate business presence | Thin content, auto-generated pages, or PBNs | Examines overall site quality and content authenticity |
| Nofollow Status | Properly attributed when appropriate | Misused or absent on paid/promotional links | Recognizes proper use of nofollow for non-editorial links |
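The link velocity row can be illustrated with a simple monitoring heuristic. The sketch below is an assumption, not anything Google has described: it flags months in which the number of newly acquired referring domains jumps well above the median of the preceding months. The three-month window, the 3× multiplier, and the sample counts are arbitrary illustrative values.

```python
from statistics import median


def velocity_spikes(monthly_new_domains, window=3, multiplier=3.0):
    """Return indexes of months whose new-referring-domain count exceeds
    `multiplier` times the median of the preceding `window` months.
    Hypothetical audit heuristic, not Penguin's method."""
    spikes = []
    for i in range(window, len(monthly_new_domains)):
        baseline = median(monthly_new_domains[i - window:i])
        # Skip months with a zero baseline, where any new link would count as a spike
        if baseline > 0 and monthly_new_domains[i] > multiplier * baseline:
            spikes.append(i)
    return spikes


if __name__ == "__main__":
    # Hypothetical counts of new referring domains per month
    counts = [12, 15, 11, 14, 90, 13]
    print(velocity_spikes(counts))  # prints [4]
```

A median baseline is used rather than a mean so that one earlier spike does not inflate the baseline and hide the next one. A flagged month is a prompt for manual review, since a legitimate news mention can also produce a sudden burst of links.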

The Penguin Update transformed SEO practice by establishing link quality as a critical ranking consideration and discouraging the widespread use of black-hat link-building techniques. Before Penguin, many SEO agencies and webmasters ran aggressive link-buying campaigns, link exchanges, and PBNs without significant consequences. The algorithm’s launch caused immediate disruption, with thousands of websites experiencing dramatic ranking drops and traffic losses. This forced the SEO industry toward more ethical, white-hat practices focused on earning links through high-quality content and genuine relationship building. Penguin became a cautionary tale that shaped how businesses approached link building, leading to increased investment in content marketing, public relations, and brand building as primary link acquisition strategies. Backlink audits and ongoing link monitoring became standard practice at many organizations, and domain authority, topical relevance, and link velocity became key metrics that SEO professionals weigh when evaluating link opportunities. Link analysis tools that track backlink profiles against Google’s quality guidelines are now widely used, a trend Penguin helped accelerate.

Recovering from a Penguin downgrade requires a systematic approach involving backlink audits, link removal, and strategic use of Google’s disavow tool. The first step is a comprehensive audit of all incoming links using Google Search Console and third-party tools like Ahrefs, SEMrush, or Moz, which provide detailed backlink profiles and quality assessments. Webmasters must then evaluate each linking domain individually to determine whether the link is high-quality and natural or the product of a manipulative practice. For links that are clearly spammy or low-quality, the recommended approach is to contact the linking website’s webmaster and request removal. Google explicitly recommends against paying for link removals, as this perpetuates the problem of link commerce. For links that cannot be removed through outreach, the disavow tool in Google Search Console lets webmasters submit a file telling Google to ignore specific links when evaluating the site. Disavowing a link also removes any positive value it might have provided, so the tool should only be used for genuinely harmful links. After a disavow file is uploaded, Google incorporates it as it recrawls and reprocesses the pages containing the disavowed links, which can take several weeks. Because Penguin 4.0 runs in real time, there is no scheduled refresh to wait for; recovery can follow relatively quickly once sufficient corrective action has been taken and the affected pages have been recrawled. However, webmasters should not expect rankings to return fully to pre-penalty levels, since some of those rankings may have been artificially inflated by the low-quality links being removed.
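As a rough sketch of the final step, the function below builds a file in the plain-text format the disavow tool accepts: one entry per line, a `domain:` prefix to disavow a whole domain, a full URL to disavow a single page, and `#` at the start of comment lines. The function name and the sample domains and URLs are hypothetical; deciding which links are genuinely harmful still has to come from the manual audit described above.

```python
from datetime import date


def build_disavow(domains, urls, note=""):
    """Build disavow-file text: one entry per line, '#' lines are comments,
    'domain:' entries cover a whole domain, other lines list single URLs."""
    lines = [f"# Disavow file generated {date.today().isoformat()}"]
    if note:
        lines.append(f"# {note}")
    # Normalize, deduplicate, and sort so the file stays stable between audits
    for domain in sorted({d.strip().lower() for d in domains if d.strip()}):
        lines.append(f"domain:{domain}")
    for url in sorted({u.strip() for u in urls if u.strip()}):
        lines.append(url)
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical audit results: domains and URLs judged harmful after failed outreach
    text = build_disavow(
        domains=["spam-network.example", "Spam-Network.example ", "pbn-site.example"],
        urls=["https://directory.example/listing?id=42"],
        note="Removal requested from webmasters; no response",
    )
    print(text, end="")
```

The printed text can be saved as a UTF-8 `.txt` file and uploaded through the disavow tool. Google ignores the `#` comment lines, so they are only a convenient place to record why each batch of entries was added.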

As AI search platforms like ChatGPT, Perplexity, Google AI Overviews, and Claude become increasingly important for brand visibility, Penguin’s principles become relevant beyond traditional search rankings. These AI systems often cite authoritative, high-quality websites when generating responses, and websites with strong backlink profiles and clean link histories may be more likely to be treated as authoritative sources by the retrieval systems behind them. A website downgraded by Penguin for link spam may not only suffer in Google’s organic search results but could also be deprioritized by AI systems that evaluate source credibility. Conversely, websites that maintain clean, high-quality backlink profiles may be more likely to be cited as authoritative sources in AI-generated responses. This creates an additional incentive for maintaining Penguin compliance beyond traditional SEO metrics. AmICited and similar AI monitoring platforms help organizations track how their domains appear in AI-generated responses, providing visibility into whether their authority is being recognized across multiple AI search systems. The intersection of Penguin compliance and AI citation tracking represents a new frontier in digital marketing, where link quality can affect both traditional search visibility and emerging AI search visibility.

The Penguin Update continues to evolve as Google refines its understanding of link quality and manipulative practices. The shift to real-time processing in 2016 changed how the algorithm operates, and Google has continued to enhance its spam detection with machine learning. With its December 2022 link spam update, Google said its AI-based spam-prevention system, SpamBrain, could detect both sites buying links and sites used for the purpose of passing outgoing links. This suggests that link spam detection will keep becoming more sophisticated, making it harder for manipulative practices to evade detection. The industry trend toward white-hat SEO and earned media will likely continue accelerating as Google’s algorithms become better at distinguishing natural links from artificial ones. As AI search platforms gain prominence, the importance of maintaining clean backlink profiles also extends beyond Google’s algorithm to broader recognition as an authoritative source across multiple AI systems. Organizations that prioritize link quality, brand authority, and content excellence will be better positioned to succeed in both traditional search and emerging AI search environments. Penguin’s legacy is not just a specific algorithm but a lasting shift in how search engines, and increasingly AI systems, evaluate website authority, making link quality a permanent factor in digital visibility.

A Penguin downgrade is algorithmic: it happens automatically when Google's Penguin algorithm detects link spam patterns, with no human intervention. A manual action for unnatural links, often loosely called a Penguin penalty, is separate from the algorithm; it is issued by Google's webspam team after human review, appears in Search Console's Manual Actions report, and requires a reconsideration request to lift. Since Penguin 4.0 made the algorithm real-time in 2016, algorithmic downgrades can lift soon after corrective actions are taken and the affected pages are recrawled, whereas manual actions require explicit approval from Google.

The Penguin Update uses machine learning algorithms to analyze backlink profiles and identify patterns of manipulative link-building, including purchased links, link exchanges, private blog networks (PBNs), and unnatural anchor text patterns. The algorithm evaluates the ratio of high-quality, natural links versus low-quality spam links pointing to a website. It also examines the relevance and authority of linking domains to determine if links appear artificially obtained rather than editorially earned.

Recovery from a Penguin downgrade is possible through a multi-step process involving backlink audits, removal of spammy links, and Google's disavow tool for links you cannot remove directly. Since Penguin 4.0 runs in real time, recovery can happen relatively quickly once you've cleaned up your link profile and Google recrawls the affected pages. However, recovery doesn't guarantee a return to previous rankings, as some of those rankings may have been artificially inflated by the low-quality links you're removing.

Penguin targets links from link farms, paid link networks, private blog networks (PBNs), reciprocal link exchanges, and links with unnatural anchor text patterns. It also targets links from low-quality directories, comment spam, forum spam, and links obtained through other manipulative practices. Additionally, Penguin flags links from high-authority sites that appear to be sold or placed as advertorials without a rel="nofollow" (or, since 2019, rel="sponsored") attribute, as these violate Google's link scheme guidelines.
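For the paid-link case, one practical audit check is whether links to your site on a sponsored or advertorial page carry a qualifying `rel` value. The sketch below uses Python's standard `html.parser` module to list links pointing at a given domain (including its subdomains) and whether each has `nofollow` or `sponsored` in its `rel` attribute. The class name, target domain, and sample HTML are hypothetical, and the check says nothing about how Google itself weighs such links.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class LinkRelAuditor(HTMLParser):
    """Collect (href, qualified) pairs for <a> tags pointing at target_domain,
    where 'qualified' means the rel attribute contains nofollow or sponsored."""

    def __init__(self, target_domain):
        super().__init__()
        self.target_domain = target_domain.lower()
        self.results = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attr_map = dict(attrs)
        href = attr_map.get("href") or ""
        host = (urlparse(href).hostname or "").lower()
        # Match the target domain itself and any of its subdomains
        if host == self.target_domain or host.endswith("." + self.target_domain):
            rel_values = (attr_map.get("rel") or "").lower().split()
            qualified = "nofollow" in rel_values or "sponsored" in rel_values
            self.results.append((href, qualified))


if __name__ == "__main__":
    # Hypothetical advertorial page linking to the audited site
    page = """
    <p>Sponsored post: <a href="https://www.acme.example/widgets">best widgets</a></p>
    <p>Partner: <a rel="sponsored noopener" href="https://acme.example/">Acme</a></p>
    """
    auditor = LinkRelAuditor("acme.example")
    auditor.feed(page)
    for href, qualified in auditor.results:
        print(href, "ok" if qualified else "missing nofollow/sponsored")
```

Splitting the `rel` value on whitespace matters because the attribute can hold several space-separated tokens, as in `rel="sponsored noopener"`.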

Since Penguin 4.0 was released in September 2016, the algorithm runs continuously in real-time as part of Google's core algorithm. This means Google constantly evaluates backlink profiles and adjusts rankings based on link quality without waiting for scheduled updates. Previously, Penguin operated on a batch update schedule with refreshes announced by Google, but real-time processing eliminated the need for these announcements and allows for faster recovery when issues are corrected.

The disavow tool is a Google Search Console feature that allows webmasters to tell Google to ignore specific backlinks when evaluating their site. It is intended as a last resort for spammy links you cannot get removed by contacting the linking sites' webmasters. By disavowing low-quality links, you prevent them from negatively affecting your rankings under the Penguin algorithm. However, disavowed links also stop providing any positive value, so the tool should only be used for genuinely harmful links.

The original Penguin Update, launched in April 2012, affected about 3.1% of English queries according to Google's estimates. Subsequent updates had varying impacts: Penguin 2.0 affected approximately 2.3% of English queries, Penguin 2.1 affected about 1% of queries, and Penguin 3.0 affected less than 1% of English search queries. Because Google handles an enormous volume of searches, even these single-digit percentages correspond to a very large number of queries, which is why Penguin is considered one of the most significant algorithm updates in search history.

Start tracking how AI chatbots mention your brand across ChatGPT, Perplexity, and other platforms. Get actionable insights to improve your AI presence.

Learn about Google's Panda Update, the 2011 algorithm change targeting low-quality content. Understand how it works, its impact on SEO, and recovery strategies ...

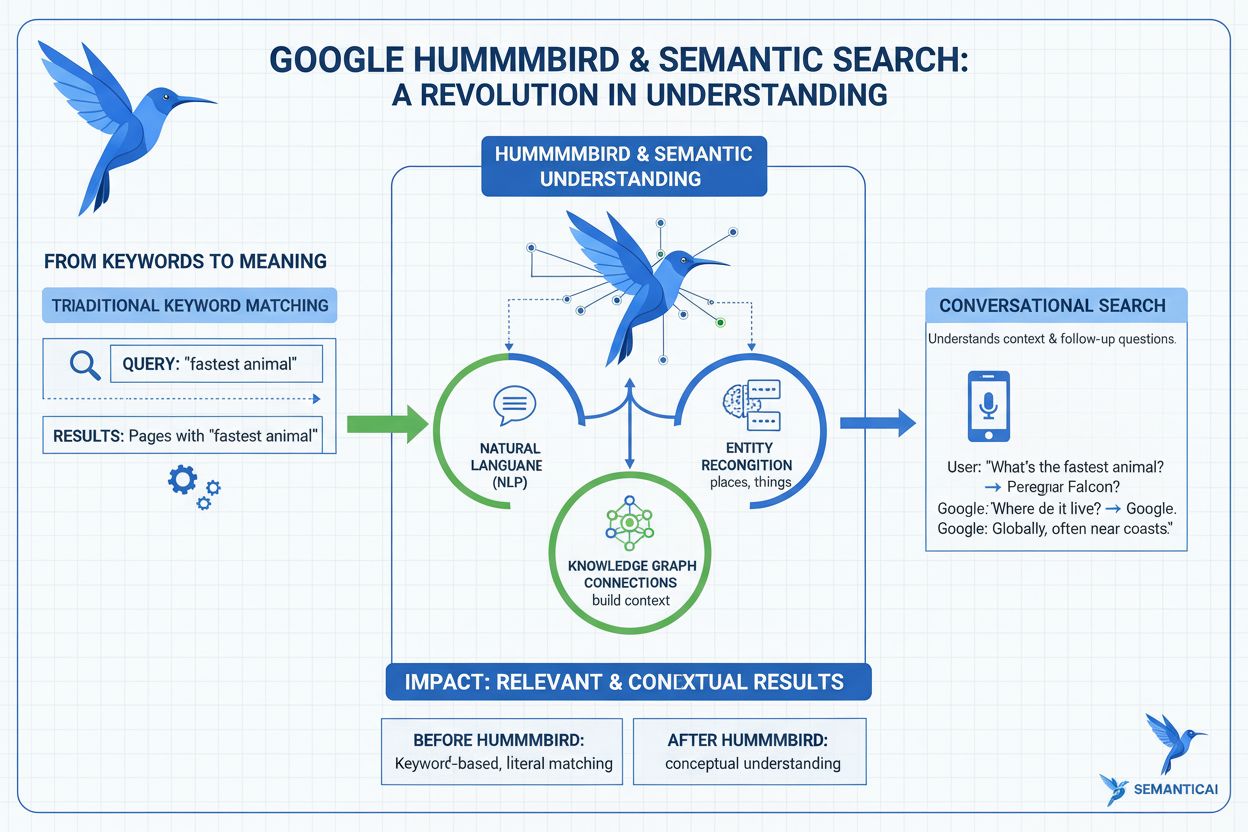

Learn what the Hummingbird Update is, how it revolutionized semantic search in 2013, and why it matters for AI monitoring and brand visibility across search pla...

Learn what Google Algorithm Updates are, how they work, and their impact on SEO. Understand core updates, spam updates, and ranking changes.

Cookie Consent

We use cookies to enhance your browsing experience and analyze our traffic. See our privacy policy.