Search Quality Evaluator

Learn what Search Quality Evaluators do, how they assess search results, and their role in improving Google Search. Understand E-E-A-T, rating scales, and quali...

Google’s Quality Rater Guidelines are comprehensive evaluation standards used by approximately 16,000 external human raters worldwide to assess search result quality and help improve Google’s ranking algorithms. These guidelines define how raters evaluate pages using E-E-A-T (Experience, Expertise, Authoritativeness, and Trustworthiness) criteria and determine whether search results meet user intent, though individual ratings do not directly affect website rankings.


Quality Rater Guidelines are Google’s comprehensive evaluation standards and handbook that define how approximately 16,000 external human raters assess the quality of search results worldwide. These guidelines serve as the foundation for Google’s rigorous testing process to validate whether their automated ranking systems deliver helpful, reliable, and relevant information to users. The guidelines establish standardized criteria for evaluating web pages and search results, ensuring consistency across different raters, languages, and geographic regions. Published and regularly updated by Google, these guidelines are publicly available and represent one of the most detailed frameworks for understanding what Google considers high-quality content. The Quality Rater Guidelines are not a ranking algorithm themselves, but rather a quality assurance mechanism that helps Google measure how well their algorithms perform in delivering content that users find trustworthy and useful.

The concept of using human raters to evaluate search quality emerged in the early 2000s as Google recognized that automated systems alone could not fully understand content quality the way humans do. Over the past two decades, the guidelines have evolved significantly to reflect changes in the web, user behavior, and technology. In 2022, Google made a major update to the guidelines by adding the first “E” to create E-E-A-T, emphasizing Experience as a critical quality signal alongside Expertise, Authoritativeness, and Trustworthiness. This evolution reflected growing recognition that first-hand experience and demonstrated knowledge are increasingly important for establishing credibility. The guidelines have also expanded to address modern content formats including short-form video, AI-generated content, and user-generated content on forums and discussion platforms. According to Google’s official documentation, the guidelines have been updated more than 50 times since their inception, with the most recent comprehensive update in November 2023 simplifying the “Needs Met” scale definitions and adding guidance for diverse web page types and modern content formats.

The E-E-A-T framework represents the cornerstone of Quality Rater Guidelines and consists of four interconnected dimensions that raters evaluate when assessing content quality. Experience refers to the first-hand, practical knowledge and direct involvement of the content creator with the topic. For example, a product review carries more weight if the reviewer has actually used the product, or medical advice is more credible if provided by someone who has treated patients. Expertise encompasses the demonstrated skill, knowledge, and qualifications of the creator in their field, which can be established through credentials, professional background, or years of focused work in a specific domain. Authoritativeness extends beyond individual expertise to evaluate whether the creator, the main content, and the website itself are recognized as trusted authorities on the topic by other experts and the broader community. This can be demonstrated through citations, awards, media recognition, or established reputation in the field. Trustworthiness, which Google emphasizes as the most critical component, evaluates whether the content is accurate, honest, transparent about its sources, safe from malware or deception, and reliable for users to depend upon. Together, these four dimensions create a comprehensive quality assessment that goes far beyond simple keyword matching or link analysis.

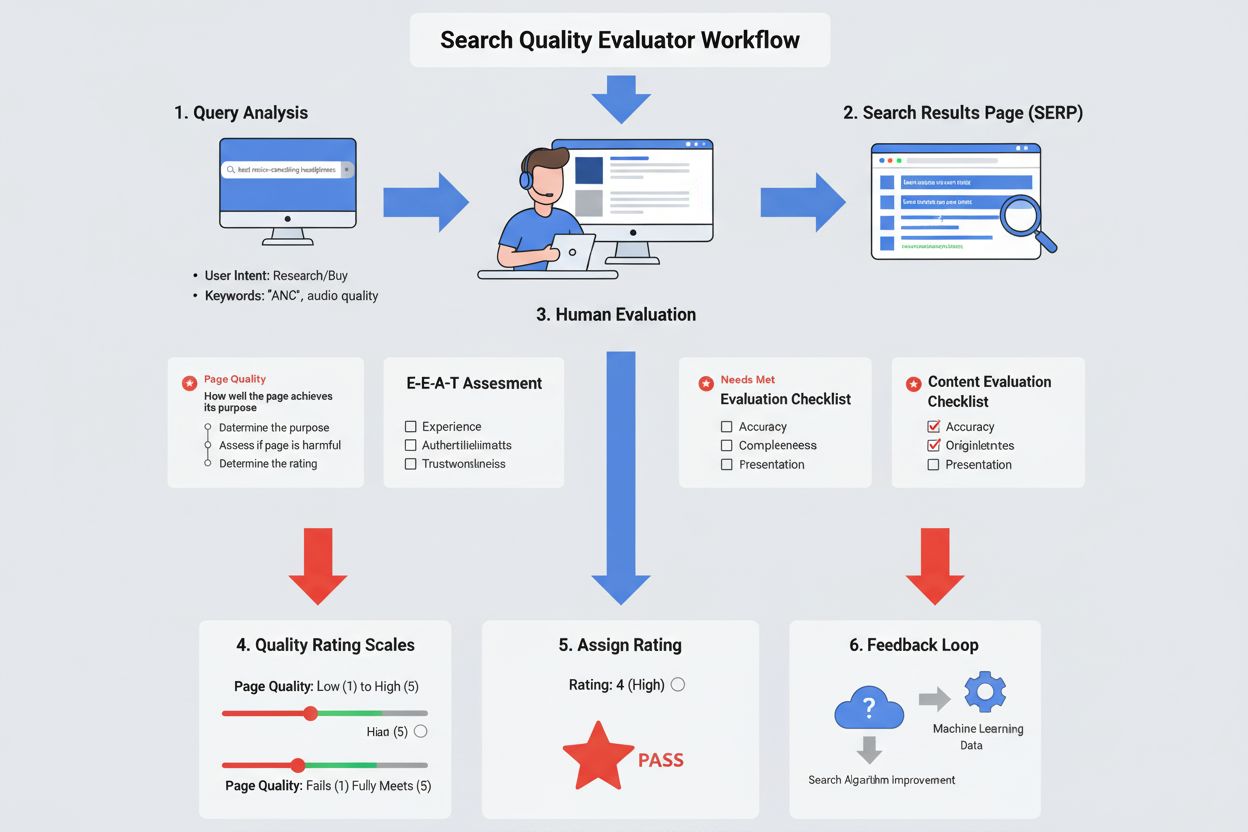

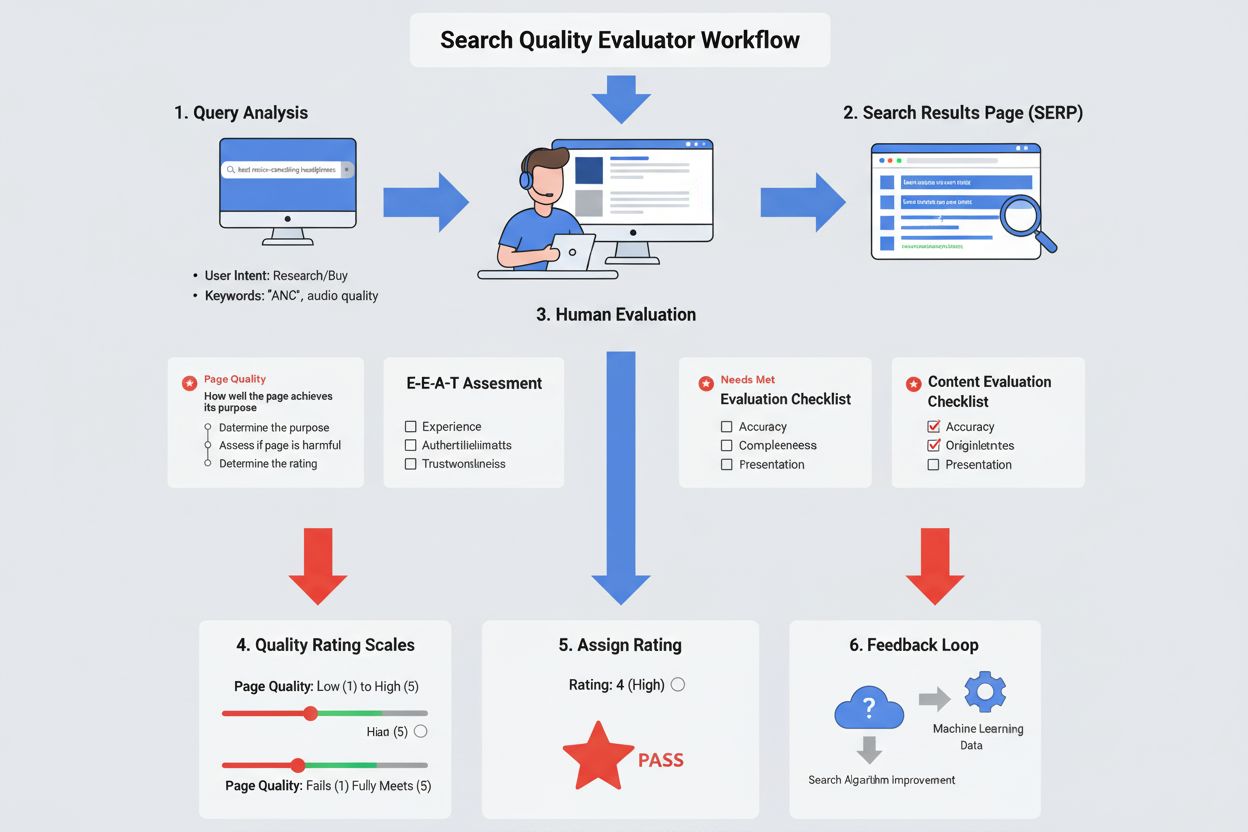

The Page Quality (PQ) rating process involves three systematic steps that raters follow to evaluate every page in their assigned sample. First, raters must determine the purpose of the page—whether it’s a news homepage meant to inform users about current events, a shopping page designed to sell products, a forum page meant to facilitate discussion, or any other specific purpose. Understanding purpose is critical because different page types have different quality expectations; a humor page and an encyclopedia page can both be highest quality if they achieve their respective purposes excellently. Second, raters assess whether the page’s purpose is harmful or deceptive, which would immediately warrant a Lowest quality rating. This includes pages designed to mislead users, spread misinformation, facilitate illegal activities, or cause harm to individuals or society. Third, raters determine the actual quality rating on a five-point scale from Lowest to Highest, considering how well the page achieves its beneficial purpose. The rating process requires raters to evaluate the Main Content quality by assessing whether it demonstrates significant effort, originality, and skill to create. Raters also research the website and creator’s reputation by looking at real user experiences and expert opinions, ensuring that quality assessments reflect how the broader community views the source’s credibility.
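The three steps above form a short decision sequence, and a toy model makes the ordering explicit. The sketch below is illustrative only: the names `PageQuality`, `PageAssessment`, and `rate_page_quality` are hypothetical, Google does not publish rating software, and the step 3 judgment is supplied by a human rater rather than computed.

```python
from dataclasses import dataclass
from enum import IntEnum


class PageQuality(IntEnum):
    """The five main Page Quality ratings, ordered from worst to best."""
    LOWEST = 1
    LOW = 2
    MEDIUM = 3
    HIGH = 4
    HIGHEST = 5


@dataclass(frozen=True)
class PageAssessment:
    """Hypothetical record of one rater's judgments about one page."""
    purpose: str                # Step 1: what the page is for, in the rater's words
    harmful_or_deceptive: bool  # Step 2: is that purpose harmful or deceptive?
    achievement: PageQuality    # Step 3: how well the beneficial purpose is achieved


def rate_page_quality(a: PageAssessment) -> PageQuality:
    """Apply the three PQ steps in order and return the final rating."""
    # Step 1: a rating is meaningless until the page's purpose is identified.
    if not a.purpose.strip():
        raise ValueError("identify the page's purpose before rating it")
    # Step 2: a harmful or deceptive purpose goes straight to Lowest.
    if a.harmful_or_deceptive:
        return PageQuality.LOWEST
    # Step 3: otherwise the rating reflects how well the purpose is achieved,
    # judged against expectations for that page type (humor vs. encyclopedia).
    return a.achievement


humor = PageAssessment("make readers laugh", False, PageQuality.HIGHEST)
encyclopedia = PageAssessment("explain a topic accurately", False, PageQuality.HIGHEST)
scam = PageAssessment("collect card numbers via a fake prize", True, PageQuality.HIGH)

print(rate_page_quality(humor).name)         # HIGHEST
print(rate_page_quality(encyclopedia).name)  # HIGHEST
print(rate_page_quality(scam).name)          # LOWEST
```

The humor and encyclopedia pages can both reach Highest because each is judged against its own purpose, while the scam page is rated Lowest at step 2 no matter how polished it is.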

The Needs Met (NM) rating focuses specifically on how useful a search result is for satisfying a particular user’s search intent, which is determined from the query text and, when relevant, the user’s location. This rating process involves two key steps. First, raters interpret what the user was actually looking for when they entered their search query, recognizing that many queries have multiple possible interpretations. For instance, a search for “mercury” could refer to the planet, the chemical element, the car brand, or the musician Freddie Mercury. Second, raters evaluate how well the search result satisfies that interpreted intent using a five-level scale plus an N/A category: Fails to Meet (completely fails to address the user’s need), Slightly Meets (less helpful for the dominant interpretation), Moderately Meets (helpful for common interpretations), Highly Meets (very helpful), Fully Meets (completely satisfies the user’s need), and N/A (for certain query types with one specific expected result). When determining the Needs Met rating, raters consider whether the result “fits” the query, whether the information is current, whether it is accurate and trustworthy for information-seeking queries, and whether it would satisfy the user without requiring them to search again. This distinction between Page Quality and Needs Met is crucial: a page could be exceptionally well-written and authoritative but receive a low Needs Met rating if it doesn’t match what the user was searching for.
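Because Page Quality and Needs Met are recorded as separate judgments, the high-quality but unhelpful case described above can be found by filtering on both fields. This is a minimal sketch under stated assumptions: the names `NeedsMet`, `RatedResult`, and `high_quality_low_need` are hypothetical, the URLs are placeholders, the thresholds are arbitrary, and representing N/A as `None` is a modeling choice rather than anything from the guidelines.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import List, Optional


class NeedsMet(IntEnum):
    """Needs Met levels, ordered from least to most helpful."""
    FAILS_TO_MEET = 1
    SLIGHTLY_MEETS = 2
    MODERATELY_MEETS = 3
    HIGHLY_MEETS = 4
    FULLY_MEETS = 5


@dataclass(frozen=True)
class RatedResult:
    query: str
    url: str
    page_quality: int              # 1 = Lowest ... 5 = Highest
    needs_met: Optional[NeedsMet]  # None stands in for the N/A category


def high_quality_low_need(results: List[RatedResult], pq_min: int = 4,
                          nm_max: NeedsMet = NeedsMet.SLIGHTLY_MEETS) -> List[RatedResult]:
    """Find results rated High or better on PQ but Slightly Meets or worse on NM."""
    return [
        r for r in results
        if r.needs_met is not None  # N/A results are not on the ordered scale
        and r.page_quality >= pq_min
        and r.needs_met <= nm_max
    ]


ratings = [
    # Authoritative page about the chemical element, shown for a planet query.
    RatedResult("mercury distance from sun", "https://example.org/element-mercury", 5,
                NeedsMet.FAILS_TO_MEET),
    # Less polished page that answers the planet question directly.
    RatedResult("mercury distance from sun", "https://example.com/planet-mercury-facts", 3,
                NeedsMet.HIGHLY_MEETS),
]

for r in high_quality_low_need(ratings):
    print(r.url)  # https://example.org/element-mercury
```

Keeping the two ratings as independent fields, rather than blending them into one score, is what lets the element page stay High on Page Quality while still failing this particular query.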

| Aspect | Quality Rater Guidelines | E-E-A-T Framework | YMYL Standards | Automated Ranking Signals |
|---|---|---|---|---|
| Purpose | Evaluate search result quality using human judgment | Assess content credibility through four dimensions | Apply heightened standards to sensitive topics | Automatically rank pages using algorithmic signals |
| Who Evaluates | ~16,000 external human raters globally | Integrated into rater guidelines | Raters with specialized training | Google’s automated systems |
| Key Criteria | Page Quality, Needs Met, E-E-A-T | Experience, Expertise, Authoritativeness, Trust | Health, finance, safety, societal impact | Links, content relevance, user behavior, freshness |
| Direct Impact on Rankings | No direct impact; used for algorithm validation | Informs algorithm design; not a direct ranking factor | Influences algorithm weighting for sensitive topics | Direct impact on search rankings |
| Scope | Evaluates individual pages and search results | Applies to all content types and topics | Applies only to “Your Money or Your Life” topics | Applies to all indexed content |
| Update Frequency | Updated multiple times annually | Evolves with guidelines; most recent update November 2023 | Continuously refined based on user feedback | Updated through core updates and algorithm changes |
| Transparency | Publicly available guidelines document | Publicly explained in Google documentation | Publicly identified YMYL topic categories | Limited public disclosure of specific signals |
| Training Required | Raters must pass certification tests | Raters trained on E-E-A-T assessment | Specialized training for YMYL evaluation | Continuous machine learning and refinement |

YMYL topics represent a special category within the Quality Rater Guidelines where Google applies significantly higher quality standards because content in these areas could substantially impact a person’s health, financial stability, safety, or the welfare of society. Examples of YMYL topics include medical and health information, financial advice and investment guidance, legal information and services, news and current events that affect public welfare, government services and civic information, and information about major life decisions. For YMYL pages, raters must verify that content demonstrates exceptionally strong E-E-A-T signals before assigning high quality ratings. A medical article about treating a serious condition, for instance, must come from a recognized medical authority, cite peer-reviewed research, and demonstrate clear expertise; a blog post from an unqualified author would receive a much lower rating for the same topic. Approximately 15-20% of all search queries are estimated to touch on YMYL topics, making them a significant portion of search quality evaluation. The heightened standards for YMYL reflect Google’s recognition that low-quality content in these areas poses real risks to users’ wellbeing, which makes prioritizing authoritative, trustworthy sources especially important.

Quality Raters serve a critical but often misunderstood role in Google’s search improvement process: they validate whether Google’s automated ranking systems are working as intended, rather than directly determining rankings themselves. Google has reported launching 4,725 improvements to Search in a single year, and each proposed change goes through a rigorous evaluation process that includes feedback from Quality Raters. When Google develops a potential improvement to its ranking systems, it assigns a sample of searches (typically several hundred) to a group of raters who evaluate the results using the guidelines. Raters might compare two different sets of search results, one with the proposed change and one without, and indicate which they prefer and why. This feedback helps Google’s data scientists, product managers, and engineers determine whether the proposed change actually improves search quality for real users. The aggregated ratings from thousands of raters across different regions and languages provide statistical validation that an algorithm change is beneficial before it’s deployed to the billions of searches Google processes daily. This human-in-the-loop approach keeps Google’s automated systems aligned with what real users find helpful and trustworthy, serving as a quality control mechanism in an increasingly complex search landscape.
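Google does not publish how it aggregates side-by-side ratings, so the sketch below swaps in a standard technique, the two-sided exact sign test, purely to illustrate how many per-query preferences can become a statistical signal. The function names, the `'new'`/`'old'`/`'same'` labels, and the preference counts are all hypothetical.

```python
from math import comb
from typing import Dict, List


def sign_test_p_value(wins: int, losses: int) -> float:
    """Two-sided exact sign test: how surprising is this split if raters had no real preference?"""
    n = wins + losses
    if n == 0:
        return 1.0
    k = min(wins, losses)
    # Probability of a split at least this lopsided in one direction under a fair coin.
    one_tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * one_tail)


def summarize_side_by_side(preferences: List[str]) -> Dict[str, float]:
    """Aggregate per-query preferences: 'new', 'old', or 'same' (a tie)."""
    wins = preferences.count("new")
    losses = preferences.count("old")
    ties = preferences.count("same")
    return {
        "wins": wins,
        "losses": losses,
        "ties": ties,
        # Ties carry no directional information, so the sign test ignores them.
        "p_value": sign_test_p_value(wins, losses),
    }


# Hypothetical ratings for 100 sampled queries.
prefs = ["new"] * 60 + ["old"] * 35 + ["same"] * 5
summary = summarize_side_by_side(prefs)
print(summary["wins"], summary["losses"], summary["ties"])  # 60 35 5
print(summary["p_value"] < 0.05)  # True
```

A sign test only uses the direction of each preference, which suits ratings where raters say which result set they prefer without a calibrated magnitude. A real evaluation would also weigh the raters' written reasons and how results vary by region and language.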

Applying the Quality Rater Guidelines consistently across global markets requires recruiting and training raters from diverse geographic regions and linguistic backgrounds. The approximately 16,000 raters are spread across four major regions: EMEA (Europe, Middle East, Africa) with roughly 4,000 raters, North America with approximately 7,000 raters, LATAM (Latin America) with about 1,000 raters, and APAC (Asia-Pacific) with around 4,000 raters. These raters collectively speak more than 80 languages, which lets Google evaluate search quality across a broad range of languages and markets. All raters must pass certification tests on the guidelines to ensure they understand and apply the standards consistently, and they receive ongoing training as the guidelines evolve. Importantly, raters are instructed to base their evaluations on the cultural standards and information needs of their specific locale rather than their personal opinions or preferences. This localization approach recognizes that what counts as high-quality, trustworthy content can vary across cultures and regions; for example, authoritative sources for medical information might differ between countries, or the relevance of certain types of content might vary based on local context and user needs.

Content creators seeking to align their work with Quality Rater Guidelines should focus on several key principles that reflect what raters evaluate. First, demonstrate clear authorship and expertise by including bylines, author bios, and information about credentials or experience relevant to the topic. Raters specifically look for evidence that content was created by someone with genuine knowledge or experience in the subject matter. Second, provide original, comprehensive content that goes beyond simply summarizing or rewriting information from other sources; raters assess whether content demonstrates significant effort, originality, and skill. Third, establish trustworthiness through transparency by clearly citing sources, explaining methodology, and being honest about limitations or uncertainties in the information presented. Fourth, maintain accuracy and currency by fact-checking content thoroughly and updating it when new information becomes available, which is particularly important for YMYL topics and time-sensitive information. Fifth, understand your audience and purpose by creating content primarily to help your existing or intended audience rather than to manipulate search rankings. Finally, disclose automation and AI usage when applicable, explaining why automation was useful and how it was employed in content creation. These principles align with the E-E-A-T framework and the people-first content approach that Google’s systems are designed to reward.

The Quality Rater Guidelines continue to evolve in response to changes in technology, user behavior, and the information landscape. Recent updates have addressed emerging challenges including the evaluation of AI-generated and AI-assisted content, the assessment of short-form video and other modern content formats, and the evaluation of user-generated content on forums and discussion platforms. As artificial intelligence becomes increasingly prevalent in content creation, the guidelines have been updated to clarify that AI-generated content can receive high quality ratings if it demonstrates strong E-E-A-T and serves user needs, but that disclosure of AI usage is important for transparency. The guidelines also increasingly emphasize the importance of first-hand experience and demonstrated expertise, reflecting a broader shift toward valuing authentic, authoritative voices in an era of abundant information. Looking forward, Quality Rater Guidelines will likely continue to adapt as search behavior evolves, new content formats emerge, and Google’s understanding of what constitutes helpful, reliable information deepens. For content creators and SEO professionals, staying informed about Quality Rater Guidelines updates is essential for understanding how Google evaluates content quality and for developing strategies that align with these evolving standards. The guidelines represent not just a technical specification but a philosophical commitment to prioritizing user needs and content quality over manipulation, making them central to the future of search and information discovery.

Individual Quality Rater ratings do not directly affect how specific pages rank in Google Search. Instead, aggregated ratings from thousands of raters are used to measure how well Google's automated ranking systems are performing overall. Raters provide feedback that helps Google validate whether their algorithms are delivering helpful, reliable content. The ratings serve as a quality control mechanism, similar to how restaurants use customer feedback cards, rather than as a direct ranking factor.

E-E-A-T stands for Experience, Expertise, Authoritativeness, and Trustworthiness. These four criteria help raters evaluate whether content demonstrates credibility and reliability. Experience refers to the creator's first-hand knowledge of the topic, Expertise means demonstrated skill or knowledge in the topic, Authoritativeness indicates the creator or site is recognized as a trusted authority, and Trustworthiness means the content is accurate, honest, and safe. Google's systems give extra weight to E-E-A-T signals, especially for YMYL (Your Money or Your Life) topics that could impact health, finances, or safety.

YMYL stands for 'Your Money or Your Life' and refers to topics that could significantly impact a person's health, financial stability, safety, or the welfare of society. Examples include medical advice, financial planning, legal information, and news about major events. Quality Raters apply very high Page Quality standards to YMYL content because low-quality pages could potentially cause real harm to users. This means YMYL pages must demonstrate exceptionally strong E-E-A-T signals to receive high quality ratings.

Google works with approximately 16,000 external Search Quality Raters distributed across different regions globally. These raters represent diverse locales and collectively speak over 80 languages. The distribution includes approximately 4,000 raters in EMEA (Europe, Middle East, Africa), 7,000 in North America, 1,000 in LATAM (Latin America), and 4,000 in APAC (Asia-Pacific). This geographic and linguistic diversity ensures that raters can accurately represent the information needs and cultural standards of users in their respective regions.

Page Quality (PQ) rating evaluates how well a page achieves its intended purpose, considering factors like E-E-A-T, originality, and whether the content is harmful. Needs Met (NM) rating assesses how useful a search result is for satisfying a specific user's search intent. A page could have high Page Quality but low Needs Met if it's excellent content but doesn't match what the user was searching for. Conversely, a page might have moderate Page Quality but high Needs Met if it directly answers the user's specific query despite being less comprehensive overall.

The Quality Rater Guidelines evaluate content based on its quality, usefulness, and trustworthiness regardless of whether it's human-written or AI-generated. However, the guidelines emphasize transparency about content creation methods. If automation or AI is used to substantially generate content, creators should disclose this and explain why automation was useful. The guidelines focus on whether content demonstrates E-E-A-T and serves user needs, not on the creation method itself. Content created primarily to manipulate search rankings through automation violates Google's spam policies.

For Page Quality ratings, raters use a five-point scale: Lowest (untrustworthy, deceptive, or harmful), Low (lacks important dimensions despite beneficial purpose), Medium (beneficial purpose achieved but not meriting High rating), High (beneficial purpose achieved well), and Highest (beneficial purpose achieved very well). For Needs Met ratings, the scale includes: Fails to Meet (FailsM), Slightly Meets (SM), Moderately Meets (MM), Highly Meets (HM), and Fully Meets (FullyM), with a special N/A category for certain query types.
