Relevance Signal

Relevance signals are indicators that AI systems use to evaluate content applicability. Learn how keyword matching, semantic relevance, authority, and freshness...

A quality signal is an indicator or metric that search engines and AI systems use to evaluate the excellence, reliability, and trustworthiness of content. These signals encompass factors like expertise, authoritativeness, trustworthiness (E-E-A-T), user engagement metrics, content depth, and backlink profiles that collectively determine whether content meets quality standards for ranking and citation in search results and AI responses.


A quality signal is a measurable indicator or metric that search engines, AI systems, and content evaluation frameworks use to assess the excellence, reliability, and trustworthiness of digital content. These signals represent the observable characteristics and behaviors that distinguish high-quality, authoritative content from low-quality or unreliable material. Quality signals operate across multiple dimensions—from individual page characteristics to domain-wide reputation factors to the credentials of content creators themselves. They form the foundation of how modern search engines and AI systems determine which content deserves visibility, ranking prominence, and citation in search results and generative AI responses. Understanding quality signals is critical for content creators, publishers, and brands seeking visibility not just in traditional search engines but increasingly in AI-powered platforms like ChatGPT, Perplexity, Google AI Overviews, and Claude.

The concept of quality signals has evolved significantly since the early days of search engines. In the 1990s and early 2000s, search engines relied primarily on simple signals like keyword density and exact-match domain names to assess content quality. However, as search technology matured and users demanded more relevant results, search engines began incorporating increasingly sophisticated quality signals. Google’s introduction of PageRank in 1998 represented a paradigm shift, treating backlinks as quality signals that indicated trust and content authority. The evolution continued with major algorithm updates: Google Panda (2011) introduced content quality assessment at scale, while subsequent updates like Penguin (2012) refined link quality evaluation. In 2022, Google expanded its quality framework by adding “Experience” to the original E-A-T concept, creating E-E-A-T to reflect the growing importance of first-hand experience. Today, quality assessment incorporates machine learning systems like RankBrain, RankEmbed, and DeepRank that analyze hundreds of signals simultaneously. According to research from Search Engine Land, over 80 distinct quality signals now influence how Google evaluates content across document, domain, and entity levels. This evolution reflects a fundamental shift from simple keyword matching to comprehensive quality assessment that mirrors how humans evaluate information credibility.

Quality signals operate across three distinct but interconnected levels that together create a comprehensive quality assessment framework. Document-level signals evaluate individual pieces of content, including factors like originality, comprehensiveness, grammar quality, citation practices, and how well the content satisfies user intent. These signals examine whether a specific page demonstrates expertise through in-depth coverage, proper sourcing, and clear presentation. Domain-level signals assess the overall quality and trustworthiness of an entire website or publishing platform, including factors like site architecture, security measures (HTTPS), business verification, link profile quality, and historical performance metrics. These signals help search engines understand whether a domain consistently publishes reliable content and maintains professional standards. Source entity-level signals evaluate the credentials, reputation, and track record of the content creator or publishing organization, including author credentials, publication history, peer endorsements, and professional recognition. This three-tiered approach allows search engines to assess quality from multiple perspectives: Is this specific piece of content excellent? Is the publisher trustworthy? Is the author credible? When all three levels demonstrate strong quality signals, content receives maximum visibility and citation potential.
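The three-level structure can be sketched as a small data model. This is a minimal illustration, not how any search engine actually scores content: the class name `QualityProfile`, the signal names, the 0-to-1 scale, and the choice of a geometric mean are all assumptions made for the example.

```python
from dataclasses import dataclass
from math import prod
from statistics import mean


@dataclass
class QualityProfile:
    """Hypothetical signal scores in [0, 1], grouped by the three levels."""
    document: dict[str, float]  # e.g. originality, sourcing
    domain: dict[str, float]    # e.g. HTTPS, link profile
    entity: dict[str, float]    # e.g. author credentials

    def level_scores(self) -> dict[str, float]:
        # Average the signals within each level.
        return {
            "document": mean(self.document.values()),
            "domain": mean(self.domain.values()),
            "entity": mean(self.entity.values()),
        }

    def combined(self) -> float:
        # Geometric mean (an assumed aggregation): one weak level pulls
        # the total down more than an arithmetic mean would.
        scores = list(self.level_scores().values())
        return prod(scores) ** (1 / len(scores))


# Strong page on a solid domain, but with an unknown author.
page = QualityProfile(
    document={"originality": 0.9, "sourcing": 0.9},
    domain={"https": 1.0, "link_profile": 0.8},
    entity={"author_credentials": 0.1},
)
print(page.level_scores())
print(round(page.combined(), 3))
```

The geometric mean is one way to express the idea that all three levels need to be strong together; a plain average would let a strong document and domain mask a weak entity level.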

E-E-A-T stands for Experience, Expertise, Authoritativeness, and Trustworthiness—the foundational quality signal framework that Google and other search systems use to evaluate content. Experience refers to whether the content creator has genuine, first-hand experience with the topic they’re writing about. A product review written by someone who actually used the product carries more weight than one written without personal experience. Expertise measures the content creator’s knowledge, skills, and subject matter mastery in their field. This can be demonstrated through author bios, professional certifications, case studies, and the depth of knowledge evident in the content itself. Authoritativeness evaluates the overall authority of the content creator, the content itself, and the website hosting it. This is reinforced through citations from authoritative sources, high-quality backlinks, and recognition as a leader in the field. Trustworthiness, which Google identifies as the most critical component, focuses on the reliability and factual accuracy of content, transparency about sources, and the credibility of the creator. According to Google’s official guidance, E-E-A-T signals are particularly important for YMYL (Your Money or Your Life) topics—content about health, finances, legal matters, and other areas where inaccuracy could significantly impact people’s wellbeing. Research from Clearscope indicates that approximately 78% of enterprises now use AI-driven content monitoring tools to track how their E-E-A-T signals influence visibility across search engines and AI platforms.

The application of quality signals differs meaningfully between traditional search engines and AI-powered systems, reflecting their distinct purposes and evaluation methodologies. Traditional search engines like Google use quality signals primarily to rank pages in search results, with emphasis on link authority, domain reputation, user engagement metrics, and content comprehensiveness. Google’s systems analyze quality signals to determine which pages best answer a user’s query and deserve top positions in search results. The ranking process involves hundreds of signals working in concert, with quality signals serving as one major category among many ranking factors. AI search systems like ChatGPT, Perplexity, and Google AI Overviews use quality signals differently—to select authoritative sources for training data and to identify which sources to cite when generating responses. These systems prioritize source credibility, factual accuracy, comprehensiveness, and original research more heavily than traditional search engines. An AI system generating a response about medical treatments will preferentially cite sources with strong medical expertise signals and trustworthiness indicators. This distinction is crucial for content creators: optimizing for traditional search rankings and optimizing for AI citation visibility require slightly different approaches, though strong quality signals benefit visibility across both environments. According to research from Search Engine Land, approximately 65% of enterprise content teams now track quality signals specifically to improve their visibility in AI-generated responses, recognizing that AI systems are becoming increasingly important discovery channels.

| Quality Signal Category | Traditional Search Engines | AI Search Systems | Content Monitoring Platforms |

|---|---|---|---|

| E-E-A-T Signals | High importance for YMYL topics; influences ranking | Critical for source selection; determines citation likelihood | Tracked to measure brand authority and trustworthiness |

| Backlink Quality | Primary ranking factor; domain authority indicator | Secondary factor; used to verify source credibility | Monitored to assess domain reputation and influence |

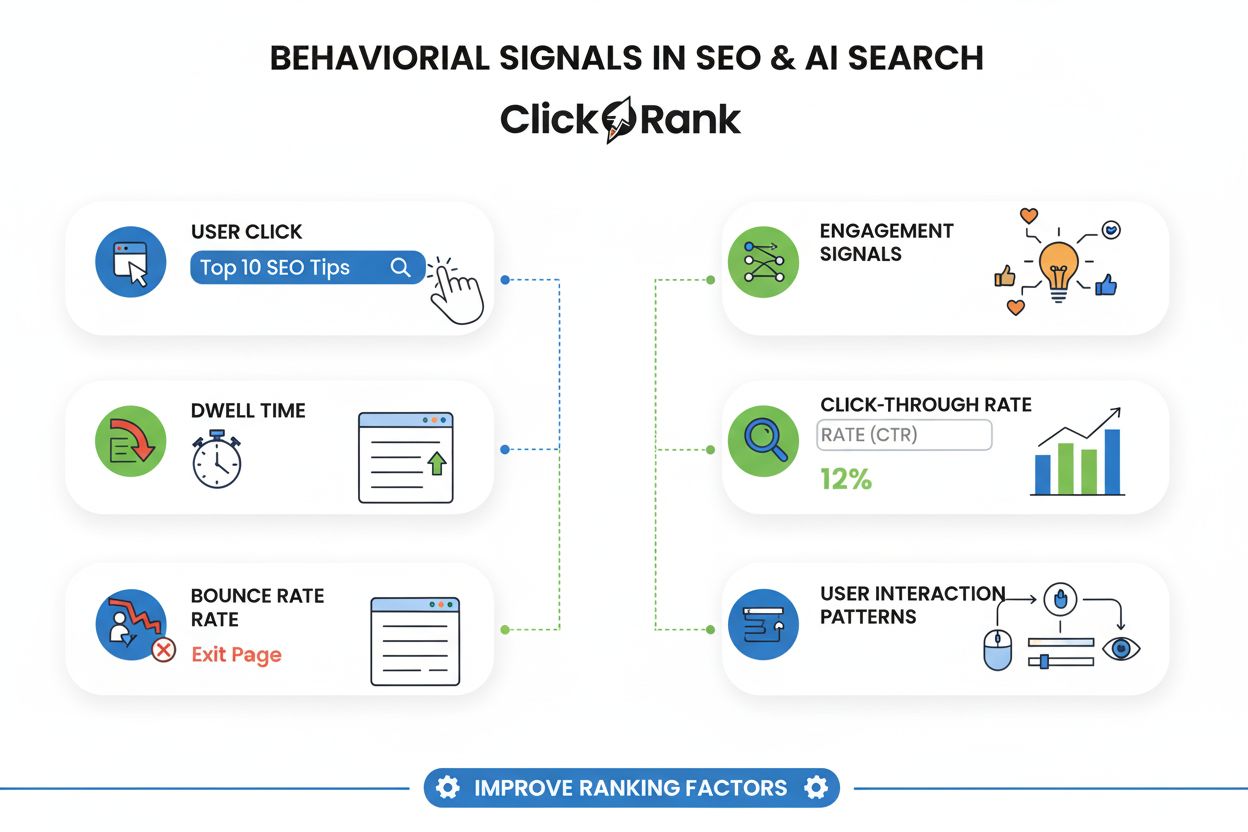

| User Engagement | CTR, dwell time, bounce rate influence rankings | Indirect signal; indicates content value and clarity | Tracked to measure content resonance and audience satisfaction |

| Content Freshness | Important for time-sensitive queries | Important for current information; less critical for evergreen topics | Monitored to ensure content remains relevant and accurate |

| Author Credentials | Supports E-E-A-T assessment; influences rankings | Primary factor in source selection for citations | Tracked to measure expert visibility and recognition |

| Content Comprehensiveness | Correlates with rankings; longer content often ranks higher | Critical for response quality; comprehensive sources preferred | Measured to assess content depth and information value |

| Domain Security (HTTPS) | Ranking factor; trust signal | Source credibility indicator | Monitored as basic trustworthiness requirement |

| Citation Practices | Supports authority signals; indicates research quality | Essential for source credibility; cited sources preferred | Tracked to measure content reliability and sourcing quality |

Search engines and AI systems implement quality signal evaluation through sophisticated machine learning systems that analyze hundreds of signals simultaneously. Google’s quality assessment systems include Coati (formerly Panda), which evaluates content quality at the site and document levels, and the Helpful Content System, which identifies content created primarily to help users versus content created to manipulate search rankings. These systems use classifiers (machine learning models trained on quality signals) to predict whether content meets quality standards. RankBrain, a Google machine learning system, helps interpret queries and relate them to relevant content, including queries it has not seen before. NavBoost, another Google system, ranks pages based on user interaction signals such as click behavior, treating user behavior as implicit feedback about content quality. AI systems like ChatGPT and Perplexity implement quality signal evaluation through their training data selection and retrieval-augmented generation (RAG) processes. When these systems need to cite sources for responses, they evaluate quality signals to identify the most credible, authoritative sources. They assess factors like author expertise, source domain reputation, content comprehensiveness, and factual accuracy. The systems learn to recognize quality signals through training on high-quality datasets and through reinforcement learning from human feedback that rewards citations of authoritative sources. AmICited and similar monitoring platforms track quality signals by analyzing how often brands and domains appear in AI responses, correlating visibility with quality signal strength. These platforms measure quality signals including backlink profiles, domain authority metrics, author credentials, content freshness, and user engagement indicators to help organizations understand what drives their visibility in AI search results.
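The monitoring idea at the end of this paragraph, measuring how often a brand appears in AI responses, can be approximated with a simple counter. The sketch below assumes the responses have already been collected as plain strings. The function name `mention_rate` and the brand "Acme Health" are hypothetical, and this is not a description of how AmICited or any other platform works.

```python
import re


def mention_rate(responses: list[str], brand: str) -> float:
    """Share of responses that mention `brand` as a whole phrase, ignoring case."""
    if not responses:
        return 0.0
    # \b keeps "Acme Health" from matching inside longer words.
    pattern = re.compile(rf"\b{re.escape(brand)}\b", re.IGNORECASE)
    hits = sum(1 for text in responses if pattern.search(text))
    return hits / len(responses)


# Hypothetical AI responses gathered for one prompt set.
sample = [
    "Acme Health recommends annual screening.",
    "According to ACME HEALTH, symptoms vary.",
    "Several clinics publish similar guidance.",
    "AcmeHealthy is an unrelated app.",
]
print(mention_rate(sample, "Acme Health"))
```

Tracking this rate over time, alongside changes in backlinks or author credentials, is one plausible way to look for the correlations the paragraph describes.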

Quality signals influence how content ranks through multiple interconnected mechanisms that work at different stages of the search and retrieval process. Initial relevance assessment uses quality signals to filter content, ensuring that only content meeting minimum quality standards enters the ranking pool. Content with poor grammar, thin coverage, or low domain authority may be filtered out before ranking algorithms even evaluate it. Ranking score calculation incorporates quality signals as inputs to machine learning models that predict which pages best satisfy user intent. A page with strong E-E-A-T signals, high-quality backlinks, and positive user engagement metrics receives a higher quality score, which boosts its ranking position. Reranking and personalization use quality signals to adjust rankings based on individual user preferences and search context. A user with a history of clicking on academic sources might see higher-quality, research-backed content ranked more prominently. Citation selection in AI systems uses quality signals to determine which sources appear in generated responses. When Perplexity generates a response about climate science, it preferentially cites sources with strong scientific expertise signals and trustworthiness indicators. Research from Backlinko analyzing over 11.8 million Google search results found that pages with more referring domains (a quality signal) consistently ranked higher than pages with fewer backlinks. Similarly, studies by SEMRush found significant correlations between quality signals like content depth, user engagement metrics, and Google rankings. The relationship between quality signals and rankings is not deterministic—a single strong quality signal doesn’t guarantee high rankings—but rather probabilistic, with multiple signals working together to influence ranking positions.
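The first two stages described above, filtering on a minimum quality bar and then scoring, can be sketched as follows. The threshold `MIN_QUALITY`, the linear blend, and the `Page` fields are illustrative assumptions; production ranking systems are far more complex and are not publicly specified.

```python
from dataclasses import dataclass


@dataclass
class Page:
    url: str
    relevance: float  # hypothetical query-match score in [0, 1]
    quality: float    # hypothetical aggregated quality score in [0, 1]


MIN_QUALITY = 0.3  # assumed cutoff for entering the ranking pool


def rank(pages: list[Page], quality_weight: float = 0.5) -> list[Page]:
    # Stage 1: drop pages below the minimum quality bar.
    pool = [p for p in pages if p.quality >= MIN_QUALITY]

    # Stage 2: blend relevance and quality into one ranking score.
    def score(p: Page) -> float:
        return (1 - quality_weight) * p.relevance + quality_weight * p.quality

    return sorted(pool, key=score, reverse=True)


pages = [
    Page("a", relevance=0.9, quality=0.2),  # highly relevant but filtered out
    Page("b", relevance=0.6, quality=0.9),
    Page("c", relevance=0.8, quality=0.5),
]
print([p.url for p in rank(pages)])
```

In this toy setup the most relevant page never reaches the scoring stage, which mirrors the point that no single strong signal guarantees a high position.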

Organizations can measure and monitor quality signals through a combination of tools, metrics, and analytical approaches that provide visibility into content quality across multiple dimensions. Backlink analysis tools like Ahrefs, SEMRush, and Moz measure link quality signals by analyzing backlink profiles, domain authority, anchor text quality, and link velocity. These tools help organizations understand how their link profile compares to competitors and identify opportunities to improve link quality. Content analysis platforms like Clearscope and Surfer SEO evaluate document-level quality signals including content comprehensiveness, keyword coverage, readability, and topical depth. These tools compare content against top-ranking competitors to identify quality gaps. User engagement analytics through Google Analytics and Search Console reveal signals like click-through rate, average session duration, bounce rate, and pages per session. These metrics indicate whether users find content satisfying and valuable. Brand monitoring tools track mentions, reviews, and social signals that contribute to domain-level trustworthiness and authority signals. Author credential verification can be assessed through LinkedIn profiles, publication history, speaking engagements, and professional certifications. AI visibility tracking platforms like AmICited specifically monitor how often brands and content appear in AI-generated responses, correlating visibility with quality signal strength. Organizations should establish baseline measurements of their quality signals, track changes over time, and benchmark against competitors to understand their relative quality position. According to research from Content Science Review, organizations that actively monitor quality signals report 34% higher organic traffic growth compared to those that don’t systematically track quality metrics.
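The baseline-and-track workflow can be made concrete with a few helper functions. This is a sketch under stated assumptions: the function names are made up for the example, and bounce rate uses the classic single-page-session definition, which may differ from how a given analytics product defines it.

```python
def click_through_rate(clicks: int, impressions: int) -> float:
    """Clicks divided by impressions; 0.0 when there were no impressions."""
    return clicks / impressions if impressions else 0.0


def bounce_rate(single_page_sessions: int, sessions: int) -> float:
    """Share of sessions that viewed only one page (classic definition)."""
    return single_page_sessions / sessions if sessions else 0.0


def relative_change(baseline: float, current: float) -> float:
    """Fractional change against the baseline (0.10 means +10%)."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (current - baseline) / baseline


# Hypothetical figures: last quarter's baseline versus this quarter.
baseline_ctr = click_through_rate(clicks=40, impressions=1000)
current_ctr = click_through_rate(clicks=50, impressions=1000)
print(f"CTR change: {relative_change(baseline_ctr, current_ctr):+.0%}")
```

The same `relative_change` helper can be applied to a competitor's figures to express the benchmarking step as a single comparable number.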

YMYL (Your Money or Your Life) content, meaning topics that could significantly impact a person’s health, financial stability, safety, or wellbeing, receives heightened quality signal scrutiny from search engines and AI systems. Google applies E-E-A-T principles more stringently to YMYL content because the consequences of inaccurate information are severe. Medical advice, financial planning guidance, legal information, and safety-related content all fall into the YMYL category. For YMYL content, quality signals must be exceptionally strong. Author credentials become paramount: medical content should be written by licensed healthcare professionals or reviewed by medical experts. Source citations must reference peer-reviewed research, clinical studies, or authoritative medical organizations. Domain authority matters significantly, with established medical institutions and health organizations receiving preference over newer or less-established sources. Factual accuracy is non-negotiable, with any errors potentially resulting in ranking penalties or exclusion from AI citation. Transparency about conflicts of interest is essential: financial content should disclose any affiliate relationships or financial incentives. Google’s Search Quality Rater Guidelines direct raters to hold YMYL pages to very high Page Quality standards. For organizations publishing YMYL content, investing in strong quality signals (particularly author credentials, expert review processes, and comprehensive sourcing) is not optional but essential for visibility. AI systems like ChatGPT and Perplexity apply similar heightened standards when selecting YMYL sources, preferring citations from established medical institutions, financial regulatory bodies, and legal authorities over less authoritative sources.

The emergence of AI-generated and AI-assisted content has created new considerations for quality signal evaluation. Search engines and AI systems now assess whether content was created with AI assistance, whether it was disclosed, and whether the AI-generated content meets quality standards. Google’s guidance on AI-generated content emphasizes that the origin of content (human-written vs. AI-generated) is less important than whether the content demonstrates quality and helpfulness. However, AI-generated content faces additional scrutiny on quality signals because it may lack the first-hand experience signal that human-created content can demonstrate. Disclosure of AI usage has become a quality signal itself—content that transparently discloses AI assistance is viewed more favorably than content that hides its AI origins. Human review and editing of AI-generated content strengthens quality signals by ensuring accuracy, adding original insights, and demonstrating human expertise. Original research and data in AI-assisted content significantly strengthen quality signals, as AI systems can synthesize information but cannot conduct original research. Organizations using AI to assist content creation should focus on maintaining strong quality signals by ensuring human expertise is evident, disclosing AI usage, fact-checking thoroughly, and adding original insights that AI alone cannot provide. According to research from Search Engine Journal, AI-assisted content that maintains strong E-E-A-T signals and includes human expertise performs comparably to purely human-written content in search rankings, while AI-generated content without human review shows 23% lower average rankings.

Quality signals continue to evolve as search technology advances and user expectations change. Emerging quality signal categories include content accessibility (readability for users with disabilities), environmental sustainability claims verification, and diversity representation in content creation. Entity-based quality assessment is becoming more sophisticated, with search engines increasingly evaluating quality signals at the entity level—assessing the overall quality and trustworthiness of organizations, authors, and publishers across all their content. Behavioral quality signals are expanding beyond traditional engagement metrics to include more nuanced user interactions like annotation behavior, sharing patterns, and how users navigate between related content. Fact-checking integration is becoming a more explicit quality signal, with search engines and AI systems increasingly incorporating automated fact-checking and verification of claims. Sustainability and ethical signals may become quality factors as organizations and users increasingly value responsible, ethical content creation. Multimodal quality assessment will evaluate quality signals across text, images, video, and audio content simultaneously, rather than treating each format separately. Personalized quality assessment may evolve to evaluate content quality relative to individual user expertise levels and information needs, rather than applying uniform quality standards. The integration of quality signals with emerging technologies like blockchain-based content verification and decentralized identity systems could create new ways to verify author credentials and content authenticity. Organizations should anticipate these changes by building content practices that emphasize genuine expertise, transparency, ethical standards, and user-centric value creation—qualities that will likely remain important regardless of how specific quality signals evolve.

Organizations that excel at quality signal optimization gain significant competitive advantages in both traditional search and AI-powered discovery channels. Search visibility advantage comes from strong quality signals that help content rank higher and appear more frequently in search results. AI citation advantage emerges as AI systems increasingly prefer citing sources with strong quality signals, making high-quality content more likely to appear in ChatGPT responses, Perplexity answers, and Google AI Overviews. Brand authority advantage develops as quality signals accumulate over time, establishing organizations as recognized authorities in their fields. User trust advantage results from consistent demonstration of expertise, trustworthiness, and user-centric value creation, leading to higher engagement, repeat visits, and word-of-mouth recommendations. Resilience advantage comes from strong quality signals that provide protection against algorithm updates—content with genuine quality is less vulnerable to ranking fluctuations than content optimized primarily for search engines. Content longevity advantage emerges as high-quality content continues to attract links, engagement, and citations long after publication, providing sustained visibility and value. Organizations competing in crowded markets increasingly recognize that quality signal optimization is not a short-term tactic but a fundamental business strategy. According to research from HubSpot, organizations that systematically optimize quality signals report 47% higher organic traffic, 34% higher conversion rates, and 56% higher customer lifetime value compared to organizations that focus primarily on keyword optimization. This data underscores that quality signals are not just ranking factors but business drivers that influence customer acquisition, trust, and long-term value creation.

Quality signals operate at three levels: document-level signals (content originality, grammar, citations), domain-level signals (site security, link profile quality, historical performance), and source entity-level signals (author credentials, reputation, peer endorsements). These signals work together to create a comprehensive quality assessment that search engines use to rank content and determine its suitability for citation in AI responses.

While ranking factors are specific algorithmic inputs that directly influence search positions, quality signals are broader indicators of content excellence that inform multiple ranking systems. Quality signals feed into various algorithms like Google's Helpful Content System and RankBrain, which then apply them as ranking factors. A single quality signal can influence multiple ranking factors simultaneously.

For platforms like AmICited that track brand mentions in AI responses, quality signals determine whether content gets cited by systems like ChatGPT, Perplexity, and Google AI Overviews. AI systems prioritize high-quality sources with strong E-E-A-T signals, making quality signal optimization essential for achieving visibility in generative AI search results and citations.

E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness) represents the core quality signal framework that Google and other search systems use. These four dimensions work together to assess whether content comes from credible sources with genuine knowledge. Strong E-E-A-T signals indicate high content quality, particularly for YMYL (Your Money or Your Life) topics where accuracy and reliability are critical.

Quality signals can be measured through various metrics including user engagement data (CTR, dwell time, bounce rate), backlink quality and quantity, content freshness, author credentials, and brand reputation indicators. Tools can track these signals across domains and documents, though some signals like trustworthiness require analysis of multiple data points to establish patterns and trends.

User engagement metrics like click-through rate, dwell time, and repeat visits serve as quality signals because they indicate whether users find content valuable and trustworthy. When users spend more time on a page, return frequently, or share content, these behaviors signal to search engines that the content meets user needs and demonstrates quality, which can improve rankings and citation likelihood.

Backlinks function as quality signals by indicating that other authoritative websites endorse and reference your content. High-quality backlinks from relevant, trustworthy domains signal that your content is authoritative and valuable. The quality, relevance, and diversity of backlinks matter more than quantity, with links from topically-related authority sites carrying more weight as quality indicators.

Different platforms weight quality signals differently based on their algorithms and purposes. Google emphasizes E-E-A-T and user behavior signals, while AI systems like ChatGPT and Perplexity prioritize source credibility and content comprehensiveness. Traditional search engines focus on link authority, while AI systems may weight original research, citations, and factual accuracy more heavily when selecting sources for responses.

