Query Fanout: How LLMs Generate Multiple Searches Behind the Scenes

Query Fanout is the AI process where a single user query is automatically expanded into multiple related sub-queries to gather comprehensive information from different angles. This technique helps AI systems understand true user intent and deliver more accurate, contextually relevant responses by exploring various interpretations and aspects of the original question.

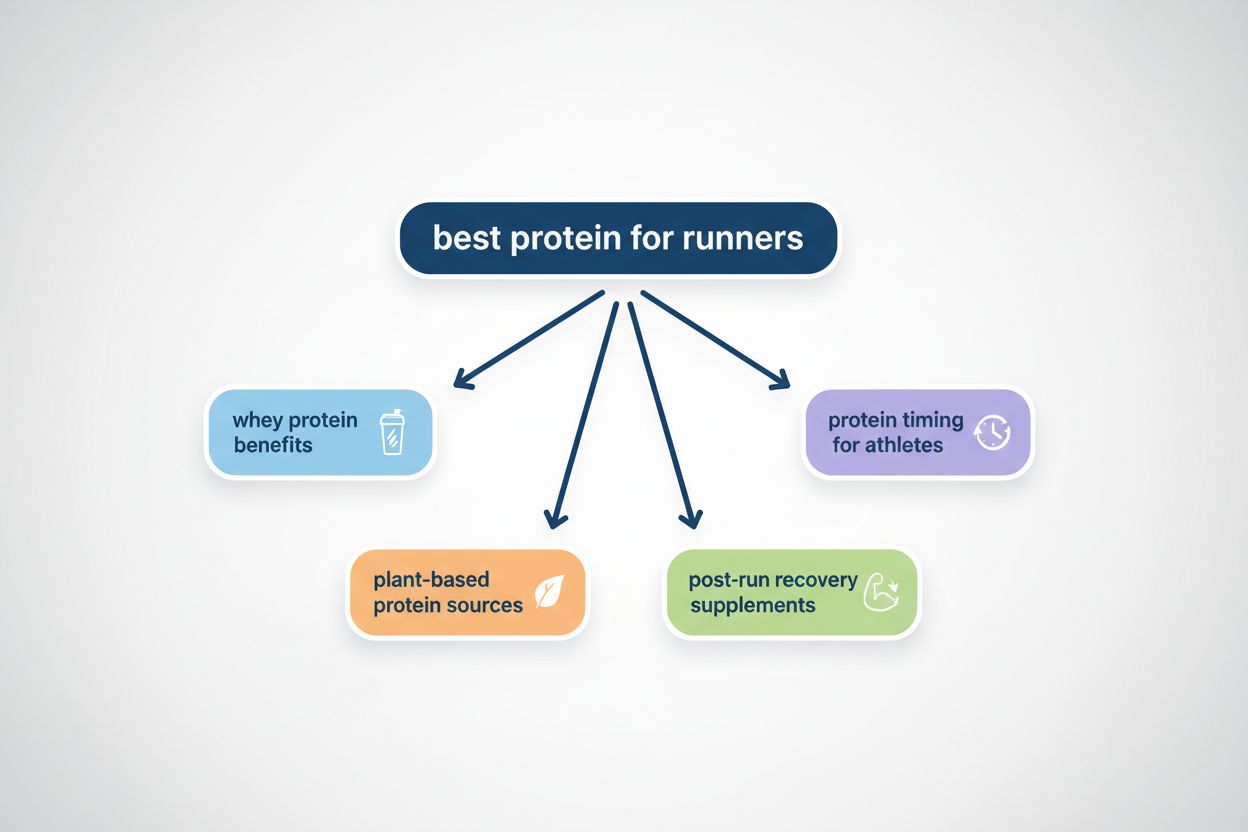

Query Fanout is the process where AI systems automatically expand a single user query into multiple related sub-queries to gather comprehensive information from different angles. Rather than simply matching keywords like traditional search engines, query fanout enables AI to understand the true intent behind a question by exploring various interpretations and related topics. For example, when a user searches for “best protein for runners,” an AI system using query fanout might automatically generate sub-queries like “whey protein benefits,” “plant-based protein sources,” and “post-run recovery supplements.” This technique is fundamental to how modern AI search systems like Google AI Mode, ChatGPT, Perplexity, and Gemini deliver more accurate and contextually relevant responses. By breaking down complex queries into simpler, more focused sub-questions, AI systems can retrieve more targeted information and synthesize it into comprehensive answers that address multiple dimensions of what users are actually looking for.

The technical mechanism of query fanout follows a systematic five-step process that turns a single query into a synthesized, cited answer. First, the AI system interprets the original query to identify its core intent and potential ambiguities. Next, it generates multiple sub-queries based on inferred themes, topics, and related concepts that might help answer the original question more completely; Google describes AI Mode as using a custom version of Gemini to break a question into different subtopics and issue multiple queries simultaneously on the user’s behalf. These sub-queries are then executed in parallel across the search infrastructure. The system then clusters the retrieved results by topic, entity type, and intent, layering citations accordingly so that each aspect of the answer is properly sourced. Finally, the AI synthesizes this information into a single coherent response that addresses the original query from multiple angles. In practice, Google’s AI Mode might execute eight or more background searches for a moderately complex query, while the more advanced Deep Search feature can issue dozens or even hundreds of queries over several minutes to provide thorough research on complex topics such as purchasing decisions.

| Step | Description | Example |
|---|---|---|
| 1. Interpretation | AI analyzes original query for intent | “best CRM for small business” |
| 2. Sub-query Generation | System creates related variations | “free CRM tools”, “CRM with email automation” |
| 3. Parallel Execution | Multiple searches run simultaneously | All sub-queries searched at once |
| 4. Result Clustering | Results grouped by topic/entity | Group 1: Free tools, Group 2: Paid solutions |
| 5. Synthesis | AI combines results into coherent answer | Single comprehensive response with citations |
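The five steps can be sketched as a toy Python pipeline. Everything in it is an assumption for illustration: `TOY_INDEX`, the hard-coded sub-query generator, and the `search` stub stand in for the LLM and search backend a real system would use, and none of it reflects how Google AI Mode or any other product is actually implemented.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor

# Hypothetical toy index mapping a sub-query to (url, topic) hits.
# A real system would call a search backend instead.
TOY_INDEX = {
    "free CRM tools": [("example.com/free-crm", "free tools")],
    "CRM with email automation": [("example.com/crm-email", "paid solutions")],
    "CRM pricing for small business": [("example.com/crm-pricing", "paid solutions")],
}


def interpret(query: str) -> str:
    # Step 1: an LLM would infer intent here; this sketch only normalizes text.
    return query.strip().lower()


def generate_sub_queries(intent: str) -> list[str]:
    # Step 2: hard-coded stand-in for LLM-generated sub-queries.
    return list(TOY_INDEX) if "crm" in intent else [intent]


def search(sub_query: str) -> list[tuple[str, str]]:
    # Stand-in for one call to a search backend.
    return TOY_INDEX.get(sub_query, [])


def fan_out(query: str) -> dict[str, list[str]]:
    sub_queries = generate_sub_queries(interpret(query))
    # Step 3: run every sub-query concurrently; map() keeps input order.
    with ThreadPoolExecutor(max_workers=len(sub_queries)) as pool:
        results = list(pool.map(search, sub_queries))
    # Step 4: group hits by topic, keeping each URL as a citation.
    clusters: dict[str, list[str]] = defaultdict(list)
    for hits in results:
        for url, topic in hits:
            clusters[topic].append(url)
    return dict(clusters)


def synthesize(query: str, clusters: dict[str, list[str]]) -> str:
    # Step 5: an LLM would write prose; here we just list cited sources per topic.
    lines = [f"Answer for: {query}"]
    for topic, urls in sorted(clusters.items()):
        lines.append(f"- {topic}: " + ", ".join(f"[{u}]" for u in urls))
    return "\n".join(lines)


print(synthesize("best CRM for small business", fan_out("best CRM for small business")))
```

The thread pool mirrors the "parallel execution" step: sub-queries are independent, so their latency overlaps instead of adding up.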

AI systems employ query fanout for several strategic reasons that fundamentally improve response quality and reliability:

Ambiguity Resolution - A single query like “Jaguar speed” could refer to either the car manufacturer’s performance specifications or the animal’s hunting velocity, and query fanout helps the system test multiple interpretations to identify the most likely user intent.

Factual Grounding and Hallucination Reduction - By retrieving evidence from multiple independent sources for each branch of the query, the AI can cross-check claims and verify information before presenting it, lowering the risk of confident-but-wrong answers.

Perspective Diversity - Query fanout pulls information across different content types—clinical studies, buyer’s guides, forum discussions, and brand websites—ensuring that answers balance authority with practical applicability.

Complex Query Handling - The technique excels at handling complex, layered queries that require synthesis of information from multiple domains.

Novel Answer Generation - Query fanout enables AI systems to answer questions that haven’t been clearly answered online before by combining multiple pieces of information to draw new conclusions that no single source explicitly addresses.
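One simple way to approximate the cross-checking described under Factual Grounding is to accept a claimed value only when enough distinct domains agree on it, and to flag the sources that disagree. The sketch below assumes plain majority voting over domains; the `cross_check` function and the evidence about a fictional company are hypothetical, and production systems likely use far richer verification.

```python
from collections import Counter
from urllib.parse import urlparse


def cross_check(evidence: list[tuple[str, str]], min_sources: int = 2):
    """Return (agreed_value, outlier_urls) for one factual claim.

    agreed_value is None (with no outliers) when no value is backed
    by at least `min_sources` distinct domains.
    """
    votes: Counter = Counter()
    seen: set[tuple[str, str]] = set()
    for url, value in evidence:
        domain = urlparse(url).netloc
        # Count each (domain, value) pair once so one site cannot outvote others.
        if (domain, value) not in seen:
            seen.add((domain, value))
            votes[value] += 1
    if not votes:
        return None, []
    value, count = votes.most_common(1)[0]
    if count < min_sources:
        return None, []
    return value, [url for url, v in evidence if v != value]


# Hypothetical evidence: founding year of a fictional "ExampleCRM".
evidence = [
    ("https://a.example/about", "2009"),
    ("https://a.example/press", "2009"),  # same domain: counted once
    ("https://b.example/profile", "2009"),
    ("https://c.example/blog", "2011"),
]
agreed, outliers = cross_check(evidence)
print(agreed, outliers)
```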

The distinction between query fanout and traditional search represents a fundamental shift in how information retrieval works. Traditional search engines operate primarily through keyword matching, returning a ranked list of results based on how well individual pages match the exact terms in a query, with users responsible for refining their searches if initial results don’t satisfy their needs. Query fanout, by contrast, focuses on intent understanding rather than keyword matching, with the system automatically exploring multiple angles and interpretations without requiring user intervention. In traditional search, users must often conduct several follow-up searches to get a complete picture—searching for “best CRM software,” then “free CRM tools,” then “CRM with email automation”—whereas query fanout handles this exploration automatically within a single interaction. This shift has profound implications for content creators and marketers, who can no longer rely solely on optimizing for individual keywords but must instead ensure their content addresses the full cluster of related topics and intents that AI systems will explore. The change also fundamentally alters SEO strategy, moving focus from ranking for specific search terms to achieving visibility across multiple related queries and building topical authority that positions content as relevant to broader topic clusters.

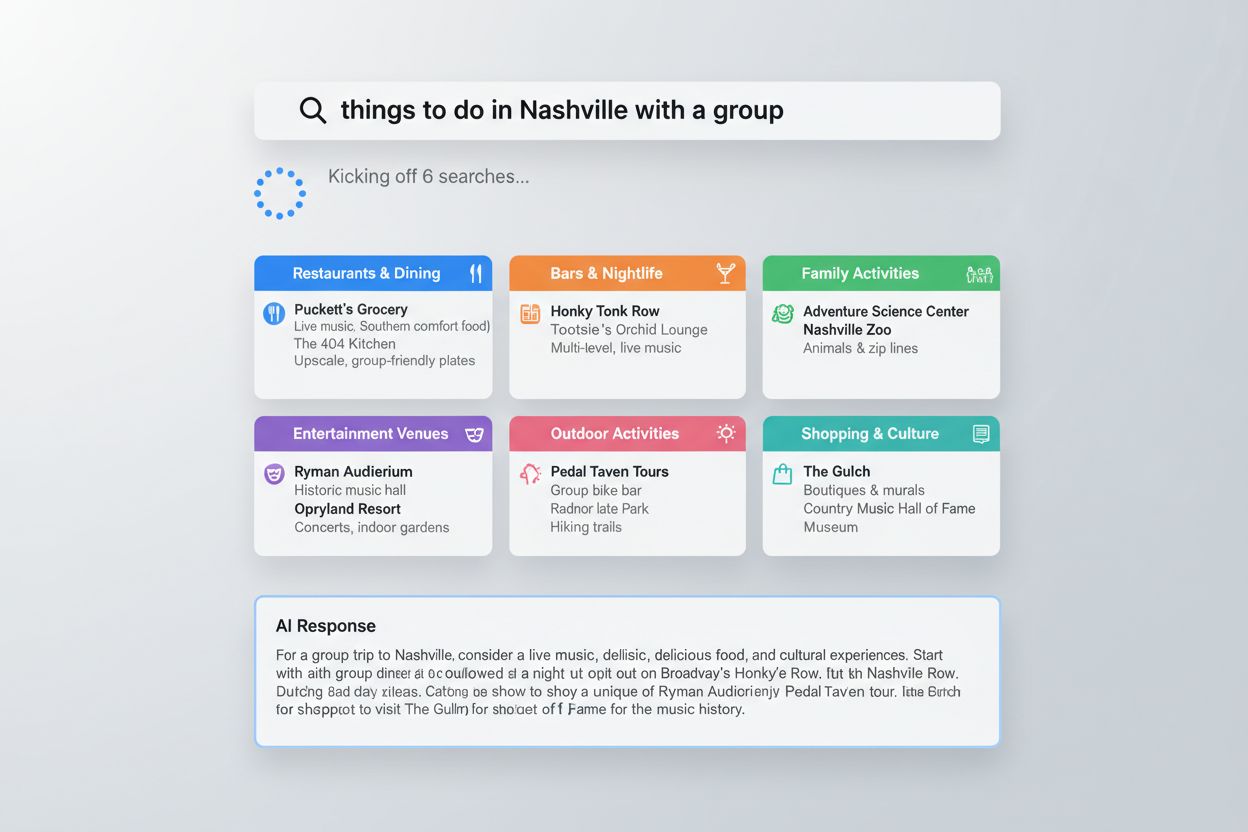

Query fanout manifests in practical, observable ways across major AI platforms. When a user asks Google AI Mode “things to do in Nashville with a group,” the system automatically fans out the query into sub-questions about great restaurants, bars, family-friendly activities, and entertainment venues, then synthesizes the results into a comprehensive guide tailored to group activities. ChatGPT demonstrates similar behavior when handling “best X” queries, addressing multiple angles such as “best for budget,” “best for features,” and “best for specific use cases” within a single response. Deep Search shows the technique’s value for complex decisions: when researching home safes, the system might spend several minutes executing dozens of queries about fire resistance ratings, insurance implications, specific product models, and user reviews, then deliver a highly detailed response with links to specific products and side-by-side comparisons. Beyond these examples, query fanout powers shopping recommendations, restaurant suggestions, and stock comparisons. Google, for instance, connects it to internal data sources such as Google Finance and the Shopping Graph, in which more than 2 billion product listings are refreshed every hour. Because of this real-time data integration, query fanout isn’t limited to static information; it can incorporate current pricing, availability, market data, and other information that changes constantly.

Query fanout fundamentally changes how brands achieve visibility in AI-generated responses, creating both opportunities and challenges for organizations seeking to influence how they’re portrayed in AI answers. Because query fanout causes AI systems to explore multiple sub-queries, brands must now appear in results across multiple related searches, not just the primary query. A company optimized only for “CRM software” might miss opportunities to appear in results for “free CRM tools” or “CRM with email automation.” Being featured favorably in AI responses has become much more important, because these responses directly influence consumer decisions and often reduce users’ need to consult other information sources. Understanding the distinction between AI mentions (unlinked references to your brand within AI responses) and AI citations (linked references to your content) is crucial: citations provide both visibility and credibility, while mentions raise awareness without direct traffic attribution. This is where monitoring tools like AmICited.com become essential. They track how your brand appears across multiple AI platforms (Google AI Mode, ChatGPT, Perplexity, Gemini, and others), showing not just whether you’re mentioned, but where you appear in the response hierarchy, how often you’re cited, and what sentiment surrounds your brand mentions. Organizations that understand query fanout and actively optimize for it gain a competitive advantage in AI search visibility, as they’re more likely to appear in the many sub-query results that collectively shape the final AI response.
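To see why coverage across sub-queries matters, the sketch below compares a brand’s domain against the domains cited for each fanned-out sub-query and lists the gaps. The `results` data, the `yourcrm.example` domain, and the `coverage_report` helper are hypothetical; in practice this data would come from a monitoring tool or from manually sampling AI responses.

```python
# Hypothetical sample: domains cited in AI answers for each fanned-out sub-query.
results = {
    "CRM software": ["yourcrm.example", "rival.example"],
    "free CRM tools": ["rival.example"],
    "CRM with email automation": ["yourcrm.example"],
    "CRM for small business": ["other.example"],
}


def coverage_report(brand_domain: str, results_by_subquery: dict[str, list[str]]) -> dict:
    covered = [q for q, domains in results_by_subquery.items() if brand_domain in domains]
    missed = [q for q, domains in results_by_subquery.items() if brand_domain not in domains]
    total = len(results_by_subquery)
    return {"covered": covered, "missed": missed, "coverage": len(covered) / total if total else 0.0}


report = coverage_report("yourcrm.example", results)
print(report["missed"])  # sub-queries where the brand never appears
```

A brand that ranks for the head term can still show 50% coverage once the fanned-out variants are counted, which is the gap the paragraph above describes.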

Optimizing for query fanout requires a fundamentally different approach than traditional keyword-focused SEO. The first step is to identify core topics directly related to your business and expertise, as these represent the areas where you can most credibly and authoritatively address the multiple angles that query fanout explores. Next, create topic clusters consisting of a central pillar page that provides a broad overview of a core topic, surrounded by cluster pages that address specific subtopics; this structure helps AI systems recognize your content as a comprehensive resource across multiple related queries. Plan comprehensive content that covers not just the primary topic but all the subtopics, comparisons, and question variations that AI systems might explore when fanning out a query, so that each page serves as a hub that satisfies multiple intents. Write for NLP (natural language processing) by using clear definitions, full sentences, and self-contained sections that AI systems can easily parse and extract information from, rather than relying on keyword density or other traditional SEO tactics. Implement schema markup to add machine-readable labels to different types of data on your pages, helping AI systems interpret your content more accurately: for example, use Product schema to label product names and images, or Offer schema for pricing and availability information. Finally, focus on semantic completeness by ensuring your content clearly references the related entities, concepts, and relationships that appear across the fan-out branches, and build a strong internal linking strategy with contextual anchor text to signal topical depth and help AI systems understand how your content pieces relate to each other.
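As a minimal sketch of the Product and Offer markup mentioned above, the snippet below builds a schema.org JSON-LD block with Python’s standard `json` module. The product name, image URL, price, and the `product_jsonld` helper are placeholders; `@context`, `@type`, `name`, `image`, `offers`, `price`, `priceCurrency`, and `availability` are standard schema.org properties.

```python
import json


def product_jsonld(name: str, image_url: str, price: float, currency: str, in_stock: bool) -> str:
    """Return a <script> tag containing schema.org Product + Offer JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "image": image_url,
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",  # schema.org accepts price as text or number
            "priceCurrency": currency,  # ISO 4217 code
            "availability": "https://schema.org/InStock" if in_stock else "https://schema.org/OutOfStock",
        },
    }
    return '<script type="application/ld+json">\n' + json.dumps(data, indent=2) + "\n</script>"


# Hypothetical product used only for illustration.
snippet = product_jsonld("Example Fire-Resistant Home Safe", "https://www.example.com/img/safe.png", 249, "USD", True)
print(snippet)
```

The printed tag would go in the page’s HTML; generating it from the same data that renders the visible price helps keep the markup and the page in sync.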

The way you structure and format content directly affects how effectively AI systems can extract and use information for query fanout responses. Write in chunks: self-contained, meaningful sections that can stand alone and be easily processed, retrieved, and summarized by AI systems. Use full sentences and restate context where helpful rather than relying on fragmented bullet points or keyword-heavy copy. Provide clear definitions when introducing new concepts, as AI systems often seek out definitions as part of the query fanout process and tend to favor pages that explicitly define terms. Use descriptive subheadings to break content into logical sections and a proper heading hierarchy (H2, H3, H4) to show relationships between topics, helping AI systems identify content related to highly specific queries. Structure content with tables and lists to create easily parsable information that AI systems can extract and reorganize, and use clear, conversational language that avoids jargon, overly complex sentence structures, and unnecessary fluff. The Stripe website exemplifies these practices, with solutions pages tailored to different business stages and use cases, subsections providing direct, detailed information on relevant subtopics, and broad coverage across blog posts, customer stories, support documentation, and other resources. This multi-format, deeply structured approach helps AI systems recognize Stripe’s relevance to various intents and extract useful information for fanned-out queries, contributing to its strong visibility across AI search platforms like Google AI Mode, SearchGPT, ChatGPT, Perplexity, and Gemini.
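The idea of self-contained chunks can be illustrated with a small splitter that breaks a Markdown page at H2 and H3 headings and prefixes each chunk with its heading trail, so a retrieved chunk still carries its context. This is an assumption about how a publisher might audit their own pages; the `chunk_by_headings` helper is hypothetical and does not describe how any AI system actually chunks content.

```python
import re

# H2/H3 headings only; H1 and H4+ lines are treated as body text in this sketch.
HEADING = re.compile(r"^(#{2,3})\s+(.+?)\s*$")


def chunk_by_headings(page_title: str, markdown_text: str) -> list[str]:
    chunks: list[str] = []
    path: list[str] = []  # current heading trail, e.g. [H2] or [H2, H3]
    body: list[str] = []

    def flush() -> None:
        text = " ".join(line.strip() for line in body if line.strip())
        if text:
            # Prefix with the heading trail so the chunk still makes sense on its own.
            chunks.append(" > ".join([page_title, *path]) + ": " + text)

    for line in markdown_text.splitlines():
        m = HEADING.match(line)
        if m:
            flush()
            body = []
            title = m.group(2)
            # An H2 starts a new trail; an H3 nests under the current H2.
            path = [title] if len(m.group(1)) == 2 else path[:1] + [title]
        else:
            body.append(line)
    flush()
    return chunks


page = """Intro text about CRMs.
## Free CRM tools
Free plans usually limit contacts.
### Limits
Most free tiers cap users.
## CRM with email automation
Automation sends follow-ups."""

sections = chunk_by_headings("CRM Guide", page)
for s in sections:
    print(s)
```

A chunk that reads poorly once it is printed on its own line is a hint that the section relies on context from elsewhere on the page.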

Measuring success in query fanout optimization requires specialized tools and metrics that go beyond traditional SEO analytics. Tools like Semrush’s AI Visibility Toolkit and AmICited provide insights into your brand’s performance across multiple AI platforms, showing your share of voice for non-branded queries across Google AI Mode, SearchGPT, ChatGPT, Perplexity, Gemini, and other systems. These platforms reveal not just whether your brand is mentioned, but where it appears in the response hierarchy (whether you’re cited first, second, or further down), which affects how much visibility and influence each appearance carries. Tracking mentions and citations separately is crucial: citations provide both visibility and traffic, while mentions raise awareness, and understanding this distinction helps you prioritize optimization efforts. Sentiment analysis of AI responses shows how your brand is portrayed, whether AI systems emphasize your strengths or highlight weaknesses, allowing you to identify areas for improvement in how you’re discussed. Competitive benchmarking against rivals reveals gaps in your AI visibility strategy and opportunities to outperform competitors in specific query clusters. Continuous monitoring matters because AI systems evolve rapidly, new platforms emerge, and query patterns change; regular tracking lets you adapt your strategy and maintain visibility as the landscape shifts.
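The metrics above can be computed from logged AI responses. The sketch assumes a hypothetical log format (for each response to a non-branded query, the brands referenced in order and whether each reference was linked) and defines share of voice as a brand’s references divided by all tracked references. Tools such as Semrush or AmICited may define these metrics differently, and the sketch also assumes each brand appears at most once per response.

```python
from collections import defaultdict

# Hypothetical log: for each AI response, (brand, was_linked) in order of appearance.
responses = [
    [("YourCRM", True), ("RivalCRM", False)],
    [("RivalCRM", True)],
    [("OtherCRM", False), ("YourCRM", False)],
]


def visibility_metrics(responses: list[list[tuple[str, bool]]]) -> dict:
    stats = defaultdict(lambda: {"citations": 0, "mentions": 0, "positions": []})
    total = 0
    for refs in responses:
        for position, (brand, linked) in enumerate(refs, start=1):
            s = stats[brand]
            # Linked references count as citations, unlinked ones as mentions.
            s["citations" if linked else "mentions"] += 1
            s["positions"].append(position)
            total += 1
    report = {}
    for brand, s in stats.items():
        count = s["citations"] + s["mentions"]
        report[brand] = {
            "citations": s["citations"],
            "mentions": s["mentions"],
            "avg_position": sum(s["positions"]) / len(s["positions"]),
            "share_of_voice": count / total,
        }
    return report


metrics = visibility_metrics(responses)
print(metrics["YourCRM"])
```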

The trajectory of query fanout points toward increasingly sophisticated query understanding and more complex AI reasoning processes. As AI systems evolve, they will likely develop even more nuanced abilities to decompose queries into sub-questions, understand implicit context, and synthesize information across increasingly diverse sources. The blurring lines between traditional and AI search will continue, with traditional search engines incorporating more AI-driven query understanding while AI systems increasingly integrate real-time search capabilities, creating a hybrid landscape where optimization strategies must address both paradigms. This evolution necessitates a fundamental shift in how organizations approach search optimization, moving away from keyword ranking toward contextual visibility and ensuring content appears across the full spectrum of related queries that AI systems explore. Topical authority—establishing deep, comprehensive expertise across related topics—becomes increasingly important as AI systems reward content that demonstrates mastery of entire topic clusters rather than individual keywords. The emerging best practices for query fanout optimization emphasize semantic completeness, entity relationships, content structure, and cross-platform visibility monitoring, requiring organizations to think holistically about how their content ecosystem addresses the multiple angles and interpretations that AI systems will explore when answering user questions.

Query Fanout is the automatic process where AI systems break down a single query into multiple sub-queries to understand true intent and gather comprehensive information. Query Expansion, by contrast, is a technique to add related terms to improve retrieval, which can be either manual or automatic. Query Fanout is more sophisticated and intent-focused, while query expansion is primarily keyword-focused.
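The difference between the two techniques shows up in the output type: expansion returns one widened query, while fanout returns several separate queries. The synonym table, facet templates, and both helper functions below are hypothetical stand-ins for the thesaurus, embeddings, or LLM a real system would use.

```python
# Hypothetical synonym table (stand-in for a thesaurus or embedding lookup).
SYNONYMS = {"crm": ["customer relationship management"], "cheap": ["affordable", "low-cost"]}

# Hypothetical facet templates (stand-in for LLM-generated sub-queries).
FACET_TEMPLATES = ["free {q} tools", "{q} with email automation", "{q} pricing"]


def expand_query(query: str) -> str:
    """Query expansion: still ONE query, widened with OR-ed related terms."""
    parts = []
    for word in query.lower().split():
        # Quote multi-word alternatives so they are treated as phrases.
        alts = [f'"{a}"' if " " in a else a for a in SYNONYMS.get(word, [])]
        parts.append(f"({' OR '.join([word, *alts])})" if alts else word)
    return " ".join(parts)


def fan_out_queries(query: str) -> list[str]:
    """Query fanout: SEVERAL separate queries, one per facet of intent."""
    return [template.format(q=query) for template in FACET_TEMPLATES]


print(expand_query("cheap CRM"))
print(fan_out_queries("CRM"))
```

Each fanned-out query is retrieved and ranked independently, so a page can surface for one facet even if it would never rank for the widened single query.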

The number varies based on query complexity. Simple queries might generate 1-3 sub-queries, while moderately complex queries typically produce 5-8 sub-queries. Advanced features like Google's Deep Search can execute dozens or even hundreds of background queries over several minutes for exceptionally thorough research on complex topics.

Optimizing for Query Fanout can indirectly help traditional search. Content optimized this way tends to perform better in traditional results because the optimization process requires comprehensive topic coverage, clear structure, and semantic completeness, all factors that search engines reward. However, the primary benefit is improved visibility in AI-generated responses rather than traditional search rankings.

Major AI platforms implementing Query Fanout include Google AI Mode, ChatGPT, Perplexity, Gemini, and other LLM-based search systems. Each platform implements the technique slightly differently, but all use some form of query decomposition to improve answer quality and relevance.

To optimize content for Query Fanout, create topic clusters with pillar and cluster pages, write comprehensive content covering subtopics and related questions, implement schema markup for structured data, use clear headings and formatting, build strong internal linking, and focus on semantic completeness. Write for natural language processing by using clear definitions and self-contained sections that AI systems can easily parse.

Query Fanout increases opportunities for AI citations by ensuring your content appears in results for multiple related sub-queries. When AI systems explore various angles of a question, they're more likely to discover and cite your content if it comprehensively addresses those different angles and perspectives.

Query Fanout significantly improves user experience by enabling AI systems to deliver more accurate, comprehensive answers without requiring users to refine their queries multiple times. Users get better-targeted responses that address multiple dimensions of their question in a single interaction.

Query Fanout can help reduce hallucinations by cross-checking information across multiple sources. When AI systems retrieve evidence from different sources for each branch of a fanned-out query, they can verify claims and identify outliers, lowering the risk of confident-but-wrong answers.
