Readability Score for AI Search: How to Optimize Content for AI Answers

Learn what readability scores mean for AI search visibility. Discover how Flesch-Kincaid, sentence structure, and content formatting impact AI citations in Chat...

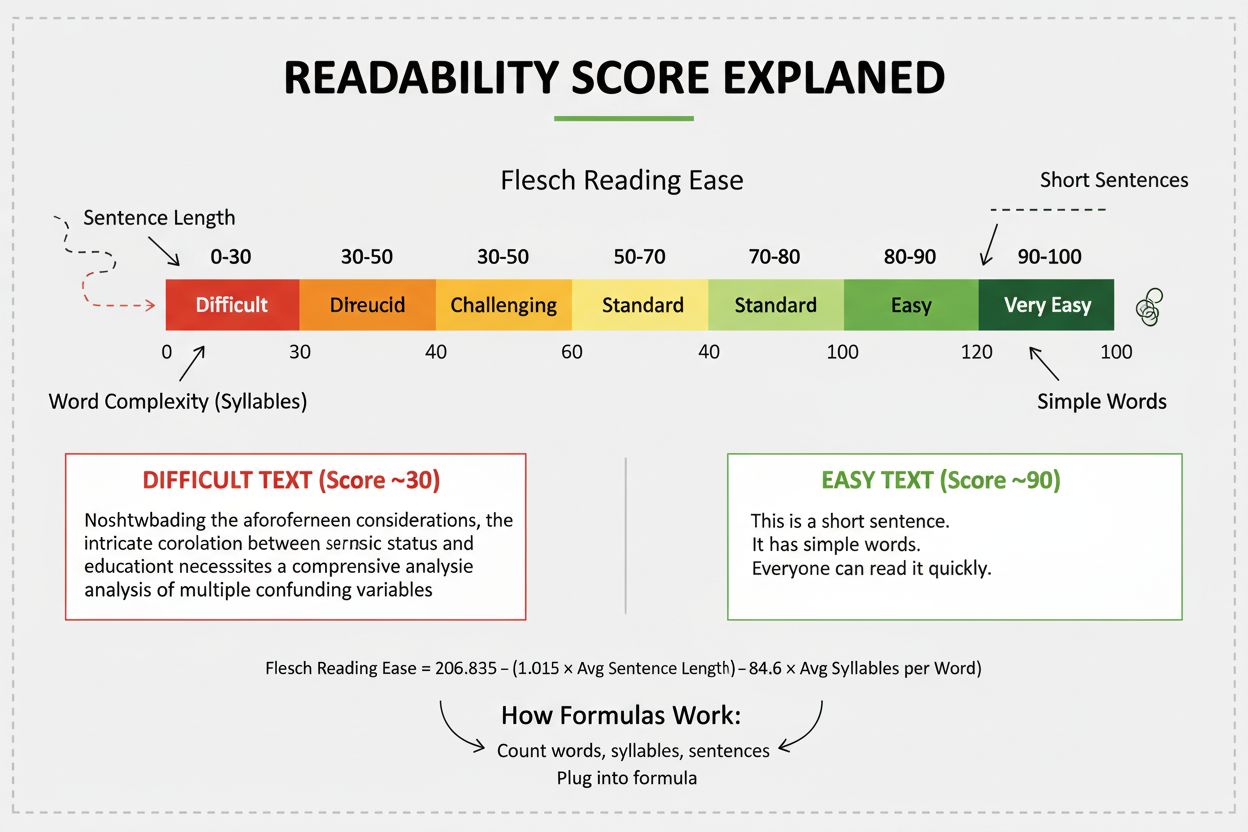

A readability score is a quantitative metric that measures how easily readers can understand written content by analyzing linguistic factors such as sentence length, word complexity, and syllable count. It is calculated with formulas such as Flesch Reading Ease, which produces a score that typically ranges from 0 to 100 (higher means easier to read), or Flesch-Kincaid Grade Level, which expresses difficulty as a U.S. school grade.


A readability score is a quantitative measurement of how easily readers can understand written content, based on specific linguistic and structural features of the text. Scores are calculated with mathematical formulas that examine factors such as average sentence length, word complexity (usually measured by syllable or character count), and vocabulary difficulty. Depending on the formula, the result is either an ease score from 0 to 100, where higher values indicate easier text, or an estimated U.S. grade level. These metrics have become essential tools for content creators, marketers, educators, and organizations seeking to ensure their written materials are accessible to their intended audiences. The concept emerged from linguistic research showing that certain textual characteristics correlate with comprehension difficulty, making it possible to predict how challenging a piece of content will be for readers at different education levels.

The modern readability movement gained momentum in the 1940s, when Rudolf Flesch, a consultant with the Associated Press, developed the Flesch Reading Ease formula (published in 1948) to help make newspapers easier to read. His work showed that readability could be measured objectively rather than judged by editorial instinct alone. In the 1970s, the U.S. Navy adapted Flesch’s work to create the Flesch-Kincaid Grade Level, which expresses text difficulty as a U.S. school grade level. The formula was developed to ensure that technical manuals used in military training could be understood by personnel with varying educational backgrounds. Numerous other readability formulas were developed over the same decades, including the Gunning Fog Index, SMOG Index, Dale-Chall Formula, and Coleman-Liau Index, each measuring text complexity in a slightly different way. More than 70 years later, readability formulas remain widely used across industries, with research indicating that 60% of U.S. corporations have adopted them to evaluate customer-facing communications. The Plain Writing Act of 2010 reinforced this trend by requiring federal agencies to write documents for the public in clear, plain language, making plain writing a legal obligation for federal government communications.

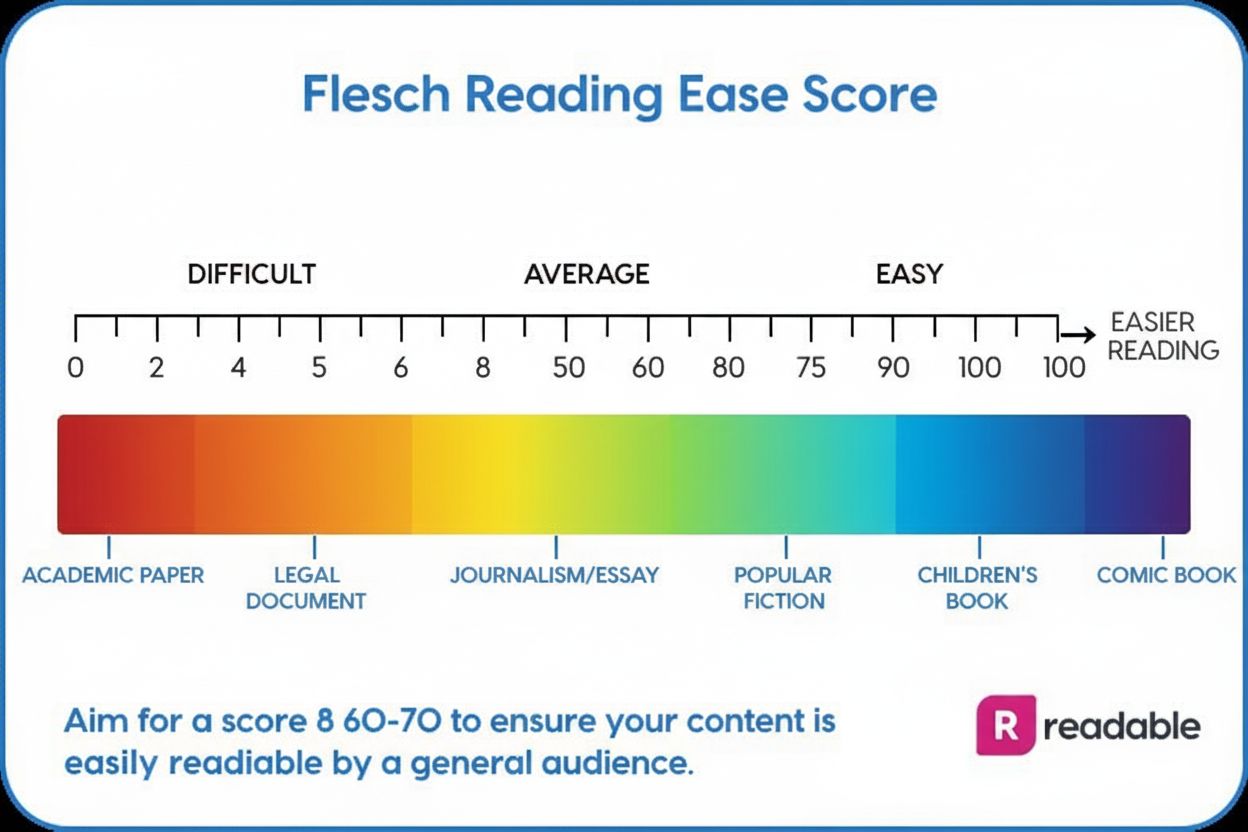

Readability formulas are algorithms that analyze linguistic characteristics of text to estimate reading difficulty. The most commonly used formula, Flesch Reading Ease, uses two variables: the average number of words per sentence and the average number of syllables per word. It weights these factors to produce a score that usually falls between 0 and 100, where 100 represents very easy text and 0 very difficult text (extreme passages can fall outside this range). The Flesch-Kincaid Grade Level uses the same two variables but converts the result into a U.S. grade-level equivalent, which makes it intuitive in educational contexts; for example, a score of 8 indicates that the text requires an eighth-grade reading level. Other formulas use different inputs: the Gunning Fog Index combines sentence length with the percentage of complex words (those with three or more syllables), while the Dale-Chall Formula checks vocabulary against a list of 3,000 familiar words. The SMOG Index focuses on polysyllabic words per sentence, making it particularly useful for healthcare and technical documentation. Because each formula weights linguistic factors differently, they produce slightly different results for the same text, which is why content creators often use several readability tools to get a fuller picture of their content’s accessibility.
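To make the two Flesch formulas concrete, here is a minimal Python sketch. The coefficients are the standard published ones; the syllable counter is an assumption made for illustration (a vowel-group heuristic with a silent-final-e rule), so its scores will differ somewhat from tools that use pronunciation dictionaries, and the sentence and word splitting is deliberately simple.

```python
import re


def count_syllables(word: str) -> int:
    """Approximate syllables by counting vowel groups (heuristic, not dictionary-based)."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    # A final 'e' is usually silent ("make"), except in endings like "-le" ("table").
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def text_stats(text: str) -> tuple[int, int, int]:
    """Return (sentences, words, syllables) using simple regex splitting."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence and one word")
    syllables = sum(count_syllables(w) for w in words)
    return len(sentences), len(words), syllables


def flesch_reading_ease(text: str) -> float:
    sentences, words, syllables = text_stats(text)
    return 206.835 - 1.015 * (words / sentences) - 84.6 * (syllables / words)


def flesch_kincaid_grade(text: str) -> float:
    sentences, words, syllables = text_stats(text)
    return 0.39 * (words / sentences) + 11.8 * (syllables / words) - 15.59


for sample in ("It was a lazy attempt.", "It was a lackadaisical attempt."):
    print(f"{sample!r}: FRE={flesch_reading_ease(sample):.2f}, "
          f"FKGL={flesch_kincaid_grade(sample):.2f}")
```

The two sample sentences score about 83.3 and 32.6 on Flesch Reading Ease (grade levels of about 2.9 and 10.0), showing how a single polysyllabic word in a short sentence moves both scores.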

Understanding what readability scores mean is essential for applying them effectively to content strategy. The Flesch Reading Ease scale is usually interpreted as follows: 90-100 is very easy to read and suitable for 11-year-olds; 80-90 is easy; 70-80 is fairly easy; 60-70 is plain English, easily understood by 13-15-year-olds; 50-60 is fairly difficult; 30-50 is difficult and best suited to college-level readers; and 0-30 is very difficult, best understood by university graduates. For general audiences, content creators should aim for a score between 60 and 70, which corresponds to an 8th-9th grade reading level. The Flesch-Kincaid Grade Level maps directly to school grades: 0-3 is kindergarten to early elementary, 3-6 is elementary, 6-9 is middle school, 9-12 is high school, 12-15 is college, and 15-18 is post-graduate. Research indicates that the average reading level of U.S. adults is around 7th-8th grade, so most readers comprehend content written at this level more easily. Additionally, an estimated one in ten website visitors is dyslexic, and many more have cognitive difficulties or learning disabilities, which makes readability particularly important for inclusive web design. Readability scores are also only a partial predictor of comprehension: research published in Reading Research Quarterly found that readability formulas account for only 40% of the differences in how well people understand text, with readers’ prior knowledge and experience playing an equally significant role.
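The Flesch Reading Ease bands can be encoded as a simple lookup. This sketch follows Flesch’s commonly published table; treating a boundary score such as 70 as belonging to the easier band, and the helper name `in_general_audience_range`, are choices made for illustration rather than part of any standard.

```python
# (lower bound, label) pairs from Flesch's commonly published table, highest first.
FRE_BANDS = [
    (90.0, "very easy (about 5th grade)"),
    (80.0, "easy (about 6th grade)"),
    (70.0, "fairly easy (about 7th grade)"),
    (60.0, "plain English (8th-9th grade)"),
    (50.0, "fairly difficult (10th-12th grade)"),
    (30.0, "difficult (college)"),
]


def interpret_fre(score: float) -> str:
    """Map a Flesch Reading Ease score to its band; boundary scores go to the easier band."""
    for lower_bound, label in FRE_BANDS:
        if score >= lower_bound:
            return label
    # Everything below 30, including negative scores, is "very difficult".
    return "very difficult (college graduate)"


def in_general_audience_range(score: float, low: float = 60.0, high: float = 70.0) -> bool:
    """True if the score falls in the 60-70 target suggested for general audiences."""
    return low <= score <= high


print(interpret_fre(65), in_general_audience_range(65))
```

A score of 65, for example, lands in the plain-English band and inside the 60-70 target range.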

| Formula Name | Scale Type | Primary Factors | Best Use Case | Score Range | Interpretation |
|---|---|---|---|---|---|
| Flesch Reading Ease | 0-100 Scale | Sentence length, syllables per word | General audiences, marketing content | 0-100 | Higher = easier to read |
| Flesch-Kincaid Grade Level | Grade Equivalents | Sentence length, syllables per word | Educational materials, textbooks | 0-18+ | Matches U.S. school grades |
| Gunning Fog Index | Grade Equivalents | Sentence length, complex words (3+ syllables) | Business writing, technical docs | 6-17+ | Years of education needed |
| SMOG Index | Grade Equivalents | Polysyllabic words, sentence length | Healthcare, medical writing | 6-18+ | Estimates grade level needed |
| Dale-Chall Formula | Reading Scale | Sentence length, familiar word list | General audiences, public documents | 4.9 or below to 10+ | Maps to grade-level bands |
| Coleman-Liau Index | Grade Equivalents | Letters per 100 words, sentences per 100 words | Digital content, web copy | -3 to 16+ | U.S. grade level equivalent |
| Automated Readability Index (ARI) | Grade Equivalents | Characters per word, words per sentence | Technical writing, software documentation | 0-14+ | Grade level required |
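The grade-level formulas in the table can be computed from a handful of text counts. The sketch below takes the counts as input rather than deriving them from text, and it simplifies Gunning Fog by treating every word of three or more syllables as complex (the original definition excludes proper nouns and some suffixed or compound words). Dale-Chall is left out because it needs the familiar-word list, and the sample counts are invented for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class TextCounts:
    sentences: int
    words: int
    letters: int        # letters in words, excluding spaces and punctuation
    polysyllables: int  # words with three or more syllables


def gunning_fog(c: TextCounts) -> float:
    # Simplification: every 3+ syllable word counts as "complex".
    return 0.4 * (c.words / c.sentences + 100 * c.polysyllables / c.words)


def smog(c: TextCounts) -> float:
    # Polysyllable count normalized to a 30-sentence sample.
    return 1.0430 * math.sqrt(c.polysyllables * 30 / c.sentences) + 3.1291


def coleman_liau(c: TextCounts) -> float:
    letters_per_100_words = c.letters / c.words * 100
    sentences_per_100_words = c.sentences / c.words * 100
    return 0.0588 * letters_per_100_words - 0.296 * sentences_per_100_words - 15.8


def automated_readability_index(c: TextCounts) -> float:
    # ARI uses characters per word; letters are used as the character count here.
    return 4.71 * (c.letters / c.words) + 0.5 * (c.words / c.sentences) - 21.43


counts = TextCounts(sentences=5, words=100, letters=450, polysyllables=10)
for name, formula in [("Gunning Fog", gunning_fog), ("SMOG", smog),
                      ("Coleman-Liau", coleman_liau),
                      ("ARI", automated_readability_index)]:
    print(f"{name}: {formula(counts):.1f}")
```

With these hypothetical counts the four formulas return grade estimates between roughly 9 and 12 for the same text, which illustrates why different tools disagree.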

Readability scores depend on several interconnected linguistic factors that together determine text complexity. Sentence length is perhaps the most significant: long sentences force readers to hold more information in working memory at once, which increases cognitive load. Commonly cited benchmarks rate sentences of 11 words as easy to read, 21 words as fairly difficult, and 29 words or more as very difficult for most readers. Word length and syllable count also correlate with comprehension difficulty, because longer words with more syllables are harder to process than short, simple ones. For example, “it was a lackadaisical attempt” is harder to read than “it was a lazy attempt,” even though both convey the same meaning. Vocabulary complexity goes beyond syllable count to include how familiar words are; technical jargon, abstract concepts, and uncommon words all increase reading difficulty. Passive voice affects readability too, although most readability formulas do not measure it directly: passive constructions make readers mentally reorganize the sentence to identify the actor and the action, whereas active voice presents information in a more natural, direct order. Punctuation and formatting provide visual cues that help readers parse meaning; well-placed periods, commas, and white space reduce cognitive strain. Sentence variety matters as well: texts with monotonous sentence structures become tedious and harder to follow, while varied sentence lengths and structures keep readers engaged. Finally, syntactic complexity (the arrangement of grammatical elements) affects comprehension; sentences with multiple clauses, embedded phrases, and complex grammatical structures take more effort to parse than simple, straightforward ones.
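Sentence length is the easiest of these factors to check automatically. This sketch labels each sentence using the benchmarks above; the "moderate" label for 12-20 words is an assumption that fills the gap between the quoted figures, and the regex-based sentence splitting will mishandle abbreviations such as "e.g.".

```python
import re


def classify_sentence_length(n_words: int) -> str:
    """Label a sentence by word count: ~11 easy, ~21 fairly difficult, 29+ very difficult."""
    if n_words <= 11:
        return "easy"
    if n_words < 21:
        return "moderate"  # assumed label for the range between the benchmarks
    if n_words < 29:
        return "fairly difficult"
    return "very difficult"


def sentence_report(text: str) -> list[tuple[int, str]]:
    """Split on sentence-ending punctuation and label each sentence by word count."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    report = []
    for sentence in sentences:
        n_words = len(re.findall(r"[A-Za-z0-9']+", sentence))
        report.append((n_words, classify_sentence_length(n_words)))
    return report


draft = ("It was a lazy attempt. "
         "The committee, which had met several times over the previous month without "
         "reaching any agreement, finally approved the revised budget late on Friday.")
print(sentence_report(draft))
```

Here the first sentence (5 words) is labeled easy and the second (23 words) fairly difficult, pointing to the sentence that would benefit from being split.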

The business implications of readability scores are substantial and measurable across multiple performance metrics. HubSpot’s analysis of over 50,000 blog posts found that content with optimal readability scores (around 60-70 on the Flesch Reading Ease scale) generated approximately 30% more leads than content with poor readability scores. Lower bounce rates are another reported outcome: studies show that posts scoring 70-80 on the Flesch Reading Ease scale have 30% lower bounce rates than poorly readable content. Engagement metrics improve as well; visitors spend more time on pages with readable content, explore more pages within a site, and are more likely to complete desired actions such as signing up for newsletters or making purchases. Conversion rates tend to rise with readability, because readers who can easily understand content are more likely to trust it and act on its recommendations. By one estimate, 86% of users favor readable websites, which suggests that readability is a basic expectation rather than a nice-to-have feature. From an accessibility perspective, improved readability benefits users with dyslexia or cognitive disabilities as well as non-native speakers, expanding the potential audience for content. Readable content also improves brand perception: organizations that communicate clearly are perceived as more professional, trustworthy, and competent. Customer satisfaction increases when documentation, product descriptions, and support materials are easy to understand, which reduces support inquiries and improves retention. Legal compliance matters too: the Plain Writing Act of 2010 requires federal agencies to write clearly for the public, and many organizations voluntarily adopt readability standards to demonstrate a commitment to accessibility and user-centered design.

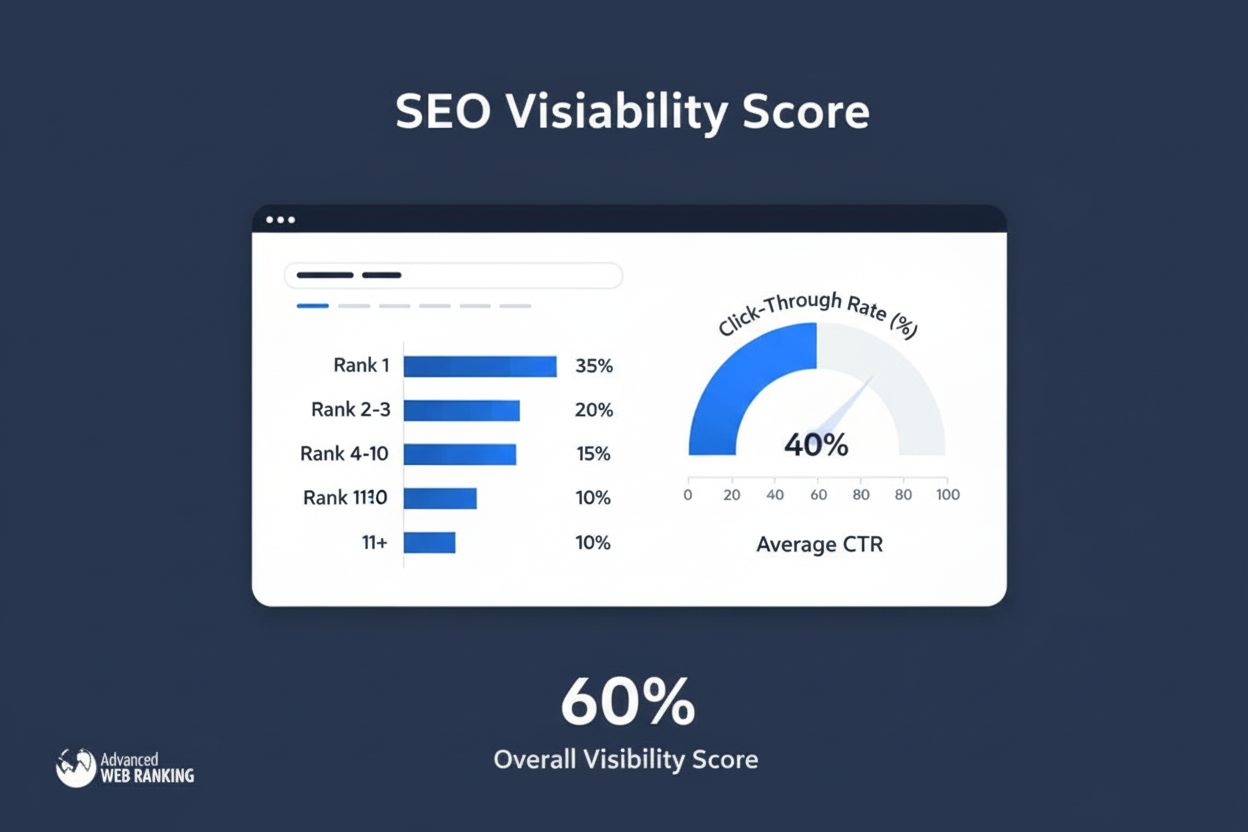

The emergence of AI content monitoring platforms like AmICited has added a new dimension to the importance of readability scores. When content appears in AI-generated responses from systems like ChatGPT, Perplexity, Google AI Overviews, and Claude, the readability of the source material can affect how accurately those systems extract, summarize, and cite its information. Readable content is likely easier for AI language models to parse, because its structure and key concepts are easier to identify and summarize. AI systems trained on large text corpora may also tend to favor and cite sources with clear, well-structured writing. Conversely, poorly readable content may lead AI systems to misinterpret it, summarize it inaccurately, or fail to cite it properly. Research on the readability of AI-generated abstracts reports that content at a Flesch-Kincaid Grade Level of about 8.4-8.5 performs better in downstream applications than less readable content. For organizations using AmICited to monitor brand mentions in AI responses, understanding readability is crucial for ensuring accurate representation. Optimizing content for AI citation requires balancing traditional SEO readability standards with AI comprehension requirements. Structured content with clear headings, bullet points, and logical flow is more likely to be cited accurately by AI systems. Technical documentation and white papers benefit particularly from readability optimization, as AI systems frequently cite these sources when answering complex queries. The intersection of readability and AI monitoring is an emerging best practice in which organizations must consider both human readers and machine learning systems when evaluating content quality.

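Improving readability scores requires the systematic application of established writing techniques: simplifying vocabulary, keeping sentences and paragraphs short, preferring active voice, adding transition words, and breaking up text with subheadings, bullet points, and white space.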

Despite their widespread adoption, readability formulas have significant limitations that content creators must understand. Because they analyze only surface features, formulas ignore semantic meaning; a sentence can score as readable while conveying confusing or contradictory information. Results are also inconsistent across formulas: the Flesch Reading Ease and Gunning Fog Index may rate the same passage differently because they weight factors differently. Visual elements are overlooked entirely; formulas cannot assess how headings, images, white space, and layout affect comprehension, yet these elements significantly affect actual readability. Jargon is handled poorly: formulas count specialized vocabulary as complex even when readers in that field find it familiar and easy to understand. Formulas were also designed primarily around native English speakers and may not accurately assess readability for non-native speakers, people with learning disabilities, or those using assistive technologies. They cannot measure engagement, that is, whether content is interesting, motivating, or emotionally compelling, even though these factors significantly influence comprehension and retention. Nuances of writing style such as tone, voice, rhetorical devices, and figurative language can enhance or diminish comprehension but are invisible to readability algorithms. Finally, the effects of context and prior knowledge go unmeasured; a reader’s background knowledge, familiarity with the subject, and cultural context strongly affect comprehension regardless of readability scores. Research published in Reading Research Quarterly found that readability formulas account for only 40% of comprehension variance, with reader characteristics and prior knowledge accounting for the remaining 60%.
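The first limitation is easy to demonstrate. The sketch below computes Flesch Reading Ease with a crude vowel-group syllable count (a simplifying assumption) and compares two sentences with opposite meanings, then a sentence with its words scrambled into nonsense. Because the formula sees only word, sentence, and syllable counts, each pair receives an identical score, so the loop prints `True` twice.

```python
import re


def rough_fre(text: str) -> float:
    """Flesch Reading Ease with a crude vowel-group syllable count."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    syllables = sum(max(1, len(re.findall(r"[aeiouy]+", w.lower()))) for w in words)
    return 206.835 - 1.015 * len(words) / len(sentences) - 84.6 * syllables / len(words)


pairs = [
    ("The dog bit the man.", "The man bit the dog."),  # opposite meanings
    ("The committee approved the budget after a long debate.",
     "Debate long a after budget the approved committee the."),  # word salad
]
for original, altered in pairs:
    print(rough_fre(original) == rough_fre(altered))
```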

The future of readability assessment is moving beyond traditional formula-based approaches toward more sophisticated, context-aware methods. Natural Language Processing (NLP) and machine learning are enabling readability evaluation that considers semantic meaning, discourse structure, and context rather than surface-level features alone. According to research published in the Proceedings of the National Academy of Sciences, NLP tools can now predict readability with up to 70% accuracy in certain contexts. AI-powered readability tools are emerging that evaluate content quality across several dimensions at once, giving more comprehensive feedback than traditional formulas. Personalized readability assessment is another frontier, where scores could be tailored to individual reader profiles, including education level, domain expertise, and reading preferences. Multimodal content analysis will increasingly incorporate visual elements, multimedia, and interactive components into readability evaluation, recognizing that modern content extends beyond text. Real-time readability feedback is becoming standard in writing platforms, allowing creators to optimize readability as they write rather than after they finish. Integration with AI monitoring systems like AmICited will make readability scores increasingly important for accurate AI citation and content representation. Accessibility standards increasingly treat readability as a core component of digital accessibility; WCAG, for example, includes a Reading Level success criterion (3.1.5, Level AAA). Industry-specific standards are also developing, as the healthcare, legal, financial, and technical sectors establish readability benchmarks tailored to their audiences. The convergence of traditional readability metrics with AI comprehension requirements suggests that future content optimization will need to serve human readers and machine learning systems at the same time, creating new challenges and opportunities for content creators and for organizations monitoring their brand presence across AI platforms.

The ideal readability score depends on your target audience, but most SEO experts recommend aiming for a Flesch Reading Ease score between 60-70 (equivalent to 8th-9th grade level) for general audiences. Research shows that content scoring in this range generates approximately 30% more leads than poorly readable content. For technical or specialized audiences, slightly lower scores may be acceptable if the content serves their expertise level.

Readability scores have a direct impact on user engagement metrics. Studies show that posts with high readability scores (70-80 Flesch Reading Ease) experience 30% lower bounce rates than poorly readable content. By one estimate, 86% of users favor readable websites, and improved readability can also increase time on page, engagement signals that many SEO practitioners treat as indicators of content quality.

The most widely used readability formulas include the Flesch Reading Ease (0-100 scale), Flesch-Kincaid Grade Level (U.S. grade equivalents), Gunning Fog Index, SMOG Index, Dale-Chall Formula, and Coleman-Liau Index. Each formula analyzes linguistic factors such as sentence length, syllable count, and word complexity. The Flesch-Kincaid Grade Level is particularly popular and is built into Microsoft Word and various SEO tools.

Readability scores are increasingly important for AI content monitoring platforms like AmICited that track brand mentions across AI systems such as ChatGPT, Perplexity, and Google AI Overviews. When your content appears in AI responses, its readability can affect how well the AI system extracts, summarizes, and presents your information. Higher readability scores can make content more likely to be cited accurately by AI systems.

Yes, extremely high readability scores (90-100) can indicate oversimplified content that may lack depth or sophistication. A score of 90-100 suggests content suitable for 11-year-olds, which may not be appropriate for professional, technical, or academic audiences. The goal is to match your readability score to your target audience's education level and expectations while maintaining content quality and authority.

Sentence length and word complexity are the two primary factors in most readability formulas. Sentences averaging 11 words are considered easy to read, while those at 21 words become fairly difficult, and 29+ words are very difficult. Similarly, words with fewer syllables are easier to comprehend than polysyllabic words. Research shows that limiting sentences to 15-20 words and using simpler vocabulary significantly improves readability scores.

Readability formulas have significant limitations: they ignore content relevance, cultural context, and reader prior knowledge; they overlook visual formatting and layout; they treat all jargon equally despite domain-specific familiarity; and they cannot measure engagement or emotional impact. Research shows readability formulas account for only 40% of comprehension differences, with reader experience and background knowledge playing equally important roles in understanding.

To improve readability scores, simplify vocabulary by avoiding jargon, break content into shorter paragraphs (3-4 sentences maximum), use active voice instead of passive voice, limit sentences to 15-20 words, incorporate transition words, use subheadings and bullet points, and add white space. Tools like Hemingway Editor, Yoast SEO, and Readable provide real-time feedback on readability issues and specific recommendations for improvement.
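The numeric guidelines in this answer (15-20 words per sentence, 3-4 sentences per paragraph) are easy to check automatically. The sketch below is a hypothetical helper, not how Hemingway Editor, Yoast SEO, or Readable work internally; it assumes the upper ends of those ranges as limits, splits paragraphs on blank lines, and splits sentences on ".", "!", or "?" followed by whitespace.

```python
import re

# Hypothetical defaults taken from the upper ends of the guidelines above.
MAX_SENTENCE_WORDS = 20
MAX_PARAGRAPH_SENTENCES = 4


def readability_warnings(draft: str) -> list[str]:
    """Return warnings for paragraphs and sentences that exceed the limits."""
    warnings = []
    # Paragraphs are separated by blank lines.
    paragraphs = [p for p in re.split(r"\n\s*\n", draft) if p.strip()]
    for p_num, paragraph in enumerate(paragraphs, start=1):
        # Sentences end with ., ! or ? followed by whitespace (abbreviations will confuse this).
        sentences = [s for s in re.split(r"(?<=[.!?])\s+", paragraph.strip()) if s]
        if len(sentences) > MAX_PARAGRAPH_SENTENCES:
            warnings.append(f"paragraph {p_num}: {len(sentences)} sentences "
                            f"(max {MAX_PARAGRAPH_SENTENCES})")
        for s_num, sentence in enumerate(sentences, start=1):
            n_words = len(re.findall(r"[A-Za-z0-9']+", sentence))
            if n_words > MAX_SENTENCE_WORDS:
                warnings.append(f"paragraph {p_num}, sentence {s_num}: {n_words} words "
                                f"(max {MAX_SENTENCE_WORDS})")
    return warnings


sample = "One. Two. Three. Four. Five.\n\nShort sentence here."
for warning in readability_warnings(sample):
    print(warning)
```

For the sample draft, only the five-sentence first paragraph is flagged; the short second paragraph passes both checks.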
