Keyword Stuffing

Learn what keyword stuffing is, why it's harmful to SEO, how Google detects it, and best practices to avoid this black-hat tactic that damages rankings and user...

Search engine spam refers to deliberate manipulation tactics used to artificially influence search engine rankings through deceptive techniques that violate search engine guidelines. These practices include keyword stuffing, cloaking, link farms, and hidden text, designed to deceive algorithms rather than provide genuine value to users.


Search engine spam, also known as spamdexing, refers to the deliberate manipulation of search engine indexes through deceptive techniques designed to artificially inflate a website’s ranking position. The term encompasses a broad range of unethical practices that violate search engine guidelines, including keyword stuffing, cloaking, link farms, hidden text, and sneaky redirects. These tactics prioritize deceiving search algorithms over providing genuine value to users, fundamentally undermining the integrity of search results. When search engines detect spam on a website, they typically impose penalties ranging from ranking reductions to complete removal from search indexes, making the site invisible to potential visitors.

Search engine spam has existed since the early days of the internet, when search algorithms were less sophisticated and easier to manipulate. In the 1990s and early 2000s, spamdexing techniques proliferated as webmasters discovered they could artificially boost rankings through simple keyword repetition and link manipulation. As search engines evolved, particularly after Google’s introduction of PageRank and later algorithm updates like Panda (2011) and Penguin (2012), the detection and penalization of spam became increasingly sophisticated. The emergence of SpamBrain, Google’s AI-based spam-prevention system introduced in 2022, marked a significant shift toward machine learning-driven spam detection. Today, search engines analyze hundreds of ranking signals, which has made traditional spam tactics largely ineffective, although spammers continue to develop more sophisticated manipulation attempts.

Keyword stuffing remains one of the most recognizable spam tactics, involving the unnatural repetition of target keywords throughout page content, meta tags, and hidden elements. This practice artificially inflates keyword density without providing coherent, valuable information to readers. Cloaking represents a more deceptive approach, where different content is served to search engine crawlers than what users see in their browsers, typically using JavaScript or server-side techniques to detect and differentiate between bot and human visitors. Link farms and Private Blog Networks (PBNs) constitute another major category of spam, involving networks of low-quality websites created solely to generate artificial backlinks to target sites. These networks exploit the fact that search engines historically weighted backlinks heavily as ranking signals, though modern algorithms have become adept at identifying and devaluing such artificial link schemes.
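Keyword density is simple to compute, which makes the idea of "artificially inflated" density concrete. The Python sketch below is an illustrative heuristic, not how Google detects stuffing: it counts non-overlapping occurrences of a phrase and divides the words they cover by the page's total word count. The function names and the 0.05 flagging threshold are hypothetical choices for this example, not a published search-engine limit.

```python
import re

# Hypothetical flagging threshold for this sketch; not a published search-engine limit.
STUFFING_THRESHOLD = 0.05


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def keyword_density(text: str, phrase: str) -> float:
    """Share of words in `text` covered by non-overlapping occurrences of `phrase`."""
    words, target = tokenize(text), tokenize(phrase)
    if not words or not target:
        return 0.0
    n, hits, i = len(target), 0, 0
    while i <= len(words) - n:
        if words[i:i + n] == target:
            hits += 1
            i += n  # skip past the match so occurrences never overlap
        else:
            i += 1
    return hits * n / len(words)


def looks_stuffed(text: str, phrase: str, threshold: float = STUFFING_THRESHOLD) -> bool:
    """Flag text whose density for `phrase` exceeds the (hypothetical) threshold."""
    return keyword_density(text, phrase) > threshold


page = "Cheap shoes. Buy cheap shoes here. Cheap shoes cheap shoes for everyone who loves cheap shoes."
print(keyword_density(page, "cheap shoes"))  # 0.625
print(looks_stuffed(page, "cheap shoes"))    # True
```

A single ratio is only a rough signal: a short product page can legitimately repeat its product name, so a high value is a prompt to reread the copy rather than proof of spam.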

Sneaky redirects send visitors to URLs other than the ones search engines crawl, often redirecting users to irrelevant or malicious content after they click through from search results. Hidden text and links involve placing content in colors matching the background, using extremely small fonts, or positioning text off-screen, making it invisible to human visitors while remaining visible to search crawlers. Comment spam and forum spam exploit user-generated content by automatically posting links and promotional material across blogs, forums, and social media platforms. Content scraping involves copying content from other websites without permission, often without modification, then republishing it to artificially inflate a site’s content volume and capture search traffic. Each of these tactics represents an attempt to game search algorithms rather than earn rankings through legitimate means.
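Hidden-text tricks such as background-matching colors, zero-size fonts, and off-screen positioning leave fingerprints in CSS. The following Python sketch, built only on the standard library's `html.parser`, scans inline `style` attributes for a few of those patterns. The rules and the 1000px text-indent cutoff are illustrative assumptions. Real pages often hide text through external stylesheets, which this sketch does not read, and legitimate interfaces (collapsed menus, tabs) also hide content, so each hit is something to review rather than proof of spam.

```python
import re
from html.parser import HTMLParser


def parse_style(style: str) -> dict[str, str]:
    """Turn 'color: #fff; font-size: 0' into {'color': '#fff', 'font-size': '0'}."""
    props: dict[str, str] = {}
    for decl in style.split(";"):
        if ":" in decl:
            name, value = decl.split(":", 1)
            props[name.strip().lower()] = value.strip().lower()
    return props


def hidden_reasons(style: str) -> list[str]:
    """Return the (illustrative) reasons an inline style might hide its text."""
    p = parse_style(style)
    reasons = []
    if p.get("display") == "none":
        reasons.append("display:none")
    if p.get("visibility") == "hidden":
        reasons.append("visibility:hidden")
    if re.fullmatch(r"0(\.0+)?(px|pt|em|rem|%)?", p.get("font-size", "")):
        reasons.append("zero font-size")
    indent = re.fullmatch(r"-(\d+)px", p.get("text-indent", ""))
    if indent and int(indent.group(1)) >= 1000:  # assumed cutoff for "off-screen"
        reasons.append("off-screen text-indent")
    color = p.get("color")
    if color and color == p.get("background-color"):
        reasons.append("text color matches background")
    return reasons


class HiddenTextFinder(HTMLParser):
    """Collect (tag, reasons) for each start tag whose inline style appears to hide text."""

    def __init__(self) -> None:
        super().__init__()
        self.findings: list[tuple[str, list[str]]] = []

    def handle_starttag(self, tag, attrs):
        style = dict(attrs).get("style")
        if style:
            reasons = hidden_reasons(style)
            if reasons:
                self.findings.append((tag, reasons))


finder = HiddenTextFinder()
finder.feed('<div style="color:#fff; background-color:#fff">cheap shoes</div>'
            '<p style="font-size:0">best shoes</p><span style="color:red">Sale</span>')
print(finder.findings)
# [('div', ['text color matches background']), ('p', ['zero font-size'])]
```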

| Aspect | Search Engine Spam (Black Hat) | Legitimate SEO (White Hat) | Gray Hat SEO |
|---|---|---|---|
| Primary Goal | Manipulate algorithms through deception | Provide value to users and earn rankings naturally | Exploit gray areas in guidelines |
| Content Quality | Low-quality, keyword-stuffed, or scraped | High-quality, original, user-focused content | Mixed quality with some questionable tactics |
| Link Building | Artificial links from farms, PBNs, or hacked sites | Natural links earned through quality content | Purchased links or reciprocal linking schemes |
| Technical Methods | Cloaking, hidden text, sneaky redirects | Clean HTML, proper meta tags, structured data | JavaScript redirects, doorway pages |
| User Experience | Poor; content designed for algorithms, not users | Excellent; content designed for user satisfaction | Moderate; some user experience compromises |
| Search Engine Penalties | Manual actions, deindexing, ranking drops | None; continuous improvement in rankings | Potential penalties if detected |
| Long-term Viability | Unsustainable; penalties inevitable | Sustainable; builds lasting authority | Risky; depends on detection likelihood |
| Recovery Time | Months to years; some benefits irreversible | N/A; no penalties to recover from | Weeks to months if caught |

SpamBrain represents a fundamental shift in how search engines combat spam, relying on artificial intelligence and machine learning. Introduced by Google in 2022, SpamBrain analyzes patterns across billions of websites to identify spam characteristics. The system operates continuously, examining both on-page factors (content quality, keyword distribution, structural elements) and off-page signals (link profiles, domain history, user behavior patterns). Its machine learning models have been trained on large datasets of known spam and legitimate websites, enabling the system to recognize new spam variations before they become widespread. It can identify sophisticated spam attempts that might evade rule-based detection systems, including coordinated link schemes, content manipulation, and hacked website exploitation. Google has reported that its 2024 ranking and spam-fighting improvements reduced low-quality, unoriginal content in search results by approximately 45%, illustrating how much automated quality and spam systems can change what users see.

The proliferation of search engine spam directly degrades the quality and usefulness of search results. When spam content ranks highly, users encounter irrelevant, low-quality, or misleading information instead of authoritative sources that genuinely answer their queries. This undermines user trust in search engines and forces platforms to invest heavily in spam detection and removal. Spam also creates an unfair competitive landscape in which legitimate businesses struggle, at least temporarily, to compete against sites using unethical tactics. Search engines respond with periodic spam updates: notable improvements to their spam-detection systems that are announced and tracked separately from core algorithm updates. Google’s December 2024 spam update, for example, applied globally across all languages and took about a week to fully roll out, illustrating the scale of these efforts. The continuous arms race between spammers and search engines consumes significant computational resources and engineering effort that could otherwise go toward other improvements in search.

Websites caught engaging in search engine spam face a spectrum of consequences, from algorithmic penalties to manual actions and complete deindexing. Manual actions are Google’s most direct response to spam: human reviewers identify violations and apply specific penalties, which are reported to site owners in Google Search Console. These notices name the violation, such as unnatural links, keyword stuffing, or cloaking, and typically come with significant ranking drops or removal from search results. Algorithmic penalties occur when Google’s automated systems detect spam patterns and reduce a site’s visibility without human intervention. These penalties can be temporary, lasting until the spam is removed and the site is recrawled, or permanent if the violations are severe or repeated. In extreme cases, websites are deindexed entirely, meaning they no longer appear in Google search results at all and lose their organic search visibility. Recovery from manual actions requires comprehensive remediation, detailed documentation of changes, and a reconsideration request submitted through Google Search Console. Even after successful recovery, however, websites cannot regain the ranking benefits that spammy links previously provided; those benefits are permanently lost.

The emergence of AI-powered search systems like ChatGPT, Perplexity, Google AI Overviews, and Claude introduces new dimensions to the search engine spam problem. These systems rely on web content to generate responses, making them vulnerable to spam and low-quality content that could influence their outputs. Unlike traditional search engines that display ranked lists of results, AI systems synthesize information into natural language responses, potentially amplifying the impact of spam content if it influences their training data or retrieval mechanisms. Organizations like AmICited have emerged to address this challenge, providing AI prompt-monitoring platforms that track where brands and domains appear in AI-generated responses. This monitoring matters because spam content appearing in AI responses can damage brand reputation and visibility in ways that differ from traditional search results. As AI systems become a more common way for users to discover information, monitoring and preventing spam in these new search paradigms becomes more important. The core challenge for AI systems is distinguishing authoritative sources from spam when deciding which information to include in generated responses.

Organizations committed to sustainable search visibility should implement comprehensive strategies to avoid spam tactics and maintain compliance with search engine guidelines. Content strategy should prioritize creating original, high-quality, user-focused content that naturally incorporates relevant keywords without artificial repetition or manipulation. Link building efforts should focus on earning natural backlinks through content quality, industry relationships, and legitimate outreach rather than purchasing links or participating in link schemes. Technical SEO should emphasize clean HTML, proper meta tags, structured data implementation, and transparent redirects that treat search engines and users identically. Regular audits of website content, links, and technical elements help identify potential spam issues before they trigger search engine penalties. Monitoring tools should track keyword rankings, backlink profiles, and search visibility to detect unusual changes that might indicate spam problems or competitive attacks. User experience optimization ensures that content serves human visitors first, with search engine optimization as a secondary consideration. Organizations should also establish clear internal policies that prohibit spam tactics and educate team members about the risks and consequences of manipulation attempts.
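The advice to watch for unusual changes can be made concrete with a simple statistical check. This Python sketch flags a day's value (for example, new referring domains, or any other metric exported from a monitoring tool) when it sits more than three standard deviations from recent history. The data, the function name, and the threshold of 3 are assumptions for illustration; a z-score is a generic outlier test, not a method prescribed by any search engine.

```python
from statistics import mean, stdev


def is_unusual(history: list[float], latest: float, z_threshold: float = 3.0) -> bool:
    """Return True if `latest` is more than `z_threshold` standard deviations
    away from the mean of `history` (e.g. daily new referring domains)."""
    if len(history) < 2:
        return False  # too little data to estimate normal variation
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return latest != mu  # flat history: any change is notable
    return abs(latest - mu) / sigma > z_threshold


# Hypothetical two weeks of daily new referring domains for one site.
daily_new_domains = [12, 9, 11, 10, 13, 8, 11, 10, 12, 9, 11, 10, 12, 10]
print(is_unusual(daily_new_domains, 11))   # False: an ordinary day
print(is_unusual(daily_new_domains, 240))  # True: a sudden spike worth investigating
```

A sudden flood of new links is one pattern the paragraph's "competitive attacks" could produce, but a spike can also have innocent causes such as press coverage, so a flag is a reason to inspect the new links, not a conclusion.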

The future of search engine spam detection will increasingly rely on advanced artificial intelligence and machine learning systems that can identify sophisticated manipulation attempts in real time. SpamBrain and similar AI systems will continue evolving to detect new spam variations more quickly, sustaining an arms race between detection and evasion. The integration of AI-powered search into mainstream search experiences will require new spam-detection approaches tailored to how these systems retrieve and synthesize information. Search engines will likely implement more sophisticated user behavior analysis to identify spam based on how real users interact with content, rather than relying solely on technical signals. Cross-platform monitoring will become increasingly important as spam tactics evolve to target multiple search systems at once: traditional search engines, AI chatbots, and emerging search paradigms. Generative AI also creates new spam challenges, because automated systems can produce plausible-sounding but low-quality or misleading content at scale. Organizations like AmICited will play an increasingly important role in helping brands monitor their presence across diverse search systems and detect spam threats before they cause harm. Ultimately, the most effective spam prevention will combine advanced algorithmic detection with human expertise, user feedback mechanisms, and industry collaboration to maintain search quality and user trust.

Search engine spam violates search engine guidelines and uses deceptive tactics to manipulate rankings, while legitimate SEO follows white-hat practices that provide genuine value to users. Legitimate SEO focuses on creating quality content, earning natural backlinks, and improving user experience, whereas spam tactics like keyword stuffing and cloaking attempt to trick algorithms. Google's guidelines explicitly prohibit spam tactics, and websites using them risk manual penalties or complete deindexing from search results.

Google uses **SpamBrain**, an AI-based spam-prevention system that continuously monitors websites for violations of spam policies. SpamBrain employs machine learning algorithms to identify patterns associated with spam, including unnatural link profiles, keyword stuffing, cloaking, and other deceptive techniques. The system analyzes both on-page and off-page factors, user behavior signals, and content quality metrics to distinguish between legitimate content and spam. Google also conducts manual reviews and receives user reports through Search Console to identify and penalize spammy websites.

The primary search engine spam tactics include **keyword stuffing** (overloading pages with keywords), **cloaking** (showing different content to search engines and users), **sneaky redirects** (redirecting users to different pages than search engines see), **link farms** (networks of low-quality sites created solely for linking), **hidden text and links** (content invisible to users but visible to crawlers), and **comment spam** (automated spam posted in comments and forums). Each tactic attempts to manipulate search algorithms through deception rather than providing genuine user value.
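Because cloaking means serving crawlers different content from what users see, one rough self-check is to fetch the same URL twice, once with a crawler user-agent string and once with a browser one, and compare the text. The Python sketch below covers only the comparison step: fetching is left out, the regex-based text extraction is deliberately crude, and the 0.8 similarity threshold is an arbitrary assumption. It uses the standard library's `difflib.SequenceMatcher`. Cloaking keyed on IP address rather than user agent would not show up this way, and dynamic content such as ads or personalization can lower similarity on honest pages.

```python
import difflib
import re


def visible_words(html: str) -> list[str]:
    """Crude text extraction: drop <script>/<style> blocks and tags, keep lowercase words."""
    html = re.sub(r"(?is)<(script|style)\b.*?</\1>", " ", html)
    text = re.sub(r"<[^>]+>", " ", html)
    return re.findall(r"\w+", text.lower())


def content_similarity(crawler_html: str, browser_html: str) -> float:
    """Similarity in [0, 1] between the word sequences of two responses."""
    matcher = difflib.SequenceMatcher(
        None, visible_words(crawler_html), visible_words(browser_html), autojunk=False
    )
    return matcher.ratio()


def looks_cloaked(crawler_html: str, browser_html: str, threshold: float = 0.8) -> bool:
    """Flag a page whose two versions are less similar than the (assumed) threshold."""
    return content_similarity(crawler_html, browser_html) < threshold


# Hypothetical responses for the same URL: one served to a crawler, one to a browser.
as_crawler = "<html><body><h1>Cheap shoes</h1><p>cheap shoes best cheap shoes deals</p></body></html>"
as_browser = "<html><body><h1>Online casino</h1><p>Claim your bonus now</p></body></html>"
print(looks_cloaked(as_crawler, as_browser))  # True
print(looks_cloaked(as_browser, as_browser))  # False
```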

Google imposes several penalties for search engine spam, ranging from ranking drops to complete deindexing. Sites may receive **manual actions** through Google Search Console indicating specific violations, or they may be affected by algorithmic spam updates that automatically reduce visibility. In severe cases, websites can be completely removed from Google's index, making them invisible in search results. Recovery requires identifying and removing all spam elements, then requesting reconsideration through Search Console. The recovery process can take months, and any ranking benefit that spammy links previously provided will not return.

Search engine spam poses significant challenges for AI-powered search systems like **ChatGPT**, **Perplexity**, and **Google AI Overviews**. These systems rely on web content to generate responses, and spam content can pollute their training data or influence their outputs. Platforms like AmICited monitor where brands and domains appear in AI responses, helping organizations track whether spam or low-quality content is affecting their visibility. As AI systems become more prevalent, spam detection becomes increasingly critical to ensure these platforms provide accurate, trustworthy information to users.

Yes, websites can recover from spam penalties, but the process requires comprehensive remediation. Site owners must identify all spam elements—including keyword stuffing, unnatural links, cloaking, and hidden content—and remove them completely. After cleanup, they should submit a reconsideration request through Google Search Console with detailed documentation of changes made. Recovery typically takes several months as Google's systems need time to recrawl and re-evaluate the site. However, ranking benefits previously gained from spammy links cannot be recovered, and sites must focus on building legitimate authority through quality content and natural link acquisition.

Monitoring search engine spam is crucial because spam content can damage brand reputation and visibility across search platforms. Competitors may use spam tactics targeting your brand, or your own website might be compromised and used for spam. Tools like **AmICited** help organizations track their brand mentions across AI search systems and traditional search engines, identifying whether spam or low-quality content is appearing in place of legitimate brand information. This monitoring enables proactive detection of spam issues and helps maintain brand integrity in search results and AI-generated responses.

Start tracking how AI chatbots mention your brand across ChatGPT, Perplexity, and other platforms. Get actionable insights to improve your AI presence.

Learn what keyword stuffing is, why it's harmful to SEO, how Google detects it, and best practices to avoid this black-hat tactic that damages rankings and user...

Cloaking is a black-hat SEO technique showing different content to search engines vs users. Learn how it works, its risks, detection methods, and why it violate...

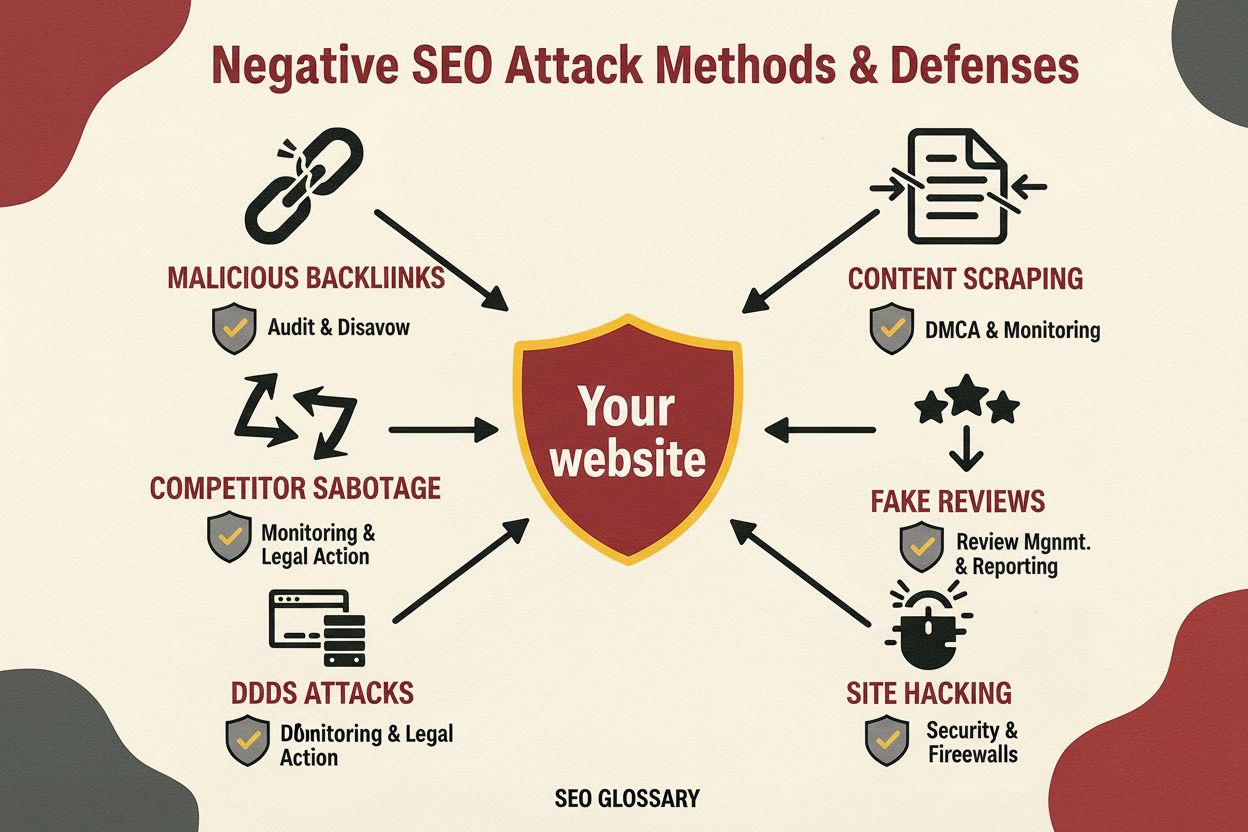

Negative SEO is the practice of using unethical techniques to harm competitor rankings. Learn about attack tactics, detection methods, and how to protect your s...