
Secondary research is the analysis and interpretation of existing data previously collected by other researchers or organizations for different purposes. It involves synthesizing published datasets, reports, academic journals, and other sources to answer new research questions or validate hypotheses without conducting original data collection.

Secondary research, also known as desk research, is a systematic research methodology that involves analyzing, synthesizing, and interpreting existing data previously collected by other researchers, organizations, or institutions for different purposes. Rather than gathering original data through surveys, interviews, or experiments, secondary research leverages published datasets, reports, academic journals, government statistics, and other compiled information sources to answer new research questions or validate hypotheses. This approach represents a fundamental shift from data collection to data analysis and interpretation, enabling organizations to extract actionable insights from information that already exists in the public domain or within organizational archives. The term “secondary” refers to the fact that researchers are working with data that is secondary to its original collection purpose—data originally gathered for one objective is reanalyzed to address different research questions or business challenges.

The practice of secondary research has evolved significantly over the past century, from library-based literature reviews to sophisticated digital data analysis. Historically, researchers relied on physical libraries, archives, and published materials, a time-consuming process that limited research scope and accessibility. The digital revolution changed this by making vast datasets instantly accessible through online databases, government portals, and academic repositories. Today, the global market research industry generates $140 billion in annual revenue (as of 2024), with secondary research representing a substantial portion of this market. The industry grew from $102 billion in 2021 to $140 billion in 2024, a 37.25% increase in three years. This expansion reflects increasing organizational reliance on data-driven decision-making and the recognition that secondary research offers a cost-effective route to market insights. AI-powered data analysis tools have further changed secondary research, enabling researchers to process massive datasets, identify patterns, and extract insights far faster than manual review allows. According to recent research, 69% of market research professionals have incorporated synthetic data and AI analysis into their secondary research efforts, demonstrating the field’s rapid technological evolution.
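The growth figures above can be checked with simple arithmetic. The Python sketch below reproduces the 37.25% total increase from the quoted market sizes. It also derives a compound annual growth rate (CAGR), which the source does not state. The function names are illustrative. Treat this as a quick sanity check, not an industry analysis.

```python
def percent_change(start: float, end: float) -> float:
    """Total percentage change from start to end."""
    return (end - start) / start * 100


def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate, expressed as a percentage."""
    return ((end / start) ** (1 / years) - 1) * 100


# Market-size figures quoted in the text, in billions of US dollars.
size_2021, size_2024 = 102.0, 140.0

print(f"Total growth: {percent_change(size_2021, size_2024):.2f}%")
print(f"CAGR: {cagr(size_2021, size_2024, 3):.1f}% per year")
```

Spread evenly over three years, the 37.25% total increase corresponds to roughly 11% compound growth per year.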

Secondary research data originates from two primary categories: internal sources and external sources. Internal secondary data includes information already collected and stored within an organization, such as sales databases, customer transaction histories, previous research projects, campaign performance metrics, and website analytics. This internal data provides competitive advantages because it remains exclusive to the organization and reflects actual business performance. External secondary data encompasses publicly available or purchasable information from government agencies, academic institutions, market research firms, industry associations, and media outlets. Government sources provide census data, economic statistics, and regulatory information; academic sources offer peer-reviewed research and longitudinal studies; market research agencies publish industry reports and competitive analyses; and industry associations compile sector-specific data and trends. The diversity of secondary sources enables researchers to triangulate findings across multiple perspectives and validate conclusions through cross-source verification.

| Aspect | Secondary Research | Primary Research |
|---|---|---|
| Data Collection | Analyzes existing data collected by others | Collects original data directly from sources |
| Timeline | Days to weeks | Weeks to months |
| Cost | Low to minimal (often free) | High (participant recruitment, administration) |
| Data Control | No control over methodology or quality | Full control over research design and execution |
| Specificity | May not address specific research questions | Tailored to exact research objectives |
| Researcher Bias | Unknown bias from original collectors | Potential bias from current researchers |
| Data Exclusivity | Non-exclusive (competitors access same data) | Exclusive ownership of findings |
| Sample Size | Often large-scale datasets | Varies based on budget and scope |
| Relevance | May require adaptation to current needs | Directly relevant to current research goals |
| Speed to Insights | Immediate access to compiled information | Requires time for data collection and analysis |

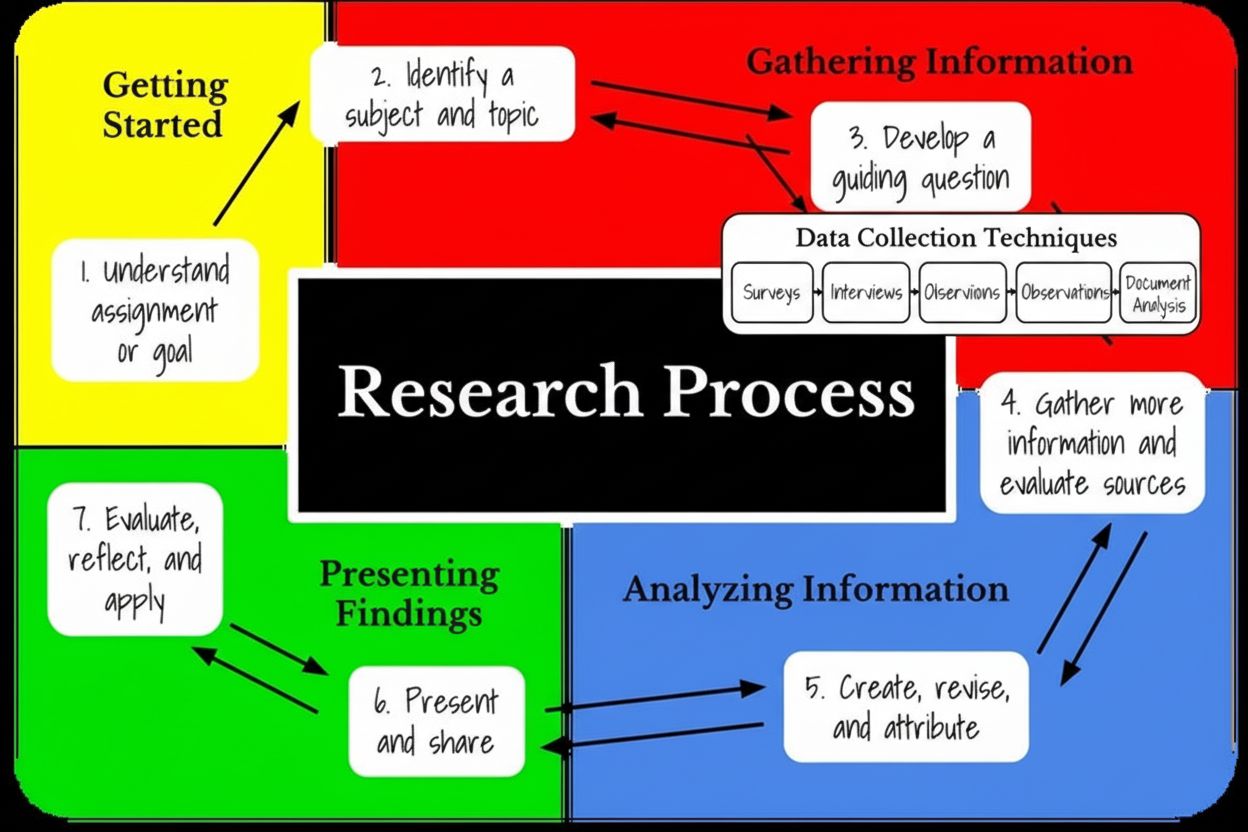

Secondary research methodology follows a structured five-step process that supports rigorous analysis and valid conclusions. The first step is to define the research topic clearly and identify specific research questions that secondary data might address. Researchers must articulate what they want to achieve, for example whether the work is exploratory (investigating a problem that is not yet well understood) or confirmatory (validating hypotheses). The second step is identifying and locating appropriate secondary data sources, considering factors like data relevance, source credibility, publication date, and geographic scope. The third step involves systematically collecting and organizing the data. This often requires accessing multiple databases, verifying source authenticity, and consolidating information into analyzable formats. During this phase, researchers must evaluate data quality, assess methodology transparency, and determine whether data collection timeframes align with research needs. The fourth step focuses on combining and comparing datasets, identifying patterns across different sources, and recognizing trends or anomalies that emerge from comparative analysis. Researchers may need to filter out unusable data, reconcile conflicting information, and organize findings into coherent narratives. The final step is comprehensive analysis and interpretation. Here researchers examine whether the secondary data adequately answers the original research questions, identify knowledge gaps, and determine whether supplementary primary research is necessary. Following this structure helps ensure that secondary research produces credible, actionable insights rather than superficial conclusions.
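As a rough illustration, the locate, compare, and interpret steps can be sketched in Python. Everything in this sketch is a hypothetical assumption, including:

- the `Source` record and the example figures;
- the 10% tolerance;
- the rule that thin or conflicting evidence calls for follow-up.

Steps 1 and 3 appear only implicitly. The function arguments stand in for the research question, and the list of records stands in for the collected data.

```python
from dataclasses import dataclass
from datetime import date
from statistics import median


@dataclass
class Source:
    """A collected secondary source (fields are illustrative, not a standard schema)."""
    name: str
    topic: str
    region: str
    published: date
    value: float  # the figure this source reports for the research question


def locate(sources, topic, region, not_before):
    """Step 2: keep sources matching the topic, geographic scope, and date window."""
    return [
        s for s in sources
        if s.topic == topic and s.region == region and s.published >= not_before
    ]


def compare(sources, tolerance=0.10):
    """Step 4: take the median figure and flag sources deviating by more than tolerance."""
    if not sources:
        return None, []
    mid = median(s.value for s in sources)
    outliers = [s.name for s in sources if abs(s.value - mid) > tolerance * mid]
    return mid, outliers


def needs_follow_up(usable, outliers, min_sources=2):
    """Step 5: suggest primary research when evidence is thin or conflicting."""
    return len(usable) < min_sources or bool(outliers)


# Hypothetical example: estimating EU e-bike adoption (%) from desk sources.
collected = [
    Source("Government statistics", "ebike_adoption", "EU", date(2024, 3, 1), 12.0),
    Source("Trade association report", "ebike_adoption", "EU", date(2023, 9, 1), 12.8),
    Source("Older agency study", "ebike_adoption", "EU", date(2019, 1, 1), 7.5),
    Source("Vendor blog", "ebike_adoption", "US", date(2024, 6, 1), 20.0),
]
usable = locate(collected, "ebike_adoption", "EU", not_before=date(2022, 1, 1))
estimate, outliers = compare(usable)
print(estimate, outliers, needs_follow_up(usable, outliers))
```

In this example, the date and region filters leave two sources that agree closely, so no follow-up is suggested. With only one usable source, the same rule would recommend primary research.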

One of the most compelling advantages of secondary research is its cost efficiency compared to primary research. Secondary data analysis is almost always less expensive than conducting primary research, with organizations typically saving 50-70% on research budgets by leveraging existing datasets. Data collection is the most expensive component of primary research, covering participant recruitment, incentives, survey administration, and field operations. Secondary research avoids most of these costs; the main remaining expenses are data access fees and analyst time. Many secondary data sources are available for free through government agencies, public libraries, and academic repositories, or at modest cost through subscription services. The time savings are equally significant: secondary research can be completed in days or weeks, whereas primary research typically requires weeks to months. Researchers can access compiled datasets immediately through online platforms, enabling rapid decision-making for time-sensitive business challenges. Secondary data is also often already organized in electronic formats. This shortens the labor-intensive data preparation phase that consumes significant resources in primary research, although the data may still need cleaning and reformatting. For organizations with limited budgets or tight timelines, secondary research provides an accessible pathway to market insights, competitive intelligence, and trend analysis. It also serves as a cost-effective component of broader research strategies.
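The savings percentage is simple to compute once both budgets are itemized. The budget figures in this Python sketch are invented for illustration. They are chosen to show that secondary research still carries costs, such as report licences and analyst time, even though it avoids fieldwork.

```python
def budget_savings(primary_cost: float, secondary_cost: float) -> float:
    """Percentage of a primary-research budget saved by using secondary data instead."""
    if primary_cost <= 0:
        raise ValueError("primary_cost must be positive")
    return (primary_cost - secondary_cost) * 100 / primary_cost


# Hypothetical budgets in US dollars (not from the source).
primary = 25_000 + 15_000 + 10_000  # recruitment and incentives, fieldwork, analysis
secondary = 5_000 + 15_000          # report licences, analyst time

print(f"Savings: {budget_savings(primary, secondary):.0f}%")
```

With these numbers the saving is 60%, within the 50-70% range cited in the text. A project that depends on expensive proprietary reports could save considerably less.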

In the context of AI monitoring and generative engine optimization (GEO), secondary research plays a critical role in establishing baselines and understanding how AI systems cite sources. Platforms like AmICited apply secondary research principles to track brand mentions across AI systems including ChatGPT, Perplexity, Google AI Overviews, and Claude. By analyzing existing data about competitor citations, industry trends, and historical brand performance in AI responses, organizations can identify patterns in how AI systems select and cite sources. These analyses establish benchmarks for AI visibility, showing brands where they stand relative to competitors and industry standards. Organizations can also analyze secondary data about content performance, citation patterns, and AI system preferences to optimize their content strategy for better AI citations. Existing citation data, competitor strategies, and industry trends provide context for interpreting real-time AI monitoring data, which supports more sophisticated optimization of how brands appear in generative search results. With 47% of researchers worldwide regularly using AI in their market research activities, the convergence of secondary research methodology with AI-powered analysis tools is reshaping how organizations understand their market position and AI visibility.
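One way to turn logged AI answers into a visibility baseline is to compute, per platform, the share of answers that cite each brand. The Python sketch below does this over an invented log. The log format and the `citation_rates` helper are hypothetical. They do not describe how AmICited or any other platform works internally.

```python
from collections import Counter, defaultdict

# Hypothetical log of AI answers: (platform, set of brands cited in the answer).
# Platform names match the text; the brands and answers are invented.
responses = [
    ("ChatGPT", {"BrandA", "BrandB"}),
    ("ChatGPT", {"BrandB"}),
    ("Perplexity", {"BrandA"}),
    ("Perplexity", {"BrandA", "BrandC"}),
    ("Claude", set()),
]


def citation_rates(log):
    """Return {platform: {brand: fraction of that platform's answers citing the brand}}."""
    totals = Counter(platform for platform, _ in log)
    hits = defaultdict(Counter)
    for platform, brands in log:
        hits[platform].update(brands)  # count each cited brand once per answer
    return {
        platform: {brand: n / totals[platform] for brand, n in hits[platform].items()}
        for platform in totals
    }


for platform, rates in citation_rates(responses).items():
    print(platform, {brand: f"{rate:.0%}" for brand, rate in sorted(rates.items())})
```

Re-running the same calculation on later logs shows whether a brand's citation share is moving relative to competitors. Comparing those results against the first run is what makes the first run a baseline.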

Ensuring secondary research data quality requires rigorous validation and critical assessment of source credibility. Researchers must examine the original research methodology, including sample size, population characteristics, data collection procedures, and potential biases that may have influenced results. Peer-reviewed academic journals maintain higher credibility standards than blogs or opinion pieces, because they undergo expert review before publication. Government agencies and established research institutions typically employ rigorous quality controls, making their data more reliable than self-published sources. Cross-referencing findings across multiple independent sources helps validate conclusions and reveal inconsistencies that may indicate data quality issues. Researchers should also assess whether the original study’s timeframe aligns with current research needs. Data collected five years ago may not reflect current market conditions or consumer behaviors, and this matters most in fast-moving industries, where secondary data loses relevance quickly. Researchers should also consider whether the original data collection methodology matches their research requirements, as different methodologies may produce incomparable results. Contacting the original researchers or organizations can provide additional context about data collection processes, response rates, and known limitations. Together, these checks help ensure that secondary research conclusions rest on credible, high-quality data rather than flawed or outdated information.
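The credibility, recency, and cross-referencing checks described above can be combined into a simple screening function. This Python sketch rests on several assumptions chosen for illustration:

- the credibility scores;
- the three-year age limit;
- the 15% agreement tolerance.

It also uses publication date as a stand-in for collection date, and it assumes the reported values are positive.

```python
from datetime import date

# Illustrative credibility scores: peer-reviewed and government sources rank above
# self-published ones, as in the text. The numbers themselves are assumptions.
CREDIBILITY = {"peer_reviewed": 3, "government": 3, "research_firm": 2, "blog": 1}


def validate(findings, today, max_age_years=3, min_credibility=2, tolerance=0.15):
    """Keep credible, recent findings and report whether they agree within tolerance."""
    kept = [
        f for f in findings
        if CREDIBILITY.get(f["kind"], 0) >= min_credibility
        and (today - f["published"]).days / 365.25 <= max_age_years
    ]
    if len(kept) < 2:
        return kept, False  # too few independent sources to cross-reference
    values = [f["value"] for f in kept]
    spread = (max(values) - min(values)) / max(values)  # assumes positive values
    return kept, spread <= tolerance


findings = [
    {"source": "National statistics office", "kind": "government",
     "published": date(2024, 2, 1), "value": 41.0},
    {"source": "Industry report", "kind": "research_firm",
     "published": date(2023, 11, 1), "value": 44.0},
    {"source": "Older survey", "kind": "peer_reviewed",
     "published": date(2018, 5, 1), "value": 30.0},
    {"source": "Opinion blog", "kind": "blog",
     "published": date(2024, 6, 1), "value": 60.0},
]
kept, consistent = validate(findings, today=date(2025, 1, 1))
print([f["source"] for f in kept], consistent)
```

Here the older survey fails the recency check and the blog fails the credibility check. The two remaining sources differ by under 7%, so they count as consistent.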

Secondary research offers numerous strategic advantages that make it an essential component of comprehensive research programs. Secondary data is easily accessible through online databases, libraries, and government portals, and locating it requires minimal technical expertise. The faster research timeline lets organizations answer research questions in days rather than months, supporting rapid decision-making and competitive responsiveness. Low financial costs make secondary research accessible to organizations with limited research budgets, democratizing access to market insights. Secondary research can also drive further research by identifying knowledge gaps that warrant primary investigation, serving as a foundation for more targeted studies. Large-scale datasets such as census data let researchers draw conclusions about broad populations without conducting expensive large-scale surveys. Pre-research insights help organizations decide whether a study should proceed at all, potentially saving resources when the answers already exist in published literature. The breadth and depth of available data let researchers examine trends across multiple years, identify patterns, and understand the historical context that informs current decisions. Finally, internal secondary data offers a competitive advantage because competitors cannot obtain it, providing unique insights into organizational performance and market position.

Despite its advantages, secondary research presents significant limitations that researchers must carefully consider. Outdated data represents a primary concern, as secondary sources may not reflect current market conditions, consumer preferences, or technological changes. In fast-moving industries, secondary data can become obsolete within months, requiring researchers to verify that information remains relevant. Lack of control over methodology means researchers cannot verify how original data was collected, whether quality standards were maintained, or whether unknown biases influenced results. The inability to customize data to specific research questions often requires researchers to adapt their objectives to available information rather than finding data that perfectly addresses their needs. Non-exclusive data access means competitors can access the same secondary sources, eliminating competitive advantages that primary research might provide. Unknown researcher bias from original data collectors may have influenced results in ways that current researchers cannot detect or correct. Data relevance gaps may require researchers to supplement secondary findings with primary research to address specific questions. The complexity of data integration when combining multiple secondary sources with different methodologies, timeframes, and populations can introduce analytical challenges. Researchers must invest significant effort in data verification and validation to ensure that secondary sources meet quality standards and provide reliable insights.

The future of secondary research is being reshaped by artificial intelligence, machine learning, and advanced analytics technologies. AI-powered tools now enable researchers to process massive datasets, identify complex patterns, and extract insights that would be impractical to detect through manual analysis. 83% of market research professionals plan to invest in AI for their research activities in 2025, indicating widespread recognition of AI’s transformative potential. The integration of synthetic data into secondary research is accelerating, with over 70% of market researchers expecting synthetic data to account for more than 50% of data collection within three years. This shift reflects the growing importance of AI-generated insights and the need to supplement traditional secondary sources with algorithmically generated data. Automated content analysis using natural language processing enables researchers to analyze qualitative secondary sources at scale, identifying themes, sentiment, and semantic relationships across thousands of documents. The convergence of secondary research with generative engine optimization (GEO) strategies is creating new opportunities for organizations to understand how AI systems cite and reference sources. As AI systems like ChatGPT, Perplexity, and Claude become primary information sources for consumers, secondary research methodologies are evolving to analyze how these systems select, cite, and present information. Organizations are increasingly using secondary research to establish baselines for AI visibility, understanding how their brands appear in AI-generated responses compared to competitors. The future will likely see secondary research becoming more sophisticated, real-time, and integrated with AI monitoring platforms that track brand mentions across multiple AI systems simultaneously. This evolution represents a fundamental shift from historical secondary research toward dynamic, AI-enhanced analysis that provides continuous insights into market position, competitive landscape, and AI visibility.
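Production NLP pipelines rely on techniques such as topic modeling or embeddings. However, the core idea of coding many documents against a set of themes can be shown with plain keyword matching. This Python sketch is a deliberately simplified stand-in. The theme lexicon and review texts are invented, and it detects neither sentiment nor semantic relationships.

```python
import re
from collections import Counter

# Hypothetical theme lexicon; real pipelines would learn themes rather than
# rely on fixed keyword lists.
THEMES = {
    "price": {"price", "cost", "expensive", "cheap"},
    "quality": {"quality", "durable", "broken", "reliable"},
    "service": {"support", "service", "delivery", "refund"},
}


def theme_counts(documents):
    """Count how many documents mention each theme at least once."""
    counts = Counter()
    for doc in documents:
        words = set(re.findall(r"[a-z]+", doc.lower()))
        for theme, keywords in THEMES.items():
            if words & keywords:  # any keyword from this theme appears
                counts[theme] += 1
    return counts


reviews = [
    "Great quality but the price is too high.",
    "Delivery was slow and support never replied.",
    "Cheap, reliable, and the refund was quick.",
]
print(theme_counts(reviews).most_common())
```

Counting documents rather than raw keyword hits keeps a single long, repetitive source from dominating the theme totals.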

Organizations seeking to maximize secondary research effectiveness should implement structured best practices that ensure rigorous analysis and actionable insights:

- Define clear research objectives before beginning secondary research, articulating specific questions that secondary data might address and establishing success criteria for the research project.
- Prioritize source credibility by favoring peer-reviewed academic sources, government agencies, and established research institutions over self-published or biased sources.
- Establish verification protocols that require cross-referencing findings across multiple independent sources before drawing conclusions.
- Document methodology by recording which sources were consulted, how data was analyzed, and what limitations or biases may have influenced results.
- Assess data recency by verifying that secondary data reflects current market conditions and hasn’t become obsolete due to rapid industry changes.
- Combine with primary research when secondary data fails to address specific research questions or when secondary findings need validation.
- Leverage internal data by conducting thorough audits of organizational databases and previous research projects before seeking external secondary sources.
- Utilize AI-powered analysis tools to process large secondary datasets efficiently and identify patterns that manual analysis might miss.
- Monitor AI visibility by integrating secondary research insights with AI monitoring platforms like AmICited to understand how brands appear in AI-generated responses.
- Establish update schedules for secondary research projects, recognizing that market conditions change and periodic re-analysis may be necessary to maintain insight accuracy.
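Documenting methodology and scheduling updates can be as lightweight as keeping one structured record per project. This Python sketch uses a hypothetical `ResearchLog` class. The 180-day review cadence is an assumption, and faster-moving markets may need a shorter one.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta


@dataclass
class ResearchLog:
    """Minimal record of a secondary research project (fields are illustrative)."""
    question: str
    last_reviewed: date
    sources: list = field(default_factory=list)      # which sources were consulted
    limitations: list = field(default_factory=list)  # known biases or gaps
    review_every_days: int = 180  # assumed cadence; shorten for fast-moving markets

    def next_review(self) -> date:
        """Date on which the findings should be re-checked."""
        return self.last_reviewed + timedelta(days=self.review_every_days)

    def is_due(self, today: date) -> bool:
        """True once the scheduled review date has been reached."""
        return today >= self.next_review()


log = ResearchLog(
    question="How large is the EU e-bike market?",
    last_reviewed=date(2024, 7, 1),
    sources=["Government trade statistics", "Industry association annual report"],
    limitations=["Association figures cover member companies only"],
)
print(log.next_review(), log.is_due(date(2025, 1, 15)))
```

For this example record, the next review falls on 28 December 2024, so a check in mid-January 2025 reports the project as due.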

Secondary research remains an essential methodology for organizations seeking cost-effective, rapid insights into market conditions, competitive landscapes, and consumer trends. The global market research industry grew from $102 billion in 2021 to $140 billion in 2024. As that expansion continues, secondary research represents an increasingly important component of comprehensive research strategies. AI and machine learning are transforming secondary research from a manual, time-consuming process into an automated analytical discipline capable of processing massive datasets and identifying complex patterns. Organizations that master secondary research methodology can make faster decisions, analyze markets cost-effectively, and plan strategy on a better-informed basis. The emergence of AI monitoring platforms like AmICited shows how secondary research principles are evolving to address new challenges in the generative AI era, where understanding how AI systems cite and reference sources has become critical to brand visibility and market positioning. With 47% of researchers worldwide now regularly using AI in their market research activities, the future of secondary research lies in integrating traditional research methodology with AI capabilities. Some organizations will combine rigorous secondary research practices with AI-powered analysis tools, real-time monitoring platforms, and validation protocols. They will be best positioned to extract value from existing data while maintaining the credibility and accuracy that confident decision-making requires.

Primary research involves collecting original data directly from sources through surveys, interviews, or observations, while secondary research analyzes existing data previously gathered by others. Primary research is more time-consuming and expensive but provides tailored insights, whereas secondary research is faster and more cost-effective but may not address specific research questions precisely. Both methods are often combined for comprehensive research strategies.

Secondary research sources include government statistics and census data, academic journals and peer-reviewed publications, market research reports from professional agencies, company reports and white papers, industry association data, news archives and media publications, and internal organizational databases. These sources can be internal (from within your organization) or external (publicly available or purchased from third parties). The choice of source depends on research objectives, data relevance, and credibility requirements.

Secondary research largely eliminates data collection expenses because the information has already been gathered and compiled by others. Researchers avoid costs associated with recruiting participants, conducting surveys or interviews, and managing field operations. Additionally, secondary data is often available for free or at minimal cost through public databases, libraries, and government agencies. Organizations can save 50-70% on research budgets by leveraging existing datasets, making secondary research well suited to resource-constrained teams.

Secondary research data may be outdated, potentially missing recent market changes or trends. The original data collection methodology may be unknown or poorly documented, raising questions about data quality and validity. Researchers have no control over how the data was collected, which can introduce unknown biases. Secondary datasets may not precisely address specific research questions, requiring researchers to adapt their objectives. Additionally, secondary data lacks exclusivity, meaning competitors can access the same information.

Organizations should examine the original research methodology, publication date, and source reputation before using secondary data. Peer-reviewed academic journals and government agencies typically maintain higher credibility standards than blogs or opinion pieces. Cross-referencing data across multiple independent sources helps validate findings and identify inconsistencies. Researchers should assess whether the original study's sample size, population, and research design align with their needs. Contacting original researchers or organizations can provide additional context about data collection processes.

Secondary research provides historical context and baseline data for AI monitoring platforms like AmICited, which track brand mentions across AI systems like ChatGPT, Perplexity, and Claude. By analyzing existing data about competitor mentions, industry trends, and historical brand performance, organizations can establish benchmarks for AI visibility. Secondary research helps identify patterns in how AI systems cite sources, enabling brands to optimize their content strategy for better AI citations and visibility in generative search results.

AI tools now automate secondary data analysis, enabling researchers to process large datasets faster and identify patterns that would be difficult to detect manually. Around 47% of researchers worldwide regularly use AI in their market research activities, with adoption rates reaching 58% in Asia-Pacific regions. AI-powered content analysis tools can recognize themes, semantic connections, and relationships within secondary sources. 73% of researchers express confidence in applying AI to secondary research, although concerns about skill gaps remain among some teams.

Secondary research can be completed in days or weeks since data is already collected and organized, whereas primary research typically requires weeks to months for planning, data collection, and analysis. Organizations can access secondary data immediately through online databases and libraries, enabling rapid decision-making. The speed advantage makes secondary research ideal for time-sensitive business decisions, competitive analysis, and preliminary research phases. However, the trade-off is that secondary data may not provide the specific, current insights that primary research delivers.

Start tracking how AI chatbots mention your brand across ChatGPT, Perplexity, and other platforms. Get actionable insights to improve your AI presence.

Original research and first-party data are proprietary studies and customer information collected directly by brands. Learn how they build authority, drive AI c...

Learn what the research phase information gathering stage is, its importance in research methodology, data collection techniques, and how it impacts AI monitori...

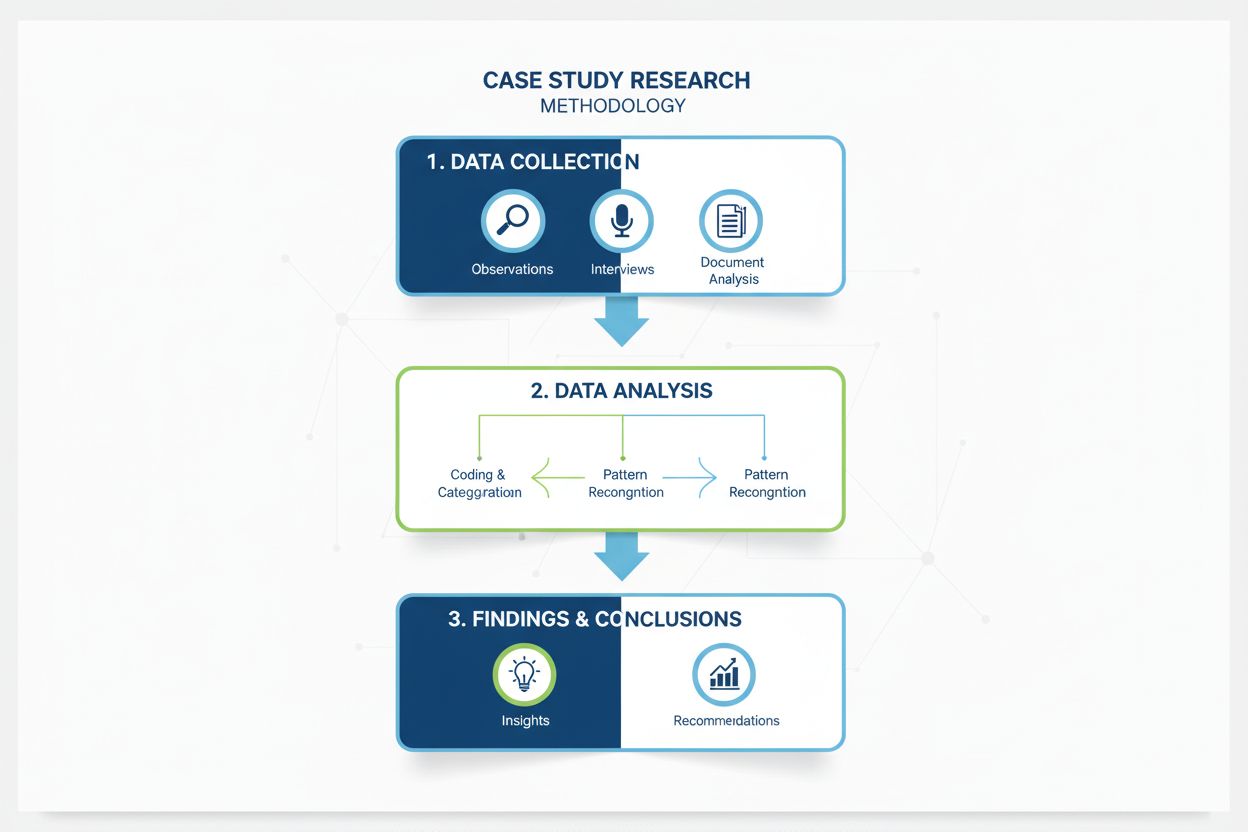

Comprehensive definition of case study research methodology. Learn how case studies provide in-depth analysis of specific examples, data collection methods, and...