Thin Content Definition and AI Penalties: Complete Guide

Learn what thin content is, how AI systems detect it, and whether ChatGPT, Perplexity, and Google AI penalize low-quality pages. Expert guide with detection met...

Thin content refers to web pages with little or no valuable information for users, typically lacking depth, originality, or meaningful insights. These pages often fail to answer user search intent and are frequently penalized by Google’s algorithms, particularly since the Panda update, which targeted low-quality and shallow content across the web.

Thin content refers to web pages with little or no valuable information for users, typically lacking depth, originality, or meaningful insights. These pages often fail to answer user search intent and are frequently penalized by Google's algorithms, particularly since the Panda update, which targeted low-quality and shallow content across the web.

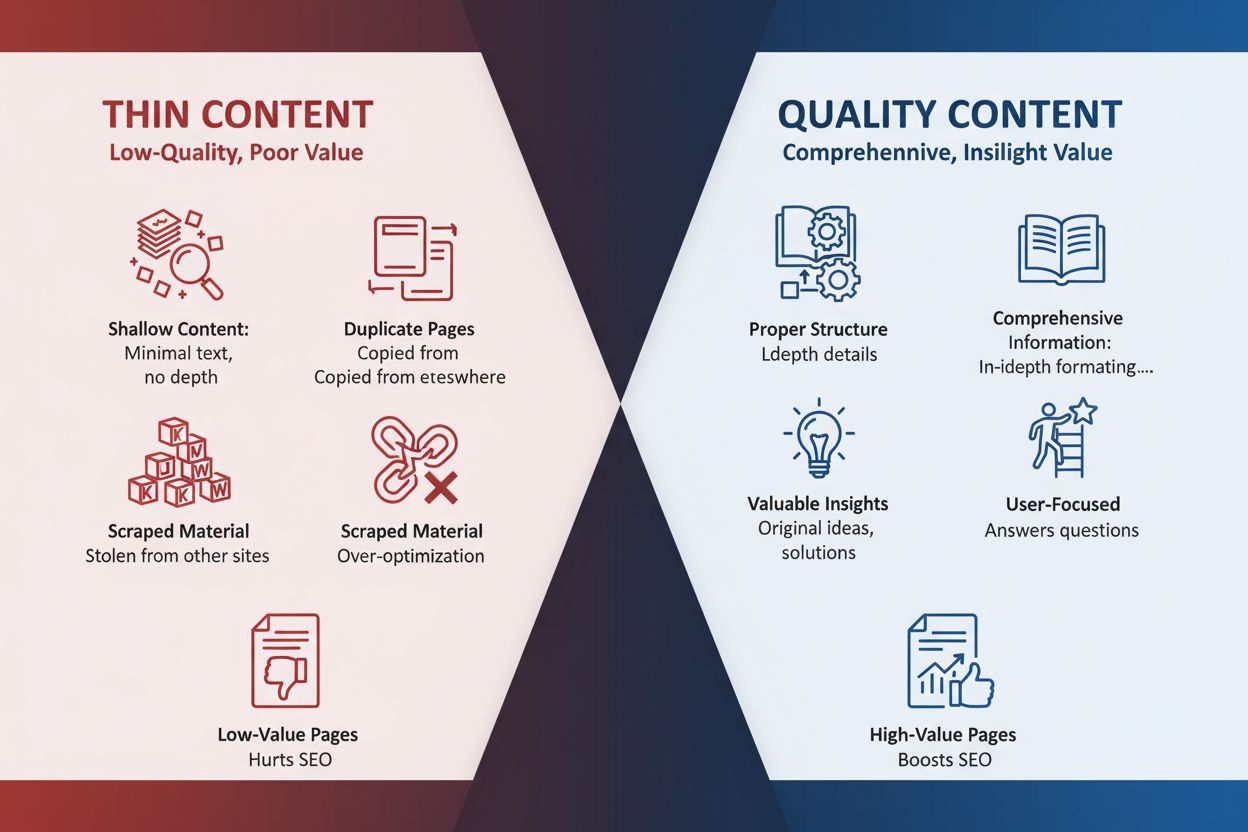

Thin content refers to web pages that provide little or no valuable information to users, typically characterized by insufficient depth, lack of originality, or minimal meaningful insights. These pages fail to adequately answer user search intent and often consist of shallow information, poor structure, or content created primarily to manipulate search rankings rather than serve user needs. Thin content is fundamentally different from quality content because it lacks the expertise, authority, and trustworthiness that modern search algorithms prioritize. The term encompasses a broad range of low-quality pages, from automatically generated material to manually created content that simply doesn’t meet user expectations or search engine standards. Understanding what constitutes thin content is essential for website owners, content creators, and digital marketers because these pages actively harm search visibility, user experience, and overall site authority.

The problem of thin content became widespread during the late 2000s with the rise of “content farms”—websites that mass-produced low-quality articles optimized for search engines rather than user value. Companies like Demand Media and eHow exemplified this model, creating thousands of shallow articles daily to capture search traffic and generate ad revenue. By 2010, user complaints about declining search quality had reached critical levels, prompting Google to take action. In February 2011, Google launched the Panda algorithm, a groundbreaking update designed specifically to identify and demote low-quality, thin content pages. The initial Panda update impacted 11.8% of Google queries, demonstrating the scale of the thin content problem across the web. Google’s Amit Singhal later explained that the algorithm was developed by having human quality raters evaluate pages using 23 specific questions about content quality, expertise, originality, and trustworthiness. The algorithm then used machine learning to identify patterns that separated high-quality sites from thin content sites. This marked a fundamental shift in how search engines evaluated content, moving beyond simple keyword matching to assess actual user value.

Thin content exhibits several identifiable characteristics that distinguish it from quality material. Pages with thin content typically contain fewer than 300 words of substantive information, though word count alone doesn’t determine quality. More importantly, thin pages lack original insights, fail to provide comprehensive coverage of their topic, and often contain grammatical errors, poor structure, or confusing organization. Thin content frequently appears on pages created through automated processes, such as dynamically generated product pages with minimal unique descriptions or AI-generated articles without human review and editing. Duplicate or near-duplicate content across multiple URLs represents another form of thin content, where pages use synonyms or minor rewording to create the illusion of uniqueness without adding real value. Doorway pages—created specifically to rank for particular keywords before redirecting users elsewhere—exemplify intentional thin content creation. Scraped content, copied directly from other sources without permission or attribution, is inherently thin because it provides no new perspective or value. Pages dominated by advertisements, with main content pushed below the fold or obscured by popups, are also considered thin because the actual informational value is minimized. Identifying thin content requires both automated tools and manual review, as some thin pages may appear structurally complete while lacking substantive value.

| Characteristic | Thin Content | Quality Content | Duplicate Content |

|---|---|---|---|

| Word Count | Often <300 words or excessive filler | Sufficient length to comprehensively cover topic | Variable; can be any length |

| Originality | Lacks original insights or research | Contains unique perspectives and original analysis | Identical or near-identical to existing content |

| User Value | Minimal; fails to answer search intent | High; directly addresses user questions | May have value but creates redundancy |

| Structure & Clarity | Poor organization; confusing flow | Well-organized with clear headings and logical progression | Structure may be clear but content is repeated |

| E-E-A-T Signals | Lacks expertise, authority, trustworthiness | Demonstrates clear expertise and credibility | May have E-E-A-T but lacks uniqueness |

| Search Engine Treatment | Algorithmically demoted; may trigger manual penalties | Prioritized in rankings | Filtered; only preferred version ranks |

| Examples | Doorway pages, scraped content, keyword-stuffed articles | In-depth guides, original research, expert analysis | Product pages with identical descriptions, syndicated articles |

| Recovery Method | Expand, improve, or delete | Maintain and update regularly | Use canonical tags or 301 redirects |

Google’s approach to identifying thin content has evolved significantly since the Panda algorithm’s introduction. Modern detection relies on machine learning systems that evaluate hundreds of ranking signals simultaneously, comparing pages against human quality ratings and user behavior patterns. The algorithm assesses whether content provides substantial value compared to competing pages in search results, considering factors like originality, depth, comprehensiveness, and alignment with search intent. Pages that fail to provide meaningful answers to user queries are flagged as thin, regardless of their technical structure or keyword optimization. Google’s E-E-A-T framework—Experience, Expertise, Authoritativeness, and Trustworthiness—has become increasingly central to thin content detection. Pages lacking clear author credentials, relying on unverified sources, or making unsubstantiated claims are more likely to be classified as thin. The algorithm also considers user behavior signals: high bounce rates, low time-on-page, and quick returns to search results all indicate that users found the content unhelpful. Google can penalize thin content through two mechanisms: algorithmic filtering, which gradually reduces visibility through core updates, and manual actions, where Google’s webspam team issues explicit penalties visible in Search Console. Recovery from thin content penalties requires demonstrating substantial improvement in content quality, not merely adding more words or keywords.

Understanding the various forms of thin content helps website owners identify and address problems on their own sites. Scraped content represents one of the most egregious types, where entire articles are copied from other websites without permission, attribution, or added value. This practice violates copyright and provides zero unique benefit to users. Doorway pages are created specifically to rank for particular keywords, often with minimal content and aggressive internal linking designed to funnel users to other pages. These pages prioritize search engine manipulation over user experience. Automatically generated content produced by software without human review frequently lacks coherence, contains errors, and fails to address actual user needs. Keyword-stuffed pages repeat target keywords unnaturally throughout the content, prioritizing search engine signals over readability and user comprehension. Thin affiliate pages promote products or services with little original analysis or unique perspective, simply copying manufacturer descriptions or competitor reviews. Pages with excessive advertising obscure the main content with ads, popups, and distracting elements that degrade user experience and signal low content priority. Duplicate content across multiple URLs, whether intentional or accidental, dilutes ranking signals and confuses search engines about which version to prioritize. Low-quality user-generated content, such as spam comments or poorly written guest posts, can make entire sections of a site appear thin. Shallow product pages with only manufacturer descriptions and no original insights or customer guidance represent thin content in e-commerce contexts. Each type requires different remediation strategies, from deletion and redirection to comprehensive rewriting and optimization.

Thin content creates cascading negative effects across multiple dimensions of website performance. From an SEO perspective, thin pages waste crawl budget—the limited resources Google allocates to crawling your site—on pages that don’t deserve ranking visibility. This means fewer resources are available for crawling and indexing your valuable content. Thin pages also dilute internal linking equity and confuse search engines about your site’s topical authority. When a site contains numerous thin pages alongside quality content, Google’s algorithms struggle to identify which pages represent your site’s true expertise and value. This uncertainty reduces rankings for all pages, not just the thin ones. Thin content directly contradicts Google’s stated goal of providing users with the most relevant, helpful, and authoritative results. Pages that fail to satisfy search intent trigger high bounce rates and quick returns to search results, sending clear signals to Google that the page isn’t meeting user needs. From a user experience perspective, thin content frustrates visitors who expected comprehensive answers but found shallow, vague, or unhelpful information. This poor experience damages brand trust and reduces the likelihood of repeat visits or conversions. Users encountering thin content are more likely to click back to search results and try competitors’ pages, which further signals to Google that your content isn’t valuable. For businesses relying on organic search traffic, thin content represents a direct loss of potential customers and revenue. The cumulative effect of thin content across a site can trigger algorithmic penalties that suppress visibility for all pages, creating a downward spiral in search performance that requires significant effort to reverse.

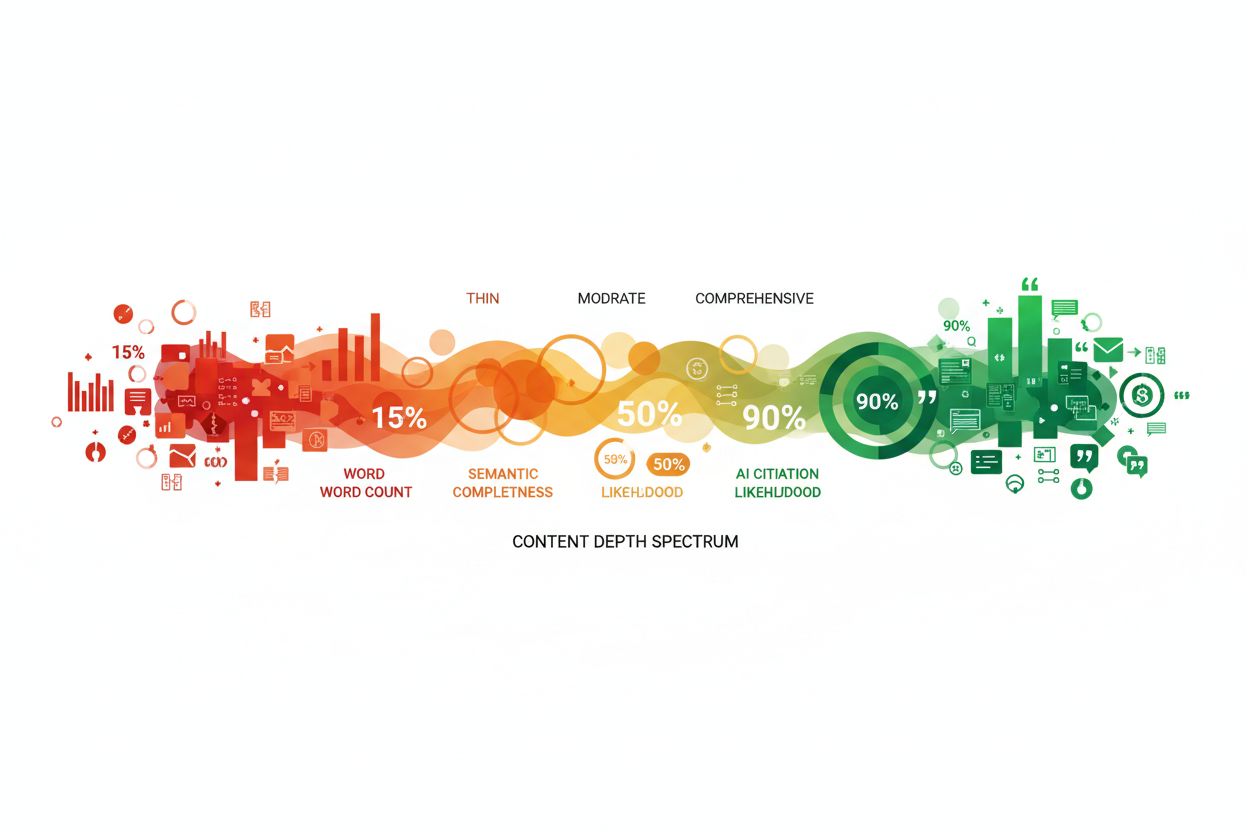

As artificial intelligence systems like ChatGPT, Perplexity, Google AI Overviews, and Claude become increasingly important for information discovery, thin content faces new challenges in the AI era. These systems are trained on high-quality, authoritative sources and are designed to cite and reference pages that provide comprehensive, original, and trustworthy information. Thin content is systematically filtered out during AI training and retrieval processes because it lacks the depth and originality that AI systems prioritize. When AI systems generate responses, they preferentially cite pages that demonstrate clear expertise, provide unique insights, and comprehensively address user queries. Pages with thin content rarely meet these criteria, resulting in reduced visibility in AI-generated answers. For organizations using platforms like AmICited to monitor brand and domain appearances in AI responses, thin content represents a significant competitive disadvantage. While competitors with high-quality, comprehensive content appear frequently in AI citations, thin content pages are overlooked entirely. This creates a new dimension of SEO importance: content must not only rank in traditional search results but also be authoritative and valuable enough to be cited by AI systems. The shift toward AI-powered search means that thin content becomes even more problematic, as it fails both traditional search algorithms and modern AI evaluation systems. Organizations should view thin content remediation as essential for maintaining visibility across all search channels, including emerging AI platforms.

Identifying thin content requires a combination of automated tools and manual evaluation. Begin by reviewing your site from a user’s perspective, reading pages as if you were a visitor searching for information. Ask yourself whether each page answers the user’s question comprehensively, provides original insights, and offers value that competitors don’t provide. Check Google Search Console for manual action penalties under the “Security & Manual Actions” section; any penalties indicate that Google has identified quality issues on your site. Analyze Google Analytics to identify pages with consistently low traffic, high bounce rates, or sudden ranking drops, as these patterns often indicate thin content. Run a comprehensive site audit using tools like Semrush, Ahrefs, or Search Atlas to identify duplicate content, missing meta descriptions, thin pages, and other technical issues. Pay special attention to pages with very low word counts, minimal unique content, or excessive similarity to other pages on your site. Use rank tracking tools to monitor which pages are losing visibility over time; sustained ranking drops often correlate with thin content issues. Evaluate all pages against Google’s E-E-A-T standards: Does the author have clear credentials? Are sources reputable and verified? Is the content well-written and free of errors? Does it provide comprehensive coverage of the topic? Create a spreadsheet documenting pages you’ve reviewed, noting which ones need improvement, which should be redirected, and which should be deleted. Prioritize pages that receive traffic or have backlinks, as these have the most impact on your site’s overall authority and visibility.

Once you’ve identified thin content on your site, you have several options for remediation. The most effective approach is expanding and improving thin pages by adding original insights, relevant data, examples, and comprehensive coverage of the topic. This involves researching what users actually want to know about the topic, identifying gaps in your current content, and filling those gaps with valuable information. Use keyword research tools to identify related questions and topics that users search for, then incorporate answers to those questions into your content. Add visuals like infographics, charts, and images to break up text and provide additional value. Ensure your content is well-structured with clear headings, short paragraphs, and logical flow that makes it easy for users to find information. For pages that can’t be meaningfully improved, implement 301 redirects to send users and search equity to more relevant, higher-quality pages. This preserves any backlinks pointing to the thin page while consolidating ranking signals on your best content. For intentional duplicates, use canonical tags to indicate the preferred version and prevent search engines from treating them as separate pages. Combining thin pages into comprehensive resources is another effective strategy; if you have multiple short articles on related topics, merge them into a single, authoritative guide that covers all aspects comprehensively. Repurposing thin content into new formats—converting articles into infographics, videos, or interactive tools—can add value and reach new audiences. Finally, for pages with no traffic, no backlinks, and no strategic value, deletion may be appropriate, though Google recommends improving content rather than removing it whenever possible. The key is matching your remediation strategy to each page’s specific situation and potential value.

The definition and detection of thin content continues to evolve as search technology advances and user expectations change. Google’s increasing emphasis on E-E-A-T signals suggests that future thin content detection will place even greater weight on demonstrating genuine expertise, original research, and trustworthiness. The rise of AI-generated content has created new challenges, as low-quality AI-generated pages that lack human review and editing are increasingly recognized as thin content. Google’s 2024 updates specifically targeted AI-generated content that provides no unique value, indicating that automation alone is no longer sufficient for content creation. The integration of machine learning into Google’s core ranking systems means that thin content detection will become more sophisticated and nuanced, potentially identifying subtle forms of low-quality content that current algorithms miss. As AI search systems become more prevalent, thin content will face additional pressure because these systems prioritize authoritative, comprehensive sources. Organizations that fail to address thin content will find themselves increasingly invisible not just in traditional search but also in AI-generated answers. The future of content strategy must prioritize original research, unique perspectives, comprehensive coverage, and clear expertise to remain visible across all search channels. Content creators should expect that thin content will become progressively less tolerable as search engines and AI systems continue to raise quality standards. The competitive advantage will increasingly belong to organizations that invest in creating genuinely valuable, original, and authoritative content rather than attempting to game search algorithms with shallow material.

While related, thin content and duplicate content are distinct issues. Thin content refers to pages with little valuable information regardless of originality, while duplicate content involves identical or near-identical material across multiple URLs. A page can be thin without being duplicated, and duplicated content can sometimes be thin. Google treats them differently: duplicate content is filtered algorithmically, while thin content is penalized for lack of quality and user value.

Google's Panda algorithm, launched in February 2011, uses machine learning to classify content quality by comparing ranking signals against human quality ratings. The algorithm evaluates factors like originality, depth, expertise, trustworthiness, and whether content provides substantial value compared to competitors. Panda was incorporated into Google's core algorithm in 2016, meaning thin content detection is now part of ongoing ranking evaluations rather than separate updates.

Yes, thin content can often be improved rather than deleted. Google recommends adding more high-quality content to strengthen thin pages rather than removing them entirely. Improvements include expanding topics with original insights, adding relevant data and examples, updating outdated information, improving structure and readability, and ensuring E-E-A-T signals. Only delete content if it has no traffic, no backlinks, and no strategic value to your site.

Common types include: scraped or plagiarized content copied without attribution, doorway pages created solely for keyword ranking, automatically generated low-quality content, duplicate content across multiple pages, keyword-stuffed pages with unnatural repetition, affiliate pages lacking original value, pages with excessive ads that obscure main content, and content that fails to meet Google's E-E-A-T standards for expertise and trustworthiness.

Thin content is less likely to be cited by AI systems like ChatGPT, Perplexity, and Google AI Overviews because these systems prioritize authoritative, original, and comprehensive sources. Pages with insufficient depth and value are filtered out during AI training and retrieval processes. For platforms like AmICited that monitor brand appearances in AI responses, thin content on your domain reduces visibility and citation likelihood in AI-generated answers.

Thin content typically fails to match or satisfy user search intent. When a user searches for information, they expect comprehensive, relevant answers that address their specific question or need. Thin pages with vague, shallow, or generic information don't meet this expectation, causing high bounce rates and signaling to Google that the page isn't valuable. Aligning content with search intent requires depth, clarity, and direct answers to user questions.

Identify thin content by: reviewing pages from a user's perspective to assess value and clarity, checking Google Search Console for manual action penalties, analyzing Google Analytics for pages with consistently low traffic or sudden ranking drops, running a site audit to detect duplicate or overly similar content, using rank tracking tools to monitor performance changes, and evaluating content against E-E-A-T standards for expertise, authority, and trustworthiness.

While exact statistics vary, Google's Panda update in February 2011 impacted 11.8% of queries, indicating widespread thin content problems at that time. Studies show that sites with large numbers of low-quality pages are more likely to experience ranking penalties. The prevalence of thin content remains significant, particularly among sites using automated content generation, affiliate marketing, or content farms without editorial oversight.

Start tracking how AI chatbots mention your brand across ChatGPT, Perplexity, and other platforms. Get actionable insights to improve your AI presence.

Learn what thin content is, how AI systems detect it, and whether ChatGPT, Perplexity, and Google AI penalize low-quality pages. Expert guide with detection met...

Learn how to enhance thin content for AI systems like ChatGPT and Perplexity. Discover strategies for adding depth, improving content structure, and optimizing ...

Learn how to create content deep enough for AI systems to cite. Discover why semantic completeness matters more than word count for ChatGPT, Perplexity, and Goo...

Cookie Consent

We use cookies to enhance your browsing experience and analyze our traffic. See our privacy policy.