Synthetic Data Training

Learn about synthetic data training for AI models, how it works, benefits for machine learning, challenges like model collapse, and implications for brand repre...

Training data is the dataset used to teach machine learning models how to make predictions, recognize patterns, and generate content by learning from labeled or unlabeled examples. It forms the foundation of model development, directly impacting accuracy, performance, and the model’s ability to generalize to new, unseen data.

Training data is the foundational dataset used to teach machine learning models how to make predictions, recognize patterns, and generate content. It consists of examples or samples that enable algorithms to learn relationships and patterns within information, forming the basis for all machine learning development. Training data can include structured information like spreadsheets and databases, or unstructured data such as images, videos, text, and audio. The quality, diversity, and volume of training data directly determine a model’s accuracy, reliability, and ability to perform effectively on new, unseen data. Without adequate training data, even the most sophisticated algorithms cannot function effectively, making it the cornerstone of successful AI and machine learning projects.

The concept of training data emerged alongside machine learning in the 1950s and 1960s, but its critical importance became widely recognized only in the 2010s as deep learning revolutionized artificial intelligence. Early machine learning projects relied on manually curated, relatively small datasets, often containing thousands of examples. The explosion of digital data and computational power transformed this landscape dramatically. By 2024, according to Stanford’s AI Index Report, nearly 90% of notable AI models came from industry sources, reflecting the scale of resources now required for training data collection and utilization. Modern large language models are trained on datasets containing hundreds of billions to trillions of tokens, several orders of magnitude more than those early datasets. This evolution has made training data management and quality assurance critical business functions, with organizations investing heavily in data infrastructure, labeling tools, and governance frameworks to ensure their models perform reliably.

Training data quality fundamentally determines machine learning model performance, yet many organizations underestimate its importance relative to algorithm selection. Research published on ScienceDirect and industry studies consistently indicate that a smaller set of high-quality training data produces more accurate, reliable, and trustworthy models than a larger dataset of poor quality. The principle of “garbage in, garbage out” applies here: models trained on corrupted, biased, or irrelevant data will produce unreliable outputs regardless of algorithmic sophistication. Data quality encompasses multiple dimensions including accuracy (correctness of labels), completeness (absence of missing values), consistency (uniform formatting and standards), and relevance (alignment with the problem being solved). Organizations implementing rigorous data quality assurance processes report 15-30% improvements in model accuracy compared to those using unvetted data. Additionally, high-quality training data reduces the need for extensive model retraining and fine-tuning, lowering operational costs and accelerating time-to-production for AI applications.
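The quality dimensions above can be turned into simple automated checks. The sketch below is illustrative only: the function name `quality_report`, the record layout (a list of dicts with a `label` field), and the sample rows are assumptions, not part of any particular tool.

```python
from collections import Counter

def quality_report(rows, required_fields, allowed_labels):
    """Summarize simple data-quality signals for a list of dict records."""
    total = len(rows)
    # Completeness: records where every required field is present and non-empty.
    complete = sum(
        all(row.get(f) not in (None, "") for f in required_fields) for row in rows
    )
    # Accuracy proxy: labels that fall outside the agreed label set.
    invalid_labels = sum(row.get("label") not in allowed_labels for row in rows)
    # Consistency: exact duplicate records beyond the first copy.
    counts = Counter(tuple(sorted(row.items())) for row in rows)
    duplicates = sum(c - 1 for c in counts.values())
    return {
        "total": total,
        "complete_fraction": complete / total if total else 0.0,
        "invalid_labels": invalid_labels,
        "duplicates": duplicates,
    }

# Hypothetical sample data for a spam classifier.
rows = [
    {"text": "win a prize now", "label": "spam"},
    {"text": "meeting at 3pm", "label": "ham"},
    {"text": "meeting at 3pm", "label": "ham"},
    {"text": "", "label": "spam"},
    {"text": "see attached", "label": "unknown"},
]
report = quality_report(rows, ["text", "label"], {"spam", "ham"})
```

A report like this only catches mechanical problems. Whether a label is actually correct, or whether the data is relevant to the task, still needs human review.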

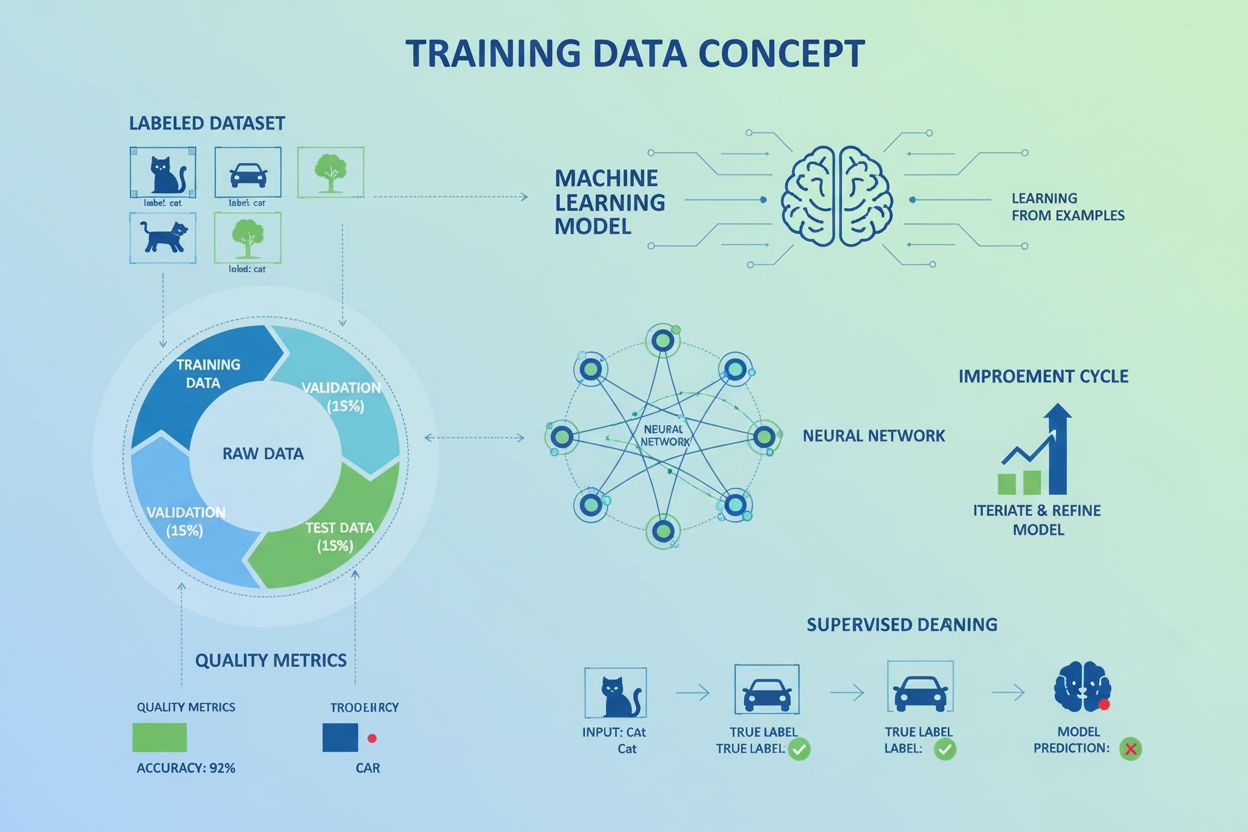

Before training data can be used effectively, it must undergo a comprehensive preparation process that typically consumes 60-80% of a data scientist’s time on machine learning projects. Data collection is the first step, involving gathering relevant examples from diverse sources including public datasets, internal databases, sensors, user interactions, and third-party providers. The collected raw data then enters the data cleaning and transformation phase, where missing values are handled, duplicates are removed, and inconsistencies are corrected. Feature engineering follows, where raw data is transformed into machine-readable formats with relevant features extracted or created. The dataset is then split into three distinct subsets: approximately 70-80% for training, 10-15% for validation, and 10-15% for testing. Data labeling is performed for supervised learning tasks, where human annotators or automated systems assign meaningful tags to examples. Finally, data versioning and documentation ensure reproducibility and traceability throughout the model development lifecycle. This multi-stage pipeline is essential for ensuring that models learn from clean, relevant, and properly structured information.
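As a rough illustration of the cleaning step, the sketch below removes exact duplicates and fills missing numeric values. The function name `clean_records`, the `age` field, and the choice of mean imputation are assumptions made for the example; real pipelines often need different strategies per field.

```python
def clean_records(records, numeric_field):
    """Drop exact duplicate records, then fill missing numeric values with the mean."""
    # Remove duplicates while preserving the original order.
    seen = set()
    unique = []
    for rec in records:
        key = tuple(sorted(rec.items()))
        if key not in seen:
            seen.add(key)
            unique.append(dict(rec))  # copy so the input is not mutated

    # Mean of the observed (non-missing) values for the chosen field.
    observed = [r[numeric_field] for r in unique if r.get(numeric_field) is not None]
    mean = sum(observed) / len(observed) if observed else 0.0

    # Fill gaps with the mean (one simple imputation choice among many).
    for r in unique:
        if r.get(numeric_field) is None:
            r[numeric_field] = mean
    return unique

# Hypothetical raw records: one duplicate and one missing value.
raw = [
    {"id": 1, "age": 30},
    {"id": 2, "age": None},
    {"id": 1, "age": 30},
    {"id": 3, "age": 50},
]
cleaned = clean_records(raw, "age")
```

Here `cleaned` holds three records, and the missing `age` for `id` 2 is replaced by 40.0, the mean of the two observed values.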

| Aspect | Supervised Learning | Unsupervised Learning | Semi-Supervised Learning |
|---|---|---|---|
| Training Data Type | Labeled data with features and target outputs | Unlabeled data without predefined outputs | Mix of labeled and unlabeled data |
| Data Preparation | Requires human annotation and labeling | Minimal preprocessing; raw data acceptable | Moderate labeling effort; leverages unlabeled data |
| Model Objective | Learn specific patterns to predict outcomes | Discover inherent structure and patterns | Improve predictions using limited labeled data |
| Common Applications | Classification, regression, spam detection | Clustering, anomaly detection, segmentation | Medical imaging, semi-automated labeling |
| Data Volume Requirements | Moderate to large (thousands to millions) | Large (millions to billions of examples) | Smaller labeled set + large unlabeled set |
| Quality Sensitivity | Very high; label accuracy critical | Moderate; pattern discovery more forgiving | High for labeled portion; moderate for unlabeled |
| Example Use Case | Email spam detection with labeled emails | Customer segmentation without predefined groups | Disease diagnosis with limited expert labels |

Supervised learning represents the most common approach to machine learning and relies entirely on labeled training data where each example includes both input features and the correct output or target value. In this paradigm, human annotators or domain experts assign meaningful labels to raw data, teaching the model the relationship between inputs and desired outputs. For instance, in medical imaging applications, radiologists label X-ray images as “normal,” “suspicious,” or “malignant,” enabling models to learn diagnostic patterns. The labeling process is often the most time-consuming and expensive component of supervised learning projects, particularly when domain expertise is required. Research indicates that one hour of video data can require up to 800 hours of human annotation, creating significant bottlenecks in model development. To address this challenge, organizations increasingly employ human-in-the-loop approaches where automated systems pre-label data and humans review and correct predictions, dramatically reducing annotation time while maintaining quality. Supervised learning excels at tasks with clear, measurable outcomes, making it ideal for applications like fraud detection, sentiment analysis, and object recognition where training data can be precisely labeled.
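One way to picture the human-in-the-loop workflow is a confidence threshold: the automated system keeps pre-labels it is confident about and sends the rest to a human reviewer. The sketch below assumes predictions arrive as `(item_id, label, confidence)` tuples and uses a hypothetical threshold of 0.9. Both are illustrative choices, not a standard.

```python
def route_for_review(predictions, threshold=0.9):
    """Split pre-labeled items into auto-accepted and human-review queues."""
    auto_accepted, needs_review = [], []
    for item_id, label, confidence in predictions:
        if confidence >= threshold:
            # Confident pre-label: accept without human review.
            auto_accepted.append((item_id, label))
        else:
            # Uncertain pre-label: a human annotator confirms or corrects it.
            needs_review.append((item_id, label))
    return auto_accepted, needs_review

# Hypothetical model output for four X-ray images.
predictions = [
    ("img1", "normal", 0.98),
    ("img2", "suspicious", 0.62),
    ("img3", "malignant", 0.91),
    ("img4", "normal", 0.55),
]
auto_accepted, needs_review = route_for_review(predictions)
```

In high-stakes domains such as medical imaging, teams may still sample the auto-accepted queue for spot checks, since a confident model can be confidently wrong.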

Unsupervised learning takes a fundamentally different approach to training data, working with unlabeled datasets to discover inherent patterns, structures, and relationships without human guidance. In this approach, the model independently identifies clusters, associations, or anomalies within the data based on statistical properties and similarities. For example, an e-commerce platform might use unsupervised learning on customer purchase history to automatically segment customers into groups like “high-value frequent buyers,” “occasional discount shoppers,” and “new customers,” without predefined categories. Unsupervised learning is particularly valuable when the desired outcomes are unknown or when exploring data to understand its structure before applying supervised methods. However, unsupervised models cannot predict specific outcomes and may discover patterns that don’t align with business objectives. The training data for unsupervised learning requires less preprocessing than supervised data since labeling is unnecessary, but the data must still be clean and representative. Clustering algorithms, dimensionality reduction techniques, and anomaly detection systems all rely on unsupervised training data to function effectively.
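The customer segmentation example can be sketched with k-means, one common clustering algorithm. This is a minimal pure-Python version for illustration. The customer features (orders per year, average order value) and the starting centroids are made up, and production code would normally scale the features and use an established library.

```python
def kmeans(points, centroids, iterations=10):
    """Minimal k-means: assign each point to its nearest centroid, then re-center."""
    clusters = [[] for _ in centroids]
    for _ in range(iterations):
        # Assignment step: group points by nearest centroid (squared distance).
        clusters = [[] for _ in centroids]
        for p in points:
            distances = [sum((a - b) ** 2 for a, b in zip(p, c)) for c in centroids]
            clusters[distances.index(min(distances))].append(p)
        # Update step: move each centroid to the mean of its cluster.
        centroids = [
            tuple(sum(dim) / len(cluster) for dim in zip(*cluster)) if cluster else c
            for cluster, c in zip(clusters, centroids)
        ]
    return centroids, clusters

# Hypothetical customers: (orders per year, average order value).
customers = [
    (2, 20.0), (3, 25.0), (1, 15.0),        # occasional shoppers
    (40, 200.0), (42, 220.0), (38, 180.0),  # frequent, high-value buyers
]
centroids, clusters = kmeans(customers, centroids=[(2, 20.0), (40, 200.0)])
```

No labels are supplied here. The two groups emerge from the data alone, and it is up to a person to interpret them as "occasional" and "high-value" segments.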

A fundamental principle in machine learning is the proper division of training data into distinct subsets to ensure models generalize effectively to new data. The training set (typically 70-80% of data) is used to fit the model by adjusting its parameters and weights through iterative optimization algorithms like gradient descent. The validation set (10-15% of data) serves a different purpose: it evaluates model performance during training and guides hyperparameter tuning without being used to fit the model’s parameters directly. The test set (10-15% of data) provides an unbiased final evaluation on completely unseen data, simulating real-world performance. This three-way split is critical because evaluating a model on the same data it was trained on hides overfitting, where models memorize training data rather than learning generalizable patterns, and produces overly optimistic performance estimates. Cross-validation techniques, such as k-fold cross-validation, further enhance this approach by rotating which data serves as training versus validation, providing more robust performance estimates. The optimal split ratio depends on dataset size, model complexity, and available computational resources, but a 70-15-15 or 80-10-10 split is a common starting point for most applications.
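The split and the k-fold rotation described above can be sketched in a few lines. The helper names `three_way_split` and `k_fold_indices`, the 80/10/10 ratio, and the fixed seed are illustrative assumptions. Libraries such as scikit-learn provide tested equivalents.

```python
import random

def three_way_split(items, train=0.8, val=0.1, seed=0):
    """Shuffle once, then cut into train / validation / test subsets."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split reproducible
    n_train = int(len(shuffled) * train)
    n_val = int(len(shuffled) * val)
    return (
        shuffled[:n_train],
        shuffled[n_train:n_train + n_val],
        shuffled[n_train + n_val:],  # whatever remains becomes the test set
    )

def k_fold_indices(n, k):
    """Yield (train_idx, val_idx) pairs; every index is used for validation once."""
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n))
        yield train_idx, val_idx
        start += size

train_set, val_set, test_set = three_way_split(range(100))
folds = list(k_fold_indices(10, 5))
```

With 100 items this gives 80 training, 10 validation, and 10 test examples, with no item appearing in more than one subset. The five folds each hold out two of the ten indices in turn.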

Training data is the primary source of bias in machine learning models, as algorithms learn and amplify patterns present in their training examples. If training data underrepresents certain demographic groups, contains historical biases, or reflects systemic inequalities, the resulting model will perpetuate and potentially amplify these biases in its predictions. Research from MIT and NIST demonstrates that AI bias stems not only from biased data but also from how data is collected, labeled, and selected. For example, facial recognition systems trained predominantly on lighter-skinned individuals show significantly higher error rates for darker-skinned faces, directly reflecting training data composition. Addressing bias requires deliberate strategies including diverse data collection to ensure representation across demographics, bias audits to identify problematic patterns, and debiasing techniques to remove or mitigate identified biases. Organizations building trustworthy AI systems invest heavily in training data curation, ensuring datasets reflect the diversity of real-world populations and use cases. This commitment to fair training data is not merely ethical—it’s increasingly a business and legal requirement as regulations like the EU AI Act mandate fairness and non-discrimination in AI systems.
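A basic bias audit compares error rates across groups. The sketch below assumes evaluation records of the form `(group, true_label, predicted_label)`. The group names and the data are invented for illustration, and real audits typically look at several metrics rather than a single error rate.

```python
from collections import defaultdict

def error_rate_by_group(records):
    """Compute the share of wrong predictions separately for each group."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, y_true, y_pred in records:
        totals[group] += 1
        errors[group] += int(y_true != y_pred)
    return {group: errors[group] / totals[group] for group in totals}

# Hypothetical evaluation results for two demographic groups.
records = [
    ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 1), ("group_a", 0, 1),
    ("group_b", 1, 0), ("group_b", 0, 0), ("group_b", 1, 0), ("group_b", 0, 0),
]
rates = error_rate_by_group(records)
```

In this made-up data the model is wrong on 25% of `group_a` examples and 50% of `group_b` examples, the kind of gap that would prompt a closer look at how each group is represented in the training set.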

AI systems like ChatGPT, Claude, and Perplexity are built on large language models trained on massive datasets containing hundreds of billions to trillions of tokens from diverse sources including books, websites, academic papers, and other text. The composition and quality of this training data directly determines the model’s knowledge, capabilities, limitations, and potential biases. Training data cutoff dates (e.g., the April 2024 knowledge cutoff cited for ChatGPT; exact dates vary by model version) represent a fundamental limitation: without live retrieval, models cannot know about events or information beyond their training data. The sources included in training data influence how models respond to queries and what information they prioritize. For instance, if training data contains more English-language content than other languages, the model will perform better in English. Understanding training data composition is essential for assessing model reliability and identifying potential gaps or biases. AmICited monitors how AI systems like ChatGPT, Perplexity, and Google AI Overviews reference and cite information, tracking whether training data influences their responses and how your domain appears in AI-generated content. This monitoring capability helps organizations understand their visibility in AI systems and assess how training data shapes AI recommendations.

The machine learning field is experiencing a significant shift in training data strategy, moving away from the “bigger is better” mentality toward more sophisticated, quality-focused approaches. Synthetic data generation represents one major innovation, where organizations use AI itself to create artificial training examples that augment or replace real-world data. This approach addresses data scarcity, privacy concerns, and cost challenges while enabling controlled experimentation. Another trend is the emphasis on smaller, higher-quality datasets tailored to specific tasks or domains. Rather than training models on billions of generic examples, organizations are building curated datasets of thousands or millions of high-quality examples relevant to their specific use case. For example, legal AI systems trained exclusively on legal documents and case law outperform general-purpose models on legal tasks. Data-centric AI represents a philosophical shift where practitioners focus as much on data quality and curation as on algorithm development. Automated data cleaning and preprocessing using AI itself is accelerating this trend, with newer algorithms capable of removing low-quality text, detecting duplicates, and filtering irrelevant content at scale. These emerging approaches recognize that in the era of large models, training data quality, relevance, and diversity matter more than ever for achieving superior model performance.
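The automated filtering mentioned above can be approximated with simple rules. The sketch below drops very short documents and near-verbatim duplicates (same text after lowercasing and whitespace normalization). The function names, the five-word minimum, and the sample documents are assumptions for illustration. Large-scale pipelines typically add fuzzy deduplication and learned quality classifiers.

```python
import re

def normalize(text):
    """Lowercase and collapse whitespace so trivially different copies match."""
    return re.sub(r"\s+", " ", text).strip().lower()

def filter_corpus(docs, min_words=5):
    """Keep documents that are long enough and not duplicates of earlier ones."""
    seen = set()
    kept = []
    for doc in docs:
        norm = normalize(doc)
        if len(norm.split()) < min_words:
            continue  # too short to be useful training text
        if norm in seen:
            continue  # duplicate of a document already kept
        seen.add(norm)
        kept.append(doc)
    return kept

# Hypothetical legal-domain snippets with a duplicate and a junk entry.
docs = [
    "The court held that the contract was void.",
    "the court  held that the contract was VOID.",
    "Click here",
    "A statute of limitations bars claims filed too late.",
]
kept = filter_corpus(docs)
```

Only the first and last documents survive: the second is a duplicate after normalization, and the third is too short.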

The role and importance of training data will continue to evolve as AI systems become more sophisticated and integrated into critical business and societal functions. Foundation models trained on massive, diverse datasets are becoming the baseline for AI development, with organizations fine-tuning these models on smaller, task-specific training datasets rather than training from scratch. This shift reduces the need for enormous training datasets while increasing the importance of high-quality fine-tuning data. Regulatory frameworks like the EU AI Act and emerging data governance standards will increasingly mandate transparency about training data composition, sources, and potential biases, making training data documentation and auditing essential compliance activities. AI monitoring and attribution will become increasingly important as organizations track how their content appears in AI training data and how AI systems cite or reference their information. Platforms like AmICited represent this emerging category, enabling organizations to monitor their brand presence across AI systems and understand how training data influences AI responses. The convergence of synthetic data generation, automated data quality tools, and human-in-the-loop workflows will make training data management more efficient and scalable. Finally, as AI systems become more powerful and consequential, the ethical and fairness implications of training data will receive greater scrutiny, driving investment in bias detection, fairness audits, and responsible data practices across the industry.

Training data is used to fit and teach the model by adjusting its parameters. Validation data evaluates the model during training and helps fine-tune hyperparameters without influencing the final model. Test data provides an unbiased final evaluation on completely unseen data to assess real-world performance. Typically, datasets are split 70-80% training, 10-15% validation, and 10-15% testing to ensure proper model generalization.

While larger datasets can improve model performance, high-quality training data is critical for accuracy and reliability. Poor-quality data introduces noise, bias, and inconsistencies that lead to inaccurate predictions, following the principle of 'garbage in, garbage out.' Research shows that well-curated, smaller datasets often outperform larger datasets with quality issues, making data quality a primary concern for machine learning success.

Training data directly shapes model behavior and can perpetuate or amplify biases present in the data. If training data underrepresents certain demographics or contains historical biases, the model will learn and reproduce those biases in its predictions. Ensuring diverse, representative training data and removing biased examples is essential for building fair, trustworthy AI systems that perform equitably across all user groups.

Data labeling, or human annotation, involves adding meaningful tags or labels to raw data so models can learn from it. For supervised learning, accurate labels are essential because they teach the model the correct patterns and relationships. Domain experts often perform labeling to ensure accuracy, though this process is time-consuming. Automated labeling tools and human-in-the-loop approaches are increasingly used to scale labeling efficiently.

Supervised learning uses labeled training data where each example has a corresponding correct output, allowing the model to learn specific patterns and make predictions. Unsupervised learning uses unlabeled data, allowing the model to discover patterns independently without predefined outcomes. Semi-supervised learning combines both approaches, using a mix of labeled and unlabeled data to improve model performance when labeled data is scarce.

Overfitting occurs when a model learns the training data too well, including its noise and quirks, rather than learning generalizable patterns. This happens when training data is too small, too specific, or when the model is too complex. The model performs well on training data but fails on new data. Proper data splitting, cross-validation, and using diverse training data help prevent overfitting and ensure models generalize effectively.
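A common symptom is a large gap between training and validation accuracy. The check below is a minimal sketch: the function name `looks_overfit` and the 0.1 gap threshold are arbitrary assumptions, and an appropriate threshold depends on the task.

```python
def looks_overfit(train_accuracy, val_accuracy, max_gap=0.1):
    """Flag a model whose training accuracy far exceeds its validation accuracy."""
    gap = train_accuracy - val_accuracy
    return gap > max_gap

# Hypothetical results: one model memorizes its training data, the other generalizes.
memorizing_model = looks_overfit(train_accuracy=0.99, val_accuracy=0.72)
generalizing_model = looks_overfit(train_accuracy=0.90, val_accuracy=0.88)
```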

Generally, larger training datasets improve model performance by providing more examples for the model to learn from. However, the relationship is not linear—diminishing returns occur as datasets grow. Research indicates that doubling training data typically improves accuracy by 2-5%, depending on the task. The optimal dataset size depends on model complexity, task difficulty, and data quality, making both quantity and quality essential considerations.

Training data determines the knowledge, capabilities, and limitations of AI systems. For platforms like ChatGPT, Perplexity, and Claude, the training data cutoff date limits their knowledge of recent events. Understanding training data sources helps users assess model reliability and potential biases. AmICited monitors how these AI systems cite and reference information, tracking whether training data influences their responses and recommendations across different domains.
