What is Vector Search and How Does It Work?

Learn how vector search uses machine learning embeddings to find similar items based on meaning rather than exact keywords. Understand vector databases, ANN alg...

Vector search is a method of finding similar items in a dataset by representing data as mathematical vectors and comparing them using distance metrics like cosine similarity or Euclidean distance. This approach enables semantic understanding beyond keyword matching, allowing systems to discover relationships and similarities based on meaning rather than exact text matches.

Vector search is a method of finding similar items in a dataset by representing data as mathematical vectors and comparing them using distance metrics to measure semantic similarity. Unlike traditional keyword-based search that relies on exact text matches, vector search understands the meaning and context behind data by converting it into high-dimensional numerical representations called vector embeddings. This approach enables systems to discover relationships and similarities based on semantic content rather than surface-level features, making it particularly powerful for applications requiring contextual understanding. Vector search has become foundational to modern AI systems, enabling semantic search, recommendation engines, anomaly detection, and retrieval-augmented generation (RAG) across platforms like ChatGPT, Perplexity, Google AI Overviews, and Claude.

At its core, vector search transforms data into numerical representations where proximity in space indicates semantic similarity. Each data point, whether text, image, or audio, is converted into a vector: an array of numbers that encodes its features or meaning. For example, the word “restaurant” might be represented as [0.2, -0.5, 0.8, 0.1], where the numbers together capture aspects of the word’s meaning (real embeddings have far more dimensions, and individual dimensions are rarely interpretable on their own). The fundamental principle is that semantically similar items have vectors positioned close together in this high-dimensional space, while dissimilar items are far apart. This structure lets computers compare concepts by meaning rather than by exact keyword matches, so a search for “best dining establishments” can return results for “top-rated restaurants” even without any word overlap.
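As a rough illustration of "close together means similar", the sketch below compares three toy vectors. The vector for "restaurant" is the one from the example above; the vectors for "diner" and "spreadsheet" are made-up values chosen for illustration, not output from any real model.

```python
import math

# Toy 4-dimensional embeddings. Only "restaurant" comes from the text above;
# the other two vectors are hypothetical values invented for this sketch.
vectors = {
    "restaurant": [0.2, -0.5, 0.8, 0.1],
    "diner": [0.25, -0.45, 0.75, 0.15],
    "spreadsheet": [-0.7, 0.6, 0.1, -0.3],
}


def cosine(a, b):
    """Cosine of the angle between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def nearest(word):
    """Return the other words, ordered from most to least similar."""
    others = [w for w in vectors if w != word]
    return sorted(others, key=lambda w: cosine(vectors[word], vectors[w]), reverse=True)


ranking = nearest("restaurant")
print(ranking)
```

With these made-up numbers, "diner" ranks ahead of "spreadsheet" because its vector points in nearly the same direction as "restaurant".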

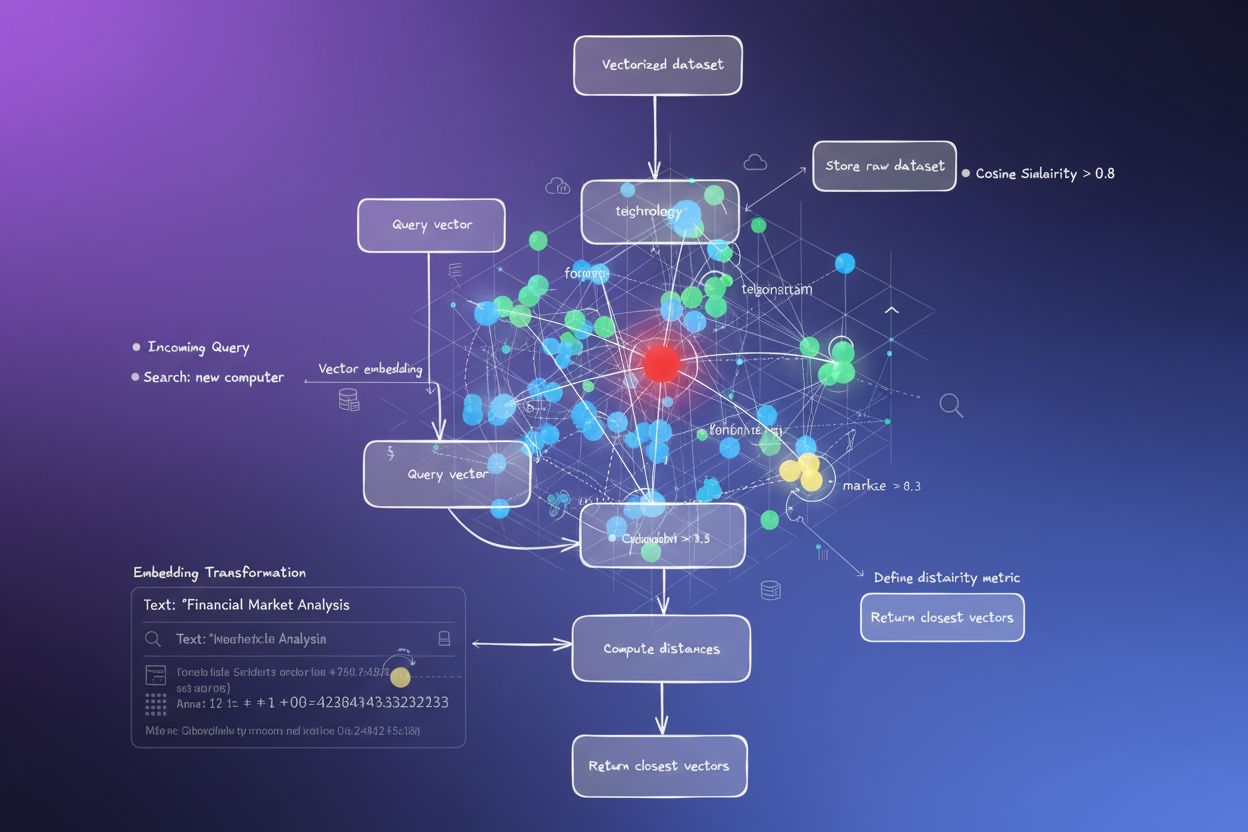

The process of converting data into vectors is called embedding, performed by machine learning models trained on large datasets. These models learn to map similar concepts to nearby locations in vector space through exposure to billions of examples. Common embedding models include Word2Vec, which learns word relationships from context; BERT (Bidirectional Encoder Representations from Transformers), which captures contextual meaning; and CLIP (Contrastive Language-Image Pre-training), which handles multimodal data. The resulting embeddings typically range from 100 to 1,000+ dimensions, creating a rich mathematical representation of semantic relationships. When a user performs a search, their query is converted into a vector using the same embedding model, and the system then calculates distances between the query vector and all stored vectors to identify the most similar items.
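The key point in the paragraph above is that the query and the stored items go through the same embedding function. The sketch below stands in for a trained model with a tiny hand-written lookup table (`WORD_VECTORS`, entirely hypothetical) and averages word vectors to embed a phrase. Real models such as Word2Vec or BERT learn these values from data and use far more dimensions.

```python
import math

# Hypothetical 3-dimensional word vectors standing in for a trained model.
WORD_VECTORS = {
    "top": [0.1, 0.9, 0.0],
    "best": [0.2, 0.8, 0.1],
    "rated": [0.0, 0.7, 0.1],
    "restaurants": [0.9, 0.1, 0.0],
    "dining": [0.8, 0.2, 0.1],
    "establishments": [0.7, 0.1, 0.2],
    "tax": [0.0, 0.1, 0.9],
    "forms": [0.1, 0.0, 0.8],
}
DIM = 3


def embed(text):
    """Average the vectors of known words; unknown words are skipped."""
    words = [w for w in text.lower().split() if w in WORD_VECTORS]
    if not words:
        return [0.0] * DIM
    return [sum(WORD_VECTORS[w][i] for w in words) / len(words) for i in range(DIM)]


def cosine(a, b):
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return sum(x * y for x, y in zip(a, b)) / (norm_a * norm_b)


documents = ["top rated restaurants", "tax forms"]
doc_vectors = {doc: embed(doc) for doc in documents}

# The query is embedded with the same function as the documents.
query_vector = embed("best dining establishments")
best = max(documents, key=lambda doc: cosine(query_vector, doc_vectors[doc]))
print(best)
```

The query shares no words with "top rated restaurants", yet that document wins because the illustrative word vectors place related words near each other.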

Vector search relies on distance metrics to quantify how similar two vectors are. The three primary metrics are cosine similarity, Euclidean distance, and dot product similarity, each with distinct mathematical properties and use cases. Cosine similarity measures the angle between two vectors and ranges from -1 to 1: 1 means the vectors point in the same direction (maximum similarity), 0 means they are orthogonal (no relationship), and -1 means they point in opposite directions. This metric is particularly valuable for NLP applications because it compares semantic direction regardless of vector magnitude, which makes it well suited to comparing documents of different lengths. Euclidean distance is the straight-line distance between two vectors in multidimensional space, so it reflects both magnitude and direction; smaller values mean greater similarity. Because it is sensitive to scale, it is useful when vector magnitude carries meaningful information, such as in recommendation systems where purchase frequency matters.
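The three metrics can be written in a few lines each. The sketch below uses plain Python lists and arbitrary example vectors to show how the metrics respond differently to a change in magnitude alone.

```python
import math


def dot(a, b):
    """Dot product: grows with both alignment and magnitude."""
    return sum(x * y for x, y in zip(a, b))


def euclidean(a, b):
    """Straight-line distance: 0 for identical vectors, larger means less similar."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def cosine(a, b):
    """Cosine of the angle between the vectors, in the range [-1, 1]."""
    return dot(a, b) / (math.sqrt(dot(a, a)) * math.sqrt(dot(b, b)))


a = [1.0, 2.0, 3.0]
b = [2.0, 4.0, 6.0]     # same direction as a, twice the magnitude
c = [-1.0, -2.0, -3.0]  # opposite direction to a

print("cosine(a, b)    =", cosine(a, b))
print("euclidean(a, b) =", euclidean(a, b))
print("dot(a, b)       =", dot(a, b))
print("cosine(a, c)    =", cosine(a, c))
```

Here `a` and `b` point the same way, so cosine similarity treats them as maximally similar, while Euclidean distance and dot product both register the difference in length.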

Dot product similarity combines aspects of both metrics, accounting for magnitude and direction while being cheap to compute. Many large language models use dot product during training, which makes it the appropriate choice for embeddings from those models. Selecting the right distance metric matters: the usual guidance is to use the same metric the embedding model was trained with. For instance, the all-MiniLM-L6-v2 model was trained using cosine similarity, so using cosine similarity in your index should give the most accurate results. Organizations implementing vector search should match the metric to their embedding model and use case to get both accuracy and performance.
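One detail worth knowing when choosing a metric: if vectors are normalized to unit length, dot product equals cosine similarity, and squared Euclidean distance equals 2 − 2·cosine, so all three produce the same ranking. The sketch below checks this on arbitrary example vectors. It says nothing about how any particular model was trained.

```python
import math


def normalize(v):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


# Arbitrary example vectors, normalized to unit length.
query = normalize([0.3, 0.9, 0.1])
docs = [normalize(v) for v in ([0.2, 0.8, 0.0], [0.9, 0.1, 0.3], [-0.5, 0.4, 0.7])]

# Rank by descending dot product (equal to cosine for unit vectors)...
by_dot = sorted(range(len(docs)), key=lambda i: -dot(query, docs[i]))
# ...and by ascending Euclidean distance.
by_euclidean = sorted(range(len(docs)), key=lambda i: euclidean(query, docs[i]))

print(by_dot, by_euclidean)
for d in docs:
    # For unit vectors: ||q - d||^2 == 2 - 2 * (q . d)
    print(round(euclidean(query, d) ** 2, 6), round(2 - 2 * dot(query, d), 6))
```

For unnormalized vectors the rankings can differ, which is why matching the metric to the embedding model matters.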

| Aspect | Vector Search | Keyword Search | Hybrid Search |
|---|---|---|---|
| Matching Method | Semantic similarity based on meaning | Exact word or phrase matching | Combines both semantic and keyword matching |
| Query Understanding | Understands intent and context | Requires exact keywords present | Leverages both approaches for comprehensive results |
| Synonym Handling | Automatically finds synonyms and related terms | Misses synonyms unless explicitly indexed | Captures synonyms through both methods |
| Performance on Vague Queries | Excellent: understands intent | Poor: requires precise keywords | Very good: covers both interpretations |
| Computational Cost | Higher: requires embedding and similarity calculations | Lower: simple string matching | Moderate: runs both searches in parallel |
| Scalability | Requires specialized vector databases | Works with traditional databases | Requires hybrid-capable systems |
| Use Cases | Semantic search, recommendations, RAG, anomaly detection | Exact phrase search, structured data | Enterprise search, AI monitoring, brand tracking |
| Example | Searching “healthy dinner ideas” finds “nutritious meal prep” | Only finds results with exact words “healthy” and “dinner” | Finds both exact matches and semantically related content |
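The table does not say how a hybrid system merges its two result lists. One widely used option is reciprocal rank fusion (RRF), sketched below as an illustration rather than as the method any specific product uses. The document IDs are hypothetical, and `k=60` is a conventional default for RRF that should be treated as tunable.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Merge ranked lists: each list contributes 1 / (k + rank) per document."""
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=scores.get, reverse=True)


# Hypothetical results from the two retrieval paths, best match first.
keyword_hits = ["doc_a", "doc_b", "doc_c"]
vector_hits = ["doc_d", "doc_a", "doc_b"]

fused = reciprocal_rank_fusion([keyword_hits, vector_hits])
print(fused)
```

Documents that appear in both lists accumulate score from each, so they rise above documents found by only one method.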

Implementing vector search involves several interconnected steps that transform raw data into searchable semantic representations. The first step is data ingestion and preprocessing, where raw documents, images, or other data are cleaned and normalized. Next comes vector transformation, where an embedding model converts each data item into a numerical vector, typically ranging from 100 to 1,000+ dimensions. These vectors are then stored in a vector database or index structure optimized for high-dimensional data. When a search query arrives, it undergoes the same embedding process to create a query vector. The system then uses distance metrics to calculate similarity scores between the query vector and all stored vectors, ranking results by their proximity to the query.
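The steps above can be sketched end to end in a few dozen lines. Everything here is a simplified stand-in: `embed` is a hypothetical hashed bag-of-words function that captures word overlap only (not meaning), `TinyVectorIndex` is an in-memory list rather than a vector database, and the search compares the query against every stored vector.

```python
import math
import zlib

DIM = 64  # illustrative only; the text above cites 100 to 1,000+ dimensions


def preprocess(text):
    """Step 1: clean and normalize by lowercasing and stripping punctuation."""
    return "".join(ch.lower() if ch.isalnum() else " " for ch in text).split()


def embed(text):
    """Step 2: hypothetical stand-in for an embedding model (hashed bag-of-words)."""
    vec = [0.0] * DIM
    for token in preprocess(text):
        vec[zlib.crc32(token.encode("utf-8")) % DIM] += 1.0
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else vec


class TinyVectorIndex:
    """Step 3: store vectors. Steps 4 and 5: embed the query and rank by similarity."""

    def __init__(self):
        self.items = []

    def add(self, doc_id, text):
        self.items.append((doc_id, embed(text)))

    def search(self, query, top_k=3):
        q = embed(query)
        # Vectors are unit length, so the dot product is the cosine similarity.
        scored = [(sum(a * b for a, b in zip(q, v)), doc_id) for doc_id, v in self.items]
        scored.sort(reverse=True)
        return [(doc_id, round(score, 3)) for score, doc_id in scored[:top_k]]


index = TinyVectorIndex()
index.add("doc1", "Vector databases store embeddings")
index.add("doc2", "How to bake sourdough bread")
index.add("doc3", "Approximate nearest neighbor search over embeddings")

print(index.search("store embeddings in a vector database"))
```

Swapping `embed` for a real embedding model and `TinyVectorIndex` for a vector database keeps the same overall shape: the same function embeds documents and queries, and results are ranked by similarity score.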

To make this process efficient at scale, systems employ Approximate Nearest Neighbor (ANN) algorithms like HNSW (Hierarchical Navigable Small World), IVF (Inverted File Index), or ScaNN (Scalable Nearest Neighbors). These algorithms trade a small amount of accuracy for speed: instead of comparing the query against every stored vector, they examine only a promising subset, enabling searches across millions or billions of vectors in milliseconds rather than seconds. HNSW, for example, organizes vectors in a multi-layered graph structure where higher layers contain long-range connections for fast traversal, while lower layers contain short-range connections for precision. This hierarchical approach reduces search cost from linear O(n) to roughly logarithmic O(log n) in practice, making large-scale vector search practical. The choice of algorithm depends on factors like dataset size, query volume, latency requirements, and available computational resources.
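The core move behind graph-based ANN search is a greedy walk: start somewhere, hop to whichever neighbor is closest to the query, and stop when no neighbor improves. The sketch below shows that walk on a single layer over a small 2D grid. It is not HNSW itself, which adds multiple layers, a candidate list, and an incremental graph build. The graph here is built by brute force purely to keep the example short.

```python
import math


def dist(a, b):
    return math.hypot(a[0] - b[0], a[1] - b[1])


def build_knn_graph(points, k=4):
    """Link every point to its k nearest neighbours (brute force, for illustration)."""
    graph = {}
    for i, p in enumerate(points):
        others = sorted((j for j in range(len(points)) if j != i),
                        key=lambda j: dist(p, points[j]))
        graph[i] = others[:k]
    return graph


def greedy_search(points, graph, query, entry=0):
    """Hop to the neighbour closest to the query until no neighbour is closer."""
    current = entry
    current_dist = dist(points[current], query)
    hops = 0
    while True:
        best = min(graph[current], key=lambda j: dist(points[j], query))
        best_dist = dist(points[best], query)
        if best_dist >= current_dist:
            return current, hops
        current, current_dist = best, best_dist
        hops += 1


# A 10 x 10 grid of points stands in for stored vectors.
points = [(float(x), float(y)) for x in range(10) for y in range(10)]
graph = build_knn_graph(points)

query = (6.2, 2.7)
found, hops = greedy_search(points, graph, query)
exact = min(range(len(points)), key=lambda j: dist(points[j], query))
print(points[found], points[exact], hops)
```

On this regular grid the greedy walk reaches the true nearest point. On real, irregular data it can stop at a local optimum, which is the "approximate" in ANN and the reason HNSW layers long-range links on top.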

Vector search has become essential for AI monitoring platforms like AmICited that track brand mentions across AI systems. Traditional keyword-based monitoring would miss paraphrased mentions, contextual references, and semantic variations of brand names or domain URLs. Vector search enables these platforms to detect when your brand is mentioned in AI-generated responses even when the exact wording differs. For example, if your domain is “amicited.com,” vector search can identify mentions of “AI prompt monitoring platform” or “brand visibility in generative AI” as contextually related to your business, even without explicit URL mentions. This semantic understanding is crucial for comprehensive AI citation tracking across ChatGPT, Perplexity, Google AI Overviews, and Claude.

The market for vector search technology is experiencing explosive growth, reflecting enterprise recognition of its value. According to market research, the vector database market was valued at $1.97 billion in 2024 and is projected to reach $10.60 billion by 2032, growing at a compound annual growth rate (CAGR) of 23.38%. Additionally, Databricks reported 186% growth in vector database adoption in just the first year following their vector search public preview in December 2023. This rapid adoption demonstrates that enterprises increasingly recognize vector search as critical infrastructure for AI applications. For organizations monitoring their presence in AI systems, vector search provides the semantic understanding necessary to capture all meaningful mentions, not just exact keyword matches.

Vector search performance at scale depends critically on indexing techniques that balance speed, accuracy, and memory usage. HNSW (Hierarchical Navigable Small World) has emerged as one of the most popular approaches, organizing vectors in a multi-layered graph where each successive layer down contains shorter-range connections. A search starts at the top layer, using long-range connections for rapid traversal, then descends through layers with increasingly fine-grained connections. HNSW is reported to reach recall above 99% with sub-millisecond query latencies, though actual results depend on the dataset, hardware, and index parameters. The trade-off is memory: benchmarks show that indexing 1 million vectors with HNSW can require 0.5GB to 5GB depending on parameters, making memory optimization important for large-scale deployments.
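The layered structure comes from how each vector is assigned a top layer at insert time. In the original HNSW design, the level is drawn as floor(−ln(u) · mL) with u uniform in (0, 1] and mL commonly set to 1/ln(M), where M is the number of links per node. The sketch below simulates only that assignment. The value `M = 16` and the sample size are arbitrary choices for illustration.

```python
import math
import random
from collections import Counter


def assign_level(m, rng):
    """Draw the top layer for a new vector: floor(-ln(u) * mL), with mL = 1 / ln(M)."""
    m_l = 1.0 / math.log(m)
    u = 1.0 - rng.random()  # shift [0, 1) to (0, 1] so log(0) cannot occur
    return int(-math.log(u) * m_l)


M = 16                  # links per node; an illustrative choice
rng = random.Random(0)  # fixed seed so the run is repeatable
counts = Counter(assign_level(M, rng) for _ in range(100_000))

for level in sorted(counts):
    print(f"layer {level}: {counts[level]} vectors")
```

Each layer holds roughly 1/M as many vectors as the one below it, so the top layers are sparse and suit coarse, long-range hops, while layer 0 contains every vector.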

IVF (Inverted File Index) offers an alternative approach by clustering vectors and indexing them by cluster centroids. This technique reduces search space by focusing on relevant clusters rather than searching all vectors. ScaNN (Scalable Nearest Neighbors), developed by Google Research, optimizes specifically for inner product search and offers excellent performance for recommendation systems. Product Quantization (PQ) compresses vectors by dividing them into subvectors and quantizing each independently, reducing memory requirements by 10-100x at the cost of some accuracy. Organizations implementing vector search must carefully select indexing techniques based on their specific requirements—whether they prioritize recall accuracy, search speed, memory efficiency, or some combination thereof. The field continues to evolve rapidly, with new algorithms and optimization techniques emerging regularly to address the computational challenges of high-dimensional vector operations.
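A minimal sketch of the IVF idea follows, assuming the cluster centroids are already known. Real systems typically learn them with k-means, and `TinyIVF`, the centroids, and the points are all hypothetical. The `nprobe` parameter, a name borrowed from common IVF implementations, controls how many clusters are scanned per query.

```python
import math


class TinyIVF:
    """Inverted file index: each centroid owns a list of the vectors nearest to it."""

    def __init__(self, centroids):
        self.centroids = centroids
        self.lists = {i: [] for i in range(len(centroids))}

    def _rank_clusters(self, vec):
        return sorted(range(len(self.centroids)),
                      key=lambda i: math.dist(vec, self.centroids[i]))

    def add(self, vec_id, vec):
        self.lists[self._rank_clusters(vec)[0]].append((vec_id, vec))

    def search(self, query, top_k=1, nprobe=1):
        """Scan only the nprobe clusters whose centroids are closest to the query."""
        candidates = [item for c in self._rank_clusters(query)[:nprobe]
                      for item in self.lists[c]]
        candidates.sort(key=lambda item: math.dist(query, item[1]))
        return [vec_id for vec_id, _ in candidates[:top_k]], len(candidates)


index = TinyIVF(centroids=[(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)])
for vec_id, vec in [("a", (1.0, 1.0)), ("b", (-1.0, 0.5)), ("c", (9.0, 1.0)),
                    ("d", (11.0, -1.0)), ("e", (0.5, 9.0)), ("f", (4.9, 0.2))]:
    index.add(vec_id, vec)

query = (5.2, 0.0)
print(index.search(query, nprobe=1))  # scans one cluster
print(index.search(query, nprobe=2))  # scans two clusters
```

With `nprobe=1` the search scans only the cluster around (10, 0) and returns "c", missing the true nearest neighbor "f", which sits just across the boundary in another cluster. Raising `nprobe` to 2 finds it at the cost of scanning more vectors, which is the speed-versus-recall trade-off in miniature.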

The definition and application of vector search continue to evolve as AI systems become more sophisticated and enterprise adoption accelerates. The future direction points toward hybrid search systems that combine vector search with traditional keyword search and advanced filtering capabilities. These hybrid approaches leverage the semantic understanding of vector search while maintaining the precision and familiarity of keyword matching, delivering better results for complex queries. Additionally, multimodal vector search is emerging as a critical capability, enabling systems to search across text, images, audio, and video simultaneously using unified embedding spaces. This development should enable more intuitive and comprehensive search experiences across diverse data types.

For organizations monitoring their presence in AI systems, the evolution of vector search has profound implications. As AI platforms like ChatGPT, Perplexity, Google AI Overviews, and Claude increasingly rely on vector search for content retrieval and ranking, understanding how your brand appears semantically becomes as important as traditional keyword visibility. The shift toward semantic understanding means that brand monitoring and AI citation tracking must evolve beyond simple keyword detection to capture contextual mentions and semantic relationships. Organizations that invest in understanding vector search and its applications will be better positioned to optimize their visibility in generative AI systems. The convergence of vector search technology with AI monitoring platforms represents a fundamental shift in how brands understand and manage their presence in the AI-driven information landscape.

Traditional keyword search looks for exact word matches in documents, while vector search understands semantic meaning and context. Vector search converts text into numerical representations called embeddings, allowing it to find relevant results even when exact keywords don't match. For example, searching for 'wireless headphones' using vector search will also return results for 'Bluetooth earbuds' because they share similar semantic meaning, whereas keyword search would miss this connection.

Vector embeddings are numerical representations of data (text, images, audio) converted into arrays of numbers that capture semantic meaning. They're created using machine learning models like Word2Vec, BERT, or other transformer-based models that learn to map similar concepts close together in high-dimensional space. For instance, the words 'king' and 'queen' would have embeddings positioned near each other because they are semantically related, while 'king' and 'banana' would be far apart.

The three primary distance metrics are cosine similarity (measures angle between vectors), Euclidean distance (measures straight-line distance), and dot product similarity (considers both magnitude and direction). Cosine similarity is most common for NLP applications because it focuses on semantic direction regardless of vector magnitude. The choice of metric should match the one used to train your embedding model for optimal accuracy.

Vector search enables AI monitoring platforms like AmICited to track brand mentions across AI systems (ChatGPT, Perplexity, Google AI Overviews, Claude) by understanding semantic context rather than exact keyword matches. This allows detection of paraphrased mentions, related concepts, and contextual references to your brand, providing comprehensive visibility into how your domain appears in AI-generated responses across multiple platforms.

ANN algorithms like HNSW (Hierarchical Navigable Small World) enable fast similarity searches across millions of vectors by finding approximate rather than exact nearest neighbors. HNSW uses a hierarchical graph structure to reduce search cost from linear to roughly logarithmic, making vector search practical for large-scale applications. It organizes vectors in multi-layered graphs where longer-range connections exist at higher layers for faster traversal.

Enterprises generate massive amounts of unstructured data (emails, documents, support tickets) that traditional keyword search struggles to organize effectively. Vector search enables semantic understanding of this data, powering applications like intelligent search, recommendation systems, anomaly detection, and retrieval-augmented generation (RAG). One market estimate projects the vector database market to grow from $2.65 billion in 2025 to $8.95 billion by 2030, reflecting enterprise adoption; market-size figures vary by source.

Vector databases are specialized systems optimized for storing, indexing, and querying high-dimensional vector data. They implement efficient indexing techniques like HNSW, IVF (Inverted File Index), and ScaNN to enable fast similarity searches at scale. Examples include Milvus, Pinecone, Weaviate, and Zilliz Cloud. These databases handle the computational complexity of vector operations, allowing organizations to build production-ready semantic search and AI applications without managing infrastructure complexity.
