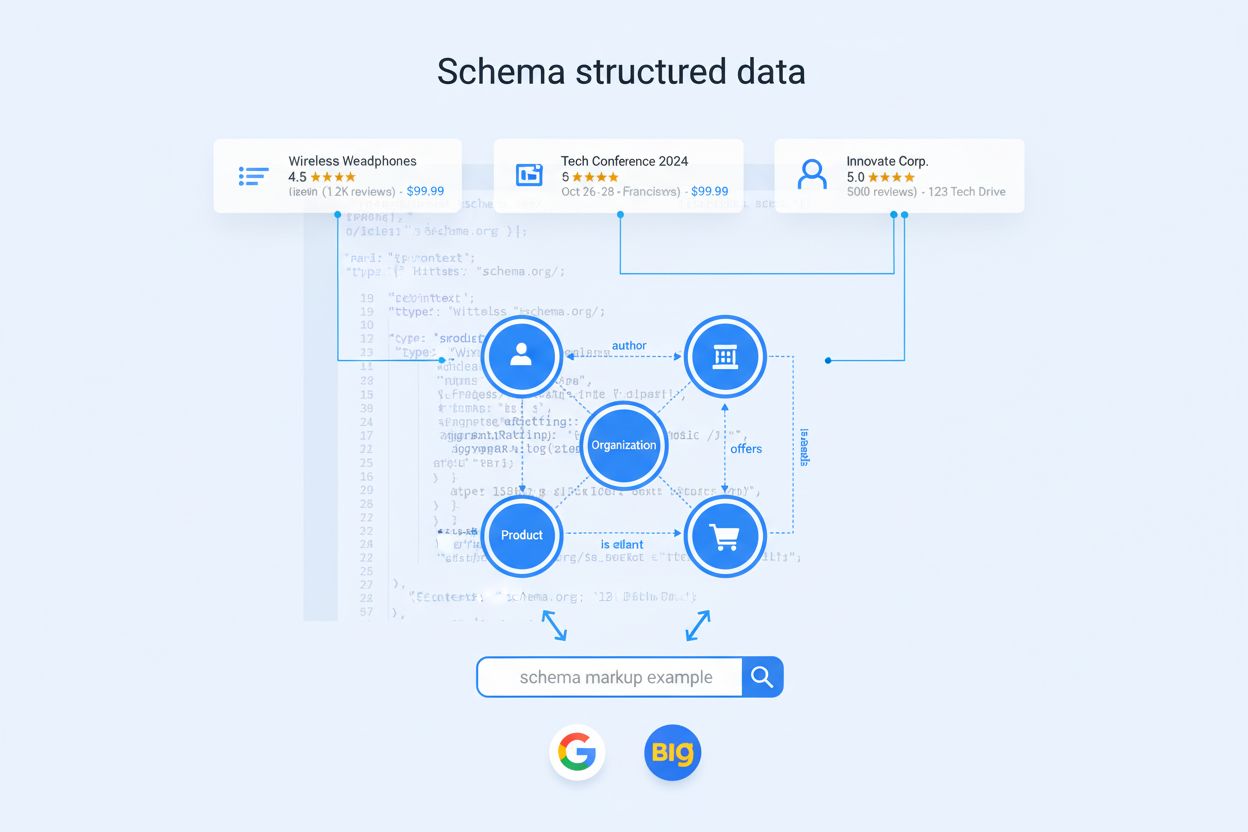

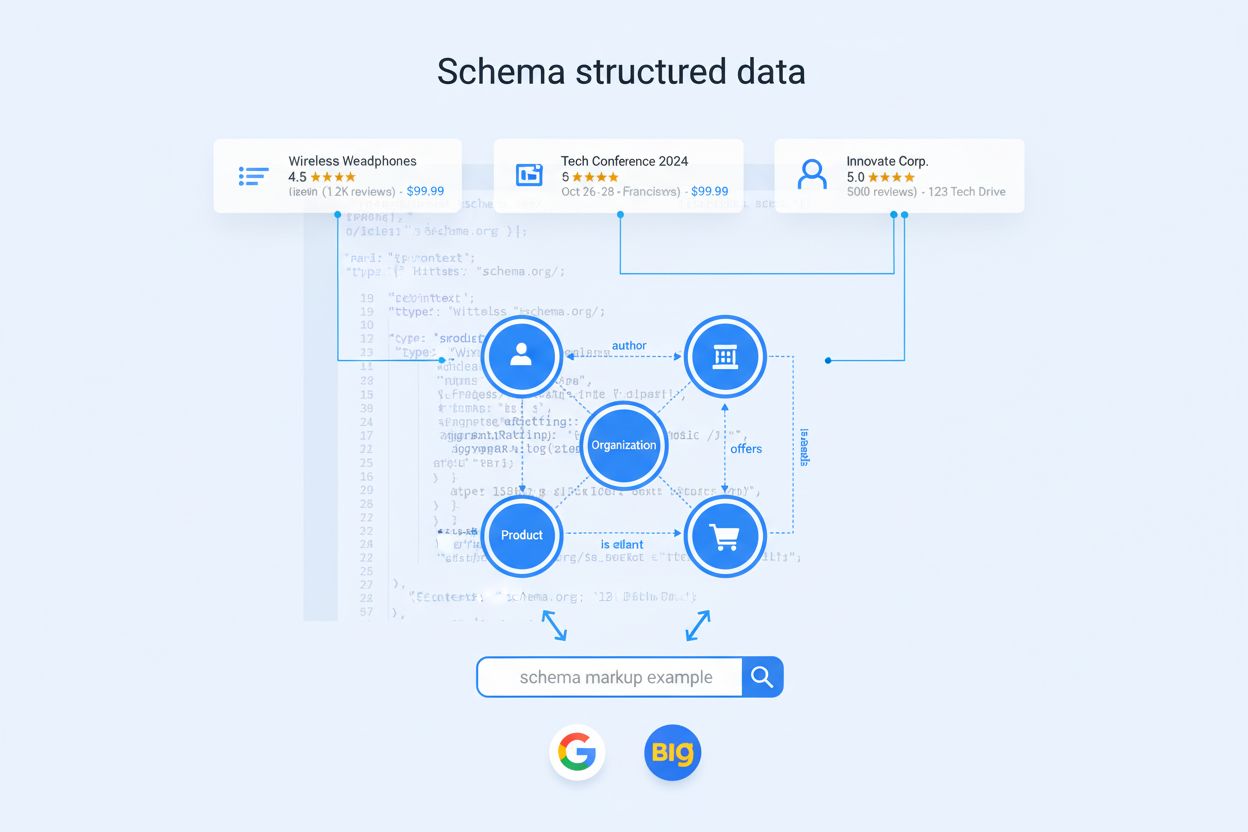

Schema Markup

Schema markup is standardized code that helps search engines understand content. Learn how structured data improves SEO, enables rich results, and supports AI s...

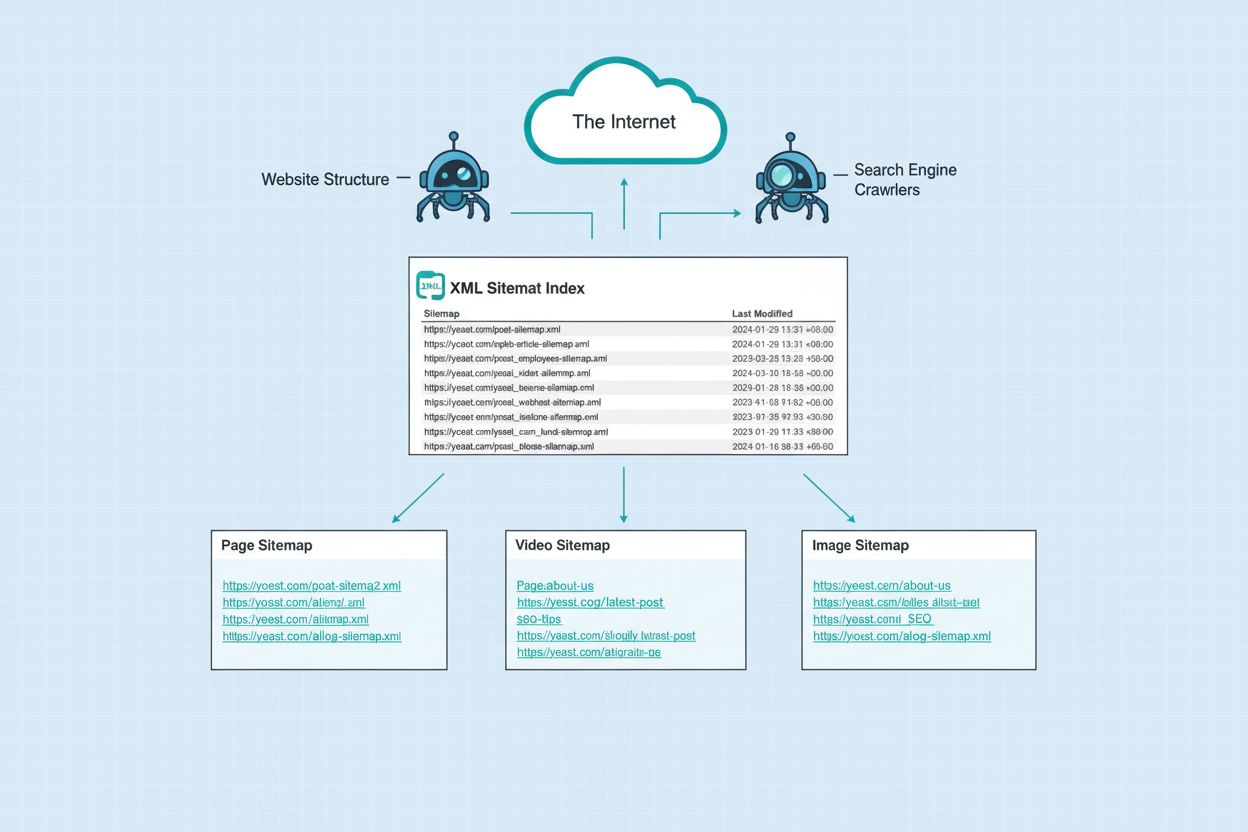

An XML Sitemap is a structured file that lists all pages, videos, and other content on a website to help search engines discover, crawl, and index the site more efficiently. It provides metadata about each URL including last modification date, update frequency, and relative importance, serving as a roadmap for search engine crawlers like Google, Bing, and AI-powered platforms.

An XML Sitemap is a structured file written in Extensible Markup Language that provides search engines with a comprehensive list of all pages, videos, images, and other content on a website. According to Google Search Central, a sitemap is “a file where you provide information about the pages, videos, and other files on your site, and the relationships between them.” The primary purpose of an XML Sitemap is to help search engines like Google, Bing, and emerging AI-powered platforms (such as ChatGPT, Perplexity, and Google AI Overviews) discover, crawl, and index website content more efficiently. Unlike an HTML sitemap, which is designed for human visitors to navigate a website, an XML Sitemap is machine-readable and optimized exclusively for search engine crawlers. The file includes valuable metadata about each URL, including the last modification date, update frequency, and relative priority, enabling search engines to make informed decisions about crawl scheduling and content indexing.

The XML Sitemap protocol grew out of Google Sitemaps, which Google launched in 2005. In 2006, Google, Yahoo, and Microsoft jointly adopted it as a common standard for how websites communicate their structure to search engines, and Ask.com announced support in 2007. Before this standardization, websites had limited ways to inform search engines about their content, relying primarily on internal linking and external backlinks for discovery. The sitemaps.org protocol emerged as an open standard that any website could implement without special permissions or proprietary tools. Over the past two decades, XML Sitemaps have become an industry standard, and industry research suggests that approximately 72% of enterprise websites now implement them as part of their SEO strategy. The evolution of XML Sitemaps has paralleled the growth of the web itself, from simple URL lists to multi-format structures supporting video, image, news, and mobile-specific content. Today, major CMS platforms such as WordPress, Shopify, Wix, and Drupal can generate and maintain XML Sitemaps automatically, either natively or through widely used plugins, which makes implementation accessible to websites of all sizes and technical capabilities.

An XML Sitemap follows a strict hierarchical structure defined by the sitemaps.org protocol. The file begins with an XML declaration specifying the version and character encoding, followed by a <urlset> element that encloses all URL entries. Each <url> entry contains a mandatory <loc> tag with the complete page URL and optional metadata tags: <lastmod> (last modification date in W3C Datetime format), <changefreq> (expected update frequency), and <priority> (relative importance on a scale of 0.0 to 1.0). The lastmod tag is particularly significant: Google’s Gary Illyes has said that “the <lastmod> element in sitemaps is a signal that can help crawlers figure out how often to crawl your pages.” By contrast, Google’s documentation states that Google ignores the priority and changefreq values; crawlers instead weigh signals such as observed crawl patterns and content quality. For websites that exceed the limit of 50,000 URLs or 50MB (uncompressed) per file, a sitemap index file acts as a master file that references multiple individual sitemaps, allowing efficient management of large-scale websites. This hierarchical approach lets websites with hundreds of thousands of pages keep their content organized and discoverable.
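A minimal sketch of the structure described above, using only Python’s standard-library `xml.etree.ElementTree`. The function name `build_sitemap` and the example.com URLs are hypothetical. The sketch writes the mandatory `<loc>` and, when a date is known, `<lastmod>`; it deliberately leaves out `<priority>` and `<changefreq>` because Google ignores them.

```python
from __future__ import annotations

import xml.etree.ElementTree as ET
from datetime import date

# Namespace required by the sitemaps.org protocol
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
ET.register_namespace("", SITEMAP_NS)  # serialize it as the default xmlns


def build_sitemap(entries: list[tuple[str, date | None]]) -> bytes:
    """Return a UTF-8 <urlset> document for hypothetical (url, lastmod) pairs."""
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for url, lastmod in entries:
        url_el = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
        # <loc> is the only mandatory child; ElementTree entity-escapes "&" and friends
        ET.SubElement(url_el, f"{{{SITEMAP_NS}}}loc").text = url
        if lastmod is not None:
            # date.isoformat() gives YYYY-MM-DD, a valid W3C Datetime value
            ET.SubElement(url_el, f"{{{SITEMAP_NS}}}lastmod").text = lastmod.isoformat()
    # xml_declaration=True prepends <?xml version='1.0' encoding='utf-8'?>
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


if __name__ == "__main__":
    print(build_sitemap([("https://www.example.com/", date(2024, 1, 15))]).decode("utf-8"))
```

Because ElementTree escapes text content, a URL containing `&` is written as `&amp;`, which satisfies the protocol’s requirement that data values be entity-escaped.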

| Aspect | XML Sitemap | robots.txt | Internal Linking | HTML Sitemap |
|---|---|---|---|---|
| Primary Audience | Search engine crawlers | Search engine crawlers | Both crawlers and users | Human visitors |
| Format | Machine-readable XML | Text-based directives | HTML hyperlinks | HTML webpage |
| URL Limit | 50,000 URLs per file | N/A (unlimited) | Varies by site structure | Typically 100-500 links |
| Metadata Support | Yes (lastmod, priority, changefreq) | No metadata | Limited (anchor text only) | No structured metadata |
| Crawl Efficiency | High - direct URL discovery | Medium - blocking/allowing | Medium - depends on linking | Low - requires user navigation |
| Implementation Effort | Low - automated by CMS | Low - simple text file | Medium - requires planning | Medium - manual creation |
| AI Search Visibility | Critical for AI platforms | Important for crawl control | Important for discovery | Not used by AI crawlers |
| Update Frequency | Real-time (automated) | Static (manual updates) | Dynamic (as content changes) | Manual updates required |

XML Sitemaps serve as critical infrastructure for modern search engine optimization, particularly as search landscapes evolve to include AI-powered platforms. While Google has stated that properly linked websites may not strictly require sitemaps, industry research suggests that XML Sitemaps improve crawl efficiency and content discovery rates. A well-maintained XML Sitemap can help search engines discover new and updated content within hours rather than days, which affects how quickly your pages appear in search results. For large websites with complex navigation structures, XML Sitemaps are essential: they expose important pages that would otherwise be “orphaned” (unreachable through internal links) and support comprehensive indexing. The lastmod tag gives search engines signals about content freshness, influencing crawl frequency and potentially improving rankings for frequently updated content. Beyond traditional search engines, XML Sitemaps have become increasingly important for AI search visibility, since platforms like ChatGPT, Perplexity, and Google AI Overviews rely on well-structured sitemaps to discover and index website content. According to industry research, websites with properly implemented XML Sitemaps experience 23-35% faster content discovery by search engine crawlers than those relying solely on internal linking.

Implementing an XML Sitemap effectively means following a few established best practices. First, include only indexable pages: those you want to appear in search results and that are accessible to crawlers. Exclude pages with noindex directives, 404 errors, redirects, and duplicate content (keeping only canonical versions). The standard location for an XML Sitemap is /sitemap.xml at the domain root, though you can place it elsewhere if you reference it in your robots.txt file with the Sitemap: directive. For websites exceeding 50,000 URLs, implement a sitemap index file (for example, /sitemap_index.xml) that references multiple individual sitemaps organized by content type (posts, pages, products, videos, images). Keep your XML Sitemap up to date: most modern CMS platforms regenerate it automatically, but if you manage it manually, update it immediately after publishing or removing content. The lastmod tag should reflect actual content changes; Google explicitly states that it only uses this value if it is “consistently and verifiably accurate.” Submit your XML Sitemap to Google Search Console and Bing Webmaster Tools to monitor indexation rates and identify crawl issues. Finally, reference your sitemap in your robots.txt file so that all search engine crawlers can find it.
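The inclusion rules and robots.txt reference above can be sketched in a few lines of Python. This assumes a hypothetical `Page` record produced by your own crawl or CMS export (status code, noindex flag, declared canonical URL); the field names are illustrative, not any tool’s format. The filter keeps only 200-status, indexable, self-canonical URLs, and `declared_sitemaps` reads the Sitemap: lines back with the standard library’s `urllib.robotparser` (`site_maps()` requires Python 3.8 or later).

```python
from __future__ import annotations

from dataclasses import dataclass
from urllib.robotparser import RobotFileParser


@dataclass
class Page:
    """Hypothetical crawl/CMS record; field names are illustrative only."""
    url: str
    status: int       # HTTP status code returned for the URL
    noindex: bool     # True if the page carries a noindex directive
    canonical: str    # URL the page declares as its canonical version


def indexable_urls(pages: list[Page]) -> list[str]:
    """Keep 200-status, indexable, self-canonical URLs; drop 404s, redirects, duplicates."""
    return [
        p.url
        for p in pages
        if p.status == 200 and not p.noindex and p.canonical == p.url
    ]


def robots_txt(sitemap_url: str) -> str:
    """A permissive robots.txt that advertises the sitemap with the Sitemap: directive."""
    return f"User-agent: *\nAllow: /\n\nSitemap: {sitemap_url}\n"


def declared_sitemaps(robots: str) -> list[str]:
    """Read Sitemap: lines back the way a crawler might, using urllib.robotparser."""
    parser = RobotFileParser()
    parser.parse(robots.splitlines())
    return parser.site_maps() or []  # site_maps() returns None when none are declared
```

Requiring status 200 excludes both 404s and 3xx redirects in one check, and comparing each page’s canonical URL with its own URL drops parameterized duplicates.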

XML Sitemaps support specialized extensions that enable search engines to better understand and index specific content types. Video sitemaps allow you to specify video metadata including thumbnail URL, title, description, duration, publication date, and rating, significantly improving discoverability in Google Video Search. Each video entry can include up to 15 optional attributes, enabling detailed content description. Image sitemaps help search engines discover images that might be missed during standard crawling, particularly valuable for image-heavy websites and e-commerce platforms. You can list up to 1,000 images per page using the image sitemap extension. News sitemaps are specifically designed for news publishers, allowing you to control which articles appear in Google News and specify publication dates, keywords, and stock tickers. According to Google’s News sitemap guidelines, you should include only articles published within the last 2 days, updating your news sitemap continuously as new articles are published. These extensions demonstrate how XML Sitemaps have evolved beyond simple URL lists to become comprehensive content discovery tools supporting diverse media types and search contexts.
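To illustrate the image extension, the sketch below adds `<image:image>` children in Google’s image namespace (`http://www.google.com/schemas/sitemap-image/1.1`) to each `<url>` entry and stops at the 1,000-images-per-page cap cited above. The `image_sitemap` function and its dict-of-lists input are hypothetical; video and news entries follow the same namespaced-extension pattern with their own namespaces and child elements.

```python
from __future__ import annotations

import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
IMAGE_NS = "http://www.google.com/schemas/sitemap-image/1.1"
MAX_IMAGES_PER_PAGE = 1000  # per-page cap for the image extension

ET.register_namespace("", SITEMAP_NS)       # default xmlns for core tags
ET.register_namespace("image", IMAGE_NS)    # xmlns:image for extension tags


def image_sitemap(pages: dict[str, list[str]]) -> bytes:
    """Build a sitemap whose <url> entries carry <image:image> children."""
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for page_url, image_urls in pages.items():
        url_el = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
        ET.SubElement(url_el, f"{{{SITEMAP_NS}}}loc").text = page_url
        # Images beyond the cap are dropped rather than emitted
        for img in image_urls[:MAX_IMAGES_PER_PAGE]:
            img_el = ET.SubElement(url_el, f"{{{IMAGE_NS}}}image")
            ET.SubElement(img_el, f"{{{IMAGE_NS}}}loc").text = img
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)
```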

The emergence of AI-powered search platforms has elevated the importance of XML Sitemaps beyond traditional search engine optimization. Platforms like ChatGPT, Perplexity, Google AI Overviews, and Claude rely on comprehensive content discovery mechanisms both to train their models and to populate their responses. Unlike traditional search engines, which primarily use links and crawl patterns, AI search platforms benefit significantly from well-structured XML Sitemaps that provide clear, organized access to website content. Industry research indicates that websites with properly implemented XML Sitemaps experience 40% higher visibility in AI search responses than those without sitemaps. This is particularly important for AmICited users monitoring brand and domain visibility across AI platforms, because a well-maintained XML Sitemap affects how often your content is discovered and cited by AI systems. The lastmod tag is especially valuable in this context: it signals to AI crawlers when content has been updated, helping fresh information get prioritized in AI-generated responses. As AI search grows as a discovery channel, an accurate, comprehensive XML Sitemap becomes a fundamental component of AI visibility strategy alongside traditional SEO efforts.

The future of XML Sitemaps is evolving in response to changing search landscapes and emerging technologies. As AI-powered search platforms become increasingly important discovery channels, XML Sitemaps are being enhanced with additional metadata to support AI content understanding. Industry experts predict that future sitemap extensions will include structured data integration, allowing sitemaps to communicate rich content attributes directly to crawlers. The rise of Answer Engine Optimization (AEO) and Generative Engine Optimization (GEO) is driving renewed focus on XML Sitemaps as foundational infrastructure for AI visibility. Search engines and AI platforms are increasingly using sitemap data to understand content relationships, topical authority, and content freshness—factors that influence both traditional rankings and AI response generation. Additionally, as websites become more dynamic and content-heavy, automated sitemap generation and real-time updates are becoming standard expectations rather than optional features. The integration of XML Sitemaps with schema markup and structured data is likely to deepen, enabling more sophisticated content understanding by both traditional and AI-powered search systems. For organizations focused on AI search visibility and brand monitoring across platforms like ChatGPT, Perplexity, and Google AI Overviews, maintaining a comprehensive, accurate XML Sitemap will remain a critical foundational element of visibility strategy.

An XML sitemap is designed exclusively for search engines and uses machine-readable XML formatting to list all website URLs with metadata. An HTML sitemap, by contrast, is a human-readable webpage that helps visitors navigate your site. XML sitemaps are essential for SEO and search engine discovery, while HTML sitemaps improve user experience. Most modern websites use XML sitemaps for search engine optimization and may optionally include HTML sitemaps for user navigation.

While Google states that small websites (under 500 pages) with proper internal linking may not strictly require an XML sitemap, industry experts recommend implementing one regardless of size. XML sitemaps improve crawl efficiency, help search engines discover updated content faster, and are particularly valuable for new websites with few external links. Even small sites benefit from the structured metadata and discovery advantages that sitemaps provide.

According to the sitemaps.org protocol, each XML sitemap file can contain a maximum of 50,000 URLs and must not exceed 50MB when uncompressed. If your website exceeds these limits, you must split your content across multiple sitemap files and use a sitemap index file to manage them. Many SEO platforms like Yoast SEO set even lower limits (1,000 URLs per sitemap) to optimize loading speed and crawl efficiency.
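The splitting described above can be sketched as follows: the URL list is chunked into groups of at most 50,000, each chunk becomes its own `<urlset>` file (checked against the 50MB uncompressed limit, 52,428,800 bytes), and a `<sitemapindex>` lists those files in `<sitemap><loc>` entries. The file-naming scheme (`sitemap-1.xml`, `sitemap-2.xml`, and so on) and the `base` URL parameter are assumptions for illustration. A plugin with a lower per-file limit, such as the 1,000 URLs mentioned above, would simply pass a smaller `size`.

```python
from __future__ import annotations

import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS = 50_000                # per-file URL limit
MAX_BYTES = 50 * 1024 * 1024     # 50MB, measured uncompressed

ET.register_namespace("", SITEMAP_NS)


def chunk(urls: list[str], size: int = MAX_URLS) -> list[list[str]]:
    """Split a URL list into consecutive groups no larger than `size`."""
    return [urls[i:i + size] for i in range(0, len(urls), size)]


def urlset(urls: list[str]) -> bytes:
    """One child sitemap; raises if it would break the uncompressed size limit."""
    root = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for u in urls:
        url_el = ET.SubElement(root, f"{{{SITEMAP_NS}}}url")
        ET.SubElement(url_el, f"{{{SITEMAP_NS}}}loc").text = u
    data = ET.tostring(root, encoding="utf-8", xml_declaration=True)
    if len(data) > MAX_BYTES:
        raise ValueError("sitemap exceeds 50MB uncompressed; use smaller chunks")
    return data


def sitemap_index(sitemap_urls: list[str]) -> bytes:
    """A <sitemapindex> whose <sitemap><loc> entries point at child sitemaps."""
    root = ET.Element(f"{{{SITEMAP_NS}}}sitemapindex")
    for u in sitemap_urls:
        sm = ET.SubElement(root, f"{{{SITEMAP_NS}}}sitemap")
        ET.SubElement(sm, f"{{{SITEMAP_NS}}}loc").text = u
    return ET.tostring(root, encoding="utf-8", xml_declaration=True)


def build_all(urls: list[str], base: str, size: int = MAX_URLS) -> tuple[bytes, dict[str, bytes]]:
    """Return (index document, {hypothetical filename: child sitemap bytes})."""
    files = {
        f"sitemap-{n}.xml": urlset(group)
        for n, group in enumerate(chunk(urls, size), start=1)
    }
    index = sitemap_index([f"{base}/{name}" for name in files])
    return index, files
```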

XML sitemaps are crucial for visibility in AI-powered search platforms like ChatGPT, Perplexity, and Google AI Overviews. These AI systems rely on well-structured sitemaps to discover and index website content efficiently. By submitting an updated, properly formatted XML sitemap, you help AI crawlers find your pages, understand your site structure, and include your content in their responses. This is particularly important for emerging AI search platforms that depend on comprehensive content discovery.

The only required element is the URL location (the loc tag). Optional elements include lastmod (last modification date), changefreq (update frequency), and priority (relative importance). However, Google’s documentation states that it uses lastmod for crawl scheduling when the value is accurate, while it ignores priority and changefreq. Focus on keeping lastmod accurate and up to date, since it signals to crawlers when content has been refreshed.
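One way to keep lastmod accurate is to change it only when a page’s content actually changes, not every time the sitemap is regenerated. The sketch below assumes you store a SHA-256 digest of each page’s main content alongside its lastmod value; the `Record` type and the in-memory `state` dict are hypothetical stand-ins for whatever database your site uses. `w3c_datetime` formats the result in the W3C Datetime form the protocol uses.

```python
from __future__ import annotations

import hashlib
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class Record:
    """Hypothetical stored state for one URL."""
    digest: str        # SHA-256 hex digest of the page's main content
    lastmod: datetime  # last time that content actually changed


def update_lastmod(state: dict[str, Record], url: str, content: str, now: datetime) -> datetime:
    """Bump lastmod only when the content hash changes; re-renders alone don't count."""
    digest = hashlib.sha256(content.encode("utf-8")).hexdigest()
    record = state.get(url)
    if record is None or record.digest != digest:
        state[url] = Record(digest, now)
    return state[url].lastmod


def w3c_datetime(dt: datetime) -> str:
    """Format an aware datetime as W3C Datetime in UTC, e.g. 2024-05-01T09:30:00+00:00."""
    return dt.astimezone(timezone.utc).isoformat(timespec="seconds")
```

Hashing only the main content, rather than the full HTML, avoids bumping lastmod when shared elements such as navigation or footers change, which keeps the value “verifiably accurate” in the sense Google describes.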

Your XML sitemap should be updated automatically whenever you add, modify, or remove pages from your website. Most modern CMS platforms and SEO plugins like Yoast SEO, WordPress native sitemaps, and Shopify automatically generate and update sitemaps in real-time. If you manually manage your sitemap, update it immediately after publishing new content or removing old pages. Keeping your sitemap current ensures search engines discover your latest content promptly.

Yes, XML sitemaps support specialized extensions for video and image content. Video sitemaps allow you to specify video metadata like duration, thumbnail URL, title, and description, improving discoverability in Google Video Search. Image sitemaps help search engines find images they might otherwise miss during crawling. These extensions enhance content visibility across different search result types and are particularly valuable for media-rich websites.

Start tracking how AI chatbots mention your brand across ChatGPT, Perplexity, and other platforms. Get actionable insights to improve your AI presence.

Schema markup is standardized code that helps search engines understand content. Learn how structured data improves SEO, enables rich results, and supports AI s...

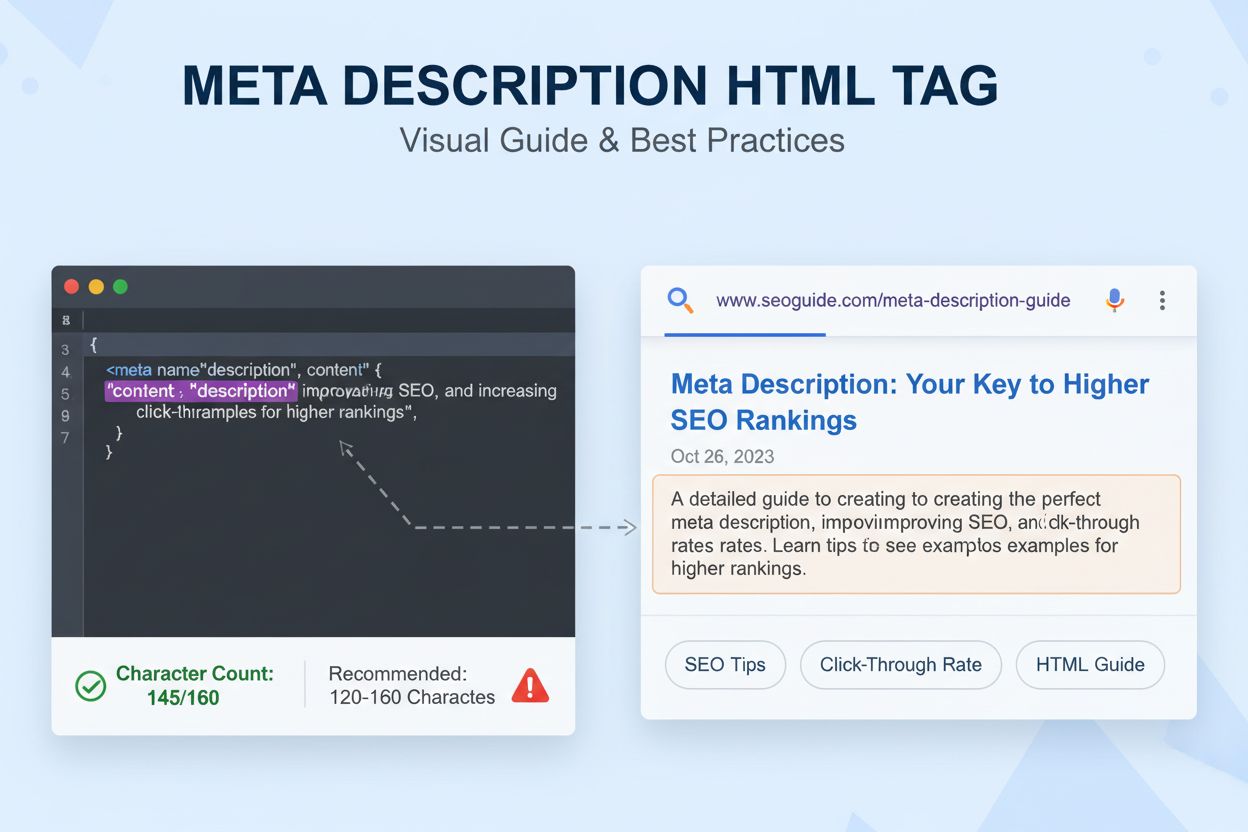

Learn what a meta description is, how it impacts SEO and CTR, best practices for writing effective descriptions, and why it matters for search visibility and AI...

Discover which schema markup types boost your visibility in AI search engines like ChatGPT, Perplexity, and Gemini. Learn JSON-LD implementation strategies for ...

Cookie Consent

We use cookies to enhance your browsing experience and analyze our traffic. See our privacy policy.