How exactly do AI engines crawl and index content? It's not like traditional SEO and I'm confused

Community discussion on how AI engines index content. Real experiences from technical SEOs understanding AI crawler behavior and content processing.

Traditional SEO duplicate content handling is well-understood: canonicals, redirects, parameter handling, etc.

But how do AI systems handle duplicate content? The rules seem different.

What I’ve noticed:

Questions:

Anyone else dealing with this issue?

Great question. AI handles duplicates very differently from Google.

Google approach:

AI approach (varies by system):

| AI System | Duplicate Handling |
|---|---|
| Training-based (e.g., ChatGPT answering without search) | Reflects whatever was in the training data, likely including multiple versions |
| Search-based (Perplexity) | Deduplicates in real time based on the current search results |
| Hybrid (Google AI) | Mix of search-index signals and AI understanding |

The core issue:

AI models trained on web data may have ingested content from both your site AND scraper sites. They don’t inherently know which is original.

What actually matters for AI:

Canonical tags alone won’t solve AI attribution issues.

Technical measures that help AI identify your content as original:

1. Clear authorship signals:

- Author name prominently displayed

- Author markup in schema (e.g., a `Person` in the article's `author` property)

- Link to author profile/bio

- Consistent author name across your content

2. Publication date prominence:

- Clear publish date on page

- `datePublished` in schema

- Updated dates where relevant (`dateModified` in schema)

3. Entity disambiguation:

- Organization schema

- About page with clear entity information

- Consistent NAP (name, address, phone) across the web

4. llms.txt implementation (an emerging proposal; support varies by AI system):

- Explicitly tell AI what your site is about

- Identify your primary content

- Note ownership/attribution

5. Content uniqueness signals:

- Original images with your metadata

- Unique data points not available elsewhere

- First-person perspectives
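To make the schema items in that list concrete, here's a minimal sketch that builds schema.org `Article` JSON-LD carrying authorship (`author` as a `Person`), dates (`datePublished`, `dateModified`), and the publisher `Organization`. The property names are standard schema.org vocabulary; the function names and sample values are hypothetical, and whether a given AI system actually reads JSON-LD isn't something any of us can confirm.

```python
import json


def article_jsonld(headline, url, author_name, author_url, org_name, org_url,
                   date_published, date_modified=None):
    """Build schema.org Article JSON-LD with authorship, date, and publisher signals."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "mainEntityOfPage": url,  # the canonical URL of this page
        "author": {"@type": "Person", "name": author_name, "url": author_url},
        "publisher": {"@type": "Organization", "name": org_name, "url": org_url},
        "datePublished": date_published,  # ISO 8601 date, e.g. "2024-05-01"
    }
    if date_modified:
        data["dateModified"] = date_modified  # only emitted when the page was updated
    return json.dumps(data, indent=2)


def script_tag(jsonld: str) -> str:
    """Wrap the JSON-LD in the <script> element that goes in the page <head>."""
    return f'<script type="application/ld+json">\n{jsonld}\n</script>'


if __name__ == "__main__":
    # Hypothetical example values.
    print(script_tag(article_jsonld(
        "Getting started", "https://yoursite.com/docs/getting-started",
        "Jane Doe", "https://yoursite.com/authors/jane-doe",
        "YourSite", "https://yoursite.com", "2024-05-01", "2024-06-10")))
```

Keeping the same `Person` name and URL on every article is what makes the "author consistent across your content" point machine-checkable.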

The key insight:

Make it OBVIOUS to AI systems that you’re the original source through consistent, clear signals - not just canonical tags they may not respect.

Practical example from our experience:

The problem we had:

Our product documentation was getting cited, but attributed to third-party sites that had republished it (with permission).

What we discovered:

What fixed it:

- Clear ownership signals on original content
- Unique content additions
- Link structure

Result:

After 2 months, AI shifted to citing our original documentation instead of duplicates.

Adding the scraper site angle:

Why scraper sites sometimes get cited instead of you:

What you can do:

Technical measures:

Attribution protection:

Proactive signals:

The frustrating truth:

Once AI has trained on scraper content, you can’t undo that. You can only influence future retrieval by strengthening your authority signals.

Enterprise perspective on duplicate content for AI:

Our challenges:

Our approach:

| Content Type | Strategy |
|---|---|
| Language variants | Hreflang + clear language signals in content |
| Regional variants | Unique local examples, local author signals |
| Partner content | Clear attribution, distinct perspectives |
| UGC | Moderation + unique editorial commentary |

What we found:

AI systems are surprisingly good at understanding content relationships when given clear signals. The key is making relationships EXPLICIT.
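For the "Language variants" row, a minimal sketch of generating the hreflang `<link>` tags. The `rel="alternate"` / `hreflang` / `x-default` markup is Google's documented format; the function name and URLs are hypothetical, and how much AI systems (as opposed to Google Search) use hreflang is an open question.

```python
from html import escape
from typing import Optional


def hreflang_links(variants: dict[str, str], x_default: Optional[str] = None) -> str:
    """Emit <link rel="alternate" hreflang=...> tags for every language/region variant.

    Every variant page should carry the same full set (including a link to itself)
    so the annotations are reciprocal.
    """
    tags = [
        f'<link rel="alternate" hreflang="{escape(lang)}" href="{escape(url)}" />'
        for lang, url in sorted(variants.items())
    ]
    if x_default:
        # Fallback page for users whose language/region matches no variant.
        tags.append(f'<link rel="alternate" hreflang="x-default" href="{escape(x_default)}" />')
    return "\n".join(tags)


if __name__ == "__main__":
    print(hreflang_links(
        {"en-US": "https://yoursite.com/us/page",
         "en-GB": "https://yoursite.com/uk/page",
         "de-DE": "https://yoursite.com/de/page"},
        x_default="https://yoursite.com/page"))
```

Generating the set from one source of truth avoids the classic hreflang bug where page A points to B but B doesn't point back.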

Example:

Instead of just canonical tags, we added:

Making it human-readable helps AI understand relationships too.

AI crawler control options:

Common AI crawler user-agent tokens:

| Crawler token | Company | robots.txt compliance |
|---|---|---|
| GPTBot | OpenAI | Respects robots.txt |
| Google-Extended | Google | robots.txt control token only (Google fetches with its regular crawlers); governs use in Gemini, not Search features such as AI Overviews |
| ClaudeBot (older token: anthropic-ai) | Anthropic | Respects robots.txt |
| CCBot | Common Crawl | Respects robots.txt |
| PerplexityBot | Perplexity | Respects robots.txt |
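Before blocking anything, it helps to check your access logs for which AI crawlers are actually fetching your duplicate URLs. A minimal sketch, assuming you already have the User-Agent strings extracted from your logs; the token list is based on the operators' published crawler names and should be checked against their current docs. Google-Extended is left out on purpose because it never appears in request headers, and any User-Agent can be spoofed, so treat counts as indicative.

```python
from collections import Counter
from typing import Iterable, Optional

# Crawler tokens to look for in the User-Agent header, mapped to their operators.
# Assumption: based on published crawler names; verify against current docs.
AI_CRAWLER_TOKENS = {
    "GPTBot": "OpenAI",
    "ClaudeBot": "Anthropic",
    "CCBot": "Common Crawl",
    "PerplexityBot": "Perplexity",
}


def classify_user_agent(user_agent: str) -> Optional[str]:
    """Return the first known AI crawler token found in a User-Agent string, else None."""
    ua = user_agent.lower()  # case-insensitive substring match
    for token in AI_CRAWLER_TOKENS:
        if token.lower() in ua:
            return token
    return None


def count_ai_hits(user_agents: Iterable[str]) -> Counter:
    """Tally requests per AI crawler token, e.g. from the UA column of an access log."""
    return Counter(t for t in map(classify_user_agent, user_agents) if t is not None)
```

Running `count_ai_hits` over only the requests for `/print/` or `?print=` URLs tells you whether those duplicates are being crawled at all.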

Blocking duplicate content from AI:

```
# Block print versions from AI crawlers
User-agent: GPTBot
Disallow: /print/
Disallow: /*?print=
Disallow: /*&print=

User-agent: Google-Extended
Disallow: /print/
Disallow: /*?print=
Disallow: /*&print=
```
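The print rules rely on `*` wildcards, and Python's standard `urllib.robotparser` only does plain prefix matching, so it can't verify them. Here's a minimal sketch of RFC 9309-style matching (`*` matches any run of characters, a trailing `$` anchors the end) to sanity-check which URLs the rules catch. The rules dict mirrors the print-version rules above; the `/*&print=` entry catches URLs where `print` isn't the first query parameter. It deliberately ignores `Allow` lines and longest-match precedence, so it's a checking aid, not a full parser.

```python
import re

# Disallow rules per user-agent token (hypothetical site paths).
# Keys are lowercase because RFC 9309 user-agent matching is case-insensitive.
AI_DISALLOW = {
    "gptbot": ["/print/", "/*?print=", "/*&print="],
    "google-extended": ["/print/", "/*?print=", "/*&print="],
}


def pattern_to_regex(pattern: str) -> re.Pattern:
    """Translate a robots.txt path pattern: '*' = any characters, trailing '$' = end of URL."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile(body + (r"\Z" if anchored else ""))


def is_blocked(user_agent: str, path_and_query: str) -> bool:
    """True if any Disallow rule for this user agent matches from the start of the path."""
    rules = AI_DISALLOW.get(user_agent.lower(), [])
    return any(pattern_to_regex(rule).match(path_and_query) for rule in rules)


if __name__ == "__main__":
    for path in ["/print/pricing", "/docs/setup?print=1",
                 "/docs/setup?lang=en&print=1", "/docs/setup"]:
        print(path, is_blocked("GPTBot", path))
```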

Considerations:

The llms.txt approach:

Rather than blocking, you can use llms.txt to DIRECT AI to your canonical content:

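```
# YourSite

> Official product documentation. The canonical source for these docs is https://yoursite.com/docs/

## Docs

- [Documentation](https://yoursite.com/docs/): Primary, canonical documentation
```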

This is still an emerging proposal (it's not confirmed that every AI system reads llms.txt), but it's more elegant than blocking.
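If you have many canonical sections, generating the file beats hand-editing it. A minimal sketch that renders the layout proposed at llmstxt.org (H1 site name, blockquote summary, then H2 sections of markdown link lists), served from `/llms.txt` at the site root. The function name, section names, and URLs are hypothetical.

```python
def build_llms_txt(site_name: str, summary: str,
                   sections: dict[str, list[tuple[str, str, str]]]) -> str:
    """Render llms.txt: '# name', '> summary', then '## section' blocks of
    '- [title](url): note' links (the note is optional)."""
    lines = [f"# {site_name}", "", f"> {summary}", ""]
    for heading, links in sections.items():
        lines.append(f"## {heading}")
        lines.append("")
        for title, url, note in links:
            lines.append(f"- [{title}]({url}): {note}" if note else f"- [{title}]({url})")
        lines.append("")
    return "\n".join(lines).rstrip() + "\n"


if __name__ == "__main__":
    print(build_llms_txt(
        "YourSite",
        "Official product documentation. Canonical source: https://yoursite.com/docs/",
        {"Docs": [("Documentation", "https://yoursite.com/docs/",
                   "Primary, canonical documentation")]},
    ))
```

Listing only canonical URLs here (never print or parameter variants) keeps the file consistent with your canonical tags.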

Content strategy angle on duplicate prevention:

The best duplicate content strategy is not having duplicates:

Instead of:

Content uniqueness tactics:

| Tactic | How It Helps |
|---|---|
| Unique data points | Scrapers can copy it, but the data traces back to you |
| First-person experience | Specific to you |
| Expert quotes | Attributed to specific people |
| Original images | With metadata showing ownership |
| Proprietary frameworks | Your unique methodology |

The mindset:

If your content could be copy-pasted without anyone noticing, it’s not differentiated enough. Create content that’s clearly YOURS.
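One way to run that check is to compare your page against suspected copies with word shingling plus Jaccard similarity, a standard near-duplicate detection technique. This is a minimal sketch for your own audit, not a claim about how any AI system deduplicates; the shingle size `k=5` and any threshold you pick (say, flagging anything above 0.5) are judgment calls.

```python
import re


def shingles(text: str, k: int = 5) -> set[tuple[str, ...]]:
    """Lowercase, keep word characters, and return the set of overlapping k-word shingles."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < k:
        # Short texts collapse to a single shingle so they can still be compared.
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard_similarity(a: str, b: str, k: int = 5) -> float:
    """Shared shingles / all shingles: 1.0 = identical wording, 0.0 = no shared runs."""
    sa, sb = shingles(a, k), shingles(b, k)
    if not sa and not sb:
        return 1.0
    return len(sa & sb) / len(sa | sb)
```

A scraper copy that only appends a footer scores high because almost every five-word run is shared; content built on your own data and experience drags the score down even when a competitor covers the same topic.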

This discussion has completely reframed how I think about duplicate content for AI. Summary of my action items:

Technical implementation:

- Strengthen authorship signals
- Clear ownership indicators
- Selective AI crawler control
- Content uniqueness audit

Strategic approach:

Thanks everyone for the insights. This is much more nuanced than traditional duplicate content handling.

Get personalized help from our team. We'll respond within 24 hours.

Monitor which of your content pages get cited by AI platforms. Identify duplicate content issues affecting your AI visibility.

Community discussion on how AI engines index content. Real experiences from technical SEOs understanding AI crawler behavior and content processing.

Learn how to manage and prevent duplicate content when using AI tools. Discover canonical tags, redirects, detection tools, and best practices for maintaining u...

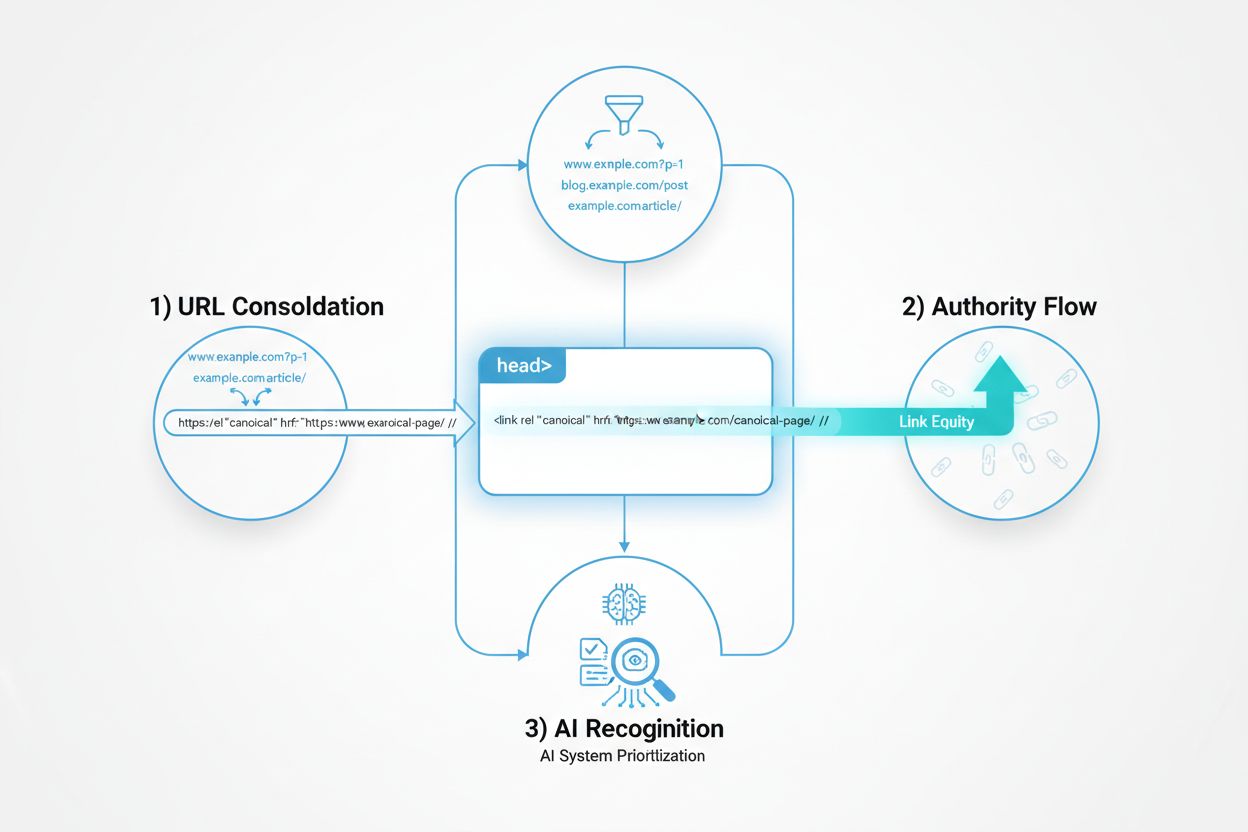

Learn how canonical URLs prevent duplicate content problems in AI search systems. Discover best practices for implementing canonicals to improve AI visibility a...

Cookie Consent

We use cookies to enhance your browsing experience and analyze our traffic. See our privacy policy.