What is an AI Content Audit and Why Does Your Brand Need One?

Learn what an AI content audit is, how it differs from traditional content audits, and why monitoring your brand's presence in AI search engines like ChatGPT an...

Processes for challenging inaccurate or harmful AI-generated brand content. AI Content Disputes refer to formal challenges when artificial intelligence systems generate misleading information about brands, products, or organizations. These disputes arise from AI hallucinations, incorrect citations, and misrepresentations that damage brand reputation across platforms like ChatGPT, Perplexity, and Google AI Overviews. Effective dispute resolution requires monitoring, documentation, direct outreach to AI companies, and strategic content creation to correct misinformation.

An AI Content Dispute refers to a formal challenge or complaint process when artificial intelligence systems generate, cite, or present inaccurate, misleading, or harmful information about a brand, product, or organization. These disputes arise when AI-powered search engines, chatbots, and language models produce content that misrepresents facts, attributes incorrect information, or damages brand reputation through AI hallucinations—instances where AI systems confidently present false information as fact. As AI search becomes increasingly central to how consumers discover and evaluate brands, with platforms like ChatGPT, Perplexity, Google AI Overviews, and Gemini influencing purchasing decisions for millions of users, the ability to challenge and correct inaccurate AI-generated content has become critical for brand protection and reputation management.

The impact of inaccurate AI-generated content extends far beyond traditional brand monitoring concerns. Unlike social media posts that fade from feeds within hours, AI-generated brand mentions can persist across multiple platforms and influence consumer perception for months or years. Nearly 50% of people trust AI recommendations, and with over 700 million weekly ChatGPT users, a single inaccurate statement about your brand can reach tens of millions of potential customers. When AI systems cite incorrect pricing, misattribute product features, or recommend competitors instead of your brand, the consequences directly affect customer acquisition, conversion rates, and market positioning.

| Aspect | Traditional Brand Disputes | AI Content Disputes |
|---|---|---|
| Lifespan | Hours to days (social media) | Months to years (persistent in AI models) |
| Reach | Limited to platform followers | Millions of AI users globally |
| Correction Speed | Immediate reply possible | Requires content strategy and outreach |
| Verification | Human review | AI-generated, harder to fact-check |
| Impact on Search | Affects SEO rankings | Affects AI Share of Voice and recommendations |
| Audience Trust | Variable by platform | High (nearly 50% trust AI recommendations) |
| Permanence | Deletable by poster | Embedded in AI training data |

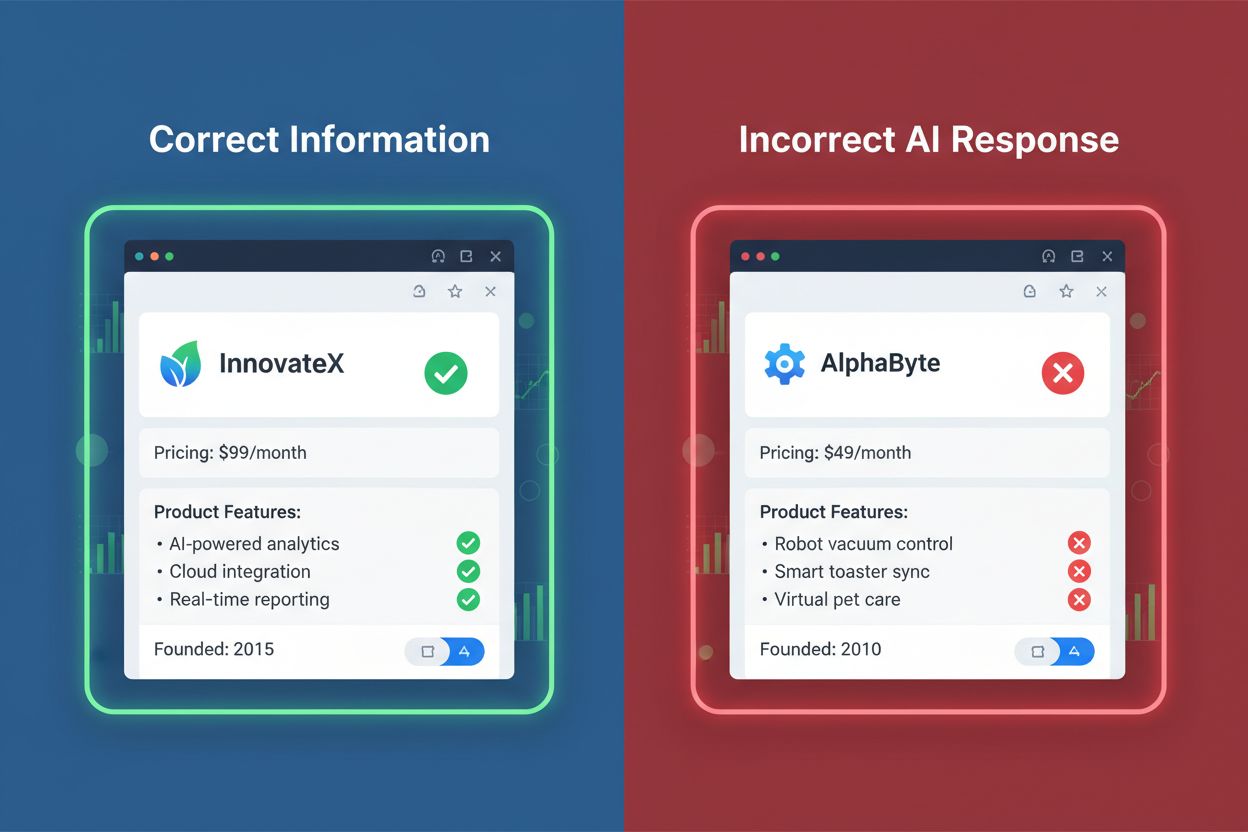

Real-world examples demonstrate the severity: AI systems have cited incorrect pricing for subscription services, listed discontinued products as current offerings, and attributed features to competitors that actually belong to the original brand. These errors directly influence purchasing decisions and can result in lost revenue, damaged customer relationships, and diminished competitive positioning in AI-driven search results.

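AI systems generate several categories of inaccurate or harmful content about brands: factual errors (incorrect pricing, founding dates, or product features), misattributed information, competitor favoritism in recommendations, negative sentiment amplification, missing brand mentions in relevant categories, and harmful associations with unrelated or controversial topics. Understanding these types helps organizations identify and prioritize dispute resolution efforts.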

Understanding why AI systems produce inaccurate brand information is essential for developing effective dispute resolution strategies. AI hallucinations occur when language models generate plausible-sounding but false information, a fundamental characteristic of how these systems work. Rather than retrieving facts from a database, AI models predict the next word based on statistical patterns in training data, sometimes producing confident assertions about information that doesn’t exist or is outdated. Many AI systems use Retrieval Augmented Generation (RAG), which supplements training data with real-time web searches, but this process can amplify errors if source websites contain inaccurate information or if the AI misinterprets the context. Training data limitations mean that information about newer products, recent company changes, or updated pricing may not be reflected in AI responses. Additionally, bias in source selection occurs when AI systems disproportionately weight certain websites—particularly high-authority domains like Wikipedia, Reddit, or industry review sites—which may contain outdated or incomplete information about your brand.

Effective dispute management begins with systematic monitoring across multiple AI platforms. Manual monitoring involves periodically querying AI systems with brand-related questions and reviewing responses for accuracy, but this approach is time-consuming and captures only a fraction of potential mentions. Automated AI monitoring tools provide comprehensive tracking across platforms including ChatGPT, Perplexity, Google AI Overviews, Gemini, Claude, and DeepSeek, analyzing thousands of AI-generated responses to identify inaccuracies. Leading platforms like AmICited.com specialize in tracking how AI systems reference brands, providing sentiment analysis to identify negative portrayals and citation analysis to reveal which sources AI systems rely on when discussing your brand. Competing solutions like Authoritas offer customizable prompt tracking with statistical confidence measures, Profound provides enterprise-scale daily monitoring with detailed response analysis, and Ahrefs Brand Radar tracks AI mentions alongside traditional search visibility. Tools like Otterly and Peec.ai offer more affordable entry-level options for smaller brands beginning to monitor their AI presence. Effective monitoring should track not just brand mentions but also AI Share of Voice—the percentage of AI recommendations your brand receives compared to competitors in relevant categories.
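As a rough illustration of the AI Share of Voice metric described above, the sketch below counts how many collected AI responses mention each brand and expresses each count as a percentage of all brand mentions. The function name `share_of_voice`, the brand names, and the sample responses are all hypothetical, and simple substring matching stands in for whatever mention detection a real monitoring tool uses. Commercial tools may define the metric differently.

```python
from collections import Counter


def share_of_voice(responses, brands):
    """Return each brand's percentage of all brand mentions across responses.

    Assumption: a brand counts at most once per response, and a mention is
    detected by case-insensitive substring matching.
    """
    counts = Counter()
    for text in responses:
        lowered = text.lower()
        for brand in brands:
            if brand.lower() in lowered:
                counts[brand] += 1
    total = sum(counts.values())
    if total == 0:
        # No brand was mentioned anywhere, so every share is zero.
        return {brand: 0.0 for brand in brands}
    return {brand: round(100 * counts[brand] / total, 1) for brand in brands}


# Hypothetical AI responses collected for one product category.
sample_responses = [
    "For small teams, Acme and Globex are both solid choices.",
    "Most reviewers recommend Globex for its pricing.",
    "Acme, Globex, and Initech all offer this feature.",
    "Globex is the most commonly cited option.",
]

sov = share_of_voice(sample_responses, ["Acme", "Globex", "Initech"])
print(sov)
```

Substring matching will miscount brands whose names appear inside other words, so a production version would likely need word-boundary matching or entity recognition.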

Once inaccurate AI content is identified, multiple resolution pathways exist depending on the severity and nature of the dispute. Direct outreach to AI companies involves contacting platforms like OpenAI, Anthropic, Google, and Perplexity with documented evidence of inaccuracies, requesting corrections or retraining of models. Many AI companies have established feedback mechanisms and take brand reputation concerns seriously, particularly when errors affect customer trust or involve factual misstatements. Content creation and optimization addresses the root cause by ensuring your website contains clear, accurate, comprehensive information about your brand, products, pricing, and company history—information that AI systems will cite when generating responses. Creating dedicated comparison pages and FAQ sections that directly address common questions and competitor comparisons increases the likelihood that AI systems will cite your authoritative content rather than third-party sources. Public relations and media outreach can amplify corrections through industry publications and news coverage, influencing the web sources that AI systems rely on. Influencer partnerships and third-party reviews on high-authority platforms like G2, Capterra, or industry-specific review sites provide alternative sources for AI systems to cite, improving brand representation in AI responses. The most effective approach combines multiple strategies: correcting inaccuracies on your website, creating authoritative content that AI systems will cite, and directly engaging with AI companies when disputes involve factual errors that harm brand reputation.
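Direct outreach depends on documented evidence, so it can help to capture each inaccuracy in a consistent structure. The following is a minimal sketch of one way to do that. The `DisputeRecord` class, its fields, the platform name "ExampleAI", and the example values are all hypothetical and do not reflect any AI company's actual feedback format.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class DisputeRecord:
    """One documented instance of inaccurate AI-generated brand content."""
    platform: str        # AI platform where the response was observed
    prompt: str          # exact question that was asked
    ai_response: str     # the inaccurate statement, quoted verbatim
    correct_fact: str    # what the response should have said
    evidence_url: str    # authoritative page that supports the correction
    observed_on: str     # ISO date of the observation
    category: str = "factual error"

    def to_report(self) -> str:
        """Format the record as plain text for a feedback form or email."""
        return "\n".join([
            f"Platform: {self.platform}",
            f"Date observed: {self.observed_on}",
            f"Category: {self.category}",
            f"Prompt: {self.prompt}",
            f"AI response: {self.ai_response}",
            f"Correct information: {self.correct_fact}",
            f"Supporting source: {self.evidence_url}",
        ])


# Hypothetical example: an AI system quoted the wrong subscription price.
record = DisputeRecord(
    platform="ExampleAI",
    prompt="How much does Acme Pro cost?",
    ai_response="Acme Pro costs $99 per month.",
    correct_fact="Acme Pro costs $49 per month.",
    evidence_url="https://example.com/pricing",
    observed_on="2025-01-15",
)

report = record.to_report()                        # text for the outreach message
archived = json.dumps(asdict(record), indent=2)    # JSON copy for internal tracking
print(report)
```

Keeping the prompt and the response verbatim makes the issue reproducible for whoever reviews the report, and the JSON copy gives a record of when each inaccuracy was first seen.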

A growing ecosystem of specialized tools helps brands monitor, analyze, and manage their presence in AI-generated content. AmICited.com leads the market for AI brand monitoring, tracking mentions across major AI platforms with detailed sentiment analysis and citation tracking to identify which sources influence AI responses about your brand. Authoritas combines AI search monitoring with traditional SEO tracking, offering customizable prompt generation and statistical confidence measures for enterprise-scale analysis. Profound provides daily monitoring with comprehensive response analysis and conversation exploration features to understand what questions trigger brand mentions. Ahrefs Brand Radar integrates AI mention tracking with search visibility metrics, showing how your brand appears across six major AI indexes alongside traditional search performance. Otterly.ai offers affordable weekly monitoring with automatic reporting, ideal for brands beginning their AI visibility journey. Peec.ai provides mid-tier solutions with clean dashboards and competitor benchmarking. These platforms share common capabilities including mention frequency tracking, sentiment analysis, competitor comparison, and citation source identification, but differ in update frequency (real-time to weekly), platform coverage, customization options, and pricing models. Selecting the right tool depends on your organization’s size, budget, and the depth of AI search analysis required.

As AI content disputes become more common, legal and regulatory frameworks are evolving to address brand protection and content accuracy. The Federal Trade Commission (FTC) has taken enforcement action against companies making deceptive claims about AI capabilities, including cases where AI content detection tools were advertised with inflated accuracy claims. Copyright and trademark concerns arise when AI systems misattribute intellectual property or generate content that violates brand rights. Emerging regulations in the EU and UK, along with proposed U.S. legislation, increasingly require AI systems to disclose training data sources and provide mechanisms for correcting inaccurate information. Liability remains unsettled: it is not yet clear whether responsibility for inaccurate AI content falls on AI developers, the companies whose content was used in training, or the brands themselves. Forward-thinking organizations should monitor regulatory developments and ensure their dispute resolution processes align with emerging legal standards around AI transparency and accuracy.

Proactive brand management in the AI era requires a multi-layered approach combining prevention, monitoring, and rapid response. Maintain accurate website information across all pages, ensuring that pricing, product descriptions, company history, and key facts are current and clearly presented—this becomes the authoritative source AI systems cite. Create comprehensive brand documentation including detailed FAQ pages, comparison pages versus competitors, and “about us” content that directly addresses questions AI systems are likely to encounter. Monitor regularly using specialized AI monitoring tools to catch inaccuracies early before they spread across multiple AI platforms. Respond quickly when disputes are identified, using both direct outreach to AI companies and content creation to correct misinformation. Build brand authority through third-party mentions on high-authority sites, industry reviews, and media coverage that AI systems will prioritize as sources. Optimize for AI search by creating content that answers the specific questions users ask AI systems, ensuring your brand appears in relevant responses. Organizations that combine these practices—accurate information, comprehensive documentation, active monitoring, rapid response, and authority building—significantly reduce the risk and impact of AI content disputes while improving their overall visibility in AI-driven search results.
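Catching inaccuracies early can be partly automated by comparing collected AI responses against a canonical fact sheet. The sketch below checks only one kind of fact, a product's price, and only in a simple dollar format. The function `find_price_mismatches`, the product name, and the prices are hypothetical, and the regular expression does not handle thousands separators or other currencies.

```python
import re

# Matches simple dollar amounts such as $49 or $49.99.
# Assumption: no thousands separators and US-dollar prices only.
PRICE_PATTERN = re.compile(r"\$(\d+(?:\.\d{1,2})?)")


def find_price_mismatches(response, product, expected_price):
    """Return dollar amounts in a response that differ from the expected price.

    Responses that do not mention the product are skipped, because a price
    in them probably refers to something else.
    """
    if product.lower() not in response.lower():
        return []
    found = [float(amount) for amount in PRICE_PATTERN.findall(response)]
    return [price for price in found if price != expected_price]


# Hypothetical responses checked against a known price of $49.
responses = [
    "Acme Pro costs $99 per month.",
    "Acme Pro is priced at $49 per month.",
    "Globex charges $99 per month.",
]

for text in responses:
    mismatches = find_price_mismatches(text, "Acme Pro", 49.0)
    if mismatches:
        print(f"Possible pricing error: {text!r} mentions {mismatches}")
```

A flagged response still needs human review, since a different amount may be legitimate, such as an annual price or a competitor's price quoted in the same sentence.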

An AI content dispute occurs when artificial intelligence systems generate, cite, or present inaccurate, misleading, or harmful information about a brand, product, or organization. These disputes arise from AI hallucinations—instances where AI systems confidently present false information as fact—and can significantly damage brand reputation across platforms like ChatGPT, Perplexity, and Google AI Overviews.

Unlike social media posts that fade within hours, AI-generated brand mentions persist for months or years and reach millions of users. Nearly 50% of people trust AI recommendations, and with over 700 million weekly ChatGPT users, a single inaccurate statement can influence tens of millions of potential customers and directly affect purchasing decisions.

Use specialized AI monitoring tools like AmICited.com, Authoritas, or Profound to track how AI systems reference your brand across ChatGPT, Perplexity, Google AI Overviews, and Gemini. These tools provide sentiment analysis, citation tracking, and competitor benchmarking to identify inaccuracies, negative portrayals, and missing mentions.

Common disputes include factual errors (incorrect pricing, founding dates, product features), misattributed information, competitor favoritism in recommendations, negative sentiment amplification, missing brand mentions in relevant categories, and harmful associations with unrelated or controversial topics.

Effective resolution combines multiple strategies: direct outreach to AI companies with documented evidence, creating accurate and comprehensive content on your website, developing comparison pages and FAQ sections, PR and media outreach to influence source materials, and building brand authority through third-party reviews and mentions on high-authority platforms.

AI systems produce inaccurate content due to hallucinations (generating plausible-sounding false information), training data limitations (outdated information), Retrieval Augmented Generation errors (misinterpreting web sources), and bias in source selection (overweighting certain websites while ignoring others).

Leading platforms include AmICited.com (specialized AI brand monitoring), Authoritas (customizable prompt tracking with statistical confidence), Profound (enterprise-scale daily monitoring), Ahrefs Brand Radar (AI mentions with search visibility), Otterly (affordable weekly monitoring), and Peec.ai (mid-tier solutions with competitor benchmarking).

Resolution timelines vary significantly. Direct corrections on your website can influence AI responses within weeks as models update. Direct outreach to AI companies may take 1-3 months. Building authority through third-party content and PR efforts typically requires 2-6 months to show measurable impact on AI recommendations.
