Content Relevance Scoring

Learn how content relevance scoring uses AI algorithms to measure how well content matches user queries and intent. Understand BM25, TF-IDF, and how search engi...

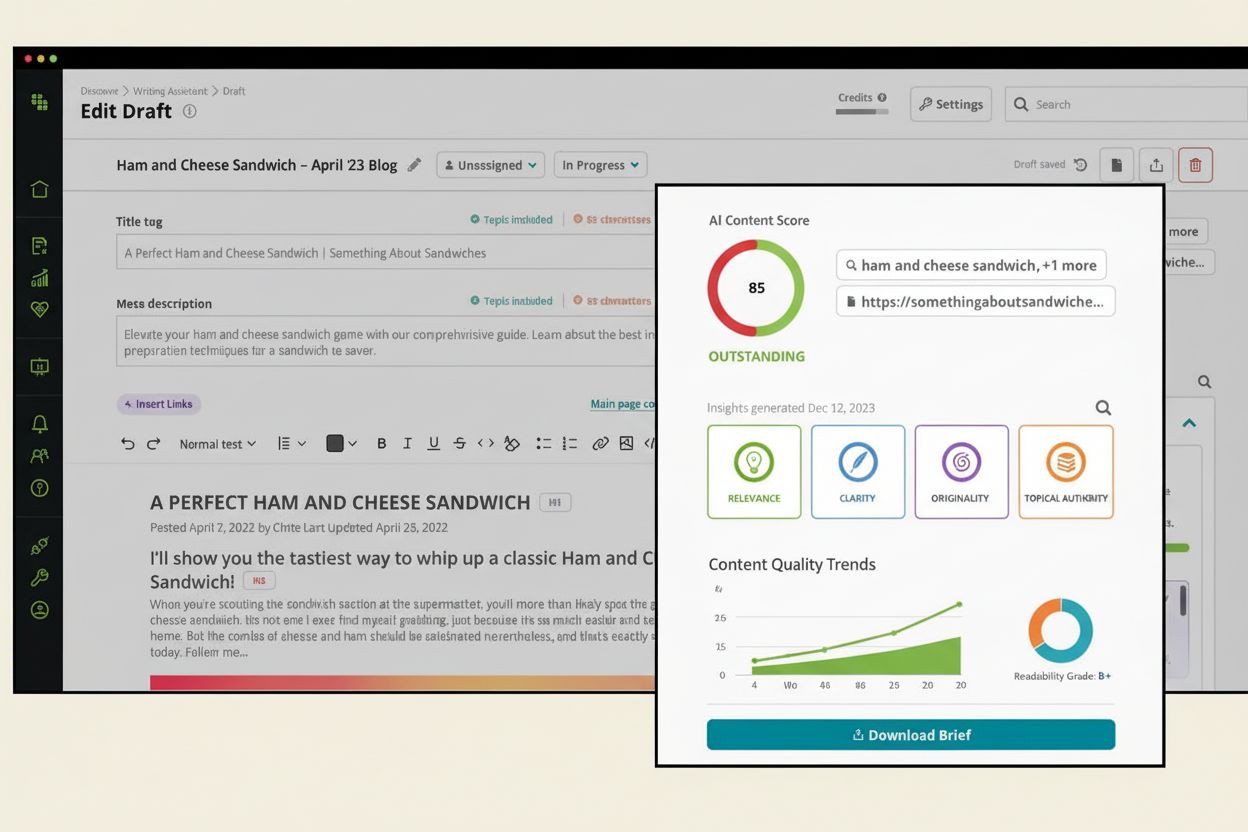

An AI Content Score is a quantitative metric that evaluates the overall quality, relevance, and optimization potential of content for AI systems and search engines. It synthesizes multiple factors including clarity, topical authority, originality, and user intent alignment into a single numerical rating, typically on a 0-100 scale, to assess content suitability for AI visibility and performance.

In more detail, an AI Content Score synthesizes dozens of individual data points (including clarity, topical authority, originality, semantic richness, and user intent alignment) into a single numerical rating, typically presented on a 0-100 scale. Its primary purpose is to provide a data-driven benchmark that helps content creators, marketers, and SEO professionals understand how well their content is positioned to be discovered, understood, and cited by AI systems like ChatGPT, Perplexity, Google AI Overviews, and Claude. Unlike traditional SEO metrics that focus on keyword density and backlink profiles, AI Content Scores measure whether content genuinely serves user needs, demonstrates expertise, and provides the kind of authoritative information that AI systems prioritize when generating responses. This reflects a change in how content is evaluated in the age of generative AI, where being cited as a trusted source matters as much as traditional search rankings.

The concept of measuring content quality has evolved dramatically over the past decade. In the early days of SEO, content evaluation was relatively straightforward—marketers focused on keyword density, meta tags, and backlink counts. However, as search engines became more sophisticated, particularly with the introduction of natural language processing (NLP) and machine learning algorithms like Google’s BERT and MUM, the definition of “quality content” expanded significantly. The rise of generative AI and answer engines has accelerated this evolution further. According to industry research, over 78% of enterprises now use AI-driven content monitoring tools to track how their content performs across multiple platforms. This shift has created a critical need for new measurement systems that can evaluate content through the lens of AI systems rather than traditional search algorithms. AI Content Scores emerged as a response to this need, providing a framework that accounts for how AI models actually assess and utilize content. The development of these scoring systems represents a maturation of the content optimization industry, moving from simple keyword metrics to sophisticated, multi-dimensional quality assessments that reflect how modern AI systems evaluate information credibility and relevance.

An effective AI Content Score evaluates content across five interconnected dimensions, each contributing to the overall assessment of AI-readiness. Structural Optimization measures how well content is organized for both human readability and machine parsing, including heading hierarchy, paragraph length, use of lists, and overall readability scores. Semantic Richness assesses the depth and comprehensiveness of content meaning, evaluating entity density, topical coverage, internal linking patterns, and external citations that establish context and authority. AI Interpretability focuses on how explicitly content communicates its meaning to machines through structured data like JSON-LD schema markup, ensuring that AI systems can accurately understand the page’s purpose and content. Conversational Relevance measures alignment with how users actually query AI systems, evaluating whether content is structured as questions and answers, covers related topics, and addresses the full user journey. Finally, Generative Engagement Rate represents the performance-based component, tracking actual visibility in AI answers, citation frequency, sentiment context, and click-through rates from AI-generated responses. Each component is typically scored on a 0-5 scale, then weighted according to strategic priorities before being normalized to a final 0-100 score. This multi-dimensional approach ensures that AI Content Scores capture the full complexity of what makes content valuable to AI systems, rather than reducing quality to a single metric.
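
The weighting and normalization step described above can be sketched as follows. This is a minimal illustration, not a standard formula: the weight values and the names `WEIGHTS` and `ai_content_score` are hypothetical, since the text only says that weights follow each organization's strategic priorities.

```python
# Hypothetical weights for the five components; the article does not
# prescribe values, so these are placeholders chosen for illustration.
WEIGHTS = {
    "structural_optimization": 0.20,
    "semantic_richness": 0.25,
    "ai_interpretability": 0.15,
    "conversational_relevance": 0.20,
    "generative_engagement_rate": 0.20,
}


def ai_content_score(component_scores, weights=WEIGHTS):
    """Combine 0-5 component scores into a single 0-100 score."""
    if set(component_scores) != set(weights):
        raise ValueError("component_scores must cover exactly the weighted components")
    for name, value in component_scores.items():
        if not 0 <= value <= 5:
            raise ValueError(f"{name} must be on the 0-5 scale, got {value}")

    # Weighted mean on the 0-5 scale (dividing by the weight total means
    # the weights do not have to sum to exactly 1).
    total_weight = sum(weights.values())
    weighted_mean = sum(component_scores[n] * weights[n] for n in weights) / total_weight

    # Normalize from the 0-5 scale to the 0-100 scale.
    return round(weighted_mean / 5 * 100, 1)


example = {
    "structural_optimization": 4,
    "semantic_richness": 3,
    "ai_interpretability": 5,
    "conversational_relevance": 4,
    "generative_engagement_rate": 2,
}
print(ai_content_score(example))
```

With these assumed weights, the example components have a weighted mean of 3.5 out of 5, which normalizes to a score of 70.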

| Scoring Dimension | Traditional SEO Score | AI Content Score | GEO Content Score |
|---|---|---|---|
| Primary Focus | Keyword optimization, backlinks, technical factors | User intent alignment, topical authority, clarity | AI visibility, entity density, conversational relevance |
| Evaluation Method | Keyword density analysis, link profile assessment | NLP analysis, semantic understanding, E-E-A-T signals | Multi-engine sampling, decision compression analysis |
| Key Metrics | Keyword frequency, domain authority, page speed | Originality, expertise, content depth, structure | Structural optimization, semantic richness, engagement rate |
| Scoring Scale | Typically 0-100 or 0-10 | 0-100 (normalized) | 0-100 (weighted components) |
| Target Audience | Google, Bing, traditional search engines | ChatGPT, Perplexity, Claude, AI answer engines | Multiple AI platforms simultaneously |
| Update Frequency | Monthly to quarterly | Real-time or weekly | 30-day rolling assessment |
| Correlation with Rankings | Direct impact on SERP position | Indirect impact via citation selection | Predictive of AI answer inclusion |
| Implementation Complexity | Moderate; established best practices | High; requires NLP and ML expertise | Very high; requires multi-platform data |

AI Content Scores are calculated through a sophisticated process that begins with content crawling and ingestion, where AI tools break down written content into analyzable units. The system then performs feature extraction, identifying dozens of signals including keyword density, semantic relevance, sentence structure, grammar quality, and sentiment. This is followed by comparative analysis, where the content’s features are benchmarked against established high-performing content within the industry or topic area. A predefined scoring model—often a complex machine learning algorithm—then weights different features based on their impact on content quality and performance. For example, how thoroughly a topic is explored typically carries more weight than minor grammatical errors. The model calculates an overall score and provides detailed feedback on specific improvement areas. What distinguishes modern AI Content Scoring from older approaches is that it moves beyond purely technical SEO factors to evaluate qualitative aspects like intent alignment and audience connection. According to research from leading content optimization platforms, the most effective AI Content Scores are based on real-time search data rather than static benchmarks, ensuring that scores remain accurate as user behavior and AI algorithms evolve. This data-driven approach means that a piece of content scoring 87 on an AI Content Score isn’t just a number—it represents specific, actionable insights about why that content is well-positioned for AI visibility and what changes could improve it further.
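
The feature extraction and comparative analysis steps can be illustrated with a deliberately small sketch. The three features and the function names `extract_features` and `compare_to_benchmark` are assumptions made for this example; real scoring tools extract far more signals and typically rely on trained models rather than simple ratios.

```python
import re
from statistics import mean


def extract_features(text):
    """Compute a few simple, illustrative features from raw text."""
    sentences = [s for s in re.split(r"[.!?]+\s*", text) if s.strip()]
    words = re.findall(r"[A-Za-z0-9']+", text)
    return {
        "word_count": len(words),
        "avg_sentence_length": len(words) / len(sentences) if sentences else 0.0,
        # Share of distinct words, a rough proxy for varied vocabulary.
        "lexical_diversity": len({w.lower() for w in words}) / len(words) if words else 0.0,
    }


def compare_to_benchmark(features, benchmark_features):
    """Express each feature as a ratio to the mean of the benchmark set.

    benchmark_features is a list of feature dicts extracted from content
    already known to perform well. A ratio of 1.0 means "on par".
    """
    report = {}
    for name, value in features.items():
        baseline = mean(b[name] for b in benchmark_features)
        report[name] = value / baseline if baseline else None
    return report


draft = extract_features("Cats sleep. Dogs bark loudly.")
benchmark = [{"word_count": 10, "avg_sentence_length": 5.0, "lexical_diversity": 0.5}]
print(compare_to_benchmark(draft, benchmark))
```

A scoring model would then weight these ratios. The text's example of topical depth counting for more than minor grammatical errors corresponds to giving depth-related features larger weights.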

Experience, Expertise, Authoritativeness, and Trustworthiness (E-E-A-T) have become central to how both traditional search engines and AI systems evaluate content quality. AI Content Scores increasingly incorporate E-E-A-T signals as core components of their evaluation framework. Experience is demonstrated through first-hand knowledge, personal case studies, and original research that shows the author has direct involvement with the topic. Expertise is established through author credentials, professional certifications, and demonstrated subject matter knowledge throughout the content. Authoritativeness comes from being recognized as a leader in the field, supported by citations from other authoritative sources and consistent publication of high-quality content. Trustworthiness is perhaps the most critical factor, encompassing accuracy, transparency about sources, clear author attribution, and adherence to ethical standards. AI systems, particularly those used for high-stakes queries in health, finance, or legal domains, heavily weight E-E-A-T signals when deciding which sources to cite. An AI Content Score that incorporates strong E-E-A-T indicators signals to AI systems that the content is reliable and worthy of citation. This is why content from established brands, industry experts, and authoritative publications tends to score higher and receive more visibility in AI-generated responses. For organizations building content strategies around AI Content Scores, investing in author credibility, source verification, and transparent expertise demonstration becomes as important as traditional content optimization tactics.

Implementing an AI Content Score system requires a structured approach that begins with defining clear scoring criteria aligned with your business objectives. The first step is to establish baseline metrics by selecting a representative sample of your content and scoring it using your chosen methodology or platform. This reveals the current state of your content library and identifies patterns in what’s performing well versus what needs improvement. Next, set target thresholds for different content types—for example, critical business communications might require a minimum score of 4.5 out of 5 on each component, while regular blog posts might target 4.0. The actual scoring process involves collecting data on each of the five core components: running your content through readability analyzers and structure checkers for Structural Optimization, using NLP tools to assess Semantic Richness, validating schema markup for AI Interpretability, analyzing query alignment for Conversational Relevance, and tracking actual AI visibility for Generative Engagement Rate. This data is then fed into a calculation engine—often a spreadsheet or business intelligence tool—that applies your predetermined weights and generates final scores. For enterprise organizations, this process is typically automated through a pipeline where crawlers gather on-page data, AI answer trackers monitor performance, and a BI platform runs calculations and generates dashboards. The most important aspect of implementation is consistency—establishing clear rubrics and applying them uniformly across your content library ensures that scores are comparable and actionable. Many organizations find that starting with a smaller pilot program on high-value content pages allows them to refine their scoring methodology before scaling to their entire content library.
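
The per-component thresholds mentioned above can be checked with a few lines of code. This is a sketch only: the 4.5 and 4.0 targets come from the example in the text, while the content-type keys and the function name `components_below_target` are hypothetical.

```python
# Minimum per-component score (0-5 scale) by content type, taken from the
# example thresholds in the text. The key names are illustrative.
TARGETS = {
    "critical_communication": 4.5,
    "blog_post": 4.0,
}


def components_below_target(component_scores, content_type, targets=TARGETS):
    """Return the components that miss the minimum for this content type."""
    minimum = targets[content_type]
    return {
        name: score
        for name, score in component_scores.items()
        if score < minimum
    }


page = {
    "structural_optimization": 4.6,
    "semantic_richness": 4.2,
    "ai_interpretability": 4.8,
    "conversational_relevance": 4.5,
    "generative_engagement_rate": 4.7,
}
print(components_below_target(page, "blog_post"))
print(components_below_target(page, "critical_communication"))
```

The same page can pass as a blog post and fail as a critical communication, which is why targets are set per content type rather than globally.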

Several critical factors significantly influence AI Content Scores, and understanding these can help guide optimization efforts. Topical Depth and Comprehensiveness is perhaps the most important factor—AI systems reward content that thoroughly explores a topic, answers related questions, and provides context through subtopics and related entities. Content that only scratches the surface of a topic, no matter how well-written, will score lower than content that provides authoritative, in-depth coverage. Clarity and Readability directly impact scores because AI systems need to understand content clearly to evaluate its quality and relevance. Content with short paragraphs, clear headings, logical flow, and accessible language scores higher than dense, jargon-heavy content. Originality and Unique Insights are heavily weighted because AI systems are trained to identify and reward content that adds new information or perspectives rather than simply rehashing existing content. Structural Elements like proper heading hierarchy, schema markup, and internal linking help AI systems understand content organization and context. User Intent Alignment measures whether content directly answers what users are actually asking, which is critical because AI systems increasingly prioritize content that matches conversational queries. Author Credibility and E-E-A-T Signals influence scores because AI systems need confidence that the source is trustworthy and knowledgeable. Finally, Freshness and Currency matter for time-sensitive topics—content that’s been recently updated or addresses current events scores higher than outdated content. Organizations that focus on optimizing these factors systematically see the most significant improvements in their AI Content Scores and corresponding increases in AI visibility.
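
One of the structural elements above, heading hierarchy, is easy to check automatically. The following sketch assumes the content is Markdown with `#`-style headings; the function name `skipped_heading_levels` is hypothetical, and the check ignores edge cases such as `#` characters inside fenced code blocks.

```python
import re

# Matches ATX-style Markdown headings such as "## Title".
HEADING = re.compile(r"^(#{1,6})\s+\S")


def skipped_heading_levels(markdown_text):
    """Find headings that jump more than one level deeper than the previous one.

    Returns a list of (line_number, previous_level, level) tuples.
    """
    problems = []
    previous_level = 0
    for line_number, line in enumerate(markdown_text.splitlines(), start=1):
        match = HEADING.match(line)
        if not match:
            continue
        level = len(match.group(1))
        # Going from level 2 straight to level 4 skips level 3.
        if previous_level and level > previous_level + 1:
            problems.append((line_number, previous_level, level))
        previous_level = level
    return problems


document = "# Guide\n## Setup\n#### Details\n## Usage"
print(skipped_heading_levels(document))
```

Here the third line jumps from a level-2 heading to a level-4 heading, so it is reported as a skipped level.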

Achieving and maintaining high AI Content Scores requires a strategic, ongoing approach to content optimization. Expand Topical Coverage by going beyond surface-level information to address related subtopics, answer common questions, and provide thorough explanations that establish your content as an authoritative resource. Improve Clarity and Conciseness by simplifying complex sentences, explaining jargon, breaking lengthy paragraphs into shorter ones, and using active voice throughout. Strengthen Structure and Flow through clear, descriptive headings that guide readers, bulleted or numbered lists that present information digestibly, and logical progression that helps both humans and AI systems understand your content. Leverage Diverse Language by avoiding repetitive phrasing, varying vocabulary and sentence structures, and using rich, natural language that avoids keyword stuffing. Optimize for User Intent by ensuring your content directly answers the questions your audience is asking and addressing the full customer journey from awareness to decision. Implement Structured Data through valid, specific schema markup that helps AI systems understand your content’s purpose and context. Build Internal Linking Strategies that connect related content and establish topical clusters, helping AI systems understand your expertise across multiple related topics. Enhance Author Credibility by including detailed author bios, credentials, and links to authoritative profiles. Monitor and Iterate by regularly rescoring content, tracking performance in AI systems, and making data-driven adjustments based on what’s working. These practices work together to create a comprehensive optimization strategy that improves AI Content Scores while simultaneously improving content quality for human readers.
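
As an illustration of the structured data practice, the sketch below builds a small JSON-LD block using the schema.org `Article` type. The helper names `article_jsonld` and `to_script_tag` and all sample values are placeholders invented for this example; which properties a page actually needs depends on its content type.

```python
import json


def article_jsonld(headline, author_name, date_published, date_modified=None):
    """Build a minimal schema.org Article description as a dict."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
        "datePublished": date_published,
    }
    if date_modified:
        data["dateModified"] = date_modified
    return data


def to_script_tag(data):
    """Serialize the dict into a JSON-LD script tag for the page head."""
    # Escape "</" so a string value cannot close the script element early.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


# Placeholder values for illustration only.
markup = article_jsonld(
    headline="What Is an AI Content Score?",
    author_name="Jane Doe",
    date_published="2024-01-15",
    date_modified="2024-06-01",
)
print(to_script_tag(markup))
```

Generating the markup from the same data that drives the page helps keep the structured data consistent with the visible content.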

As AI systems continue to evolve, AI Content Scoring methodologies will become increasingly sophisticated and nuanced. The next generation of AI Content Scores will likely incorporate real-time sentiment analysis to distinguish between positive and negative mentions of your content in AI responses, providing more granular insights into how your content is being used. Multi-language and multi-format evaluation will become standard, as AI systems increasingly process video, audio, and visual content alongside text. Predictive modeling will allow organizations to forecast how content changes will impact future AI visibility before publishing, enabling more confident optimization decisions. Bias detection and mitigation will become a core component of scoring, as AI systems face increasing scrutiny around fairness and representation. Cross-platform scoring will evolve to account for differences in how various AI systems (ChatGPT, Perplexity, Google AI Overviews, Claude) evaluate and prioritize content, allowing organizations to optimize for multiple platforms simultaneously. The integration of behavioral signals—such as how often users click through from AI answers to your content—will provide more direct performance feedback. Additionally, as Answer Engine Optimization (AEO) and Generative Engine Optimization (GEO) become mainstream practices, AI Content Scores will likely become as standard and essential as traditional SEO metrics are today. Organizations that invest in understanding and optimizing their AI Content Scores now will have a significant competitive advantage as these systems mature and become more influential in determining content visibility and business outcomes.

Traditional SEO scores focus primarily on keyword density, backlink profiles, and technical factors like page speed and mobile-friendliness. AI Content Scores, by contrast, evaluate how well content aligns with user intent, topical depth, semantic richness, and whether AI systems will find it authoritative and useful. While SEO scores measure optimization for search algorithms, AI Content Scores measure optimization for generative AI systems like ChatGPT and Perplexity that synthesize information from multiple sources to create answers.

AI systems use content quality signals to determine which sources are most authoritative and trustworthy for citation in generated responses. A higher AI Content Score indicates that content demonstrates expertise, originality, and comprehensive topical coverage, all factors that make it more likely to be selected as a source. When multiple sources address the same query, AI systems tend to prioritize those with stronger quality signals, so higher scores generally correlate with visibility in AI-generated answers across platforms like Google AI Overviews, Perplexity, and Claude.

The primary components include structural optimization (heading hierarchy, readability, formatting), semantic richness (entity density, topical coverage, internal linking), AI interpretability (schema markup validity and completeness), conversational relevance (alignment with user queries and Q&A format), and generative engagement rate (actual visibility in AI answers). Each component is typically scored on a 0-5 scale and then weighted according to strategic priorities before being normalized to a 0-100 final score.

While AI Content Scores provide strong directional guidance and correlate with performance potential, they are not perfect predictors. A high score indicates that content meets quality benchmarks and best practices, but actual rankings depend on additional factors including domain authority, backlink profile, user engagement metrics, and competitive landscape. AI Content Scores work best as part of a comprehensive optimization strategy rather than as standalone ranking guarantees, and should be combined with performance monitoring and ongoing refinement.

Content should be rescored whenever significant updates are made. Beyond that, high-priority pages are typically rescored quarterly, and the entire content library at least annually. As AI algorithms evolve and user search behavior changes, content that previously scored well may need optimization. Rescoring helps identify content that has degraded in quality or relevance, and allows teams to prioritize resources toward pages with the highest impact potential for both traditional search and AI visibility.
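
The rescoring cadence above can be expressed as a simple rule. In this sketch, the function name `needs_rescore` and the day counts used for "quarterly" (91 days) and "annually" (365 days) are assumptions made for illustration.

```python
from datetime import date, timedelta

# Approximate intervals; the exact day counts are an assumption.
QUARTER = timedelta(days=91)
YEAR = timedelta(days=365)


def needs_rescore(last_scored, today, high_priority=False, updated_since_scoring=False):
    """Decide whether a page is due for rescoring."""
    # A significant update always triggers a rescore.
    if updated_since_scoring:
        return True
    # Otherwise use the quarterly or annual interval.
    interval = QUARTER if high_priority else YEAR
    return today - last_scored >= interval


print(needs_rescore(date(2024, 1, 1), date(2024, 4, 15), high_priority=True))
print(needs_rescore(date(2024, 1, 1), date(2024, 4, 15)))
```

The first call covers a high-priority page last scored more than a quarter ago, so it is due. The second page is not high-priority and was scored less than a year ago, so it is not.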

Generally, scores of 70-89 indicate well-optimized content with good potential for AI visibility, while scores of 90+ represent best-in-class content. Scores below 40 typically indicate content requiring significant remediation. However, the ideal target depends on your industry, content type, and competitive landscape. Rather than chasing perfect scores, focus on consistency and continuous improvement, as the most valuable aspect of content scoring is the actionable feedback it provides for optimization, not the numerical rating itself.
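
The score ranges above map naturally onto a small lookup function. The text does not name the 40-69 range, so the "moderate" label below is an assumption, as is the function name `score_band`.

```python
def score_band(score):
    """Map a 0-100 AI Content Score to the bands described in the text."""
    if not 0 <= score <= 100:
        raise ValueError("score must be between 0 and 100")
    if score >= 90:
        return "best-in-class"
    if score >= 70:
        return "well-optimized"
    if score >= 40:
        # Assumed label: the text does not describe the 40-69 range.
        return "moderate"
    return "needs significant remediation"


for value in (95, 87, 55, 20):
    print(value, score_band(value))
```

Because the ideal target depends on industry, content type, and competition, the band boundaries are best treated as configurable defaults rather than fixed rules.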

AmICited tracks where your brand and content appear in AI-generated responses across platforms like ChatGPT, Perplexity, Google AI Overviews, and Claude. Understanding your AI Content Score helps explain why some content gets cited while other content does not. Higher-scoring content is more likely to be selected as an authoritative source by AI systems, which affects your visibility in AI responses. By combining AI Content Score optimization with AmICited's citation tracking, you can measure the correlation between content quality improvements and increased AI mentions and citations.
