What is BERT and is it still relevant in 2024-2025?

Learn about BERT, its architecture, applications, and current relevance. Understand how BERT compares to modern alternatives and why it remains essential for NL...

The BERT Update is Google’s October 2019 algorithm improvement that uses Bidirectional Encoder Representations from Transformers to enhance natural language understanding in search queries. It affects roughly one in ten English-language search queries in the United States by enabling Google to better understand context, prepositions, and semantic meaning in conversational and complex search phrases.


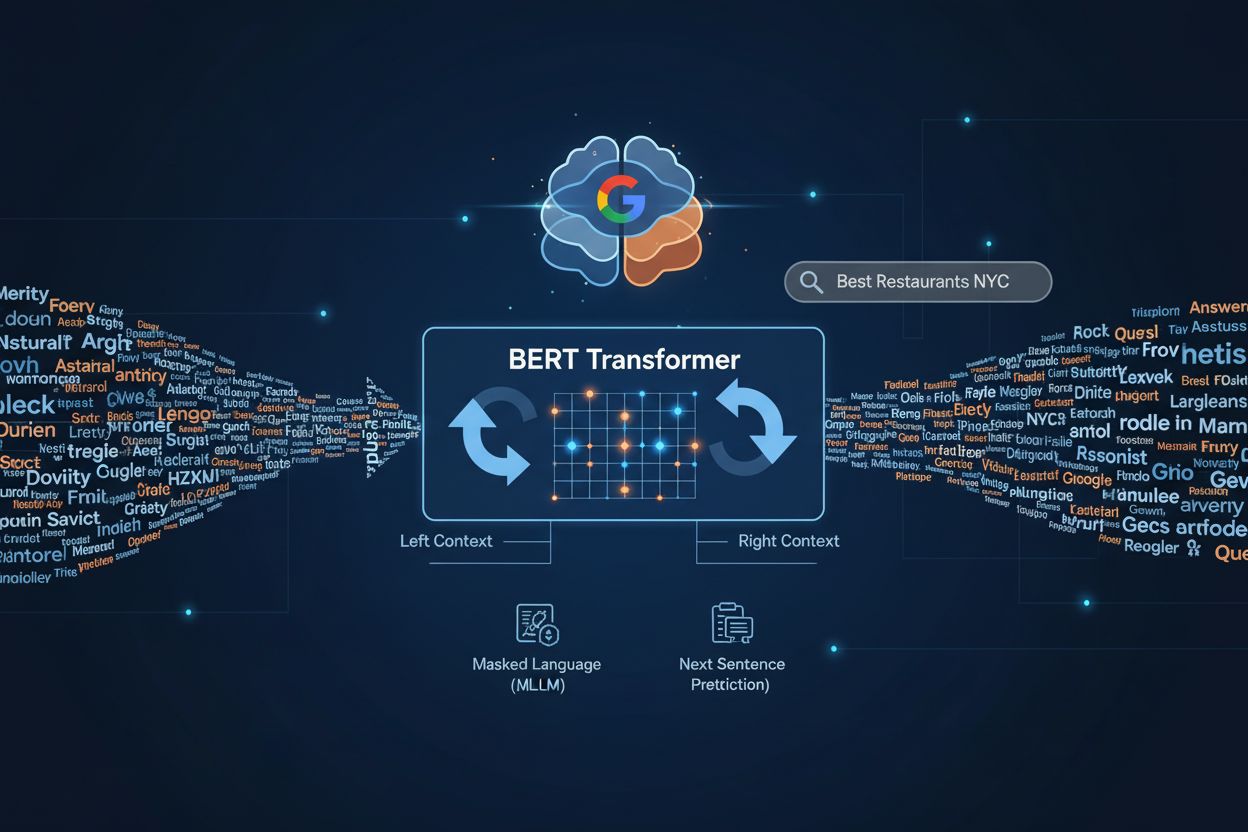

The BERT Update is a major Google search algorithm improvement announced on October 25, 2019, that fundamentally changed how the search engine understands natural language. BERT stands for Bidirectional Encoder Representations from Transformers, a neural network-based technique for natural language processing that enables Google to comprehend the context, nuance, and semantic meaning of words in search queries. Rather than analyzing words individually or sequentially from left to right, BERT processes text bidirectionally, examining each word in relation to all surrounding words at once, which allows Google to grasp the full contextual meaning of complex, conversational queries. According to Google’s official announcement by Pandu Nayak, Vice President of Search, this update represents one of the biggest leaps forward in search technology in the past five years, affecting about one in ten English-language search queries in the United States (an estimated 560 million queries a day). The BERT Update was particularly designed to improve results for longer, more natural language queries where prepositions and contextual relationships between words are critical to understanding user intent.

The development of BERT represents the culmination of years of research into natural language processing and machine learning at Google. Google researchers introduced BERT as an open-source framework in October 2018, building upon earlier advances in transformer-based neural networks. The technology emerged from Google’s broader efforts to move beyond simple keyword matching toward semantic understanding—a journey that began with the Hummingbird Update in 2013 and continued with RankBrain in 2015. However, while RankBrain helped Google understand novel queries by matching them to similar ones, BERT introduced a fundamentally different approach by reading text bidirectionally. This breakthrough was made possible by advances in machine learning and the availability of more powerful computing infrastructure, including Cloud TPUs (Tensor Processing Units), which Google deployed for the first time to serve search results at scale. The research team at Google AI recognized that previous algorithms struggled with understanding the importance of small words like “for,” “to,” and “no” in queries, often misinterpreting user intent. BERT’s bidirectional training methodology solved this problem by allowing the algorithm to consider the full context of every word in a sentence, not just the words that came before or after it in sequence.

BERT operates through a sophisticated neural network architecture that processes language in a fundamentally different way than previous algorithms. The core innovation is its bidirectional approach: instead of reading text from left to right or right to left sequentially, BERT analyzes all words in a sentence simultaneously, understanding each word’s meaning based on its relationship to every other word in the context. This is achieved through transformer models, which use attention mechanisms to weigh the importance of different words relative to each other. When a user enters a search query, BERT splits the text into subword tokens drawn from a fixed WordPiece vocabulary and then passes these tokens through a stack of transformer encoder layers (12 in BERT-Base, 24 in BERT-Large). Each layer refines the model’s representation of word relationships and contextual meaning. The “bidirectional” aspect is crucial: BERT doesn’t just look at what comes before a word to understand it; it also considers what comes after, providing a complete contextual picture. For example, in the query “do estheticians stand a lot at work,” BERT uses the full sentence context to recognize that “stand” refers to being on one’s feet as a physical demand of the job, rather than matching it to the term “stand-alone” in unrelated pages. This bidirectional processing enables BERT to handle ambiguous words with multiple meanings, understand the significance of prepositions, and grasp subtle linguistic nuances that previous algorithms missed. The model was trained on massive amounts of unlabeled text data, allowing it to learn language patterns and semantic relationships without requiring manual annotation.
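BERT’s tokenizer splits each word into WordPiece subword units using greedy longest-match-first lookup, marking word-internal pieces with a `##` prefix. The plain-Python sketch below illustrates only that lookup. Its vocabulary is a tiny hypothetical one built around the esthetician example (real BERT vocabularies hold roughly 30,000 entries), the function names `wordpiece` and `tokenize` are illustrative, and it skips the punctuation splitting and special tokens such as `[CLS]` and `[SEP]` that a real BERT pipeline adds.

```python
# Hypothetical toy vocabulary; "##" marks a piece that continues a word.
VOCAB = {
    "do", "stand", "a", "lot", "at", "work",
    "est", "##het", "##ician", "##s",
    "[UNK]",
}


def wordpiece(word: str, vocab: set[str], unk: str = "[UNK]") -> list[str]:
    """Split one word greedily, always taking the longest vocabulary match first."""
    pieces = []
    start = 0
    while start < len(word):
        end = len(word)
        match = None
        # Shrink the candidate from the right until it appears in the vocabulary.
        while start < end:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate  # word-internal piece
            if candidate in vocab:
                match = candidate
                break
            end -= 1
        if match is None:
            return [unk]  # no valid split: the whole word becomes unknown
        pieces.append(match)
        start = end
    return pieces


def tokenize(text: str, vocab: set[str]) -> list[str]:
    """Lowercase, split on whitespace, then apply WordPiece to each word."""
    tokens = []
    for word in text.lower().split():
        tokens.extend(wordpiece(word, vocab))
    return tokens


print(tokenize("Do estheticians stand a lot at work", VOCAB))
# ['do', 'est', '##het', '##ician', '##s', 'stand', 'a', 'lot', 'at', 'work']
```

Because rare words break into smaller known pieces instead of becoming unknown, a fixed-size vocabulary can still represent words like “estheticians”; only a word with no valid decomposition falls back to `[UNK]`.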

The practical impact of the BERT Update on search results has been substantial, particularly for complex and conversational queries. Google demonstrated this through several real-world examples in their official announcement. One notable example involved the query “2019 Brazil traveler to USA need a visa”—before BERT, Google’s algorithm focused too heavily on keyword matching and returned results about U.S. citizens traveling to Brazil, completely missing the directional context indicated by the word “to.” After BERT, the search engine correctly understood that the query was about a Brazilian traveling to the United States and returned relevant visa information for that specific scenario. Another example showed how BERT improved results for “do estheticians stand a lot at work” by understanding that “stand” referred to the physical demands of the job rather than matching it with “stand-alone” in irrelevant results. These improvements mean that users can now search in a more natural, conversational manner without resorting to what Google calls “keyword-ese”—the practice of typing awkward strings of keywords that users thought search engines would understand. With BERT, users can ask questions the way they would naturally speak, and Google will comprehend their intent more accurately. This shift has been particularly beneficial for voice search, where queries tend to be longer and more conversational. The update also improved featured snippets, with Google applying BERT models to better identify which content sections most accurately and concisely answer user questions, resulting in more relevant position-zero results.

| Algorithm | Year Released | Primary Focus | Processing Method | Query Impact | Key Innovation |
|---|---|---|---|---|---|
| Panda | 2011 | Content quality assessment | Content evaluation | Variable | Penalized low-quality and thin content |
| Hummingbird | 2013 | Natural language search | Semantic keyword analysis | ~90% of queries | Introduced semantic search and conversational queries |
| RankBrain | 2015 | Understanding novel queries | Sequential pattern matching | ~15% of queries | Handles unseen search queries through similarity matching |
| BERT | 2019 | Contextual language understanding | Bidirectional transformer analysis | ~10% of queries (U.S. English) | Reads text in both directions simultaneously for full context |
| MUM | 2021 (limited rollout) | Multimodal and multilingual understanding | Multitask unified model | Expanding | Described by Google as 1,000x more powerful than BERT; understands text and images, with video and audio announced as future modalities |

The BERT Update fundamentally shifted SEO best practices away from rigid keyword optimization toward semantic SEO and user intent alignment. Since BERT rewards naturally written, contextually relevant content, SEO professionals had to adapt their strategies accordingly. One critical implication is that keyword stuffing and artificial keyword placement became even less effective, as BERT can now distinguish between natural language usage and forced keyword insertion. Content creators must focus on writing clear, grammatically correct material that genuinely addresses user questions, rather than optimizing for specific keyword phrases. The update also emphasized the importance of topic clusters and comprehensive content coverage: instead of targeting individual keywords, successful SEO now involves creating in-depth content that thoroughly explores a topic from multiple angles, naturally incorporating related terms and concepts. Featured snippets became more competitive, as BERT’s improved understanding means only genuinely helpful, well-structured answers are likely to be selected for position zero. Additionally, the update highlighted the significance of prepositions and small connecting words that were previously overlooked; content must now use these words naturally and correctly, as BERT understands their importance to meaning. Long-tail keywords and conversational phrases became more valuable, as BERT excels at understanding these natural language patterns. However, BERT doesn’t replace traditional SEO fundamentals: backlinks, site speed, mobile optimization, and technical SEO remain critical ranking factors. BERT simply means that content quality, clarity, and semantic relevance have become more important than ever.

While BERT was specifically developed for Google Search, its principles and underlying technology have influenced how other AI systems process natural language. ChatGPT, Claude, Perplexity, and Google AI Overviews all rely on transformer-based models to interpret user queries and generate responses, although generative models such as those behind ChatGPT and Claude produce text left to right, one token at a time, rather than encoding a whole passage bidirectionally as BERT does. Understanding BERT’s approach to natural language processing is therefore relevant for anyone tracking how their content appears across multiple AI platforms. For Google AI Overviews (formerly SGE, the Search Generative Experience), BERT-style contextual understanding helps determine which sources are cited and how content is summarized in AI-generated responses. Because these systems model semantic meaning, content doesn’t need to match queries word for word to be selected; instead, content that addresses the underlying user intent is more likely to be featured. For Perplexity AI, which emphasizes source attribution and conversational search, BERT-like processing helps the system understand which sources best answer complex, multi-faceted questions. ChatGPT and Claude are built on transformer architectures at much larger scales than BERT, allowing them to understand nuanced user requests and generate contextually appropriate responses. This means that content optimized for BERT’s principles (clear, contextually relevant, naturally written material that addresses user intent) is more likely to be cited and featured across these AI platforms. For brands and content creators using AmICited to monitor their presence in AI search results, understanding BERT’s emphasis on semantic relevance and contextual meaning is crucial for optimizing content that will be selected by these AI systems.

Since its introduction in 2019, BERT has continued to influence Google’s search algorithm development. The technology served as a foundation for MUM (Multitask Unified Model), announced in May 2021, which Google describes as 1,000 times more powerful than BERT. MUM extends BERT’s capabilities by handling multiple types of content (Google’s announcement highlighted text and images, with video and audio named as future modalities) and by understanding information across different languages without requiring separate training for each language. This represents a significant leap forward in AI’s ability to understand and process information comprehensively. Looking forward, the trajectory of natural language processing in search suggests continued emphasis on semantic understanding, user intent recognition, and contextual relevance. As AI systems become more sophisticated, the distinction between keyword matching and semantic understanding will become even more pronounced. Content creators and SEO professionals should expect that future algorithm updates will further reward high-quality, naturally written content that genuinely addresses user needs. The rise of generative AI in search results means that understanding how algorithms like BERT interpret content is increasingly important for ensuring proper attribution and visibility. Additionally, as voice search and conversational AI continue to grow, BERT’s strength in processing natural language will remain relevant. The technology also has implications beyond search: BERT’s principles are being applied to content moderation, sentiment analysis, and other natural language processing tasks. For organizations monitoring their brand presence in AI systems, staying informed about BERT and related technologies helps explain why certain content gets selected for AI responses while other content doesn’t. The future of search will likely involve even more sophisticated understanding of user intent, context, and semantic meaning, building directly on the foundation that BERT established.

To optimize content for BERT and maintain visibility in modern search results, content creators should follow several practices:

- Write naturally and conversationally: Use language that sounds human rather than artificially optimized for keywords. BERT rewards content that reads well and communicates clearly.
- Focus on user intent: Understand what users actually want to find when they search for a particular topic, and create content that directly addresses that intent.
- Use comprehensive topic coverage: Instead of targeting individual keywords, create in-depth content that thoroughly explores a topic, naturally incorporating related concepts and terminology.
- Structure content clearly: Use headings, subheadings, bullet points, and logical flow to help both readers and search engines understand your content’s organization and meaning.
- Answer questions directly: Include FAQ sections and clear answers to common questions related to your topic, as BERT excels at matching question-based queries to relevant answers.
- Maintain grammatical accuracy: BERT relies on sentence structure to infer meaning, so proper grammar and syntax matter more than ever.
- Use prepositions and connecting words naturally: Don’t avoid small words like “for,” “to,” “by,” and “with”; they contribute to semantic meaning.
- Create content for humans first: BERT is designed to reward content that genuinely helps users, not content optimized for algorithms. The best SEO strategy is to create valuable, helpful content that serves your audience’s needs.
- Implement structured data: Use schema markup to help search engines understand your content’s meaning and context, complementing BERT’s natural language understanding.
- Monitor long-tail and conversational keywords: Track how your content performs for longer, more natural search phrases, as these are where BERT’s improvements are most visible.
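The structured-data recommendation can be illustrated with schema.org `FAQPage` markup, which is commonly embedded in a page as JSON-LD inside a `<script type="application/ld+json">` element. The sketch below builds that JSON-LD with Python’s standard `json` module. The helper name `faq_jsonld` is hypothetical, the sample question and answer are taken from this article for illustration, and the sketch says nothing about whether a given search engine will display or use the markup.

```python
import json


def faq_jsonld(pairs):
    """Build schema.org FAQPage JSON-LD from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2)


markup = faq_jsonld([
    ("What does BERT stand for?",
     "Bidirectional Encoder Representations from Transformers."),
])

# Wrap the JSON in the script element that carries JSON-LD inside HTML.
print(f'<script type="application/ld+json">\n{markup}\n</script>')
```

Generating the markup from the same question and answer strings that appear on the page helps keep the structured data and the visible FAQ text in sync.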


BERT stands for Bidirectional Encoder Representations from Transformers. Google researchers introduced BERT as an open-source machine learning framework in October 2018, and Google officially applied it to search rankings on October 25, 2019. This update represented one of the most significant improvements to Google Search in five years, fundamentally changing how the search engine processes and understands natural language queries.

While RankBrain (2015) helped Google understand novel search queries by matching them to similar ones, BERT goes deeper by reading text bidirectionally—analyzing words in relation to all surrounding words simultaneously rather than sequentially. BERT understands context, prepositions, and nuanced meaning more accurately than RankBrain, making it particularly effective for longer, conversational queries where small words like 'for' and 'to' significantly change meaning.
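The difference between reading sequentially and reading bidirectionally can be sketched as an attention mask. The plain-Python example below computes scaled dot-product attention weights for a hypothetical four-token query, once with a left-to-right (causal) mask and once with no mask, which is how BERT’s encoder attends. The two-dimensional token vectors are made up, and the sketch omits the learned query, key, and value projections, multiple attention heads, and stacked layers of a real Transformer; it illustrates the two reading directions, not how RankBrain itself works.

```python
import math


def softmax(scores):
    """Numerically stable softmax; -inf scores get exactly zero weight."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(vectors, causal):
    """Scaled dot-product attention weights, using each vector as both query and key.

    causal=True hides every token to the right of the current one (left-to-right
    reading); causal=False lets every token see the whole sequence, as in BERT.
    """
    d = len(vectors[0])
    weights = []
    for i, query in enumerate(vectors):
        scores = []
        for j, key in enumerate(vectors):
            if causal and j > i:
                scores.append(float("-inf"))  # future token is masked out
            else:
                dot = sum(q * k for q, k in zip(query, key))
                scores.append(dot / math.sqrt(d))
        weights.append(softmax(scores))
    return weights


# Hypothetical 2-D vectors for a four-token query (not learned embeddings).
tokens = ["brazil", "traveler", "to", "usa"]
vectors = [[1.0, 0.0], [0.5, 0.5], [0.2, 0.9], [0.0, 1.0]]

to_idx, usa_idx = tokens.index("to"), tokens.index("usa")
for causal in (True, False):
    w = attention_weights(vectors, causal)
    label = "left-to-right" if causal else "bidirectional"
    print(f"{label}: weight of 'to' on 'usa' = {w[to_idx][usa_idx]:.3f}")
```

Under the causal mask, “to” assigns exactly zero weight to “usa,” so a strictly left-to-right reader cannot use the destination when interpreting “to”; without the mask, that weight is positive and the destination informs the representation of “to.”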

Google stated that BERT affects about one in ten English-language search queries in the United States, a share that has been estimated at roughly 560 million queries per day. The update also affects featured snippets across 24 countries in multiple languages, demonstrating its global significance in improving search result relevance and accuracy.

There is no direct BERT optimization strategy like there is for mobile optimization. Instead, BERT rewards high-quality, naturally written content that clearly answers user questions. Focus on writing grammatically correct, contextually relevant content that addresses user intent comprehensively. Ensure your content uses natural language, covers topics thoroughly, and provides genuine value; these practices align with BERT's emphasis on semantic understanding over keyword matching.

BERT uses bidirectional processing: rather than reading in a single direction, it builds each word's representation from the words on both its left and its right at the same time, capturing how each word relates to every other word in the sentence. This allows BERT to grasp the full context and nuanced meaning of queries. For example, in '2019 brazil traveler to usa need a visa,' BERT understands that 'to' indicates travel from Brazil to the USA, not the reverse, which produces more relevant results.
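The ability to use context on both sides of a word is learned during pre-training. In the original BERT paper this relies on a masked-language-model objective: about 15% of input tokens are selected, and the model must recover each one from the surrounding words on both sides. Of the selected tokens, 80% are replaced with `[MASK]`, 10% with a random token, and 10% are left unchanged. The sketch below reproduces only that masking step on a whitespace-split query. Selecting each token independently with probability 0.15 is one common way to implement the 15% rate and is an assumption here, as are the hypothetical helper name `mask_tokens` and the use of the query itself as the random-token pool; no model is trained.

```python
import random


def mask_tokens(tokens, vocab, mask_rate=0.15, rng=None):
    """Corrupt tokens with BERT-style 80/10/10 masking.

    Returns (corrupted_tokens, targets), where targets maps each selected
    position to the original token the model would have to predict.
    """
    rng = rng or random.Random()
    corrupted = list(tokens)
    targets = {}
    for i, token in enumerate(tokens):
        if rng.random() >= mask_rate:
            continue  # not selected: left as-is and not predicted
        targets[i] = token
        roll = rng.random()
        if roll < 0.8:
            corrupted[i] = "[MASK]"           # 80%: hide the token
        elif roll < 0.9:
            corrupted[i] = rng.choice(vocab)  # 10%: swap in a random token
        # remaining 10%: keep the original token unchanged
    return corrupted, targets


query = "2019 brazil traveler to usa need a visa".split()
corrupted, targets = mask_tokens(query, vocab=query, rng=random.Random(42))
print(corrupted)  # the input the model would see
print(targets)    # positions and original tokens the model must recover
```

Which positions get selected depends on the random seed; on a short query, it is possible that none are. Because a hidden word such as “usa” can only be recovered from the words on both sides of it, this objective pushes the encoder to use left and right context together.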

Google applies BERT models to both search rankings and featured snippets. BERT improves featured snippet selection by better understanding which content sections most accurately and concisely answer user questions. This means pages with clear, well-structured answers to common questions are more likely to be selected for position zero, as BERT can now more accurately evaluate content relevance and answer quality.

BERT significantly improves voice search performance because voice queries tend to be more conversational and natural than typed queries. Since BERT excels at understanding natural language, longer phrases, and contextual meaning, it delivers better results for voice searches. Users can now ask questions in a natural, conversational manner without resorting to 'keyword-ese,' and BERT will understand their intent more accurately.

No, BERT complements rather than replaces traditional SEO fundamentals. Backlinks, site speed, mobile optimization, and technical SEO remain important ranking factors. BERT specifically improves how Google understands content meaning and user intent, so it works alongside these other ranking signals. A comprehensive SEO strategy must address all factors—BERT simply means content quality and natural language clarity have become more critical.
