How to Handle Duplicate Content for AI Search Engines

Learn how to manage and prevent duplicate content when using AI tools. Discover canonical tags, redirects, detection tools, and best practices for maintaining u...

Duplicate content refers to identical or highly similar content appearing on multiple URLs, either within the same website or across different domains. This issue confuses search engines and dilutes the ranking authority of pages, negatively impacting SEO performance and visibility in both traditional search and AI-powered search results.

Duplicate content refers to identical or highly similar content appearing on multiple URLs, either within the same website (internal duplication) or across different domains (external duplication). This fundamental SEO issue occurs when search engines encounter multiple versions of the same material and must determine which version is most relevant to index and display in search results. According to research cited by industry experts, approximately 25-30% of all web content is duplicate, making this one of the most pervasive challenges in digital marketing. The problem extends beyond traditional search engines to AI-powered search systems like Perplexity, ChatGPT, Google AI Overviews, and Claude, where duplicate content creates confusion about content authority and original sources. For a page to qualify as duplicate content, it must have noticeable overlap in wording, structure, and format with another piece, little to no original information, and minimal added value compared to similar pages.

The concept of duplicate content has evolved significantly since the early days of search engine optimization. When search engines first emerged in the 1990s, duplicate content was less of a concern because the web was smaller and more fragmented. However, as the internet expanded and content management systems became more sophisticated, the ability to create multiple URLs serving identical content became trivial. Google’s official stance on duplicate content, established through multiple communications from their webmaster team, clarifies that while they don’t penalize honest duplicate content, they do address it algorithmically by selecting a canonical version to index and rank. This distinction is crucial: Google doesn’t issue manual penalties for technical duplication, but the presence of duplicates still harms SEO performance through authority dilution and crawl budget waste.

The rise of e-commerce platforms, content management systems, and URL parameter tracking in the 2000s and 2010s dramatically increased duplicate content issues. Session IDs, sorting parameters, and filtering options created virtually infinite URL combinations serving identical content. At the same time, content syndication became a standard practice, with publishers republishing content across multiple domains. The rise of AI search engines built on large language models in 2023-2024 introduced a new dimension to duplicate content challenges. These systems must determine not only which URL to rank but also which source to cite when multiple identical versions exist. This creates opportunities for brand monitoring platforms like AmICited to track how duplicate content affects visibility across AI search engines.

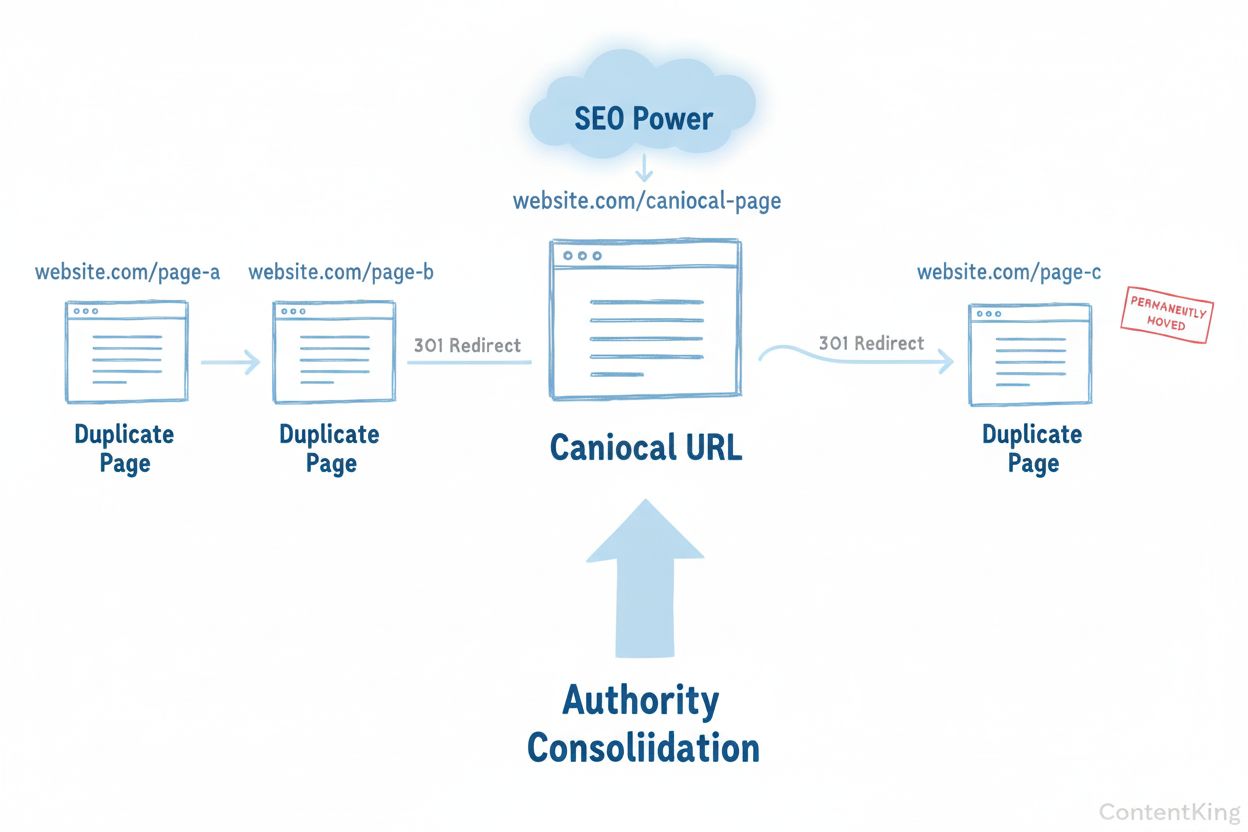

Duplicate content negatively impacts SEO through multiple mechanisms, each reducing your site’s visibility and ranking potential. The primary issue is authority dilution: when you have multiple URLs with identical content, any backlinks pointing to those pages are split across versions instead of consolidated on a single authoritative page. For example, if one version receives 50 backlinks and another receives 30, you’re splitting your ranking power instead of having 80 backlinks pointing to one page. This fragmentation significantly weakens your ability to rank for competitive keywords.

Search engines also face indexation challenges when encountering duplicate content. They must decide which version to include in their index and which to exclude. If Google chooses the wrong version—perhaps a lower-quality or less authoritative URL—your preferred page may not rank at all. Additionally, duplicate content wastes crawl budget, the limited time and resources search engines allocate to crawl your website. A study by industry experts found that fixing duplicate content issues alone can result in 20% or higher increases in organic traffic for affected sites. This dramatic improvement occurs because search engines can now focus crawling resources on unique, valuable content rather than wasting time on duplicates.

The impact extends to click-through rates and user experience. When multiple versions of the same content appear in search results, users may click on a lower-quality version, leading to higher bounce rates and reduced engagement signals. For AI search engines and LLMs, duplicate content creates additional confusion about content authority and original sources. When ChatGPT or Perplexity encounters multiple identical versions of content, the system must determine which URL represents the authoritative source for citation purposes. This uncertainty can result in citations to non-preferred URLs or inconsistent attribution across different AI responses.

| Issue Type | Cause | Internal/External | Best Solution | Strength of Signal |
|---|---|---|---|---|
| URL Parameters | Tracking, filtering, sorting (e.g., ?color=blue&size=10) | Internal | Canonical tags pointing to the parameter-free URL (Search Console's URL Parameters tool has been retired) | Strong |
| Domain Variations | HTTP vs. HTTPS, www vs. non-www | Internal | 301 redirects to preferred version | Very Strong |
| Pagination | Content split across multiple pages | Internal | Self-referencing canonical tags | Moderate |
| Session IDs | Visitor tracking appended to URLs | Internal | Self-referencing canonical tags | Strong |
| Content Syndication | Authorized republishing on other domains | External | Canonical tags + noindex on syndicated versions | Moderate |
| Content Scraping | Unauthorized copying on other domains | External | DMCA takedown requests + canonical tags | Weak (requires enforcement) |
| Trailing Slashes | URLs with and without trailing slash | Internal | 301 redirects to standardized format | Very Strong |
| Print-Friendly Versions | Separate URL for printable content | Internal | Canonical tag pointing to main version | Strong |
| Landing Pages | Similar pages for paid search campaigns | Internal | Noindex tag on landing pages | Strong |
| Staging Environments | Testing sites accidentally indexed | Internal | HTTP authentication or noindex | Very Strong |

Understanding how duplicate content technically manifests is essential for implementing effective solutions. URL parameters represent one of the most common technical causes, particularly in e-commerce and content-heavy websites. When a website uses parameters for filtering (e.g., example.com/shoes?size=9&color=blue), each parameter combination creates a new URL with identical or nearly identical content. A single product page with five size options and ten color options creates 50 different URLs serving essentially the same content. Search engines must crawl and process each variation, consuming crawl budget and potentially fragmenting ranking authority.
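
The arithmetic behind that figure can be sketched in a few lines of Python. This is only an illustration: the size and color values and the `filter_urls` helper are hypothetical, since the article gives only the counts (five sizes, ten colors).

```python
from itertools import product
from urllib.parse import urlencode

# Hypothetical filter values; only the counts (5 and 10) come from the article.
SIZES = [6, 7, 8, 9, 10]
COLORS = ["black", "blue", "brown", "gray", "green",
          "navy", "red", "tan", "white", "yellow"]

def filter_urls(base_url, sizes, colors):
    """Return one URL for every size/color combination."""
    return [
        f"{base_url}?{urlencode({'size': size, 'color': color})}"
        for size, color in product(sizes, colors)
    ]

urls = filter_urls("https://example.com/shoes", SIZES, COLORS)
print(len(urls))  # 50
print(urls[0])    # https://example.com/shoes?size=6&color=black
```

Adding a third filter multiplies the count again, which is why filter-heavy catalogs can produce far more crawlable URLs than they have pages.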

Domain configuration issues create another major source of duplication. Many websites are accessible through multiple domain variations: http://example.com, https://example.com, http://www.example.com, and https://www.example.com. Without proper configuration, all four versions may be indexed as separate pages. Similarly, trailing slash inconsistencies (URLs ending with or without a forward slash) and URL casing (Google treats URLs as case-sensitive) create additional duplicate versions. A single page might be accessible through example.com/products/shoes/, example.com/products/shoes, example.com/Products/Shoes, and example.com/products/Shoes/, each potentially indexed as a separate page.
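
One way to reason about these variants is to map each of them to a single preferred form. The sketch below assumes that HTTPS, the www host, lowercase paths, and a trailing slash are the preferred format, and that no path on the site is genuinely case-sensitive or a file name. The `preferred_url` helper is hypothetical; a real site would pick its own conventions.

```python
from urllib.parse import urlsplit, urlunsplit

def preferred_url(url, host="www.example.com"):
    """Map scheme, host, casing, and trailing-slash variants to one form.

    Assumes HTTPS + www + lowercase path + trailing slash is preferred.
    """
    parts = urlsplit(url)
    path = parts.path.lower() or "/"
    if not path.endswith("/"):
        path += "/"
    # The fragment is dropped; the query string is kept unchanged.
    return urlunsplit(("https", host, path, parts.query, ""))

variants = [
    "http://example.com/products/shoes",
    "https://example.com/products/shoes/",
    "http://www.example.com/Products/Shoes",
    "https://www.example.com/products/Shoes/",
]
print({preferred_url(u) for u in variants})
# {'https://www.example.com/products/shoes/'}
```

The same mapping can drive redirect rules, canonical tags, and internal links so that all three signals agree on one URL.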

Session IDs and tracking parameters add another layer of complexity. When websites append session identifiers or tracking codes to URLs (e.g., ?utm_source=twitter&utm_medium=social&utm_campaign=promo), each unique combination creates a new URL. While these parameters serve legitimate tracking purposes, they create duplicate content from a search engine perspective. Pagination across multiple pages also generates duplicate content issues, particularly when pages contain overlapping content or when search engines struggle to understand the relationship between paginated pages.
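
As a rough illustration, the parameter-free URL for a tracked link can be derived by dropping parameters that do not change the page. The parameter names in `TRACKING_PARAMS` are an assumed list for this sketch, not a standard, and `strip_tracking` is a hypothetical helper.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed names of parameters that never change page content.
TRACKING_PARAMS = {"sessionid", "sid", "gclid", "fbclid"}

def strip_tracking(url):
    """Remove utm_* and other assumed tracking parameters from a URL."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if not key.lower().startswith("utm_")
        and key.lower() not in TRACKING_PARAMS
    ]
    return urlunsplit(
        (parts.scheme, parts.netloc, parts.path, urlencode(kept), "")
    )

print(strip_tracking(
    "https://example.com/sale?utm_source=twitter&utm_medium=social&utm_campaign=promo"
))
# https://example.com/sale
print(strip_tracking("https://example.com/shoes?color=blue&utm_source=twitter"))
# https://example.com/shoes?color=blue
```

The cleaned URL is a natural value for the canonical tag on every tracked variant of the page.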

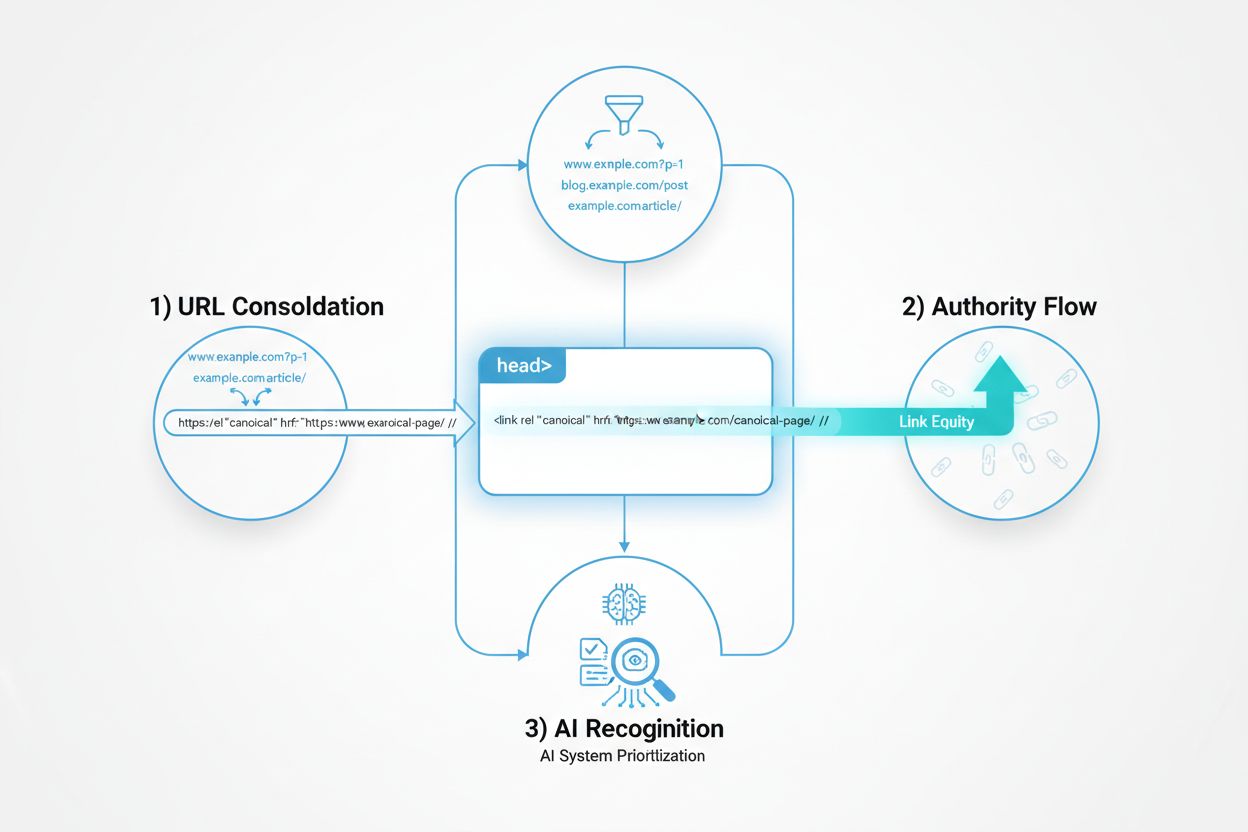

The emergence of AI-powered search engines and large language models has introduced new dimensions to duplicate content challenges. When Perplexity, ChatGPT, Google AI Overviews, and Claude encounter multiple identical versions of content, they must determine which source to cite and how to attribute information. This creates significant implications for brand monitoring and visibility tracking. A platform like AmICited that monitors where your brand appears in AI responses must account for duplicate content when tracking citations.

For example, if your company publishes an article on your official website (company.com/blog/article), but the same content is syndicated on three other domains, an AI system might cite any of these four versions. From a brand visibility perspective, citations to non-preferred URLs dilute your brand authority and may direct traffic to competitor sites or lower-quality republications. Duplicate content across domains also creates challenges for AI systems in determining original authorship. If a competitor scrapes your content and publishes it on their domain before search engines index your version, AI systems might incorrectly attribute the content to the competitor.

The consolidation of authority becomes even more critical in the AI search landscape. When you implement canonical tags or 301 redirects to consolidate duplicate content, you’re not just improving traditional search rankings—you’re also improving the likelihood that AI systems will correctly identify and cite your preferred URL. This is particularly important for brand protection and thought leadership, where being cited as the authoritative source matters for credibility and traffic. Organizations using AmICited to monitor their AI visibility benefit from understanding how duplicate content affects their appearance in AI responses across multiple platforms.

Duplicate content originates from both technical and intentional sources, each requiring different solutions. On the technical side, misconfigured web servers represent a leading cause. When servers aren’t properly configured to standardize domain formats, content becomes accessible through multiple URLs. A homepage might be reachable through example.com, www.example.com, example.com/index.html, and example.com/index.php, each potentially indexed as a separate page. Content management systems also frequently create duplicates through their taxonomy and categorization features. A blog post assigned to multiple categories might be accessible through different category URLs, each serving the same content.

E-commerce platforms generate substantial duplicate content through product filtering and sorting. When customers filter products by size, color, price range, or other attributes, each filter combination creates a new URL. Without proper canonicalization, a single product might have hundreds of duplicate URLs. Pagination across article series or product listings creates additional duplication, particularly when pages contain overlapping content or when search engines struggle to understand the relationship between pages in a series.

Intentional duplication stems from legitimate business practices that create unintended SEO consequences. Content syndication, where publishers republish content on multiple domains with permission, creates external duplicates. Landing pages for paid search campaigns often duplicate existing content with minor modifications to target specific keywords. Print-friendly versions of articles create separate URLs with identical content. While these practices serve legitimate purposes, they create duplicate content that requires proper management through canonical tags or noindex directives.

Unauthorized content scraping represents the most problematic form of external duplication. Competitors or content aggregators copy your content and republish it on their domains, sometimes ranking above your original content if their domain has higher authority. This is particularly damaging because you lose traffic and authority to unauthorized copies of your own content.

Fixing duplicate content requires a multi-faceted approach tailored to the specific cause and context. The strongest solution is implementing 301 redirects, which permanently move one URL to another and transfer all ranking authority to the target URL. This method is ideal when you want to eliminate duplicate URLs entirely, such as when standardizing domain formats (redirecting HTTP to HTTPS or non-www to www versions). Most hosting providers and content management systems offer straightforward ways to implement 301 redirects through configuration files or admin panels.
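
In practice this is usually configured in the web server or CMS, as noted above. The following Python WSGI sketch only illustrates the logic of a domain-standardizing redirect; `PREFERRED_HOST`, `app`, and `simulate` are hypothetical names, and the choice of HTTPS plus www as the preferred form is an assumption.

```python
PREFERRED_HOST = "www.example.com"  # assumed preferred host

def app(environ, start_response):
    """Send a 301 to the HTTPS + preferred-host URL, else serve the page."""
    scheme = environ.get("wsgi.url_scheme", "http")
    host = environ.get("HTTP_HOST", "")
    path = environ.get("PATH_INFO", "/")
    query = environ.get("QUERY_STRING", "")

    if scheme != "https" or host != PREFERRED_HOST:
        location = f"https://{PREFERRED_HOST}{path}"
        if query:
            location += "?" + query
        start_response("301 Moved Permanently",
                       [("Location", location), ("Content-Length", "0")])
        return [b""]

    body = b"preferred version of the page\n"
    start_response("200 OK", [("Content-Type", "text/plain"),
                              ("Content-Length", str(len(body)))])
    return [body]

def simulate(scheme, host, path, query=""):
    """Call the app with a minimal fake request; return (status, headers)."""
    captured = {}

    def start_response(status, headers):
        captured["status"] = status
        captured["headers"] = dict(headers)

    environ = {"wsgi.url_scheme": scheme, "HTTP_HOST": host,
               "PATH_INFO": path, "QUERY_STRING": query}
    app(environ, start_response)
    return captured["status"], captured["headers"]

status, headers = simulate("http", "example.com", "/blog/article", "ref=1")
print(status, headers["Location"])
# 301 Moved Permanently https://www.example.com/blog/article?ref=1
```

Redirecting in a single hop to the final URL, rather than chaining HTTP to HTTPS and then non-www to www, keeps the signal simple for crawlers.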

Canonical tags provide a powerful alternative when you need to keep multiple URLs accessible to users but want search engines to prioritize one version. By adding <link rel="canonical" href="https://preferred-url.com"> to the head section of duplicate pages, you signal your preference without requiring redirects. This approach works particularly well for URL parameters, pagination, and syndicated content. The canonical tag tells search engines to consolidate ranking authority and backlink power to the specified URL while still allowing the duplicate URL to remain accessible.
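
When auditing a site, it can help to check which canonical URL a page actually declares. The sketch below uses Python's standard `html.parser` module; the `CanonicalFinder` class and `find_canonical` function are hypothetical names, and the sample page is made up for illustration.

```python
from html.parser import HTMLParser

class CanonicalFinder(HTMLParser):
    """Record the href of the first <link rel="canonical"> in a document."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        rels = (attrs.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rels and self.canonical is None:
            self.canonical = attrs.get("href")

def find_canonical(html):
    """Return the declared canonical URL, or None if the page has none."""
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonical

page = """<html><head>
<title>Blue shoes</title>
<link rel="canonical" href="https://example.com/shoes">
</head><body>...</body></html>"""

print(find_canonical(page))                      # https://example.com/shoes
print(find_canonical("<html><head></head></html>"))  # None
```

Running a check like this across parameterized URLs is a quick way to confirm that they all point at the same preferred version.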

Noindex tags prevent search engines from indexing specific pages while keeping them accessible to users. This solution works well for landing pages, print-friendly versions, staging environments, and internal search result pages that shouldn’t appear in search results. By adding <meta name="robots" content="noindex"> to the page's head section, you tell search engines to exclude the page from their index without requiring redirects or canonical tags.
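
A simple audit can confirm that pages meant to stay out of the index, such as a staging copy, really carry the directive. This is a minimal sketch using Python's standard `html.parser`; `RobotsMetaParser` and `is_noindexed` are hypothetical names, and the check only looks at the robots meta tag in the HTML, not at HTTP headers.

```python
from html.parser import HTMLParser

class RobotsMetaParser(HTMLParser):
    """Collect directives from every <meta name="robots"> tag."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            content = attrs.get("content") or ""
            self.directives.update(
                part.strip().lower()
                for part in content.split(",")
                if part.strip()
            )

def is_noindexed(html):
    """Return True if the page's robots meta tag includes noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives

print(is_noindexed('<head><meta name="robots" content="noindex"></head>'))
# True
print(is_noindexed('<head><meta name="robots" content="index, follow"></head>'))
# False
```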

Content differentiation addresses duplicate content by making each page unique and valuable. Rather than having multiple similar pages, you can rewrite content with unique insights, add original research or expert quotes, include practical examples, and provide actionable steps. This approach transforms potential duplicates into complementary content that serves different purposes and audiences.

For external duplicate content caused by unauthorized scraping, you can submit DMCA takedown requests through Google’s legal troubleshooter tool. You can also contact the website owner directly and request removal or proper attribution with canonical tags. If direct contact fails, legal action may be necessary to protect your intellectual property.

The definition and impact of duplicate content continue to evolve as search technology advances and new platforms emerge. Historically, duplicate content was primarily a concern for traditional search engines like Google, Bing, and Yahoo. However, the rise of AI-powered search engines and large language models has introduced new dimensions to this challenge. These systems must not only identify duplicate content but also determine which version represents the authoritative source for citation purposes.

Future trends suggest that duplicate content management will become increasingly important for brand visibility and authority in AI search. As more users rely on AI search engines for information, the ability to control which version of your content gets cited becomes critical. Organizations will need to implement proactive duplicate content management strategies not just for traditional SEO but specifically to optimize their appearance in AI responses. This includes ensuring that canonical URLs are clearly specified, that preferred versions are easily discoverable by AI crawlers, and that brand attribution is unambiguous.

The integration of AI monitoring tools like AmICited into standard SEO workflows represents an important evolution. These platforms help organizations understand how duplicate content affects their visibility across multiple AI search engines simultaneously. As AI systems become more sophisticated at identifying original sources and attributing content correctly, the importance of proper canonicalization and duplicate content management will only increase. Organizations that proactively manage duplicate content today will be better positioned to maintain visibility and authority in tomorrow’s AI-driven search landscape.

Emerging technologies like blockchain-based content verification and decentralized identity systems may eventually provide additional tools for managing duplicate content and proving original authorship. However, for the foreseeable future, traditional solutions like canonical tags, 301 redirects, and noindex directives remain the most effective approaches. The key is implementing these solutions consistently and monitoring their effectiveness across both traditional search engines and AI-powered search systems to ensure your brand maintains optimal visibility and authority.

Internal duplicate content occurs when multiple URLs on the same website contain identical or highly similar content, such as product descriptions appearing on multiple pages or pages accessible through different URL parameters. External duplicate content refers to identical content existing on different domains, often through content syndication or unauthorized scraping. Both types negatively impact SEO, but internal duplication is more controllable through technical solutions like canonical tags and 301 redirects.

Google does not typically issue manual penalties for duplicate content unless it appears intentional and designed to manipulate search rankings at scale. However, duplicate content still harms SEO performance by confusing search engines about which version to index and rank, diluting backlink authority across multiple URLs, and wasting crawl budget. The key distinction is that Google addresses the issue through algorithmic selection rather than punitive penalties for honest technical mistakes.

Duplicate content creates challenges for AI systems like ChatGPT, Perplexity, and Claude when determining which version to cite as the authoritative source. When multiple URLs contain identical content, AI models may struggle to identify the original source, potentially citing lower-authority versions or creating confusion about content ownership. This is particularly important for brand monitoring platforms tracking where your content appears in AI responses, as duplicate content can fragment your visibility across AI search engines.

Common causes include URL parameters used for tracking or filtering (e.g., ?color=blue&size=large), domain variations (HTTP vs. HTTPS, www vs. non-www), pagination across multiple pages, content syndication, session IDs, print-friendly versions, and misconfigured web servers. Technical issues like trailing slashes, URL casing inconsistencies, and index pages (index.html, index.php) also create duplicates. Additionally, human-driven causes such as copying content for landing pages or other websites republishing your content without permission contribute significantly to duplicate content problems.

A canonical tag is an HTML element (rel="canonical") that specifies which URL is the preferred version when multiple URLs contain identical or similar content. By adding a canonical tag to duplicate pages pointing to the main version, you signal to search engines which page should be indexed and ranked. This consolidates ranking authority and backlink power to a single URL without requiring redirects, making it ideal for situations where you need to keep multiple URLs accessible to users but want search engines to prioritize one version.

You can identify duplicate content using Google Search Console's Page indexing report (formerly Index Coverage), which flags duplicate pages with statuses such as "Duplicate without user-selected canonical". Tools like Semrush Site Audit, Screaming Frog, and Conductor can scan your entire website and flag pages that are at least 85% identical. For external duplicate content, services like Copyscape search the web for copies of your content. Regular audits checking for unique page titles, meta descriptions, and H1 headings also help identify internal duplication issues.
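
To make the idea of an "85% identical" threshold concrete, here is a minimal sketch that compares pages by the overlap of their three-word sequences (Jaccard similarity over word shingles). This metric is an assumption chosen for illustration; it is not a description of how the tools named above compute similarity. The page texts and function names are hypothetical.

```python
import re
from itertools import combinations

def shingles(text, size=3):
    """Return the set of overlapping word sequences of the given size."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    if len(words) < size:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}

def similarity(a, b, size=3):
    """Jaccard similarity of two texts' shingle sets, from 0.0 to 1.0."""
    sa, sb = shingles(a, size), shingles(b, size)
    if not sa and not sb:
        return 1.0
    return len(sa & sb) / len(sa | sb)

def near_duplicates(pages, threshold=0.85):
    """Return (url_a, url_b, score) for page pairs at or above the threshold."""
    results = []
    for url_a, url_b in combinations(sorted(pages), 2):
        score = similarity(pages[url_a], pages[url_b])
        if score >= threshold:
            results.append((url_a, url_b, score))
    return results

pages = {
    "/shoes": "Lightweight running shoes with breathable mesh and cushioned soles.",
    "/shoes?sort=price": "Lightweight running shoes with breathable mesh and cushioned soles.",
    "/about": "Our company was founded to make comfortable footwear for everyone.",
}
print(near_duplicates(pages))
# [('/shoes', '/shoes?sort=price', 1.0)]
```

Comparing every pair of pages scales quadratically, so a sketch like this suits spot checks on a few hundred pages rather than a full crawl of a large site.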

Duplicate content wastes your site's crawl budget—the limited time and resources search engines allocate to crawl your website. When Googlebot encounters multiple versions of the same content, it spends crawling resources on duplicates instead of discovering and indexing new or updated pages. For large websites, this can significantly reduce the number of unique pages indexed. By consolidating duplicates through canonical tags, 301 redirects, or noindex tags, you preserve crawl budget for content that matters, improving overall indexation and ranking potential.
