Financial Services AI Visibility: Compliance and Optimization

Monitor how your financial brand appears in AI-generated answers. Learn compliance requirements, visibility strategies, and optimization techniques for regulate...

Regulatory requirements for financial institutions to transparently disclose their use of artificial intelligence in decision-making, risk management, and customer-facing applications. Encompasses SEC, CFPB, FINRA, and other regulatory expectations for documenting AI governance, model performance, and impacts on consumers and markets.

Financial AI Disclosure refers to the regulatory requirements and best practices for financial institutions to transparently disclose their use of artificial intelligence in decision-making processes, risk management, and customer-facing applications. It encompasses SEC, CFPB, FINRA, and other regulatory expectations for documenting AI governance, model performance, and potential impacts on consumers and markets. In December 2024, the SEC’s Investor Advisory Committee (IAC) advanced a formal recommendation that the agency issue guidance requiring issuers to disclose information about the impact of artificial intelligence on their companies. The IAC cited a “lack of consistency” in contemporary AI disclosures, which “can be problematic for investors seeking clear and comparable information.” Only 40% of S&P 500 companies provide AI-related disclosures, and just 15% disclose information about board oversight of AI, despite 60% of S&P 500 companies viewing AI as a material risk.

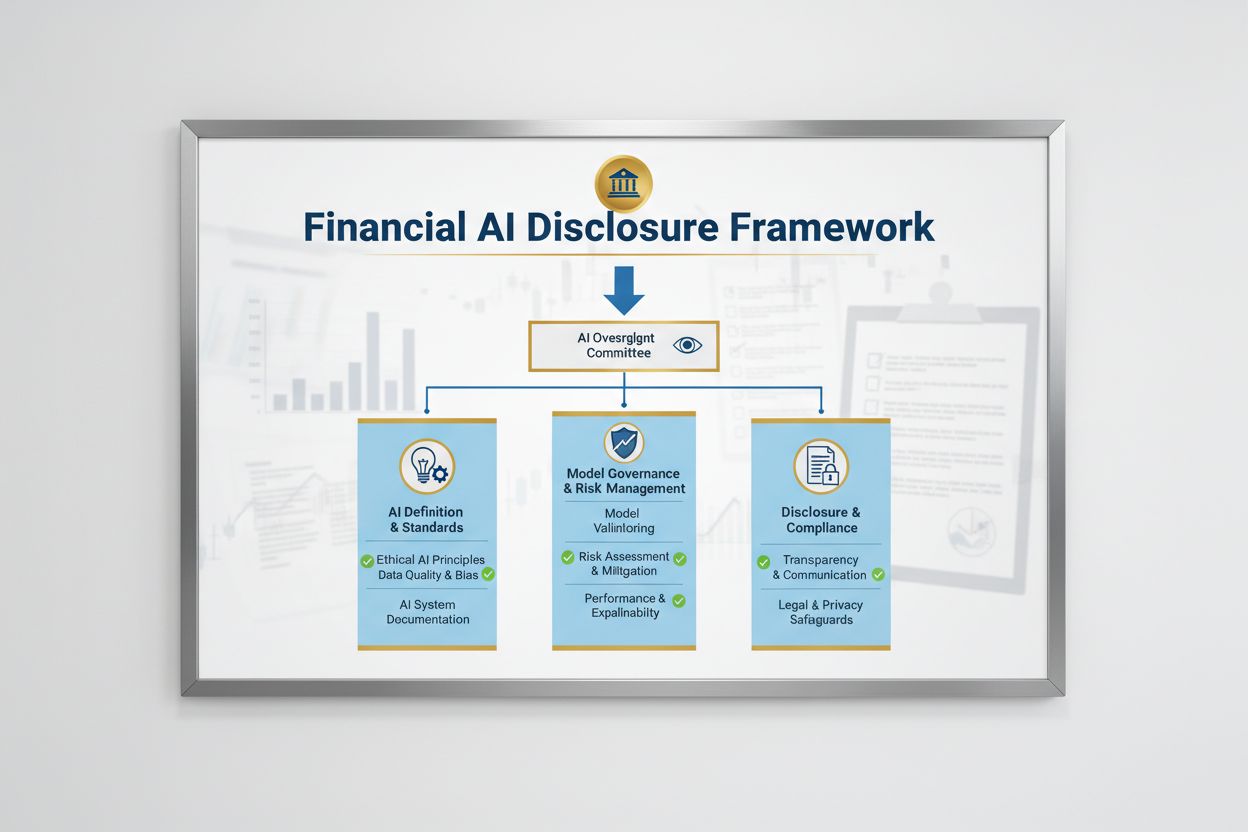

The regulatory landscape for Financial AI Disclosure is shaped by multiple federal agencies applying existing technology-neutral laws to AI systems. The SEC’s Investor Advisory Committee proposed an initial framework with three key pillars: (1) requiring issuers to define what they mean by “Artificial Intelligence,” (2) disclosing board oversight mechanisms for AI deployment, and (3) reporting on material AI deployments and their effects on internal operations and consumer-facing products. Beyond the SEC, the CFPB, FINRA, OCC, and Federal Reserve have all issued guidance emphasizing that existing consumer protection laws—including fair lending, data privacy, and anti-fraud statutes—apply to AI systems regardless of the technology used. These agencies take a technology-neutral approach, meaning that compliance obligations under the Equal Credit Opportunity Act (ECOA), Fair Credit Reporting Act (FCRA), Gramm-Leach-Bliley Act (GLBA), and Unfair or Deceptive Acts or Practices (UDAAP) standards remain unchanged when AI is involved.

| Regulator | Focus Area | Key Requirement |
|---|---|---|
| SEC | Investor disclosure, conflicts of interest | Define AI, disclose board oversight, report material deployments |
| CFPB | Fair lending, consumer protection | Ensure AI models comply with ECOA, provide adverse action notices |
| FINRA | Broker-dealer operations, customer communications | Establish AI governance policies, supervise AI usage across all functions |
| OCC/Federal Reserve/FDIC | Bank model governance, operational risk | Validate AI models, document controls, assess reliability |
| FTC | Deceptive practices, data handling | Monitor AI-related claims, prevent unfair data use |

Effective Financial AI Disclosure requires clear board-level oversight of artificial intelligence deployment and risk management. The SEC’s IAC recommendation emphasizes that investors have a legitimate interest in understanding whether there are clear lines of authority regarding the deployment of AI technology on internal business operations and product lines. Financial institutions must establish governance structures that assign responsibility for AI oversight to the Board of Directors or a designated board committee, ensuring that AI-related risks are managed at the highest levels of the organization. This governance framework should include documented policies and procedures for AI development, testing, validation, and deployment, with regular reporting to the board on model performance, identified risks, and remediation efforts. Without clear board accountability, financial institutions risk regulatory scrutiny and potential enforcement actions for inadequate oversight of material AI systems.

Financial institutions must separately disclose the material effects of AI deployment on internal business operations and consumer-facing products. For internal operations, disclosures should address the impact of AI on human capital (such as workforce reductions or upskilling requirements), financial reporting accuracy, governance processes, and cybersecurity risks. For consumer-facing matters, institutions should disclose investments in AI-driven platforms, integration of AI within products and services, regulatory impacts from AI use, and how AI influences pricing strategies or business benchmarks. The SEC recommends integrating these disclosures into existing Regulation S-K disclosure items (such as Items 101, 103, 106, and 303) on a materiality-informed basis, rather than establishing entirely new disclosure categories. This approach allows financial institutions to leverage existing disclosure frameworks while providing investors with clear, comparable information about AI’s role in the organization’s operations and strategic direction.

High-quality data is foundational to responsible AI deployment in financial services, yet many institutions struggle with data governance frameworks that meet regulatory expectations. Financial institutions must ensure that data used to train, test, and validate AI models is clean, complete, standardized, and comprehensive, with clear documentation of data sources, lineage, and any known limitations. Data security protections must prevent “data poisoning”—the manipulation of training data to compromise model integrity—and safeguard against unauthorized access or disclosure of sensitive information. The Gramm-Leach-Bliley Act (GLBA) provides baseline protections for consumer financial data, but many regulators and consumer advocates argue that these protections are insufficient in the AI context, particularly given the expansion of data collection and use for model training. Financial institutions must also address intellectual property concerns, ensuring that they have proper authorization to use data for AI development and that they respect third-party intellectual property rights. Implementing robust data governance frameworks, including data minimization principles, access controls, and regular audits, is essential for demonstrating compliance with regulatory expectations.
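
The documentation of sources, lineage, and known limitations described above can be sketched in code. The following is a minimal illustration, not a regulatory template: the `DatasetRecord` fields, the helper names, and all sample values are hypothetical. It records where each dataset came from, flags parent datasets that have no record of their own, and measures how complete the required fields are.

```python
from dataclasses import dataclass, field


@dataclass
class DatasetRecord:
    """Hypothetical lineage entry for one dataset used in model development."""
    name: str
    source: str
    derived_from: list = field(default_factory=list)
    known_limitations: list = field(default_factory=list)


def lineage_gaps(records):
    """Return parent dataset names that are referenced but never documented."""
    known = {r.name for r in records}
    referenced = {parent for r in records for parent in r.derived_from}
    return sorted(referenced - known)


def completeness(rows, required_fields):
    """Share of rows in which each required field is present and non-empty."""
    if not rows:
        return {f: 0.0 for f in required_fields}
    return {
        f: sum(1 for r in rows if r.get(f) not in (None, "")) / len(rows)
        for f in required_fields
    }


# Made-up inventory: training_v1 cites a parent that has no record.
records = [
    DatasetRecord("bureau_raw", source="hypothetical vendor feed"),
    DatasetRecord(
        "training_v1",
        source="internal pipeline",
        derived_from=["bureau_raw", "income_survey"],
        known_limitations=["income is self-reported"],
    ),
]
gaps = lineage_gaps(records)

# Made-up rows with one missing income and one empty postal code.
rows = [
    {"income": 50000, "zip": "10001"},
    {"income": None, "zip": "94105"},
    {"income": 72000, "zip": ""},
    {"income": 61000, "zip": "60601"},
]
coverage = completeness(rows, ["income", "zip"])
print(gaps, coverage)
```

A check like this only surfaces undocumented inputs and missing values; it does not by itself detect data poisoning or unauthorized access, which need separate controls.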

Regulatory agencies have made clear that AI models used in financial decision-making must be tested for bias and potential discriminatory outcomes, regardless of the model’s overall accuracy or performance. The “black box” problem—where complex AI systems produce outputs that are difficult or impossible to explain—creates significant compliance risk, particularly in consumer-facing applications such as credit underwriting, pricing, and fraud detection. Financial institutions must implement processes to detect and mitigate bias throughout the model lifecycle, including bias testing during development, validation, and ongoing monitoring. Fair lending compliance requires that institutions be able to explain the specific reasons for adverse decisions (such as credit denials) and demonstrate that their AI models do not have a disparate impact on protected classes. Testing for “less discriminatory alternatives”—methods that achieve the same business objectives while reducing bias—is increasingly expected by regulators. Many institutions are adopting explainable AI (xAI) techniques, such as feature importance analysis and decision trees, to improve transparency and accountability in AI-driven decisions.
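
One simple way to screen for the disparate outcomes discussed above is to compare approval rates across groups. The sketch below is illustrative only: the group labels and decisions are made up, and the 0.8 review threshold is an assumed screening heuristic, not a figure taken from this article or a legal test. A ratio below the threshold would be a prompt for deeper analysis, such as a search for less discriminatory alternatives, rather than a compliance conclusion.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Return the approval rate per group from (group, approved) pairs."""
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        if approved:
            approvals[group] += 1
    return {g: approvals[g] / totals[g] for g in totals}


def adverse_impact_ratios(decisions, reference_group):
    """Ratio of each group's approval rate to the reference group's rate."""
    rates = approval_rates(decisions)
    ref = rates[reference_group]
    if ref == 0:
        raise ValueError("reference group has no approvals")
    return {g: r / ref for g, r in rates.items()}


# Hypothetical decisions: group A approved 80 of 100, group B 60 of 100.
sample = (
    [("A", True)] * 80 + [("A", False)] * 20
    + [("B", True)] * 60 + [("B", False)] * 40
)

REVIEW_THRESHOLD = 0.8  # assumed heuristic for triggering a closer review
ratios = adverse_impact_ratios(sample, reference_group="A")
flagged = [g for g, r in ratios.items() if r < REVIEW_THRESHOLD]
print(ratios, flagged)
```

In practice the same comparison would be repeated during development, validation, and ongoing monitoring, since a model that passes at launch can drift as the applicant population changes.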

Financial institutions using AI in consumer-facing applications must comply with existing consumer protection laws, including requirements for adverse action notices, data privacy disclosures, and fair treatment. When an AI system is used to make or significantly influence decisions about credit, insurance, or other financial products, consumers have a right to understand why they were denied or offered less favorable terms. The CFPB has emphasized that creditors subject to the Equal Credit Opportunity Act (ECOA) are not permitted to use “black box” models when they cannot provide specific and accurate reasons for adverse actions. Additionally, the Unfair or Deceptive Acts or Practices (UDAAP) standard applies to AI-driven systems that may harm consumers through opaque data collection, privacy violations, or steering toward unsuitable products. Financial institutions must ensure that AI systems used in customer service, product recommendations, and account management are transparent about their use of AI and provide clear disclosures about how consumer data is collected, used, and protected.
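
To make the idea of "specific and accurate reasons" concrete, here is a minimal sketch that derives reason statements from a model's inputs. It assumes a simple linear scorecard, where each feature's contribution is its weight times the applicant's distance from a baseline profile; the weights, baseline, applicant values, and reason wording are all hypothetical. More complex models would need a different attribution method, and this sketch does not claim to reflect any regulator's prescribed approach.

```python
def top_adverse_reasons(weights, applicant, baseline, reason_text, k=2):
    """Return reason statements for the k features that lowered the score most.

    Contribution of a feature = weight * (applicant value - baseline value).
    """
    contributions = {
        name: weights[name] * (applicant[name] - baseline[name])
        for name in weights
    }
    # Keep only features that pulled the score down, most negative first.
    adverse = sorted(
        (item for item in contributions.items() if item[1] < 0),
        key=lambda item: item[1],
    )
    return [reason_text[name] for name, _ in adverse[:k]]


# Hypothetical scorecard and applicant; every value here is made up.
weights = {"utilization": -2.0, "months_on_file": 0.05, "recent_inquiries": -0.3}
baseline = {"utilization": 0.3, "months_on_file": 60, "recent_inquiries": 1}
applicant = {"utilization": 0.9, "months_on_file": 12, "recent_inquiries": 3}
reason_text = {
    "utilization": "Proportion of balances to credit limits is too high",
    "months_on_file": "Length of credit history is too short",
    "recent_inquiries": "Too many recent credit inquiries",
}

reasons = top_adverse_reasons(weights, applicant, baseline, reason_text)
print(reasons)
```

The design choice worth noting is that reasons are tied to the features that actually moved this applicant's score, rather than drawn from a generic list, which is the property the adverse action requirement is concerned with.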

Most financial institutions rely on third-party vendors to develop, deploy, or maintain AI systems, creating significant third-party risk management (TPRM) obligations. The federal banking agencies’ Interagency Guidance on Third-Party Relationships emphasizes that financial institutions remain responsible for the performance and compliance of third-party AI systems, even when the institution did not develop the model itself. Effective TPRM for AI requires robust due diligence before engaging a vendor, including assessment of the vendor’s data governance practices, model validation processes, and ability to provide transparency into model operations. Financial institutions must also monitor for concentration risk—the potential systemic impact if a small number of AI providers experience disruptions or failures. Supply chain risks, including the reliability of data sources and the stability of third-party infrastructure, must be evaluated and documented. Contracts with AI vendors should include clear requirements for data security, model performance monitoring, incident reporting, and the ability to audit or test the AI system. Smaller financial institutions, in particular, may struggle with the technical expertise required to evaluate complex AI systems, creating a need for industry standards or certification programs to facilitate vendor assessment.
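
Concentration risk is described above at the systemic level, but an institution can apply the same idea to its own vendor dependence. The sketch below is one possible approach under stated assumptions: the system and vendor names are hypothetical, and the use of a Herfindahl-style index (the sum of squared shares, borrowed from market concentration analysis) is an illustrative choice, not a measure named in the interagency guidance.

```python
from collections import Counter


def vendor_shares(systems):
    """Share of AI systems supplied by each vendor, from (system, vendor) pairs."""
    counts = Counter(vendor for _, vendor in systems)
    total = sum(counts.values())
    return {vendor: count / total for vendor, count in counts.items()}


def herfindahl_index(shares):
    """Sum of squared shares: 1.0 means a single vendor supplies everything."""
    return sum(s * s for s in shares.values())


# Hypothetical inventory of AI systems and the vendor behind each one.
systems = [
    ("credit_underwriting", "VendorA"),
    ("fraud_scoring", "VendorA"),
    ("aml_monitoring", "VendorA"),
    ("chat_assistant", "VendorB"),
]

shares = vendor_shares(systems)
hhi = herfindahl_index(shares)
largest_vendor = max(shares, key=shares.get)
print(shares, hhi, largest_vendor)
```

Counting systems equally is a simplification; weighting each system by its criticality or transaction volume would give a more realistic picture of what a vendor outage would affect.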

Financial institutions operating across borders face an increasingly complex patchwork of AI regulations, with the European Union’s AI Act representing the most comprehensive regulatory framework to date. The EU AI Act classifies AI systems used to evaluate the creditworthiness of natural persons or to establish their credit score as “high-risk,” requiring extensive documentation, bias testing, human oversight, and conformity assessments; systems used to detect financial fraud are carved out of that credit-scoring category. The OECD and G7 have also issued principles and recommendations for responsible AI governance, emphasizing transparency, fairness, accountability, and human oversight. Some of these frameworks, notably the EU AI Act, have extraterritorial reach, meaning that financial institutions serving customers in the EU, UK, or other regulated jurisdictions may need to comply with those standards even if they are headquartered elsewhere. Regulatory harmonization efforts are ongoing, but significant differences remain between jurisdictions, creating compliance challenges for global financial institutions. Proactive alignment with the highest international standards, such as the EU AI Act and the OECD principles, can help institutions prepare for future regulatory developments and reduce the risk of enforcement actions across multiple jurisdictions.

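Financial institutions should implement comprehensive documentation and governance practices to demonstrate compliance with Financial AI Disclosure expectations. Key best practices, drawn from the areas covered above, include assigning AI oversight to the board or a designated board committee, documenting data sources and lineage, testing models for bias throughout their lifecycle, being able to give specific reasons for adverse decisions, and performing due diligence on AI vendors.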

By adopting these practices, financial institutions can reduce regulatory risk, build stakeholder trust, and position themselves as leaders in responsible AI deployment within the financial services industry.

Financial AI Disclosure refers to the regulatory requirements for financial institutions to transparently disclose their use of artificial intelligence in decision-making, risk management, and customer-facing applications. It encompasses SEC, CFPB, FINRA, and other regulatory expectations for documenting AI governance, model performance, and impacts on consumers and markets. The SEC's Investor Advisory Committee recommended in December 2024 that issuers define what they mean by "Artificial Intelligence," disclose board oversight mechanisms, and report on material AI deployments.

Financial institutions must disclose AI usage to protect investors, consumers, and market integrity. Regulators have found that only 40% of S&P 500 companies provide AI-related disclosures, creating inconsistency and information gaps for investors. Disclosure requirements ensure that stakeholders understand how AI impacts financial decisions, risk management, and consumer outcomes. Additionally, existing consumer protection laws—including fair lending and data privacy statutes—apply to AI systems, making disclosure essential for regulatory compliance.

The SEC's Investor Advisory Committee recommended three key pillars for AI disclosure: (1) requiring issuers to define what they mean by 'Artificial Intelligence,' (2) disclosing board oversight mechanisms for AI deployment, and (3) reporting on material AI deployments and their effects on internal operations and consumer-facing products. These recommendations are designed to provide investors with clear, comparable information about how AI impacts financial institutions' operations and strategic direction.

Financial AI Disclosure focuses specifically on regulatory requirements for transparent communication about AI usage to external stakeholders (investors, regulators, consumers), while general AI governance refers to internal processes and controls for managing AI systems. Disclosure is the external-facing component of AI governance, ensuring that stakeholders have access to material information about AI deployment, risks, and impacts. Both are essential for responsible AI deployment in financial services.

AI deployment disclosures should address both internal operations and consumer-facing impacts. For internal operations, institutions should disclose impacts on human capital, financial reporting, governance, and cybersecurity risks. For consumer-facing products, disclosures should cover investments in AI-driven platforms, integration of AI within products, regulatory impacts, and how AI influences pricing or business strategies. Disclosures should be integrated into existing regulatory disclosure items (such as SEC Regulation S-K) on a materiality-informed basis.

Fair lending laws, including the Equal Credit Opportunity Act (ECOA) and Fair Credit Reporting Act (FCRA), apply to AI systems used in credit decisions regardless of the technology used. Financial institutions must be able to explain the specific reasons for adverse decisions (such as credit denials) and demonstrate that their AI models do not have a disparate impact on protected classes. Regulators expect institutions to test AI models for bias, implement 'less discriminatory alternatives' where appropriate, and maintain documentation of fairness testing and validation.

Most financial institutions rely on third-party vendors for AI systems, creating significant third-party risk management (TPRM) obligations. Financial institutions remain responsible for the performance and compliance of third-party AI systems, even when they did not develop the model. Effective TPRM for AI requires robust due diligence, ongoing monitoring, assessment of vendor data governance practices, and documentation of model performance. Institutions must also monitor for concentration risk—the potential systemic impact if a small number of AI providers experience disruptions.

The European Union's AI Act represents the most comprehensive regulatory framework for AI, classifying certain financial AI systems, such as those used for credit scoring, as 'high-risk' and requiring extensive documentation, bias testing, and human oversight. The OECD and G7 have also issued principles for responsible AI governance. Some of these frameworks have extraterritorial reach, meaning financial institutions serving customers in regulated jurisdictions may need to comply with those standards even if they are headquartered elsewhere. Proactive alignment with international standards can help institutions prepare for future regulatory developments and reduce enforcement risk.
