
Indexability refers to whether a webpage can be successfully crawled, analyzed, and stored in a search engine’s index for potential inclusion in search results. It depends on technical factors like robots.txt directives, noindex tags, canonical URLs, and content quality signals that determine if a page is eligible for indexing.


Indexability is the ability of a webpage to be successfully crawled, analyzed, and stored in a search engine’s index for potential inclusion in search results. Unlike crawlability—which focuses on whether search engines can access a page—indexability determines whether that accessed page is deemed worthy of inclusion in the search engine’s database. A page can be perfectly crawlable but still not indexable if it contains a noindex directive, fails quality assessments, or violates other indexing rules. Indexability is the critical bridge between technical accessibility and actual search visibility, making it one of the most important concepts in search engine optimization and generative engine optimization (GEO). Without proper indexability, even the highest-quality content remains invisible to both traditional search engines and AI-powered discovery platforms.

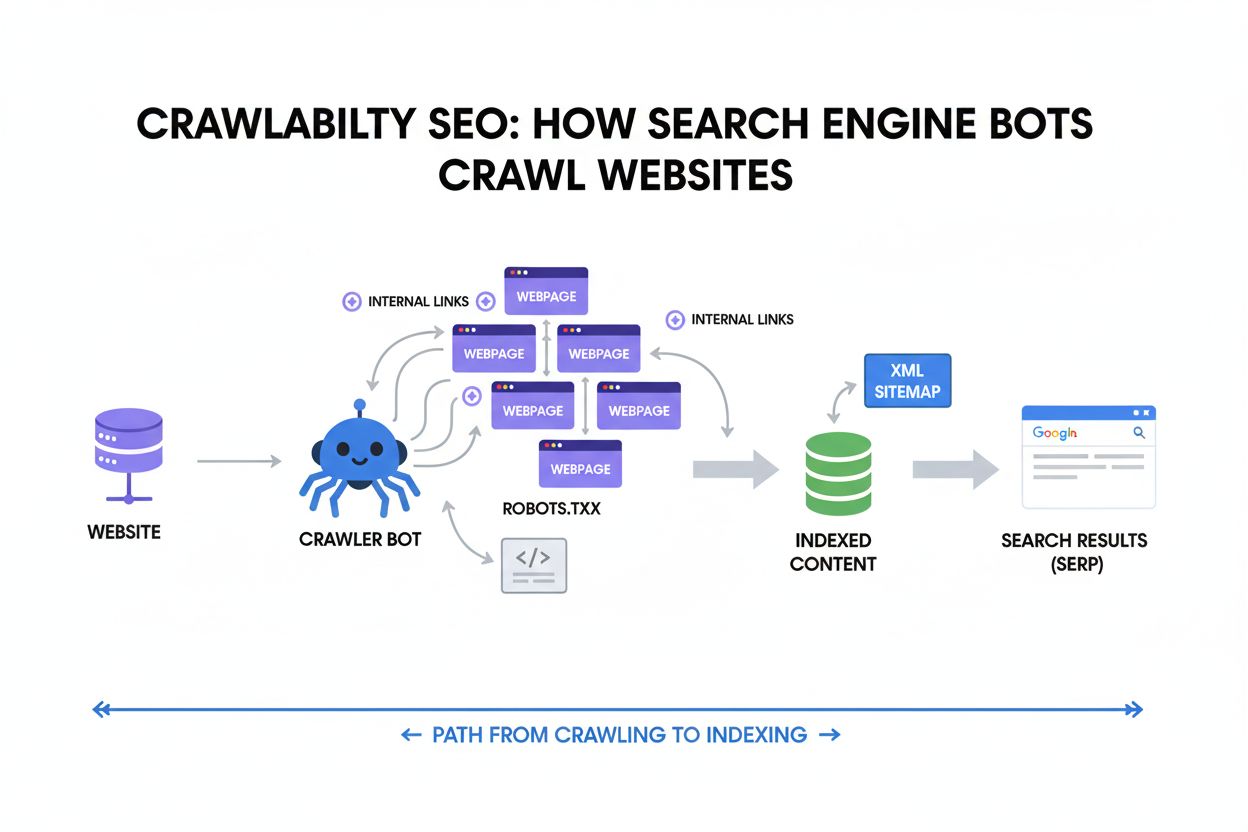

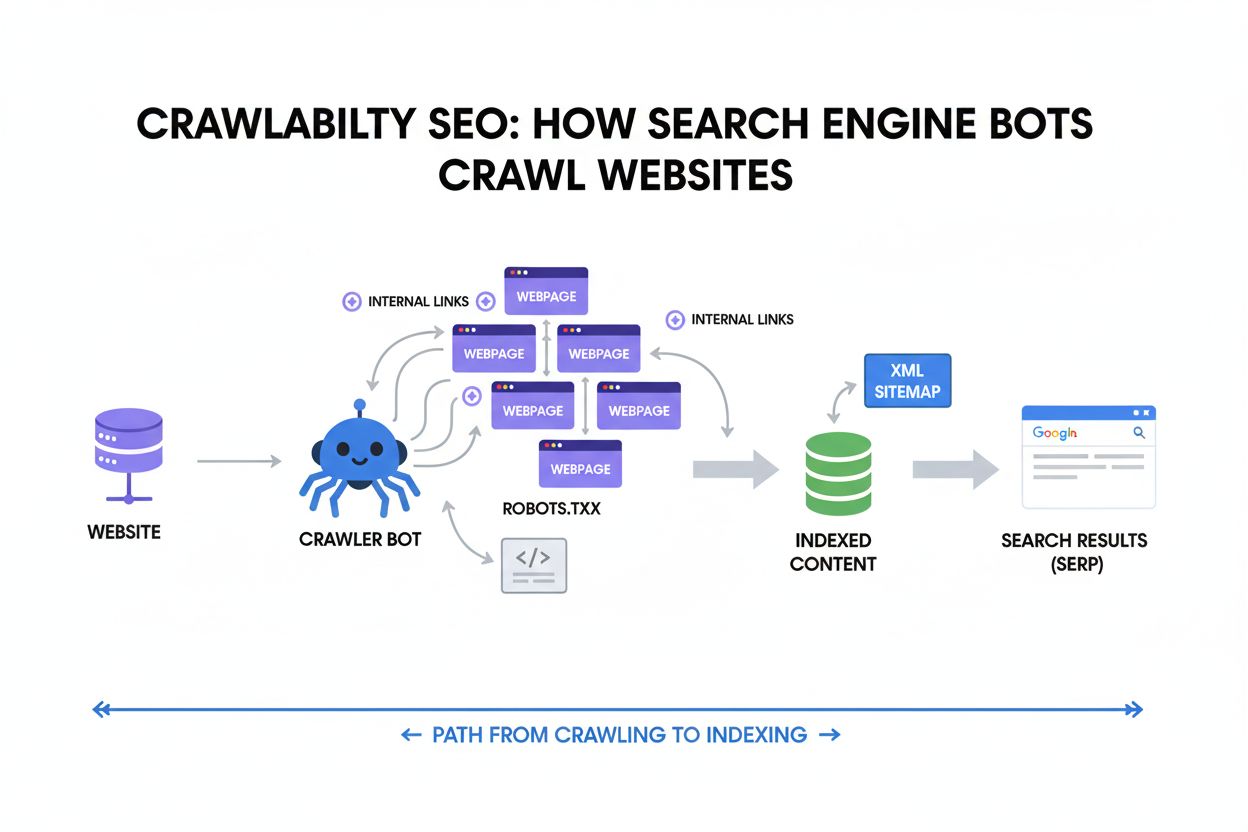

While often confused, crawlability and indexability serve different purposes in the search engine pipeline. Crawlability refers to whether Googlebot and other search engine crawlers can discover and access a webpage through links, sitemaps, or external references. It answers the question: “Can the search engine bot reach this page?” Indexability, by contrast, answers: “Should this page be stored in the search engine’s index?” A page can be highly crawlable—easily accessible to bots—yet still fail to be indexed if it contains a noindex meta tag, has duplicate content issues, or lacks sufficient quality signals. According to research by Botify, a 30-day study analyzing 413 million unique web pages found that while 51% of pages weren’t being crawled, 37% of crawled pages weren’t indexable due to quality or technical issues. This distinction is crucial because fixing crawlability issues alone won’t guarantee indexation; both technical factors and content quality must align for successful indexing.

Several technical mechanisms directly control whether a page can be indexed. The noindex meta tag is the most explicit control, implemented as `<meta name="robots" content="noindex">` in a page’s HTML head section or as an `X-Robots-Tag: noindex` HTTP header. When search engines encounter this directive, they will not index the page, regardless of its quality or external links pointing to it. The robots.txt file controls crawl access but doesn’t directly prevent indexing; if a page is blocked by robots.txt, crawlers cannot see its noindex tag, so the URL can still be indexed, or remain indexed, if it is discovered through external links. Canonical tags specify which version of a page should be indexed when duplicate or similar content exists across multiple URLs. Incorrect canonical implementation, such as pointing to the wrong URL or creating circular references, can prevent the intended page from being indexed. HTTP status codes also influence indexability: pages returning 200 OK are eligible for indexing, 301 redirects signal a permanent move, 302 redirects signal a temporary change, and 404 errors mark missing pages that cannot be indexed. Understanding and properly implementing these technical factors is essential for maintaining strong indexability across your website.
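As a rough illustration of the checks described above, the following sketch inspects a response for the three most explicit signals: the status code, an `X-Robots-Tag` header, and a robots meta tag. The function name `is_indexable` and the helper class are hypothetical, and the logic is deliberately simplified: it ignores crawler-specific directives (for example a header scoped to one bot), canonical tags, and every quality signal a real search engine would apply.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects the directives of every <meta name="robots"> tag."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr_map = {name.lower(): (value or "") for name, value in attrs}
        if attr_map.get("name", "").lower() == "robots":
            # The content attribute may hold several comma-separated directives.
            content = attr_map.get("content", "")
            self.directives += [d.strip().lower() for d in content.split(",")]


def is_indexable(status_code, headers, html):
    """Return (indexable, reason) for an already-fetched response.

    Assumption: only generic directives are checked, not bot-specific ones.
    """
    if status_code != 200:
        return False, f"status {status_code}"

    # HTTP header names are case-insensitive, so compare in lowercase.
    header_value = ""
    for name, value in headers.items():
        if name.lower() == "x-robots-tag":
            header_value = value.lower()
    if "noindex" in [d.strip() for d in header_value.split(",")]:
        return False, "X-Robots-Tag noindex"

    parser = RobotsMetaParser()
    parser.feed(html)
    if "noindex" in parser.directives:
        return False, "meta robots noindex"

    return True, "ok"
```

The function takes an already-fetched response rather than a URL, so it can be run against crawl exports or saved HTML without making network requests.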

| Concept | Definition | Primary Focus | Impact on Search Visibility | Control Method |
|---|---|---|---|---|
| Indexability | Whether a crawled page can be stored in search index | Inclusion in search database | Direct: indexed pages are eligible for ranking | noindex tags, quality signals, canonicals |
| Crawlability | Whether search bots can access and read a page | Bot access and discovery | Prerequisite for indexability | robots.txt, internal links, sitemaps |
| Renderability | Whether search engines can process JavaScript and dynamic content | Content visibility to crawlers | Affects what content is indexed | Server-side rendering, pre-rendering tools |
| Rankability | Whether an indexed page can rank for specific keywords | Position in search results | Determines visibility for queries | Content quality, authority, relevance signals |
| Discoverability | Whether users can find a page through search or links | User access to content | Depends on indexing and ranking | SEO optimization, link building, promotion |

The indexability decision involves multiple evaluation stages that occur after a page is crawled. First, search engines perform rendering, where they execute JavaScript and process dynamic content to understand the complete page structure and content. During this stage, Google assesses whether critical elements like headings, meta tags, and structured data are properly implemented. Second, engines evaluate content quality by analyzing whether the page offers original, helpful, and relevant information. Pages with thin content—minimal text, low word count, or generic information—often fail this quality threshold. Third, search engines check for duplicate content issues; if multiple URLs contain identical or near-identical content, the engine selects one canonical version to index while potentially excluding others. Fourth, semantic relevance is assessed through natural language processing to determine whether the content truly addresses user intent and search queries. Finally, trust and authority signals are evaluated, including factors like page structure, internal linking patterns, external citations, and overall domain authority. According to data from HTTP Archive’s 2024 Web Almanac, 53.4% of desktop pages and 53.9% of mobile pages include index directives in their robots meta tags, indicating widespread awareness of indexability controls. However, many sites still struggle with indexability due to improper implementation of these technical factors.

Content quality has become increasingly important in indexability decisions, particularly following Google’s emphasis on E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness) signals. Search engines now evaluate whether content demonstrates genuine expertise, provides original insights, and serves a clear purpose for users. Pages that appear to be automatically generated, scraped from other sources, or created primarily for search engine manipulation are frequently not indexed, even if they’re technically sound. High-quality indexability requires content that is well-structured with clear headings, logical flow, and comprehensive coverage of the topic. Pages should include supporting evidence such as statistics, case studies, expert quotes, or original research. The use of schema markup and structured data helps search engines understand content context and increases the likelihood of indexation. Additionally, content freshness matters; regularly updated pages signal to search engines that the information is current and relevant. Pages that haven’t been updated in years may be deprioritized for indexing, particularly in fast-moving industries. The relationship between content quality and indexability means that SEO professionals must focus not only on technical implementation but also on creating genuinely valuable content that serves user needs.

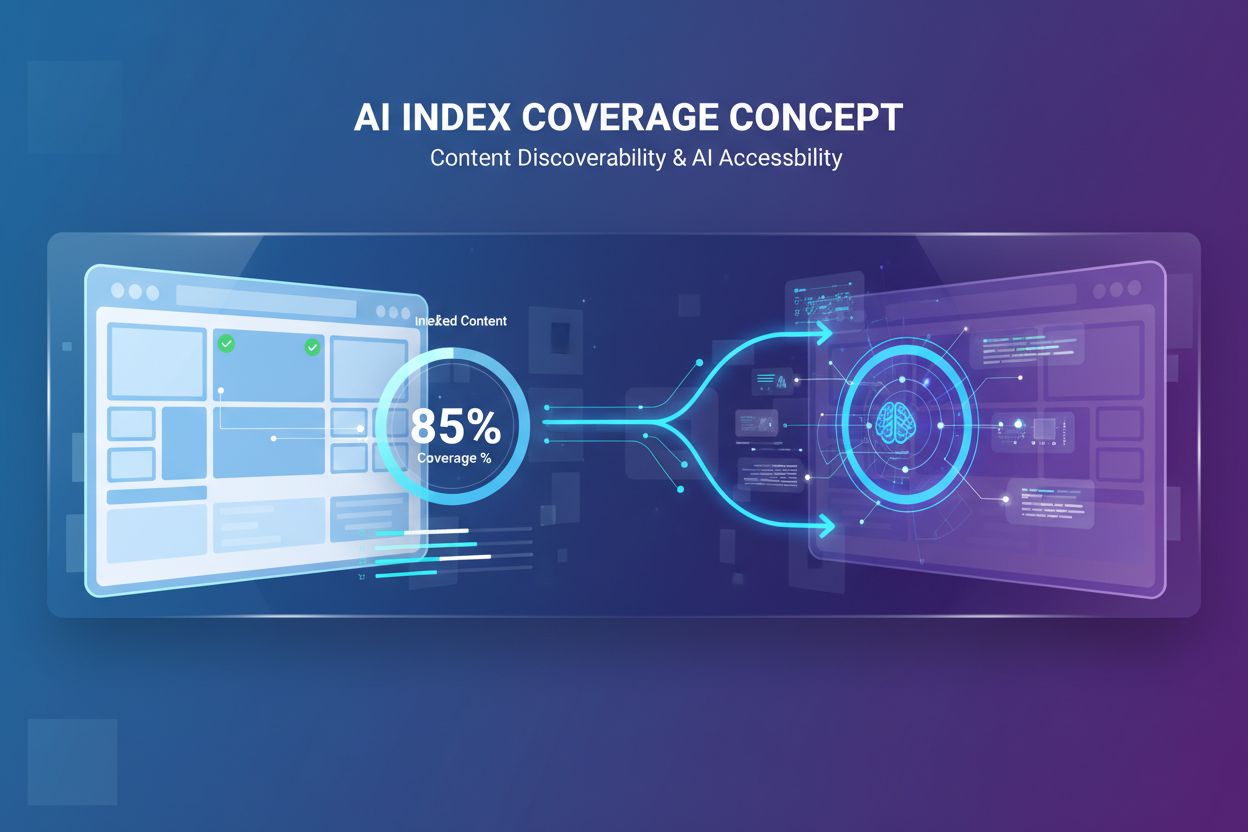

The rise of AI Overviews, ChatGPT, Perplexity, and other large language models (LLMs) has expanded the importance of indexability beyond traditional search results. These AI systems rely on indexed content from search engines as training data and source material for generating responses. When a page is indexed by Google, it becomes eligible for discovery by AI crawlers like OAI-SearchBot (ChatGPT’s crawler) and other AI platforms. However, indexability for AI search involves additional considerations beyond traditional SEO. AI systems evaluate content for semantic clarity, factual accuracy, and citation-worthiness. Pages that are indexed but lack clear structure, proper citations, or authoritative signals may not be selected for inclusion in AI-generated responses. According to research from Prerender.io, one customer saw an 800% increase in referral traffic from ChatGPT after optimizing their site’s indexability and ensuring proper rendering for AI crawlers. This demonstrates that strong indexability practices directly impact visibility across multiple discovery surfaces. Organizations must now consider indexability not just for Google Search, but for the entire ecosystem of AI-powered search and discovery platforms that rely on indexed content.

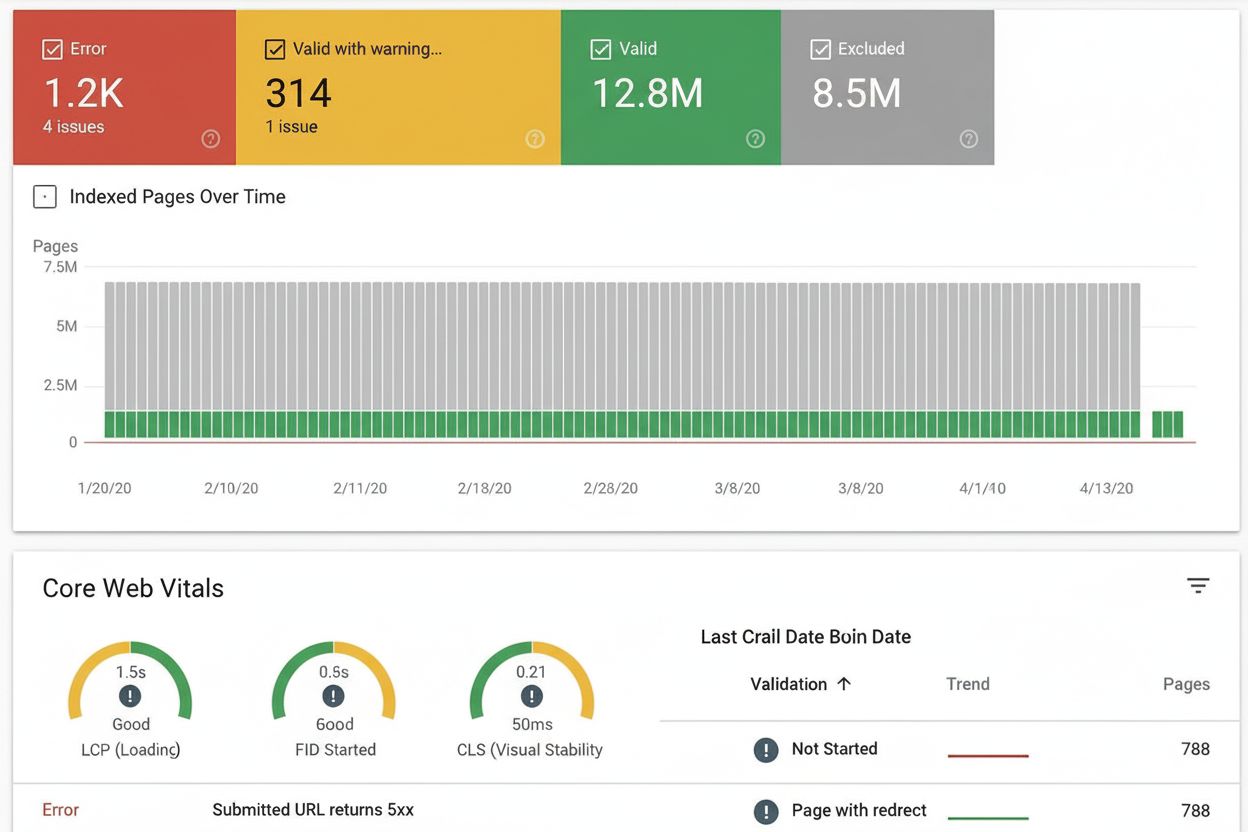

Several common issues prevent pages from being indexed despite being crawlable. Unintentional noindex tags are a frequent culprit, often inherited from CMS templates or accidentally applied during site redesigns. Audit your site using Google Search Console to identify pages marked with noindex, then verify whether this was intentional. Thin or duplicate content is another major blocker; pages with minimal original information or content that closely mirrors other pages on your site may not be indexed. Consolidate duplicate pages through 301 redirects or use canonical tags to specify the preferred version. Broken internal linking and orphaned pages—those with no internal links pointing to them—make it difficult for crawlers to discover and prioritize pages for indexing. Strengthen your internal linking structure by ensuring key pages are linked from your navigation menu and high-authority pages. Redirect chains and redirect loops waste crawl budget and confuse search engines about which page should be indexed. Audit your redirects and ensure they point directly to the final destination. JavaScript rendering issues prevent search engines from seeing critical content if it’s only loaded client-side. Use server-side rendering (SSR) or pre-rendering tools like Prerender.io to ensure all content is visible in the initial HTML. Slow page load times and server errors (5xx status codes) can prevent indexation; monitor your site’s performance and fix technical issues promptly.
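The redirect audit mentioned above can be sketched as a small offline check. This example assumes you have already exported your redirects as a source-to-target mapping; the function names and the `max_hops` limit are illustrative choices, not part of any particular tool.

```python
def resolve_redirect(url, redirects, max_hops=10):
    """Follow url through a {source: target} map.

    Returns (final_url, hops). Raises ValueError on a loop or when the
    chain is longer than max_hops (a hypothetical safety limit).
    """
    seen = [url]
    while url in redirects:
        url = redirects[url]
        if url in seen:
            raise ValueError(f"redirect loop: {' -> '.join(seen + [url])}")
        seen.append(url)
        if len(seen) - 1 > max_hops:
            raise ValueError("too many redirects")
    return url, len(seen) - 1


def flatten_redirects(redirects):
    """Return a new map where every source points straight at its final destination."""
    return {source: resolve_redirect(source, redirects)[0] for source in redirects}


# Example: /old redirects to /interim, which redirects to /new.
chain = {"/old": "/interim", "/interim": "/new"}
print(resolve_redirect("/old", chain))  # ('/new', 2)
print(flatten_redirects(chain))         # {'/old': '/new', '/interim': '/new'}
```

Any source that takes more than one hop is a chain worth collapsing, and a `ValueError` points at a loop that needs to be broken by hand.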

Effective indexability management requires ongoing monitoring and measurement. Google Search Console provides the primary tool for tracking indexability, with the “Page Indexing” report (formerly “Index Coverage”) showing exactly how many pages are indexed versus excluded and why. This report categorizes pages as “Indexed,” “Crawled – currently not indexed,” “Discovered – currently not indexed,” or “Excluded by noindex tag.” Tracking these metrics over time reveals trends and helps identify systemic issues. The Index Efficiency Ratio (IER) is a valuable metric calculated as indexed pages divided by intended indexable pages. If you have 10,000 pages that should be indexable but only 6,000 are indexed, your IER is 0.6, indicating 40% of your intended content isn’t visible. Monitoring IER over time helps measure the impact of indexability improvements. Server log analysis provides another critical perspective, showing which pages Googlebot actually requests and how frequently. Tools like Semrush’s Log File Analyzer reveal whether your most important pages are being crawled regularly or if crawl budget is being wasted on low-priority content. Site crawlers like Screaming Frog and Sitebulb help identify technical indexability issues such as broken links, redirect chains, and improper canonical tags. Regular audits—monthly for smaller sites, quarterly for larger ones—help catch indexability problems before they impact search visibility.
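The IER arithmetic above is simple enough to script for regular reporting. A minimal sketch, assuming you already have the two page counts (for example from a Page Indexing export and your own list of pages that should be indexable); the function name is hypothetical.

```python
def index_efficiency_ratio(indexed_pages, intended_indexable_pages):
    """IER = indexed pages / intended indexable pages."""
    if intended_indexable_pages <= 0:
        raise ValueError("intended_indexable_pages must be positive")
    return indexed_pages / intended_indexable_pages


ier = index_efficiency_ratio(6_000, 10_000)
print(f"IER = {ier:.2f} ({1 - ier:.0%} of intended pages not indexed)")
# IER = 0.60 (40% of intended pages not indexed)
```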

Achieving strong indexability requires a systematic approach combining technical implementation and content strategy. First, prioritize pages that matter: focus indexability efforts on pages that drive business value, such as product pages, service pages, and cornerstone content. Not every page needs to be indexed; strategic exclusion of low-value pages through noindex tags actually improves crawl efficiency. Second, ensure proper canonicalization: use self-referencing canonical tags on most pages, and only use cross-domain canonicals when intentionally consolidating content. Third, implement robots.txt correctly: use it to block technical folders and low-priority resources, but never block pages you want indexed. Fourth, create high-quality, original content: focus on depth, clarity, and usefulness rather than keyword density. Fifth, optimize site structure: maintain a logical hierarchy with key pages accessible within three clicks from the homepage, and use clear internal linking to guide both users and crawlers. Sixth, add structured data: implement schema markup for content types like articles, FAQs, products, and organizations to help search engines understand your content. Seventh, ensure technical soundness: fix broken links, eliminate redirect chains, optimize page load speed, and monitor for server errors. Finally, keep content fresh: regularly update important pages to signal that information is current and relevant. These practices work together to create an environment where search engines can confidently index your most valuable content.
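The three-click guideline above can be checked with a breadth-first search over your internal link graph. The sketch below assumes the graph is available as a mapping of each page to the pages it links to (for example from a site crawler export); the function names and sample URLs are illustrative only.

```python
from collections import deque


def click_depths(links, homepage):
    """Breadth-first search over {page: [linked pages]}.

    Returns {page: minimum number of clicks from the homepage}.
    """
    depths = {homepage: 0}
    queue = deque([homepage])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def audit_structure(links, homepage, max_clicks=3):
    """Return (pages deeper than max_clicks, pages unreachable from the homepage)."""
    depths = click_depths(links, homepage)
    all_pages = set(links) | {t for targets in links.values() for t in targets}
    too_deep = sorted(p for p, d in depths.items() if d > max_clicks)
    unreachable = sorted(all_pages - set(depths))
    return too_deep, unreachable


site = {
    "/": ["/products"],
    "/products": ["/products/shoes"],
    "/products/shoes": ["/products/shoes/red"],
    "/products/shoes/red": ["/products/shoes/red/size-9"],
    "/landing-2019": ["/"],  # links out, but nothing links to it
}
print(audit_structure(site, "/"))
# (['/products/shoes/red/size-9'], ['/landing-2019'])
```

Pages in the second list are orphaned relative to the homepage: they exist in the crawl data, but no internal link path leads to them.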

Indexability is evolving as search technology advances. The rise of mobile-first indexing means Google primarily crawls and indexes the mobile version of pages, making mobile optimization essential for indexability. The increasing importance of Core Web Vitals and page experience signals suggests that technical performance will play a larger role in indexability decisions. As AI search becomes more prevalent, indexability requirements may shift to emphasize semantic clarity, factual accuracy, and citation-worthiness over traditional ranking factors. The emergence of zero-click searches and featured snippets means that even indexed pages must be optimized for extraction and summarization by search engines and AI systems. Organizations should expect that indexability standards will continue to become more selective, with search engines indexing fewer but higher-quality pages. This trend makes it increasingly important to focus on creating genuinely valuable content and ensuring proper technical implementation rather than attempting to index every possible page variation. The future of indexability lies in quality over quantity, with search engines and AI systems becoming more sophisticated at identifying and prioritizing content that truly serves user needs.

For organizations using AI monitoring platforms like AmICited, understanding indexability is crucial for tracking brand visibility across multiple discovery surfaces. When your pages are properly indexed by Google, they become eligible for citation in AI-generated responses on platforms like ChatGPT, Perplexity, Google AI Overviews, and Claude. However, indexability alone doesn’t guarantee AI visibility; your content must also be semantically relevant, authoritative, and properly structured for AI systems to select it as a source. AmICited helps organizations monitor whether their indexed content is actually being cited and referenced in AI responses, providing insights into how indexability translates to real visibility across the AI search ecosystem. By combining traditional indexability monitoring with AI citation tracking, organizations can understand the full picture of their search visibility and make data-driven decisions about content optimization and technical SEO improvements.

Crawlability refers to whether search engine bots can access and read a webpage, while indexability determines whether that crawled page can be stored in the search engine's index. A page can be crawlable but not indexable if it contains a noindex tag or fails quality assessments. Both are essential for search visibility, but crawlability is the prerequisite for indexability.

The noindex meta tag or HTTP header explicitly tells search engines not to include a page in their index, even if the page is crawlable. When Googlebot encounters a noindex directive, it will drop the page from search results entirely. This is useful for pages like thank-you pages or duplicate content that serve a purpose but shouldn't appear in search results.

The robots.txt file controls which pages search engines can crawl, but it doesn't directly prevent indexing. If a page is blocked by robots.txt, crawlers cannot see the noindex tag, so the page might still appear in results if other sites link to it. For effective indexability control, use noindex tags rather than robots.txt blocking for pages you want to exclude from search results.
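To spot pages where a robots.txt block would hide a noindex tag from crawlers, you can test URLs against your rules offline. This sketch uses Python's standard `urllib.robotparser`; the helper name, sample rules, and URLs are made up for illustration. Note that the standard-library parser applies the first matching rule, which may differ from how a given search engine resolves conflicting Allow and Disallow lines, so treat the result as an approximation.

```python
from urllib.robotparser import RobotFileParser


def blocked_by_robots(robots_txt, urls, user_agent="Googlebot"):
    """Return the subset of urls that the given robots.txt text disallows."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


rules = """\
User-agent: *
Disallow: /private/
"""

# Hypothetical pages that carry a noindex tag and should drop out of the index.
noindex_pages = [
    "https://example.com/private/thank-you.html",
    "https://example.com/blog/old-duplicate",
]

# Any URL returned here cannot be crawled, so its noindex tag will never be seen.
print(blocked_by_robots(rules, noindex_pages))
# ['https://example.com/private/thank-you.html']
```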

Search engines evaluate content quality as part of the indexability decision. Pages with thin content, duplicate information, or low value may be crawled but not indexed. Google's indexing algorithms assess whether content is original, helpful, and relevant to user intent. High-quality, unique content with clear structure and proper formatting is more likely to be indexed.

The index efficiency ratio (IER) is calculated as indexed pages divided by intended indexable pages. For example, if 10,000 pages should be indexable but only 6,000 are indexed, your IER is 0.6. This metric helps measure how effectively your site's content is being included in search indexes and identifies gaps between potential and actual visibility.

Canonical tags tell search engines which version of a page to treat as the authoritative source when duplicate or similar content exists. Incorrect canonical implementation can prevent the right page from being indexed or cause Google to index an unintended version. Self-referencing canonicals (where the canonical matches the page's own URL) are best practice for most pages.

Yes, a page can be indexed without ranking for any keywords. Indexing means the page is stored in the search engine's database and eligible to appear in results. Ranking is a separate process where search engines determine which indexed pages to show for specific queries. Many indexed pages never rank because they don't match user search intent or lack sufficient authority signals.

AI search engines like ChatGPT, Perplexity, and Claude use indexed content from traditional search engines as training data and source material. If your pages aren't indexed by Google, they're less likely to be discovered and cited by AI systems. Ensuring strong indexability in traditional search engines is foundational for visibility across AI-powered search platforms.

