Creating LLM Meta Answers: Standalone Insights AI Can Quote

Learn how to create LLM meta answers that AI systems cite. Discover structural techniques, answer density strategies, and citation-ready content formats that in...

LLM meta answers: content that directly addresses how language models are likely to interpret and respond to related queries, written to improve visibility in AI-generated answers on platforms such as ChatGPT, Google AI Overviews, and Perplexity. The term also describes the responses themselves: synthesized, conversational answers that combine information from multiple sources to address user intent.


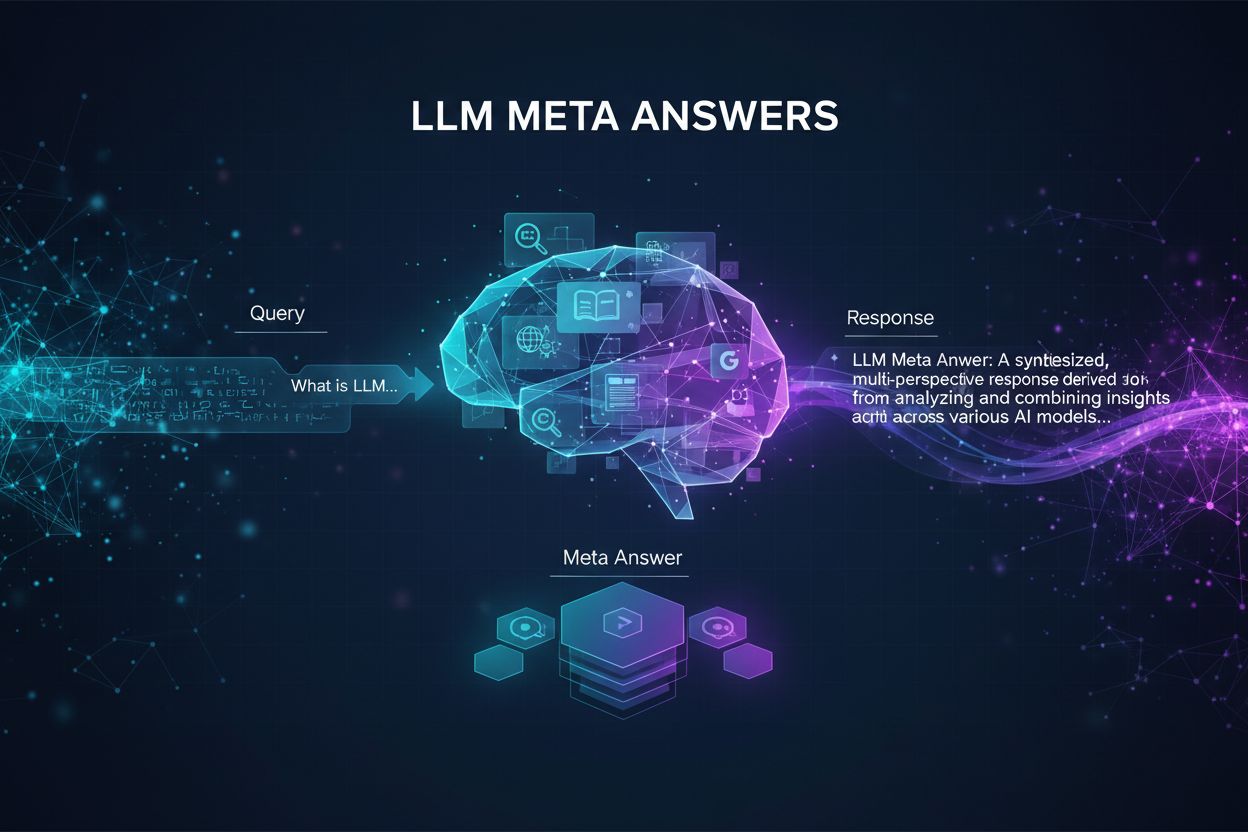

LLM meta answers are the synthesized, AI-generated responses that large language models produce when users query them through platforms like ChatGPT, Claude, or Google’s AI Overviews. They differ fundamentally from traditional search results. Instead of presenting a list of links, the system combines information from multiple sources into one conversational response that directly addresses the user’s intent. The model generates new text that draws on facts and perspectives from its training data and, on platforms that use retrieval-augmented generation (RAG), from documents retrieved at query time.

Understanding how these meta answers are constructed is essential for content creators who want their work cited in AI-generated responses. As more users get answers directly from AI platforms, visibility in those responses is becoming comparable in importance to ranking in traditional search results, which makes LLM optimization (LLMO) a critical component of modern content strategy.

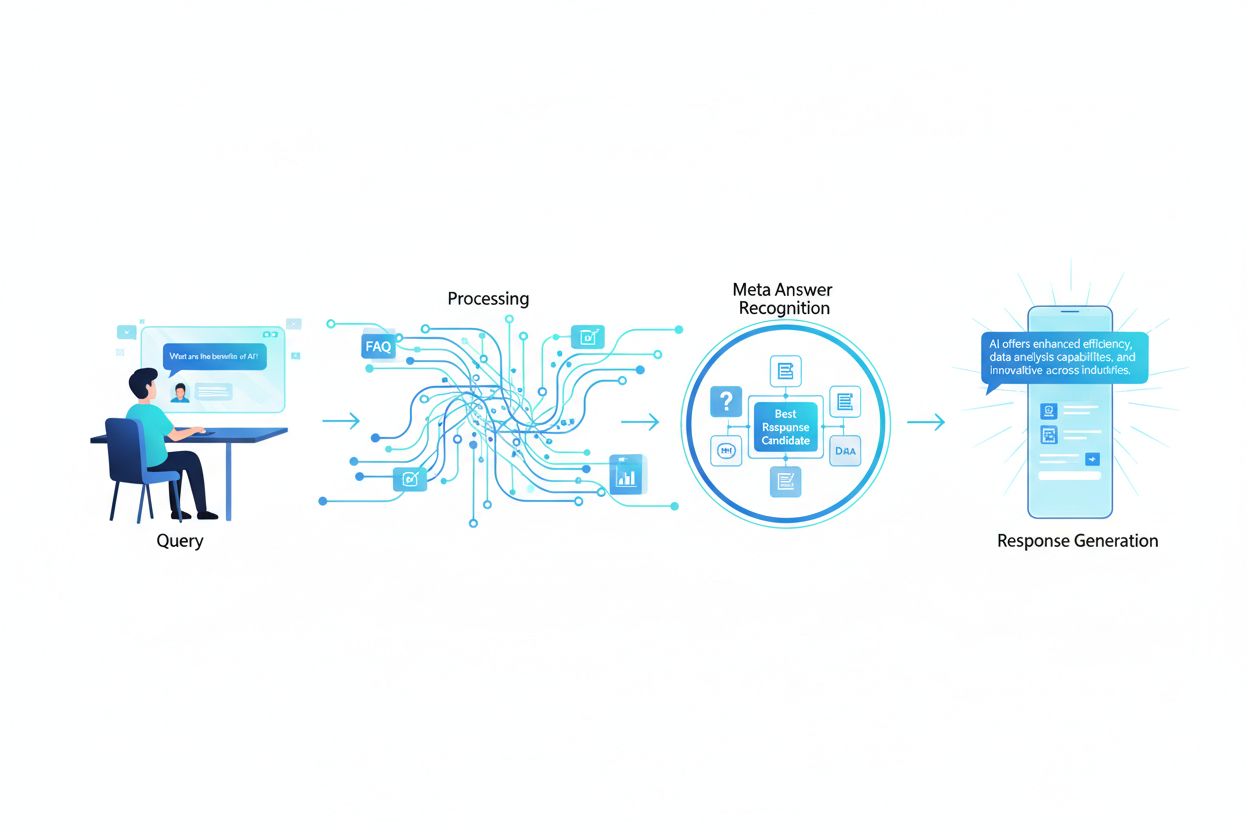

When a user submits a query to an LLM, the system doesn’t simply match keywords the way traditional search engines do. The model interprets the underlying intent, context, and nuance of the question: which concepts and entities it involves, and what kind of answer would help most, whether that’s a definition, a comparison, a step-by-step guide, or an analytical perspective. On platforms that use RAG, a retrieval step first finds candidate passages, often by comparing vector embeddings of the query and of indexed content, and then prioritizes sources by relevance, authority, and comprehensiveness. Because retrieval relies on semantic similarity and topical relationships rather than exact keyword matches, a passage can be selected even when it shares few words with the query. Your content therefore needs to be discoverable not just for exact keywords, but for the semantic concepts and related topics users are actually asking about.
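To make the difference between keyword matching and semantic similarity concrete, here is a minimal Python sketch of a ranking step, under loudly stated assumptions: the three-number vectors are hand-picked stand-ins for the embeddings a real retrieval system would compute with a trained model, and the passage titles are hypothetical. Cosine similarity is a common way to compare embeddings, but this does not reproduce any specific platform’s retrieval pipeline.

```python
import math

# Hypothetical passages with hand-picked 3-dimensional "embeddings".
# A real RAG system would compute much larger vectors with an embedding model.
PASSAGES = {
    "How to budget for a family vacation": [0.9, 0.1, 0.2],
    "Cheap flights: tips for saving on airfare": [0.8, 0.3, 0.1],
    "History of the airline industry": [0.2, 0.9, 0.1],
}
QUERY_TEXT = "affordable holiday planning"
QUERY_VEC = [0.85, 0.2, 0.15]  # assumed embedding of QUERY_TEXT


def keyword_overlap(query, title):
    """Count words shared by query and title: a crude stand-in for keyword matching."""
    strip = str.maketrans("", "", ":,.")
    return len(set(query.lower().split()) & set(title.lower().translate(strip).split()))


def cosine(a, b):
    """Cosine similarity: dot product divided by the product of the vector norms."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def rank_by_similarity(query_vec, passages, k=2):
    """Return the titles of the k passages whose vectors are closest to the query."""
    ranked = sorted(passages, key=lambda t: cosine(query_vec, passages[t]), reverse=True)
    return ranked[:k]


if __name__ == "__main__":
    for title in PASSAGES:
        print(f"{keyword_overlap(QUERY_TEXT, title)} shared words | "
              f"cosine {cosine(QUERY_VEC, PASSAGES[title]):.3f} | {title}")
    print(rank_by_similarity(QUERY_VEC, PASSAGES))
```

None of the passage titles shares a word with the query, so the keyword score is zero for all three, yet the vector comparison still ranks the two budget-travel passages first.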

| Query Interpretation Factor | Traditional Search | LLM Meta Answers |
|---|---|---|
| Matching Method | Keyword matching | Semantic understanding |
| Result Format | List of links | Synthesized narrative |
| Source Selection | Relevance ranking | Relevance + comprehensiveness + authority |
| Context Consideration | Limited | Extensive semantic context |
| Answer Synthesis | User must read multiple sources | AI combines multiple sources |
| Source Attribution | Implicit (each result is a link) | Often included, varies by platform |

For your content to be selected and cited in LLM meta answers, it needs characteristics that align with how these systems evaluate and synthesize information.

Key characteristics that improve LLM citation:

- Expertise and authority: clear, demonstrated expertise on the topic, with strong E-E-A-T signals (Experience, Expertise, Authoritativeness, Trustworthiness) that help LLMs identify reliable sources.
- Information gain: unique insights, data, or perspectives that add value beyond what is commonly available.
- Parseable structure: clear hierarchies and logical organization that make relevant information easy to extract.
- Semantic richness: thorough coverage of related concepts and varied terminology that builds topical authority, rather than a narrow focus on single keywords.
- Freshness: for current topics, recent and up-to-date information, which retrieval systems tend to favor when synthesizing answers.
- Corroborating evidence: citations, data, and external references that build trust.

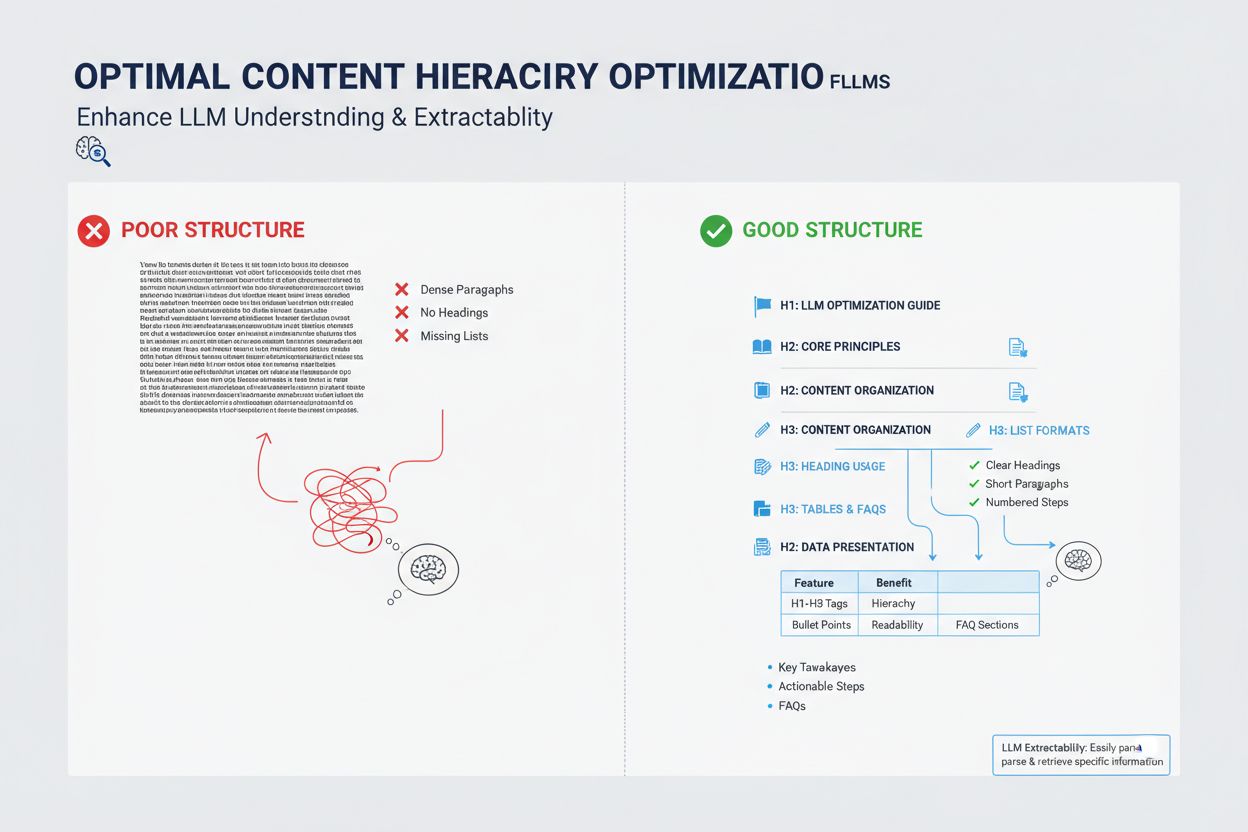

The way you structure your content strongly affects whether LLMs select it for meta answers, because these systems extract and synthesize well-organized information more reliably. A clear heading hierarchy (H1, H2, H3) creates a logical information architecture that helps a model see how concepts relate and pull out the relevant section efficiently. Bullet points and numbered lists present information in scannable, discrete units that are easy to incorporate into a synthesized response, and tables present structured data in a form that is especially easy for AI systems to parse and reference. Short paragraphs (3-5 sentences) tend to perform better than dense blocks of text, because a model can isolate a specific fact without wading through unrelated content. Schema markup (FAQ, Article, HowTo) adds explicit, machine-readable signals about your content’s structure and purpose, which may further improve the likelihood of citation.
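These structural guidelines can be turned into a quick self-check on drafts. The Python sketch below is a hypothetical helper, not a published tool. It assumes Markdown drafts with ATX headings (`#`, `##`, ...) and blank lines between paragraphs, flags headings that skip a level (for example H1 straight to H3), and flags prose paragraphs longer than five sentences using a naive punctuation-based split that will miscount abbreviations and ignores fenced code blocks.

```python
import re

HEADING = re.compile(r"^(#{1,6})\s+(.*)")


def audit_structure(markdown_text, max_sentences=5):
    """Return warnings for skipped heading levels and overly long paragraphs.

    Assumes ATX-style Markdown headings and blank lines between blocks;
    lines inside fenced code blocks are not treated specially.
    """
    warnings = []

    # Pass 1: heading levels should deepen one step at a time (H1 -> H2 -> H3).
    previous_level = 0
    for line in markdown_text.splitlines():
        match = HEADING.match(line)
        if match:
            level = len(match.group(1))
            if previous_level and level > previous_level + 1:
                warnings.append(
                    f"Heading '{match.group(2)}' skips from H{previous_level} to H{level}"
                )
            previous_level = level

    # Pass 2: count sentences in each prose paragraph.
    for block in re.split(r"\n\s*\n", markdown_text.strip()):
        lines = [ln.strip() for ln in block.splitlines() if not HEADING.match(ln)]
        text = " ".join(lines).strip()
        # Skip empty blocks, bullet items, table rows, and numbered items.
        if not text or text.startswith(("-", "*", "|")) or re.match(r"\d+\.", text):
            continue
        # Naive split after ., ! or ? followed by whitespace.
        sentences = [s for s in re.split(r"(?<=[.!?])\s+", text) if s]
        if len(sentences) > max_sentences:
            warnings.append(
                f"Paragraph starting '{text[:30]}' has {len(sentences)} sentences"
            )
    return warnings


if __name__ == "__main__":
    draft = "# Guide\n\n### Details\n\nOne. Two. Three. Four. Five. Six."
    for warning in audit_structure(draft):
        print(warning)
```

The sample draft triggers both checks: the jump from H1 to H3 and the six-sentence paragraph.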

While LLM meta answers and featured snippets look similar on the surface, they are different mechanisms for content visibility and call for distinct optimization strategies. Featured snippets are selected by traditional search algorithms from existing web content and displayed in a dedicated position on the search results page, typically showing 40-60 words of extracted text. LLM meta answers, by contrast, are AI-generated responses that synthesize information from multiple sources into a new narrative that may be longer and more comprehensive than any single source. Featured snippets reward content that answers a specific question concisely, while LLM meta answers reward comprehensive, authoritative content with deep topical coverage. Attribution also differs: a featured snippet quotes your text verbatim next to a source link, whereas an LLM meta answer may paraphrase or blend your content and, depending on the platform, credit it with a citation. Finally, featured snippets live within traditional search results, while LLM meta answers can appear outside search entirely (in ChatGPT or Claude, for example), although Google AI Overviews are shown on the search results page itself.

| Aspect | Featured Snippets | LLM Meta Answers |
|---|---|---|
| Generation Method | Algorithm extracts existing text | AI synthesizes from multiple sources |
| Display Format | Search results page position | AI platform response |
| Content Length | 40-60 words typically | 200-500+ words |
| Citation Style | Source link with extracted text | Attribution with paraphrased content |
| Optimization Focus | Concise direct answers | Comprehensive authority |
| Platform | Google Search | ChatGPT, Claude, Google AI Overviews |
| Visibility Metric | Search impressions | AI response citations |

Semantic richness, the depth and breadth of conceptual coverage in your content, is one of the most important but often overlooked factors in LLM optimization. LLMs don’t just look for your target keyword; they respond to the semantic relationships between concepts, the context surrounding your main topic, and how thoroughly you explore related ideas. Content with high semantic richness uses varied terminology, explores multiple angles of a topic, and connects related concepts, which helps LLMs distinguish genuinely authoritative content from superficially keyword-optimized pages. When you write about a topic, include related terms, synonyms, and conceptually adjacent ideas that place your content within the broader subject area. For example, an article about “content marketing” should naturally cover audience segmentation, buyer personas, content distribution, analytics, and ROI. The point is not keyword stuffing: these concepts are semantically related and essential to understanding the topic comprehensively. This semantic depth signals genuine expertise and the ability to provide the nuanced, multi-faceted answers users are seeking.
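One rough way to check a draft against this kind of conceptual coverage is to list the adjacent concepts you intend to cover and see which ones the text never mentions. The Python sketch below does that with simple word-boundary matching. The concept list is copied from the “content marketing” example, the function name is hypothetical, and this is an editorial aid, not a model of how LLMs actually measure semantic richness.

```python
import re

# Adjacent concepts from the "content marketing" example; choosing them is an
# editorial judgment, not a list published by any LLM provider.
RELATED_CONCEPTS = [
    "audience segmentation",
    "buyer persona",
    "content distribution",
    "analytics",
    "ROI",
]


def concept_coverage(text, concepts=RELATED_CONCEPTS):
    """Split concepts into those the text mentions and those it never mentions.

    Matching is case-insensitive and anchored at a word start, so "buyer persona"
    also matches "buyer personas". Synonyms are not detected.
    """
    lowered = text.lower()
    covered, missing = [], []
    for concept in concepts:
        pattern = r"\b" + re.escape(concept.lower())
        if re.search(pattern, lowered):
            covered.append(concept)
        else:
            missing.append(concept)
    return covered, missing


if __name__ == "__main__":
    draft = ("We build buyer personas, plan content distribution across channels, "
             "and measure ROI for every campaign.")
    covered, missing = concept_coverage(draft)
    print("Covered:", covered)
    print("Missing:", missing)
```

A missing concept is a prompt to ask whether the topic genuinely needs it, not an instruction to insert the phrase.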

LLMs evaluate authority differently from traditional search engines, placing more weight on signals of genuine expertise and trustworthiness than on link popularity alone.

Authority signals that influence LLM selection:

- Author credentials and demonstrated experience: content from recognized experts, practitioners, or organizations with established authority in their field tends to be favored.
- External citations: references to other authoritative sources show that your content is grounded in broader knowledge and corroborated by other experts.
- Topical consistency: publishing multiple comprehensive pieces on related subjects helps an author or organization establish topical authority.
- Third-party validation: mentions, quotes, or references from other authoritative sources can significantly boost your visibility in LLM meta answers.
- Original research: proprietary data or unique methodologies are strong authority signals because the information can’t be found elsewhere.
- Active maintenance: an up-to-date publication history and regularly refreshed content show that you are engaged with your topic and current with developments.

Content freshness is increasingly critical for LLM visibility, particularly for topics where information changes frequently or where recent developments change the answer. LLMs are trained on data with a knowledge cutoff, but many platforms now use RAG to retrieve current information from the web, so your content’s publication date and update history can directly influence whether it is selected for a meta answer. For evergreen topics, regular substantive updates, reflected in an accurate “last updated” date, signal that your content is actively maintained and reliable. For time-sensitive topics such as industry trends, technology updates, or current events, outdated content tends to be deprioritized in favor of more recent sources. A good practice is to set a content maintenance schedule: review key pieces quarterly or semi-annually, refresh statistics, add new examples, and incorporate recent developments. This ongoing maintenance improves LLM visibility and also shows both AI systems and human readers that you are a current, reliable source.
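A maintenance schedule like this is easy to automate once you record when each page was last substantively reviewed. The Python sketch below assumes a hypothetical inventory that maps URLs to review dates and uses a 90-day threshold as an approximation of “quarterly”; both the data and the threshold are placeholders to adapt.

```python
from datetime import date, timedelta

# Hypothetical content inventory: URL -> date of the last substantive review.
INVENTORY = {
    "/guides/llm-meta-answers": date(2024, 1, 15),
    "/guides/schema-markup": date(2024, 5, 2),
    "/blog/ai-overviews-trends": date(2023, 11, 20),
}


def pages_due_for_review(inventory, today, max_age_days=90):
    """Return URLs last reviewed more than max_age_days before today, oldest first.

    90 days approximates a quarterly review cycle; use ~180 for semi-annual.
    """
    cutoff = today - timedelta(days=max_age_days)
    overdue = [(reviewed, url) for url, reviewed in inventory.items() if reviewed < cutoff]
    # Sorting the (date, url) tuples puts the stalest pages first.
    return [url for reviewed, url in sorted(overdue)]


if __name__ == "__main__":
    print(pages_due_for_review(INVENTORY, today=date(2024, 6, 1)))
```

Passing `today` explicitly rather than calling `date.today()` inside the function keeps the check reproducible and easy to test.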

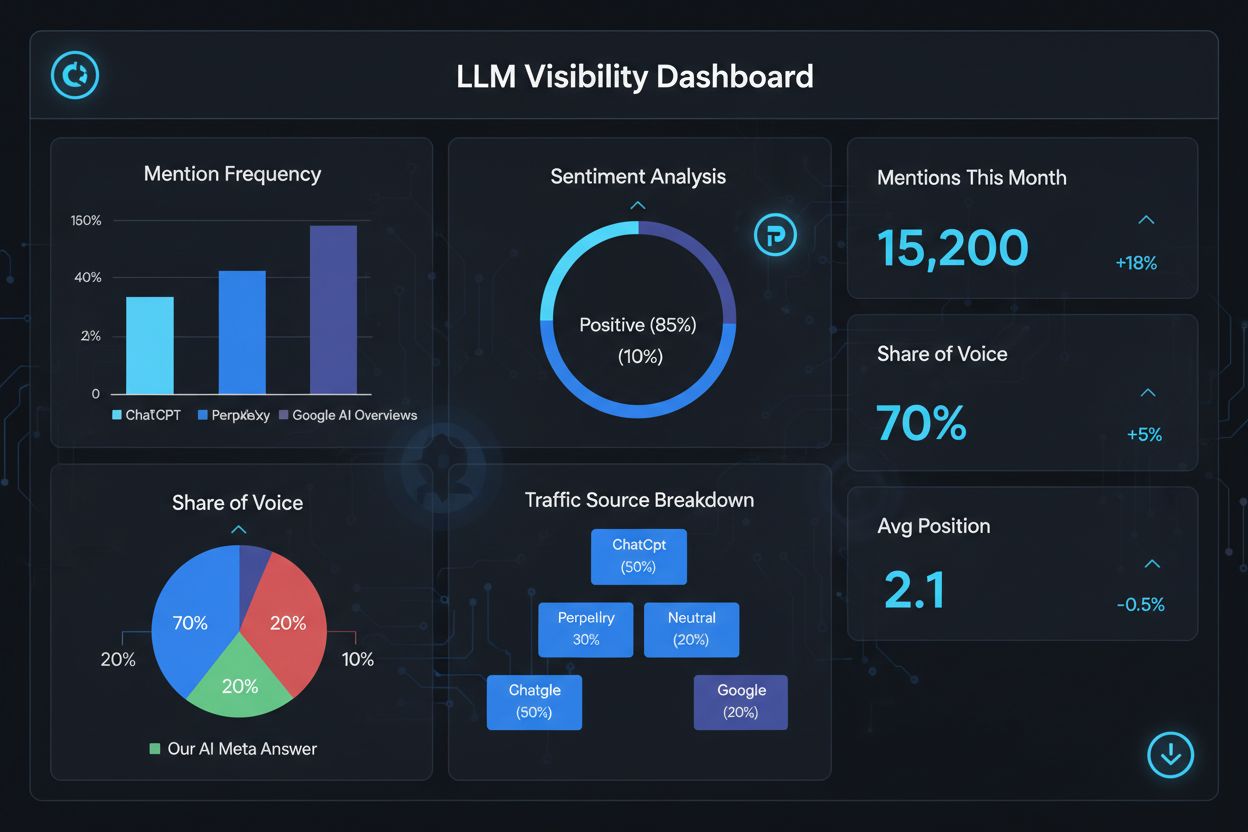

Measuring success in LLM meta answers requires different metrics and tools than traditional SEO, because these responses exist outside the traditional search results ecosystem. Tools like AmICited.com provide specialized monitoring that tracks when and where your content appears in AI-generated responses, showing which pieces are cited and how often.

Key metrics for LLM meta answer performance:

- Citation frequency: how often your content appears in LLM responses.
- Citation context: which topics and queries trigger your content’s inclusion. Analyzing these queries shows how LLMs interpret user intent and which semantic variations work best.
- Source diversity: whether you are cited across different LLM platforms.
- Answer positioning: whether your content is featured prominently in the synthesized response.
- Most-cited pages and sections: which parts of your content are cited most often, revealing what information LLMs find most valuable and trustworthy.
- Trends over time: changes in citation patterns that show which optimization efforts are working and which topics need further development.
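Several of these metrics reduce to simple counting once you have a log of checked AI answers. The Python sketch below assumes a hypothetical log format (platform, prompt, and the one URL of yours that was cited, if any), which you might assemble by hand or export from a monitoring tool; it does not reflect AmICited.com’s or any other tool’s actual data format. It computes a citation rate, citations per page, platform coverage (a proxy for source diversity), and the prompts behind each citation (citation context). Answer positioning would need richer data than this log holds, and real answers may cite several of your pages at once.

```python
from collections import Counter, defaultdict

# Hypothetical log: one record per AI answer checked for a tracked prompt.
# "cited_url" is None when the answer did not cite any of your pages.
OBSERVATIONS = [
    {"platform": "ChatGPT", "prompt": "what is llmo", "cited_url": "/guides/llmo"},
    {"platform": "Perplexity", "prompt": "what is llmo", "cited_url": "/guides/llmo"},
    {"platform": "Google AI Overviews", "prompt": "what is llmo", "cited_url": None},
    {"platform": "Perplexity", "prompt": "faq schema example", "cited_url": "/guides/schema"},
]


def citation_summary(observations):
    """Aggregate a list of answer checks into basic citation metrics."""
    cited = [o for o in observations if o["cited_url"]]
    platforms = defaultdict(set)
    prompts = defaultdict(set)
    for o in cited:
        platforms[o["cited_url"]].add(o["platform"])
        prompts[o["cited_url"]].add(o["prompt"])
    return {
        # Share of checked answers that cited one of your pages.
        "citation_rate": len(cited) / len(observations) if observations else 0.0,
        # Citation frequency per page.
        "citations_per_page": dict(Counter(o["cited_url"] for o in cited)),
        # Source diversity: which platforms cite each page.
        "platform_coverage": {url: sorted(p) for url, p in platforms.items()},
        # Citation context: which prompts triggered each page's citation.
        "triggering_prompts": {url: sorted(p) for url, p in prompts.items()},
    }


if __name__ == "__main__":
    for metric, value in citation_summary(OBSERVATIONS).items():
        print(f"{metric}: {value}")
```

Running the same summary on logs from different weeks gives the trend-over-time view.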

Optimizing for LLM meta answers requires a strategic approach that combines technical implementation, content quality, and ongoing measurement:

- Research topical gaps where your expertise can provide unique value that LLMs will prioritize over generic sources.
- Structure content with clear H2 and H3 headings that create a logical information architecture LLMs can easily parse and extract from.
- Implement schema markup (FAQ, Article, HowTo) to signal your content’s structure and purpose explicitly.
- Develop comprehensive, authoritative content that explores your topic from multiple angles, incorporating related concepts to build semantic richness.
- Include original research, data, or insights that provide information gain and differentiate your content from competitors.
- Refresh key pieces on a regular schedule, such as quarterly, to maintain freshness and signal ongoing authority.
- Build topical authority by creating multiple related pieces that together establish your expertise across a subject area.
- Use clear, direct language that answers the specific questions users ask, making your content easy to extract and synthesize.
- Monitor your LLM citation performance with specialized tools, and refine your strategy based on what the data shows.

LLM meta answers focus on how language models interpret and cite content, while traditional SEO focuses on ranking in search results. LLMs tend to weight relevance and clarity more heavily than domain authority, so well-structured, answer-first content can matter more than backlinks. Your content can be cited in LLM responses even if it doesn't rank in Google's top results.

Structure content with clear headings, provide direct answers upfront, use schema markup (FAQ, Article), include citations and statistics, maintain topical authority, and ensure your site is crawlable by AI bots. Focus on semantic richness, information gain, and comprehensive topical coverage rather than narrow keyword targeting.
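One common control over whether AI crawlers can reach your pages is robots.txt. As a minimal sketch, Python’s standard `urllib.robotparser` module can check which crawler user agents a given robots.txt permits. The robots.txt content below is an invented example, not a recommendation, and the user-agent tokens (`GPTBot` for OpenAI, `PerplexityBot` for Perplexity, `ClaudeBot` for Anthropic) should be verified against each vendor’s current documentation. Note that `robotparser` uses simple prefix matching and does not implement every extension individual crawlers support, such as wildcards.

```python
from urllib.robotparser import RobotFileParser

# Invented robots.txt for illustration only.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /private/

User-agent: PerplexityBot
Disallow: /

User-agent: *
Allow: /
"""

# Assumed crawler tokens; confirm them against each vendor's documentation.
AI_CRAWLERS = ["GPTBot", "PerplexityBot", "ClaudeBot"]


def crawler_access(robots_txt, url, agents=AI_CRAWLERS):
    """Map each user agent to whether robots_txt allows it to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in agents}


if __name__ == "__main__":
    print(crawler_access(ROBOTS_TXT, "https://example.com/guides/llmo"))
```

With this file, `GPTBot` may fetch everything except `/private/`, `PerplexityBot` is blocked entirely, and `ClaudeBot` falls through to the catch-all `*` group, which allows everything.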

LLMs extract snippets from well-organized content more easily. Clear headings, lists, tables, and short paragraphs help models identify and cite relevant information. Some industry studies report that LLM-cited pages contain significantly more structured elements than average web pages, which makes structure an important factor for earning citations.

Yes. Unlike traditional SEO, LLMs prioritize query relevance and content quality over domain authority. A well-structured, highly relevant niche page can be cited by LLMs even if it doesn't rank in Google's top results, making expertise and clarity more important than site authority.

Update content regularly, especially for time-sensitive topics, and add timestamps showing when it was last updated. For evergreen topics, quarterly reviews are recommended. Fresh content can signal accuracy to LLMs, improving citation likelihood and demonstrating ongoing authority.

AmICited.com specializes in monitoring AI mentions across ChatGPT, Perplexity, and Google AI Overviews. Other tools include Semrush's AI SEO Toolkit, Ahrefs Brand Radar, and Peec AI. These tools track mention frequency, share of voice, sentiment, and help you measure optimization success.

Schema markup (FAQ, Article, HowTo) provides machine-readable structure that can help LLMs understand and extract content more accurately. It signals content type and intent, which can make your page more likely to be selected for relevant queries and improve overall discoverability.
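As an illustration of FAQ markup, this Python sketch emits schema.org `FAQPage` JSON-LD using the `FAQPage`, `Question`, and `Answer` types with the `mainEntity`, `name`, and `acceptedAnswer` properties. The helper function and the sample question are hypothetical. In practice the output would be embedded in the page’s HTML, and the marked-up questions and answers should match the visible page content.

```python
import json


def faq_jsonld(pairs):
    """Build a schema.org FAQPage JSON-LD <script> tag from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    # Escape "</" so answer text cannot close the <script> element early;
    # "<\/" is still valid JSON and decodes back to "</".
    body = json.dumps(data, indent=2, ensure_ascii=False).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'


if __name__ == "__main__":
    print(faq_jsonld([
        ("How often should I update content for LLM visibility?",
         "Review evergreen content at least quarterly and update time-sensitive pages more often."),
    ]))
```

Generating the markup from the same question-and-answer data that renders the visible FAQ keeps the two from drifting apart.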

External mentions on high-authority sites (news, Wikipedia, industry publications) build credibility and increase the chances LLMs will cite your content. Multiple independent sources mentioning your brand or data create a pattern of authority that LLMs recognize and reward with higher citation frequency.

