Split Testing

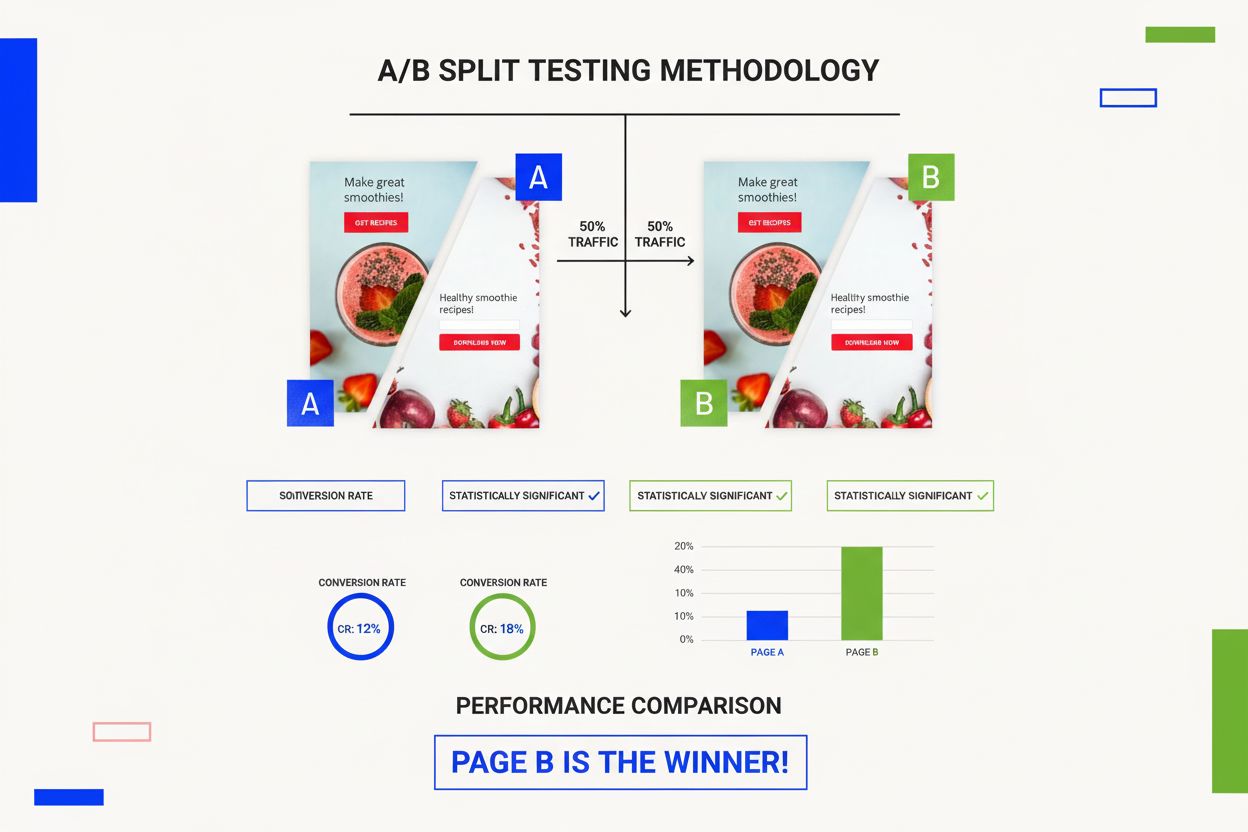

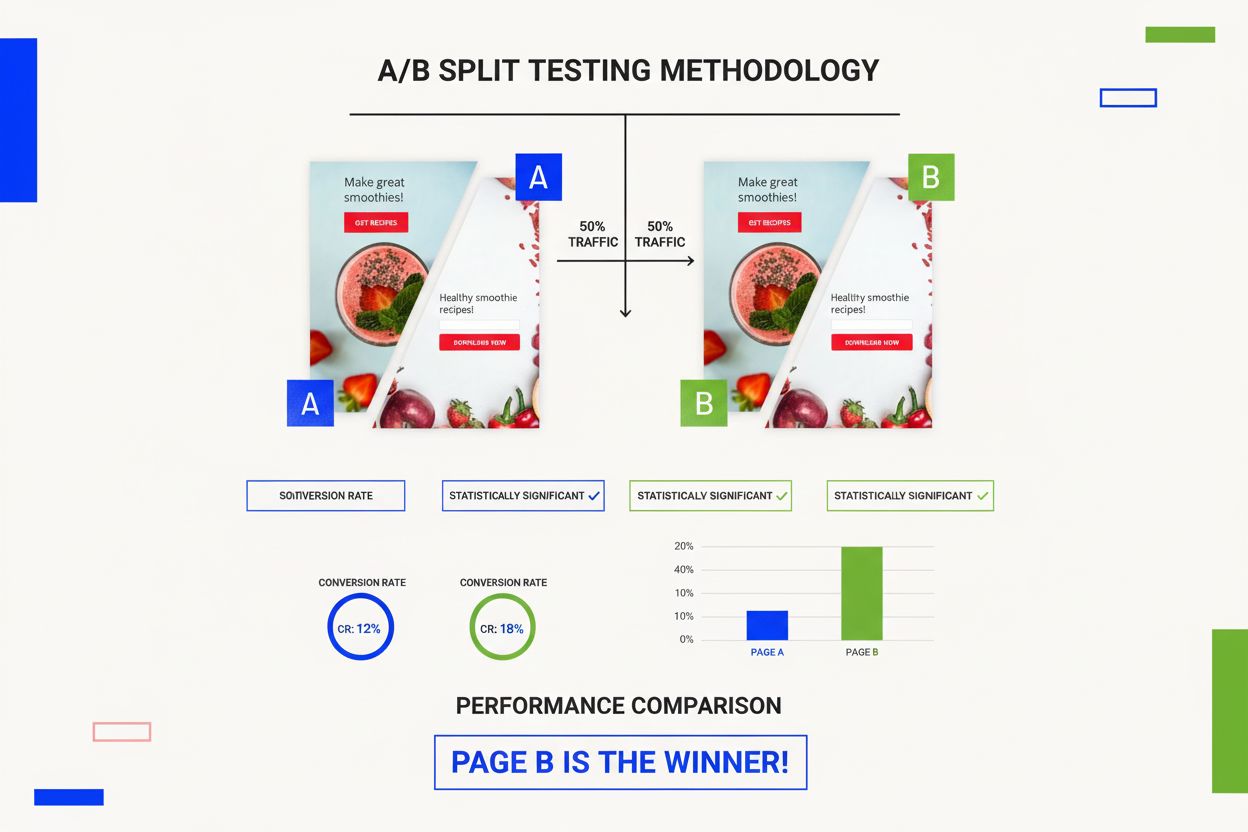

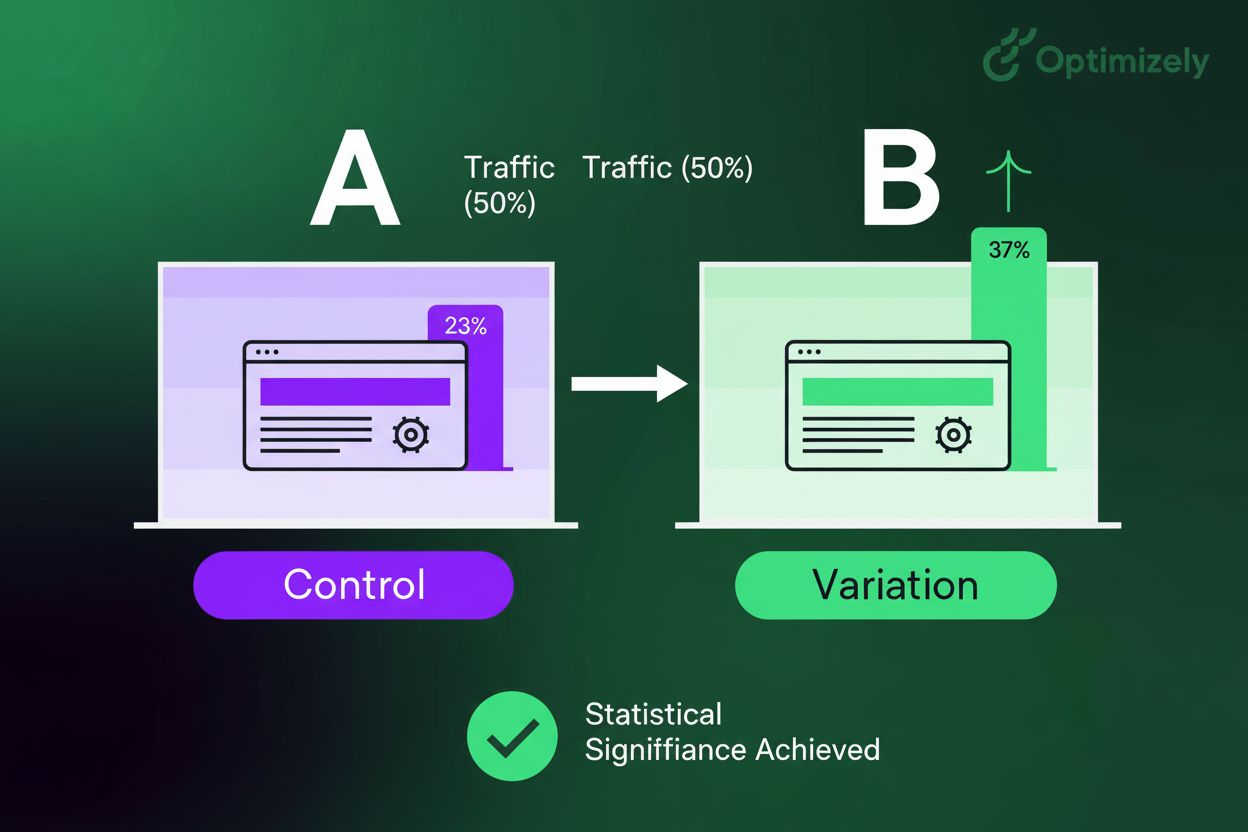

Split testing divides website traffic between different versions to identify the highest-performing variant. Learn how A/B testing drives conversion optimizatio...

Multivariate testing (MVT) is an experimentation methodology that tests multiple variables simultaneously on a webpage or digital asset to determine which combination of variations produces the highest conversion rates and user engagement. Unlike A/B testing, which isolates a single variable, MVT evaluates how different page elements interact with each other to optimize overall performance.


Multivariate testing (MVT) is an experimentation methodology that simultaneously tests multiple variables and their combinations on a webpage, application, or digital asset to determine which permutation produces the highest conversion rates, user engagement, and business outcomes. Unlike traditional A/B testing, which isolates a single variable to measure its impact, multivariate testing evaluates how different page elements interact with each other, providing insight into more complex patterns of user behavior. This methodology enables organizations to optimize multiple elements concurrently rather than sequentially, which can reduce the time required to identify winning combinations. MVT is particularly valuable for high-traffic websites and applications where sufficient visitor volume exists to support the statistical requirements of testing numerous variations simultaneously.

Multivariate testing emerged as a formalized methodology in the early 2000s as digital marketing matured and organizations recognized the limitations of single-variable testing approaches. The technique evolved from classical experimental design principles used in manufacturing and quality control, adapted specifically for digital optimization. Early adopters in the e-commerce and SaaS sectors discovered that testing multiple elements simultaneously could reveal synergistic effects—where the combination of elements produced results superior to what individual element tests predicted. According to industry research, only 0.78% of organizations actively conduct multivariate tests, indicating that despite its power, MVT remains underutilized compared to A/B testing. This adoption gap exists partly because MVT requires more sophisticated statistical knowledge, higher traffic volumes, and more complex implementation than traditional A/B testing. However, organizations that have mastered MVT report 19% better performance compared to those relying exclusively on A/B testing, demonstrating the substantial competitive advantage this methodology provides.

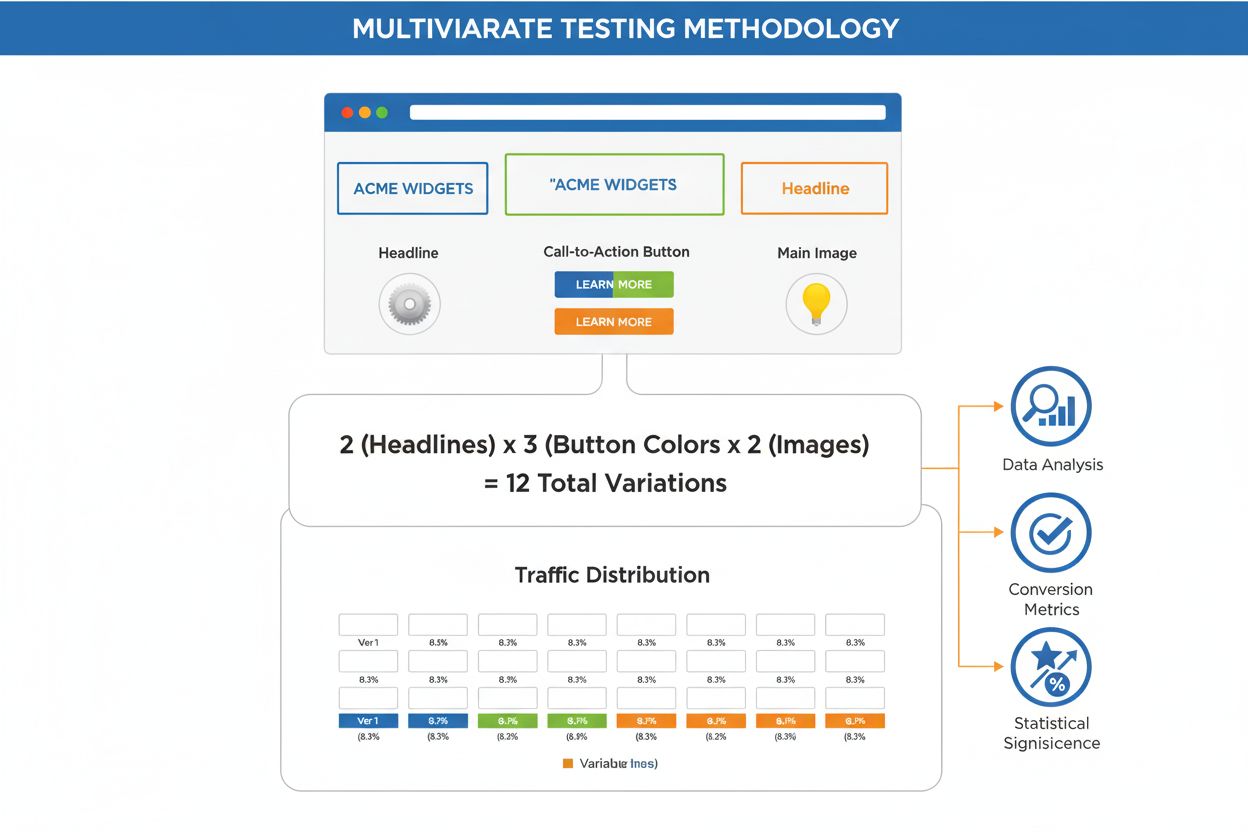

The mathematical foundation of multivariate testing relies on factorial design principles, where the total number of variations equals the product of the number of variations for each tested element. The fundamental formula is: Total Variations = (# of Variations for Element A) × (# of Variations for Element B) × (# of Variations for Element C), with one additional factor for each further element. For example, testing three headlines, two button colors, and two images creates 3 × 2 × 2 = 12 distinct variations that must be tested concurrently. This multiplicative growth in combinations is why traffic requirements become critical: each variation receives a proportionally smaller share of traffic, extending the time needed to reach statistical significance at the standard 95% confidence level. The methodology assumes that all combinations make logical sense together and that elements can be varied independently without creating contradictory or nonsensical user experiences. Understanding these mathematical principles is essential for designing tests that yield reliable, actionable insights rather than inconclusive or misleading results.
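The counting rule above can be checked by enumerating the combinations directly. The following is a minimal sketch; the element names and variation labels are hypothetical placeholders, not taken from any real test.

```python
from itertools import product

# Hypothetical variation labels for three page elements (3 x 2 x 2).
elements = {
    "headline": ["H1", "H2", "H3"],
    "button_color": ["green", "orange"],
    "image": ["lifestyle", "product"],
}


def enumerate_combinations(elements):
    """Return every combination as a dict mapping element name to variation."""
    names = list(elements)
    return [dict(zip(names, combo)) for combo in product(*elements.values())]


combinations = enumerate_combinations(elements)
print(len(combinations))   # number of variations the test must serve
print(combinations[0])     # first combination in enumeration order
```

Listing the combinations before launch also makes it easier to spot pairings that do not make sense together and should be excluded.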

| Aspect | Multivariate Testing (MVT) | A/B Testing | Split URL Testing | Multipage Testing |
|---|---|---|---|---|
| Variables Tested | Multiple simultaneously | One at a time | Entire page designs | Single element across multiple pages |
| Complexity | High | Low | High | Medium |
| Sample Size Required | Very large | Small to medium | Large | Very large |
| Test Duration | Long (weeks to months) | Short (days to weeks) | Medium to long | Long (weeks to months) |
| Traffic Requirements | 5,000+ weekly visits | 1,000+ weekly visits | 5,000+ weekly visits | 10,000+ weekly visits |
| Best Use Case | Optimizing multiple elements on one page | Testing single element changes | Complete page redesigns | Consistent experience across site |
| Element Interactions | Measured and analyzed | Not measured | Not measured | Not measured |
| Implementation Effort | High | Low | Very high | Medium |
| Statistical Insights | Comprehensive | Clear and isolated | Holistic but unclear | Site-wide patterns |

Multivariate testing operates by dividing incoming traffic across all test variations, with each visitor randomly assigned to one combination of variables. The testing platform tracks user interactions with each variation, measuring predefined conversion goals and engagement metrics. The methodology employs either full factorial design, where all possible combinations are tested and receive equal traffic, or partial (fractional) factorial design, where only a subset of combinations is tested and the performance of the rest is inferred statistically. In full factorial testing, if you’re testing 8 variations, each receives approximately 12.5% of total traffic, requiring substantially more visitors than an A/B test where each version receives 50%. The statistical analysis compares conversion rates across variations using methods like chi-squared tests or Bayesian statistics to determine which combinations significantly outperform the control. Separately from the choice of design, modern testing platforms increasingly employ machine learning algorithms that identify underperforming variations early and reallocate traffic to more promising combinations, with the aim of reducing overall test duration while maintaining statistical validity. Adaptive approaches of this kind, including what some platforms call evolutionary neural networks, are intended to let organizations reach results faster without compromising data integrity.
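One common way to implement the random assignment described above is to hash a stable visitor identifier, so that a returning visitor always sees the same combination. The sketch below assumes an equal split across variations; the function name, the experiment key, and the visitor ID format are all hypothetical, and real platforms may assign traffic differently.

```python
import hashlib
from collections import Counter


def assign_variation(visitor_id: str, experiment: str, n_variations: int) -> int:
    """Deterministically map a visitor to a variation index in [0, n_variations)."""
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Use the first 8 bytes of the hash as an integer, then bucket it.
    return int.from_bytes(digest[:8], "big") % n_variations


# Simulate 80,000 hypothetical visitors across 8 variations.
counts = Counter(
    assign_variation(f"visitor-{i}", "homepage-mvt", 8) for i in range(80_000)
)
shares = {variation: count / 80_000 for variation, count in sorted(counts.items())}
print(shares)  # each share should be close to 0.125
```

Including the experiment name in the hash key keeps assignments in one experiment independent of assignments in another.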

The business value of multivariate testing extends far beyond identifying winning page elements—it fundamentally transforms how organizations understand customer psychology and decision-making processes. By testing combinations of headlines, images, call-to-action buttons, form fields, and layout elements simultaneously, companies gain insights into which specific combinations resonate most powerfully with their target audiences. Real-world case studies demonstrate substantial impact: organizations implementing MVT-driven optimizations report conversion rate improvements ranging from 15% to 62%, with some high-impact tests yielding even more dramatic results. The methodology is particularly effective for e-commerce optimization, where testing product image sizes, pricing displays, trust badges, and CTA button text combinations can directly impact revenue per visitor. For SaaS companies, MVT helps optimize onboarding flows, feature discovery, and pricing page layouts to improve free-to-paid conversion rates. The key advantage is that MVT eliminates the need to run multiple sequential A/B tests, which would require months of testing to achieve the same insights. By testing combinations concurrently, organizations compress their optimization timeline while gathering more comprehensive data about element interactions that sequential testing would never reveal.

Different digital platforms present unique challenges and opportunities for multivariate testing implementation. On websites, MVT works best on high-traffic pages like homepages, product pages, and checkout flows where sufficient visitor volume exists to support multiple variations. Mobile applications require careful consideration of screen real estate constraints, as testing too many visual variations simultaneously can create confusing user experiences. Email marketing campaigns can employ MVT principles by testing subject line variations, content blocks, and CTA button combinations, though email platforms typically require larger sample sizes due to lower engagement rates. Landing pages are ideal MVT candidates because they’re purpose-built for conversion and typically receive concentrated traffic. Checkout flows benefit significantly from MVT because small improvements in form field labels, button colors, or trust signal placement can dramatically impact completion rates and revenue. The choice of testing platform—whether Optimizely, VWO, Amplitude, or Adobe Target—affects implementation complexity and statistical capabilities. Enterprise platforms offer advanced features like variance reduction techniques (CUPED), sequential testing, and machine learning-powered traffic allocation, while simpler platforms may require manual traffic management and basic statistical analysis.

Implementing multivariate testing effectively requires adherence to established best practices that maximize the probability of generating reliable, actionable insights. First, create a learning agenda before launching any test, clearly defining which hypotheses you want to validate and what business metrics matter most. Second, focus on high-impact variables rather than testing every possible element—prioritize page components that directly influence user decisions, such as headlines, primary CTAs, and product images. Third, avoid testing too many variations simultaneously; limit tests to 6-12 variations maximum to maintain statistical power and interpretability. Fourth, ensure adequate traffic volume by using sample size calculators that account for your baseline conversion rate, expected improvement, and desired confidence level. Fifth, monitor test performance continuously and eliminate underperforming variations early to redirect traffic toward more promising combinations. Sixth, use qualitative research alongside quantitative testing—employ heatmaps, session recordings, and user feedback to understand why certain combinations perform better. Seventh, document all hypotheses and learnings to build institutional knowledge and inform future testing strategies. Finally, apply winning combinations strategically rather than implementing all changes simultaneously, allowing you to measure the true impact of each optimization.
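The sample size calculation mentioned in the fourth practice can be approximated with the standard normal-approximation formula for comparing two proportions. This is a sketch under stated assumptions: a two-sided test, 80% power as a default (the power level is an assumption, not something specified above), and a comparison of one variation against the control without any correction for testing many variations at once.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variation(baseline_rate, relative_lift,
                              confidence=0.95, power=0.80):
    """Approximate visitors needed per variation to detect a relative lift."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # two-sided
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


# Hypothetical inputs: 5% baseline conversion rate, 20% relative lift.
n = sample_size_per_variation(0.05, 0.20)
print(n)
```

Because the result is per variation, the total traffic required scales with the number of variations in the test, and smaller expected lifts raise the requirement sharply.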

Despite its power, multivariate testing presents significant challenges that organizations must navigate carefully. The most substantial limitation is traffic requirements: MVT demands substantially more visitor volume than A/B testing, making it impractical for low-traffic websites or niche pages. A test with 8 variations splits traffic eight ways instead of two, so it needs roughly four times the traffic of an equivalent two-version A/B test to collect the same amount of data per variation in the same timeframe. Test duration extends considerably; while A/B tests might complete in 1-2 weeks, MVT tests often require 4-12 weeks or longer, creating opportunity costs as organizations delay implementing other optimizations. Complexity in setup and analysis means that MVT requires more sophisticated statistical knowledge and testing expertise than A/B testing, limiting adoption among smaller teams without dedicated optimization specialists. Inconclusive results occur more frequently in MVT because, with numerous variations, some may perform similarly to the control, making it difficult to identify clear winners. Interaction effects can be difficult to interpret: sometimes a combination performs unexpectedly well or poorly due to subtle interactions between elements that weren’t anticipated. Design constraints limit which combinations make logical sense; testing a headline about “beach vacations” with an image of mountains creates nonsensical variations that confuse users. Additionally, multivariate testing is biased toward design optimization and may overlook the importance of copy, offers, and functionality changes that don’t involve visual elements.

Full factorial testing represents the most comprehensive approach, where all possible combinations of variables receive equal traffic distribution and are tested to completion. This methodology provides the most reliable data because every combination is directly measured rather than statistically inferred. Full factorial testing answers not only which individual elements perform best but also reveals interaction effects—situations where specific combinations outperform what individual element performance would predict. However, full factorial testing requires the largest traffic volume and longest test duration, making it practical only for high-traffic digital properties. Partial or fractional factorial testing offers a more efficient alternative by testing only a subset of all possible combinations, then using statistical methods to infer the performance of untested combinations. This approach reduces traffic requirements by 50-75% compared to full factorial testing, enabling organizations with moderate traffic to conduct MVT. The trade-off is that partial factorial testing relies on mathematical assumptions and cannot detect all interaction effects. Taguchi testing, an older methodology adapted from manufacturing quality control, attempts to minimize the number of combinations tested through orthogonal array design. However, Taguchi testing is rarely recommended for modern digital experimentation because it makes assumptions that don’t hold in online environments and provides less reliable results than full or partial factorial approaches.
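To make the trade-off between full and fractional designs concrete, here is a minimal sketch of a half-fraction for three two-level elements. It uses the textbook construction that keeps only the runs where the product of the coded levels is +1; this is an illustrative choice, not a description of how any particular testing platform builds its fraction.

```python
from itertools import product

# Full factorial for three elements, each coded as -1 (control) or +1 (variant).
full = list(product((-1, 1), repeat=3))

# Half-fraction: keep the runs where a * b * c == +1.
half = [run for run in full if run[0] * run[1] * run[2] == 1]

print(len(full), len(half))  # 8 combinations versus 4
for run in half:
    print(run)

# In this fraction the third element's level always equals a * b, so its
# main effect cannot be separated from the interaction of the first two.
aliased = all(c == a * b for a, b, c in half)
print(aliased)
```

The fraction remains balanced (each element appears at each level equally often), but the aliasing shown in the last check is the kind of mathematical assumption that prevents a fractional design from detecting every interaction effect.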

The convergence of machine learning and multivariate testing has revolutionized how organizations conduct experiments, introducing adaptive testing methodologies that dramatically improve efficiency. Traditional MVT distributes traffic equally across all variations regardless of performance, but machine learning algorithms can identify underperforming variations early and reallocate traffic toward more promising combinations. Evolutionary neural networks represent a sophisticated approach where algorithms learn which variable combinations are likely to perform well without testing all possibilities. These systems continuously introduce new variations (mutations) based on what’s working, creating a dynamic testing environment that evolves throughout the experiment. The advantage is substantial: organizations using machine learning-powered MVT can achieve statistical significance 30-50% faster than traditional full factorial testing while maintaining or improving result reliability. Bayesian statistics, increasingly common in modern testing platforms, allows for sequential analysis where tests can conclude earlier if results become statistically significant before the predetermined sample size is reached. These advanced methodologies are particularly valuable for organizations with moderate traffic volumes that would otherwise be unable to conduct traditional MVT due to traffic constraints.
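The idea of shifting traffic toward better-performing variations can be illustrated with Thompson sampling, a widely used multi-armed bandit method. Note that this is a swapped-in technique chosen for its simplicity: it is not an evolutionary neural network and does not introduce new variations mid-test. The conversion rates below are invented purely for the simulation.

```python
import random


def thompson_allocate(true_rates, n_visitors, seed=0):
    """Simulate Thompson sampling; return the visitors sent to each variation."""
    rng = random.Random(seed)
    k = len(true_rates)
    successes = [0] * k
    failures = [0] * k
    for _ in range(n_visitors):
        # Draw a plausible conversion rate for each variation from its
        # Beta posterior (uniform prior), then serve the highest draw.
        draws = [rng.betavariate(successes[i] + 1, failures[i] + 1) for i in range(k)]
        arm = max(range(k), key=draws.__getitem__)
        if rng.random() < true_rates[arm]:
            successes[arm] += 1
        else:
            failures[arm] += 1
    return [successes[i] + failures[i] for i in range(k)]


# Hypothetical true conversion rates for three variations.
visits = thompson_allocate([0.04, 0.05, 0.08], 20_000)
print(visits)
```

In a simulation like this, most visitors end up on the strongest variation. The cost is that weaker variations collect less data, so estimates of their performance are less precise than under an equal split.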

The future of multivariate testing is being shaped by several converging trends that will fundamentally change how organizations approach digital optimization. Artificial intelligence and machine learning will increasingly automate variable selection, hypothesis generation, and traffic allocation, reducing the expertise required to conduct sophisticated experiments. Real-time personalization will merge with MVT principles, allowing organizations to test combinations dynamically based on individual user characteristics rather than serving static variations. Privacy-first testing will become essential as third-party cookies disappear and organizations must conduct experiments within stricter data governance frameworks. Cross-platform testing will expand beyond websites to encompass mobile apps, email, push notifications, and emerging channels, requiring unified testing platforms that can coordinate experiments across touchpoints. Causal inference methodologies will advance beyond correlation-based analysis, enabling organizations to understand not just which combinations work but why they work. The integration of voice of customer data with quantitative testing will create more holistic optimization approaches that balance statistical significance with qualitative user feedback. Organizations that master multivariate testing today will gain competitive advantages that compound over time, as continuous optimization creates compounding improvements in conversion rates, customer satisfaction, and lifetime value. The methodology will likely become less specialized and more democratized, with AI-powered platforms enabling teams without deep statistical expertise to conduct sophisticated experiments confidently.

For organizations using AI monitoring platforms like AmICited, understanding multivariate testing becomes strategically important for tracking how optimization expertise and testing methodologies appear in AI-generated content. As AI systems like ChatGPT, Perplexity, Google AI Overviews, and Claude increasingly reference testing methodologies and optimization strategies, organizations need visibility into how their testing frameworks and results are cited. Multivariate testing represents a sophisticated, high-value optimization technique that AI systems frequently reference when discussing conversion rate optimization and digital experimentation. Monitoring how your organization’s MVT expertise, case studies, and testing frameworks appear in AI responses helps establish thought leadership and ensures proper attribution. Organizations conducting significant multivariate testing work should track mentions of their testing methodologies, results, and optimization frameworks across AI platforms to understand how their expertise is being represented and cited. This visibility enables organizations to identify opportunities to strengthen their content authority, correct misattributions, and ensure their testing innovations receive appropriate recognition in AI-generated responses. The intersection of advanced testing methodologies and AI monitoring represents a new frontier in competitive intelligence and brand authority management.

A/B testing compares two versions of a single element, while multivariate testing evaluates multiple variables and their combinations simultaneously. MVT provides insights into how different page elements interact with each other, whereas A/B testing isolates the impact of one change. MVT requires significantly more traffic and time to reach statistical significance but delivers more comprehensive insights into user behavior and element interactions.
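What it means to measure an interaction can be shown with a two-element, two-level example. The sketch below computes each element's average (main) effect and the interaction as a difference of differences; the conversion rates are made up for illustration only.

```python
def effects_2x2(rates):
    """rates maps (headline, button) levels in {0, 1} to a conversion rate."""
    # Main effect: average change from switching one element, across the other.
    main_headline = ((rates[1, 0] + rates[1, 1]) - (rates[0, 0] + rates[0, 1])) / 2
    main_button = ((rates[0, 1] + rates[1, 1]) - (rates[0, 0] + rates[1, 0])) / 2
    # Interaction: how much the headline's lift changes when the button changes.
    interaction = (rates[1, 1] - rates[0, 1]) - (rates[1, 0] - rates[0, 0])
    return main_headline, main_button, interaction


# Hypothetical conversion rates for the four combinations.
rates = {(0, 0): 0.040, (1, 0): 0.044, (0, 1): 0.046, (1, 1): 0.060}
main_headline, main_button, interaction = effects_2x2(rates)
print(main_headline, main_button, interaction)
```

A non-zero interaction means the new headline helps more alongside the new button than alongside the old one, which two separate A/B tests run against the original page would not reveal.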

The formula is: Total Variations = (# of Variations for Element A) × (# of Variations for Element B) × (# of Variations for Element C). For example, if you test 2 headline variations, 2 button colors, and 2 images, the total would be 2 × 2 × 2 = 8 variations. Because the counts multiply, each additional element or variation significantly increases the number of combinations to test.

Because traffic is distributed across all variations, each combination receives a smaller percentage of total visitors. With 8 variations, each receives approximately 12.5% of traffic compared to 50% in an A/B test. This traffic dilution means it takes longer to accumulate sufficient data for each variation to reach statistical significance at the 95% confidence level.

The primary types are full factorial testing, which tests all possible combinations equally, and partial or fractional factorial testing, which tests only a subset of combinations and statistically infers results for untested variations. Full factorial provides comprehensive insights but requires more traffic, while fractional factorial is more efficient but relies on mathematical assumptions. Taguchi testing is an older method rarely used in modern digital experimentation.

Focus on testing only high-impact variables, use fewer variations per element, and track micro-conversions instead of primary conversions. You can also consider lowering your statistical significance threshold from 95% to 70-80%, accepting a higher risk of false positives in exchange for a shorter test. Eliminating underperforming variations early redirects traffic to more promising combinations, and statistical methods like the chi-squared test or confidence intervals can be used to measure performance.

Primary metrics typically include conversion rate (CVR), click-through rate (CTR), and revenue per visitor (RPV). Secondary metrics can include engagement rate (ER), view-through rate (VTR), form completion rates, and time on page. Tracking multiple metrics provides more data points for statistical analysis and helps identify which variations drive different user behaviors across your conversion funnel.
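The three primary metrics can be computed from raw counts for each variation. The sketch below uses common definitions (conversions per visitor, clicks per impression, revenue per visitor); exact definitions vary between analytics tools, and the counts shown are hypothetical.

```python
def funnel_metrics(visitors, impressions, clicks, conversions, revenue):
    """Return CVR, CTR, and RPV for one variation from raw counts."""
    return {
        "cvr": conversions / visitors,   # conversion rate
        "ctr": clicks / impressions,     # click-through rate
        "rpv": revenue / visitors,       # revenue per visitor
    }


# Hypothetical counts for a single variation.
metrics = funnel_metrics(
    visitors=10_000, impressions=12_000, clicks=600, conversions=450, revenue=22_500.0
)
print(metrics)
```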

Duration depends on traffic volume, number of variations, and expected effect size. A test with 8 variations on a high-traffic page might complete in 2-4 weeks, while the same test on a low-traffic site could take 2-3 months or longer. Using a sample size calculator based on your traffic, baseline conversion rate, and minimum detectable effect helps estimate realistic timelines before launching.
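A rough timeline follows from dividing the total sample required by weekly traffic. This sketch assumes an equal split across variations and that every visitor to the page enters the test; the per-variation sample size and traffic figures are hypothetical inputs.

```python
from math import ceil


def estimated_weeks(n_per_variation, n_variations, weekly_visitors):
    """Weeks needed for every variation to reach its required sample size."""
    total_needed = n_per_variation * n_variations
    return ceil(total_needed / weekly_visitors)


# Hypothetical inputs: 8,000 visitors needed per variation, 20,000 visitors per week.
mvt_weeks = estimated_weeks(8_000, 8, 20_000)
ab_weeks = estimated_weeks(8_000, 2, 20_000)
print(mvt_weeks, ab_weeks)  # 4 weeks for 8 variations, 1 week for 2
```

Many practitioners also round the result up to whole weeks, as this function does, so that each day of the week is represented equally in the data.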

Statistical significance (typically assessed at a 95% confidence level) indicates that the observed results would be unlikely if there were no real difference between variations. At that level, a difference as large as the one observed would arise from random variation alone no more than 5% of the time if the variations truly performed the same. Reaching statistical significance makes your findings more reliable and actionable, reducing the risk of false conclusions that could lead to implementing ineffective changes or missing genuine improvements.
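As an illustration of how significance is checked for one variation against the control, the following sketch runs a two-sided, two-proportion z-test. It is a simplified stand-in for what testing platforms do: it assumes large samples, at least one conversion overall, and no correction for comparing many variations at once. The conversion counts are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value for the difference between two conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    # Standard error under the hypothesis that both rates are equal.
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Hypothetical data: control converts 400 of 10,000; variation 480 of 10,000.
p_value = two_proportion_p_value(400, 10_000, 480, 10_000)
print(p_value, p_value < 0.05)
```

A p-value below 0.05 corresponds to significance at the 95% confidence level. With many variations compared against the same control, the chance of at least one false positive rises, which is one reason multivariate results call for more cautious interpretation.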

Start tracking how AI chatbots mention your brand across ChatGPT, Perplexity, and other platforms. Get actionable insights to improve your AI presence.

Split testing divides website traffic between different versions to identify the highest-performing variant. Learn how A/B testing drives conversion optimizatio...

A/B testing definition: A controlled experiment comparing two versions to determine performance. Learn methodology, statistical significance, and optimization s...

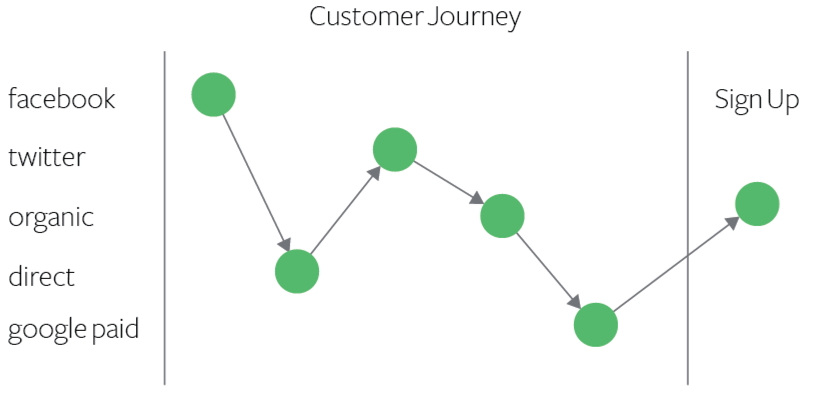

Multi-touch attribution assigns credit to all customer touchpoints in the conversion journey. Learn how this data-driven approach optimizes marketing budgets an...

Cookie Consent

We use cookies to enhance your browsing experience and analyze our traffic. See our privacy policy.