AI Content Detection

Learn what AI content detection is, how detection tools work using machine learning and NLP, and why they matter for brand monitoring, education, and content au...

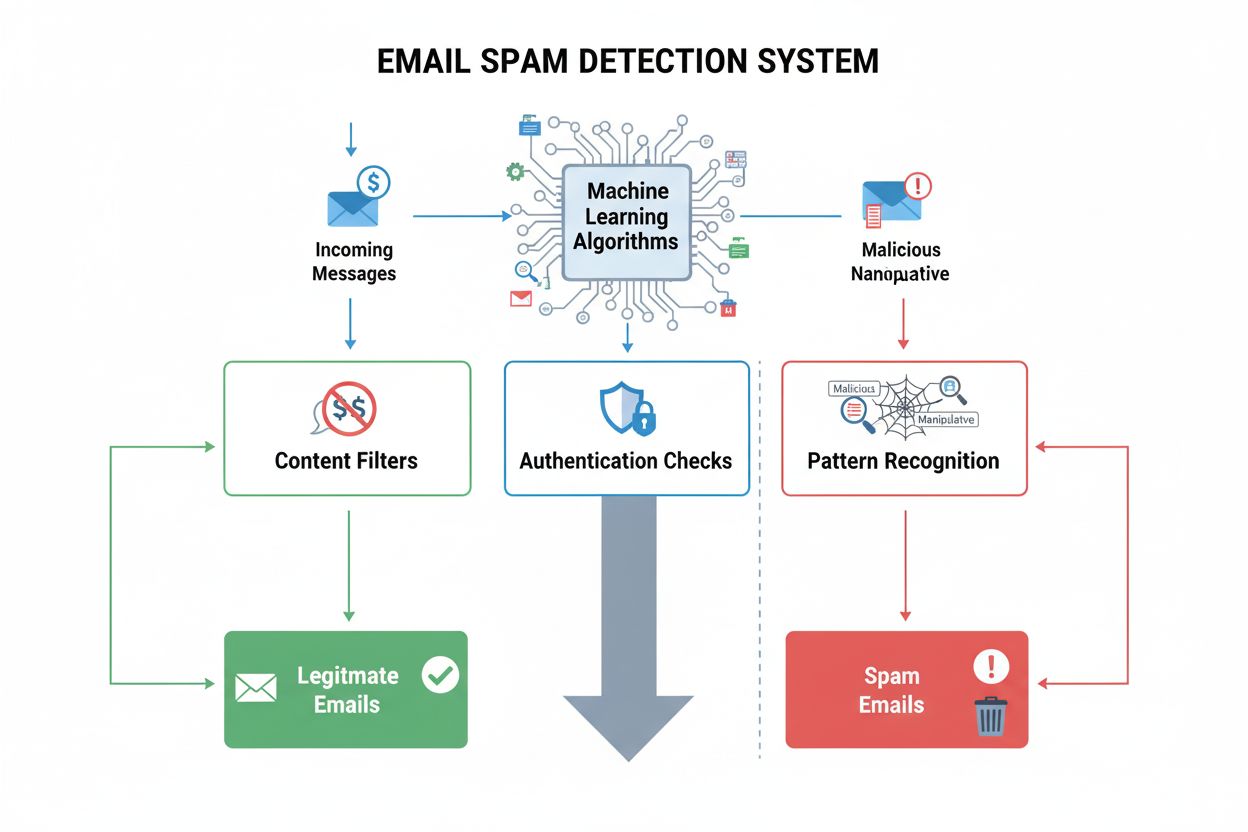

Spam detection is the automated process of identifying and filtering unwanted, unsolicited, or manipulative content—including emails, messages, and social media posts—using machine learning algorithms, content analysis, and behavioral signals to protect users and maintain platform integrity.

Spam detection is the automated process of identifying and filtering unwanted, unsolicited, or manipulative content, including emails, messages, social media posts, and AI-generated responses, using machine learning algorithms, content analysis, behavioral signals, and authentication protocols. The term covers both the technical mechanisms that identify spam and the broader practice of protecting users from deceptive, malicious, or repetitive communications. In modern AI systems and digital platforms, spam detection is a critical safeguard against phishing attacks, fraudulent schemes, brand impersonation, and coordinated inauthentic behavior. The definition extends beyond email filtering to manipulative content across social media, review platforms, AI chatbots, and search results, where bad actors try to artificially inflate visibility, manipulate public opinion, or deceive users.

The history of spam detection parallels the evolution of digital communication itself. In the early days of email, spam was identified mainly through simple rule-based systems that flagged messages containing specific keywords or sender addresses. Paul Graham’s influential 2002 essay “A Plan for Spam” popularized Bayesian filtering for email, changing the field by letting systems learn from examples rather than rely on predefined rules. This statistical approach substantially improved accuracy and adaptability, allowing filters to evolve as spammers changed tactics. By the mid-2000s, machine learning techniques including Naive Bayes classifiers, decision trees, and support vector machines had become standard in enterprise email systems. The emergence of social media platforms introduced new spam challenges (coordinated inauthentic behavior, bot networks, and fake reviews) that required detection systems to analyze network patterns and user behavior rather than just message content. Today’s spam detection incorporates deep learning models, transformer architectures, and real-time behavioral analysis, with reported accuracy rates of 95-98% in email filtering, while also addressing emerging threats such as AI-generated phishing (which surged 466% in Q1 2025) and deepfake manipulation.

Spam detection systems operate through multiple complementary layers that evaluate incoming content across different dimensions simultaneously. The first layer involves authentication verification, where systems check SPF (Sender Policy Framework) records to confirm authorized sending servers, validate DKIM (DomainKeys Identified Mail) cryptographic signatures to ensure message integrity, and enforce DMARC (Domain-based Message Authentication, Reporting, and Conformance) policies to instruct receiving servers on handling authentication failures. Microsoft’s May 2025 enforcement made authentication mandatory for bulk senders exceeding 5,000 daily emails, with non-compliant messages receiving SMTP rejection error code “550 5.7.515 Access denied”—meaning complete delivery failure rather than spam folder placement. The second layer involves content analysis, where systems examine message text, subject lines, HTML formatting, and embedded links for characteristics associated with spam. Modern content filters no longer rely solely on keyword matching (which proved ineffective as spammers adapted language) but instead analyze linguistic patterns, image-to-text ratios, URL density, and structural anomalies. The third layer implements header inspection, examining routing information, sender authentication details, and DNS records for inconsistencies suggesting spoofing or compromised infrastructure. The fourth layer evaluates sender reputation by cross-referencing domain and IP addresses against blocklists, analyzing historical sending patterns, and assessing engagement metrics from previous campaigns.
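
The authentication layer can be illustrated with a minimal sketch of a DMARC-style decision. It assumes the SPF and DKIM checks have already been run elsewhere and only shows how their results combine with the domain owner's published policy. The `AuthResult` structure and the `dmarc_disposition` function are hypothetical names introduced for illustration, not part of any mail server's API.

```python
from dataclasses import dataclass


@dataclass
class AuthResult:
    """Outcome of authentication checks for one message (hypothetical structure)."""
    spf_pass: bool      # sending IP is authorized by the envelope domain's SPF record
    spf_aligned: bool   # SPF-checked domain matches the visible From: domain
    dkim_pass: bool     # DKIM signature verified
    dkim_aligned: bool  # DKIM signing domain matches the visible From: domain


def dmarc_disposition(result: AuthResult, policy: str) -> str:
    """Return 'deliver', 'quarantine', or 'reject' for a policy of none/quarantine/reject."""
    if policy not in {"none", "quarantine", "reject"}:
        raise ValueError(f"unknown DMARC policy: {policy!r}")
    # DMARC passes when at least one mechanism both passes and aligns.
    spf_ok = result.spf_pass and result.spf_aligned
    dkim_ok = result.dkim_pass and result.dkim_aligned
    if spf_ok or dkim_ok:
        return "deliver"
    # On failure, the published policy decides the handling.
    # 'none' means no special action; later layers may still filter the message.
    return {"none": "deliver", "quarantine": "quarantine", "reject": "reject"}[policy]
```

In this sketch a message that passes SPF but is not aligned with the From: domain still fails when DKIM also fails, which is why a spoofed sender can be rejected even if the sending server itself is authorized for some other domain.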

| Detection Method | How It Works | Accuracy Rate | Primary Use Case | Strengths | Limitations |
|---|---|---|---|---|---|
| Rule-Based Filtering | Applies predefined criteria (keywords, sender addresses, attachment types) | 60-75% | Legacy systems, simple blocklists | Fast, transparent, easy to implement | Cannot adapt to new tactics, high false positives |
| Bayesian Filtering | Uses statistical probability analysis of word frequencies in spam vs. legitimate mail | 85-92% | Email systems, personal filters | Learns from user feedback, adapts over time | Requires training data, struggles with novel attacks |
| Machine Learning (Naive Bayes, SVM, Random Forests) | Analyzes feature vectors (sender metadata, content characteristics, engagement patterns) | 92-96% | Enterprise email, social media | Handles complex patterns, reduces false positives | Requires labeled training data, computationally intensive |
| Deep Learning (LSTM, CNN, Transformers) | Processes sequential data and contextual relationships using neural networks | 95-98% | Advanced email systems, AI platforms | Highest accuracy, handles sophisticated manipulation | Requires massive datasets, difficult to interpret decisions |
| Real-Time Behavioral Analysis | Monitors user interactions, engagement patterns, and network relationships dynamically | 90-97% | Social media, fraud detection | Catches coordinated attacks, adapts to user preferences | Privacy concerns, requires continuous monitoring |
| Ensemble Methods | Combines multiple algorithms (voting, stacking) to leverage strengths of each | 96-99% | Gmail, enterprise systems | Highest reliability, balanced precision/recall | Complex to implement, resource-intensive |
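
The voting form of an ensemble can be sketched in a few lines. The three toy classifiers below (`keyword_rule`, `link_rule`, `caps_rule`) and their thresholds are illustrative assumptions standing in for real models; only the majority-vote combination is the point of the example.

```python
from typing import Callable, Sequence

Classifier = Callable[[str], bool]  # returns True when the message looks like spam


def keyword_rule(message: str) -> bool:
    """Flag messages containing a known spam phrase (toy phrase list)."""
    text = message.lower()
    return any(phrase in text for phrase in ("free money", "act now"))


def link_rule(message: str) -> bool:
    """Flag messages that contain two or more links."""
    return message.lower().count("http") >= 2


def caps_rule(message: str) -> bool:
    """Flag messages where more than half of the letters are uppercase."""
    letters = [ch for ch in message if ch.isalpha()]
    if not letters:
        return False
    return sum(ch.isupper() for ch in letters) / len(letters) > 0.5


def majority_vote(message: str, classifiers: Sequence[Classifier]) -> bool:
    """Label the message as spam when more than half of the classifiers agree."""
    votes = sum(1 for classify in classifiers if classify(message))
    return votes * 2 > len(classifiers)
```

Because the final label needs agreement from most voters, a single over-eager rule cannot block a message on its own, which is one reason ensembles tend to balance precision and recall better than any one member.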

The technical foundation of modern spam detection relies on supervised learning algorithms that classify messages as spam or legitimate based on labeled training data. Naive Bayes classifiers calculate the probability that an email is spam by analyzing word frequencies: if certain words appear more often in spam, their presence raises the spam probability score. This approach remains popular because it is computationally efficient, interpretable, and performs surprisingly well despite its simplifying assumption that words occur independently of one another. Support Vector Machines (SVM) find hyperplanes in high-dimensional feature space that separate spam from legitimate messages, and with kernel functions they can also handle non-linear relationships between features. Random Forests build multiple decision trees and aggregate their predictions, reducing overfitting and improving robustness against adversarial manipulation. More recently, Long Short-Term Memory (LSTM) networks and other recurrent neural networks have demonstrated superior performance by analyzing sequential patterns in email text, recognizing that certain word sequences are more indicative of spam than individual words alone. Transformer models, the architecture behind language models like GPT and BERT, capture contextual relationships across entire messages, enabling detection of sophisticated manipulation tactics that simpler algorithms miss. Research indicates that LSTM-based systems achieve 98% accuracy on benchmark datasets, though real-world performance varies with data quality, model training, and the sophistication of adversarial attacks.
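
The word-frequency idea behind Naive Bayes can be shown with a small from-scratch sketch. It assumes whitespace tokenization and add-one (Laplace) smoothing, and the class name `NaiveBayesSpamFilter` is hypothetical. Production filters use far richer features and much larger training sets.

```python
import math
from collections import Counter


class NaiveBayesSpamFilter:
    """Tiny multinomial Naive Bayes over lowercase whitespace tokens."""

    def __init__(self) -> None:
        self.word_counts = {"spam": Counter(), "ham": Counter()}
        self.doc_counts = {"spam": 0, "ham": 0}

    def train(self, text: str, label: str) -> None:
        """Record one labeled message; label must be 'spam' or 'ham'."""
        self.word_counts[label].update(text.lower().split())
        self.doc_counts[label] += 1

    def spam_probability(self, text: str) -> float:
        """Return P(spam | text) under the word-independence assumption."""
        if min(self.doc_counts.values()) == 0:
            raise ValueError("train on at least one spam and one ham message first")
        vocab = set(self.word_counts["spam"]) | set(self.word_counts["ham"])
        total_docs = sum(self.doc_counts.values())
        log_scores = {}
        for label in ("spam", "ham"):
            # Prior: fraction of training messages carrying this label.
            score = math.log(self.doc_counts[label] / total_docs)
            total_words = sum(self.word_counts[label].values())
            for word in text.lower().split():
                # Add-one smoothing keeps unseen words from zeroing the product.
                count = self.word_counts[label][word] + 1
                score += math.log(count / (total_words + len(vocab)))
            log_scores[label] = score
        # Normalize the two log scores into a probability.
        top = max(log_scores.values())
        weights = {k: math.exp(v - top) for k, v in log_scores.items()}
        return weights["spam"] / (weights["spam"] + weights["ham"])
```

A short usage example: after training on two spam messages ("win free money now", "free prize claim now") and two legitimate ones ("meeting agenda for monday", "lunch on monday"), `spam_probability("free money")` comes out above 0.5 and `spam_probability("monday meeting")` below it, because each phrase's words are more frequent in one class than the other.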

Manipulative content encompasses a broad spectrum of deceptive practices designed to deceive users, artificially inflate visibility, or damage brand reputation. Phishing attacks impersonate legitimate organizations to steal credentials or financial information, with AI-powered phishing increasing 466% in Q1 2025 as generative AI eliminates the grammatical errors that previously signaled malicious intent. Coordinated inauthentic behavior involves networks of fake accounts or bots amplifying messages, artificially inflating engagement metrics, and creating false impressions of popularity or consensus. Deepfakes use generative AI to create convincing but false images, videos, or audio recordings that can damage brand reputation or spread misinformation. Spam reviews artificially inflate or deflate product ratings, manipulate consumer perception, and undermine trust in review systems. Comment spam floods social media posts with irrelevant messages, promotional links, or malicious content designed to distract from legitimate discussion. Email spoofing forges sender addresses to impersonate trusted organizations, exploiting user trust to deliver malicious payloads or phishing content. Credential stuffing uses automated tools to test stolen username-password combinations across multiple platforms, compromising accounts and enabling further manipulation. Modern spam detection systems must identify these diverse manipulation tactics through behavioral analysis, network pattern recognition, and content authenticity verification—a challenge that intensifies as attackers employ increasingly sophisticated AI-powered techniques.

Different platforms implement spam detection with varying sophistication levels tailored to their specific threats and user bases. Gmail employs ensemble methods combining rule-based systems, Bayesian filtering, machine learning classifiers, and behavioral analysis, achieving 99.9% spam blocking before reaching inboxes while maintaining false positive rates below 0.1%. Gmail’s system analyzes over 100 million emails daily, continuously updating models based on user feedback (spam reports, marking as not spam) and emerging threat patterns. Microsoft Outlook implements multi-layered filtering including authentication verification, content analysis, sender reputation scoring, and machine learning models trained on billions of emails. Perplexity and other AI search platforms face unique challenges detecting manipulative content in AI-generated responses, requiring detection of prompt injection attacks, hallucinated citations, and coordinated attempts to artificially inflate brand mentions in AI outputs. ChatGPT and Claude implement content moderation systems that filter harmful requests, detect attempts to bypass safety guidelines, and identify manipulative prompts designed to generate misleading information. Social media platforms like Facebook and Instagram employ AI-powered comment filtering that automatically detects and removes hate speech, scams, bots, phishing attempts, and spam in comments across posts. AmICited, as an AI prompts monitoring platform, must distinguish legitimate brand citations from spam and manipulative content across these diverse AI systems, requiring sophisticated detection algorithms that understand context, intent, and authenticity across different platforms’ response formats.

Evaluating spam detection performance requires several metrics that capture different aspects of effectiveness. Accuracy measures the percentage of correct classifications (both true positives and true negatives), but it can mislead when spam and legitimate emails are imbalanced: a system that marks everything as legitimate still reaches 90% accuracy if spam represents only 10% of messages. Precision measures the percentage of messages flagged as spam that are actually spam, so low precision means more false positives, which damage user experience by blocking legitimate emails. Recall measures the percentage of actual spam that the system identifies, so low recall means more false negatives, where malicious content reaches users. The F1-score is the harmonic mean of precision and recall, giving a single metric for overall performance. In spam detection, precision is typically prioritized because false positives (legitimate emails marked as spam) are considered more harmful than false negatives (spam reaching inboxes): blocking legitimate business communications damages user trust more severely than occasional spam. Modern systems achieve 95-98% accuracy, 92-96% precision, and 90-95% recall on benchmark datasets, though real-world performance varies significantly with data quality, model training, and adversarial sophistication. False positive rates in enterprise email systems typically range from 0.1% to 0.5%, meaning that out of every 1,000 legitimate emails, roughly 1 to 5 are incorrectly filtered. Research from EmailWarmup reports an average inbox placement of 83.1% across major providers, which means about one in six emails misses the inbox, with 10.5% hitting spam folders and 6.4% vanishing completely, highlighting the ongoing challenge of balancing security with deliverability.
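
These metrics follow directly from the four cells of a confusion matrix. The sketch below computes them from raw counts; the function name `classification_metrics` and the sample counts in the usage note are illustrative assumptions, not measurements from any real filter.

```python
def classification_metrics(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Compute accuracy, precision, recall, and F1 from confusion-matrix counts.

    tp: spam correctly flagged       fp: legitimate mail wrongly flagged
    tn: legitimate mail delivered    fn: spam that reached the inbox
    """
    total = tp + fp + tn + fn
    if total == 0:
        raise ValueError("at least one message is required")
    accuracy = (tp + tn) / total
    # Guard against division by zero when nothing was flagged or no spam exists.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)) if (precision + recall) else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}
```

Calling `classification_metrics(tp=0, fp=0, tn=900, fn=100)` models the filter that marks everything as legitimate on a mailbox with 10% spam: accuracy is 0.9 while recall and F1 are both 0, which is why accuracy alone is a poor guide on imbalanced mail.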

The future of spam detection will be shaped by the escalating arms race between increasingly sophisticated attacks and more advanced defensive systems. AI-powered attacks are evolving rapidly—AI-generated phishing increased 466% in Q1 2025, eliminating the grammatical errors and awkward phrasing that previously signaled malicious intent. This evolution requires detection systems to employ equally sophisticated AI, moving beyond pattern matching toward understanding intent, context, and authenticity at deeper levels. Deepfake detection will become increasingly critical as generative AI enables creation of convincing but false images, videos, and audio—detection systems must analyze visual inconsistencies, sound artifacts, and behavioral anomalies that reveal synthetic origins. Behavioral biometrics will play a larger role, analyzing how users interact with content (typing patterns, mouse movements, engagement timing) to distinguish authentic users from bots or compromised accounts. Federated learning approaches will enable organizations to improve spam detection collaboratively without sharing sensitive data, addressing privacy concerns while leveraging collective intelligence. Real-time threat intelligence sharing will accelerate response to emerging threats, with platforms rapidly distributing information about new attack vectors and manipulation tactics. Regulatory frameworks like GDPR, CAN-SPAM, and emerging AI governance regulations will shape how spam detection systems operate, requiring transparency, explainability, and user control over filtering decisions. For platforms like AmICited monitoring brand mentions across AI systems, the challenge will intensify as attackers develop sophisticated techniques to manipulate AI responses, requiring continuous evolution of detection algorithms to distinguish genuine citations from coordinated manipulation attempts. 
The convergence of AI advancement, regulatory pressure, and adversarial sophistication suggests that future spam detection will require human-AI collaboration, where automated systems handle volume and pattern recognition while human experts address edge cases, novel threats, and ethical considerations that algorithms alone cannot resolve.

Spam detection specifically identifies unsolicited, repetitive, or manipulative messages using automated algorithms and pattern recognition, while content moderation is the broader practice of reviewing and managing user-generated content for policy violations, harmful material, and community standards. Spam detection focuses on volume, sender reputation, and message characteristics, whereas content moderation addresses context, intent, and compliance with platform policies. Both systems often work together in modern platforms to maintain user safety and experience quality.

Modern spam detection systems achieve 95-98% accuracy rates using advanced machine learning models like LSTM (Long Short-Term Memory) and ensemble methods combining multiple algorithms. However, accuracy varies by platform and implementation—Gmail reports 99.9% of spam blocked before reaching inboxes, while false positive rates (legitimate emails marked as spam) typically range from 0.1-0.5%. The challenge lies in balancing precision (avoiding false positives) against recall (catching all spam), as missing spam is often considered less harmful than blocking legitimate messages.

AI systems analyze patterns, context, and relationships that humans might miss, enabling detection of sophisticated manipulation tactics like coordinated inauthentic behavior, deepfakes, and AI-generated phishing. Machine learning models trained on millions of examples can identify subtle linguistic patterns, behavioral anomalies, and network structures indicative of manipulation. However, AI-powered attacks have also evolved—AI-generated phishing surged 466% in Q1 2025—requiring continuous model updates and adversarial testing to maintain effectiveness against emerging threats.

Spam filters balance precision (minimizing false positives where legitimate emails are blocked) against recall (catching all actual spam). Most systems prioritize precision because blocking legitimate emails damages user trust more severely than missing some spam. Bayesian filters learn from user feedback—when recipients mark filtered emails as 'not spam,' systems adjust thresholds. Enterprise systems often implement quarantine zones where suspicious emails are held for admin review rather than deleted, allowing recovery of legitimate messages while maintaining security.
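
The quarantine zone and the feedback loop can be sketched as two small functions. The threshold values, the 1% tolerance, and the names `route_message` and `adjust_threshold` are assumptions chosen for illustration; real systems tune these per organization and usually retrain models rather than nudging a single number.

```python
def route_message(spam_score: float, quarantine_at: float = 0.5, junk_at: float = 0.9) -> str:
    """Map a spam score in [0, 1] to 'inbox', 'quarantine', or 'junk'."""
    if not 0.0 <= spam_score <= 1.0:
        raise ValueError("spam_score must be between 0 and 1")
    if spam_score >= junk_at:
        return "junk"
    if spam_score >= quarantine_at:
        # Held for admin review instead of being deleted.
        return "quarantine"
    return "inbox"


def adjust_threshold(threshold: float, rescued: int, filtered: int,
                     tolerance: float = 0.01, step: float = 0.02) -> float:
    """Raise the threshold when too many filtered messages were marked 'not spam'."""
    if filtered == 0:
        return threshold
    rescued_share = rescued / filtered
    if rescued_share > tolerance:
        # Filtering was too aggressive, so require a higher score next time.
        return min(0.99, threshold + step)
    return threshold
```

Raising the threshold trades recall for precision: fewer legitimate messages are caught, at the cost of letting slightly more borderline spam through.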

Spam detection employs multiple complementary techniques: rule-based systems apply predefined criteria, Bayesian filtering uses statistical probability analysis, machine learning algorithms identify complex patterns, and real-time analysis inspects URLs and attachments dynamically. Content filters examine message text and formatting, header filters analyze routing information and authentication, reputation filters check sender history against blocklists, and behavioral filters monitor user engagement patterns. Modern systems layer these techniques simultaneously—a message might pass content checks but fail authentication, requiring comprehensive evaluation across all dimensions.
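
The case of a message that passes content checks but fails authentication can be sketched as a set of independent layers evaluated together. The `Message` fields, the toy phrase list, the blocklist entry, and the function names are all hypothetical and stand in for much more elaborate checks.

```python
from dataclasses import dataclass


@dataclass
class Message:
    sender_domain: str
    body: str
    auth_passed: bool  # combined SPF/DKIM/DMARC outcome, computed elsewhere


SUSPICIOUS_PHRASES = ("free money", "verify your account")  # toy content rules
BLOCKLIST = {"bad.example"}                                 # toy reputation data


def content_check(msg: Message) -> bool:
    """Pass when the body contains none of the suspicious phrases."""
    body = msg.body.lower()
    return not any(phrase in body for phrase in SUSPICIOUS_PHRASES)


def auth_check(msg: Message) -> bool:
    """Pass when sender authentication succeeded."""
    return msg.auth_passed


def reputation_check(msg: Message) -> bool:
    """Pass when the sending domain is not blocklisted."""
    return msg.sender_domain not in BLOCKLIST


LAYERS = {
    "content": content_check,
    "authentication": auth_check,
    "reputation": reputation_check,
}


def failed_layers(msg: Message) -> list:
    """Run every layer and return the names of the ones that failed."""
    return [name for name, check in LAYERS.items() if not check(msg)]
```

Running every layer rather than stopping at the first pass is the design choice here: `failed_layers(Message("shop.example", "Your order has shipped", auth_passed=False))` reports the authentication failure even though the content and reputation layers see nothing wrong.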

For AI monitoring platforms tracking brand mentions across ChatGPT, Perplexity, Google AI Overviews, and Claude, spam detection helps distinguish legitimate brand citations from manipulative content, fake reviews, and coordinated inauthentic behavior. Effective spam detection ensures that monitoring data reflects genuine user interactions rather than bot-generated noise or adversarial manipulation. This is critical for accurate brand reputation assessment, as spam and manipulative content can artificially inflate or deflate brand visibility metrics, leading to incorrect strategic decisions.

False positives in spam detection create significant business and user experience costs: legitimate marketing emails fail to reach customers, reducing conversion rates and revenue; important transactional messages (password resets, order confirmations) may be missed, causing user frustration; and sender reputation suffers as complaint rates increase. Studies report an average inbox placement of 83.1%, which means about one in six emails misses the inbox, with false positives contributing substantially to this loss. For enterprises, even a 1% false positive rate works out to roughly 10,000 misfiled legitimate messages per million sent, each a potential missed business opportunity or damaged customer relationship.
