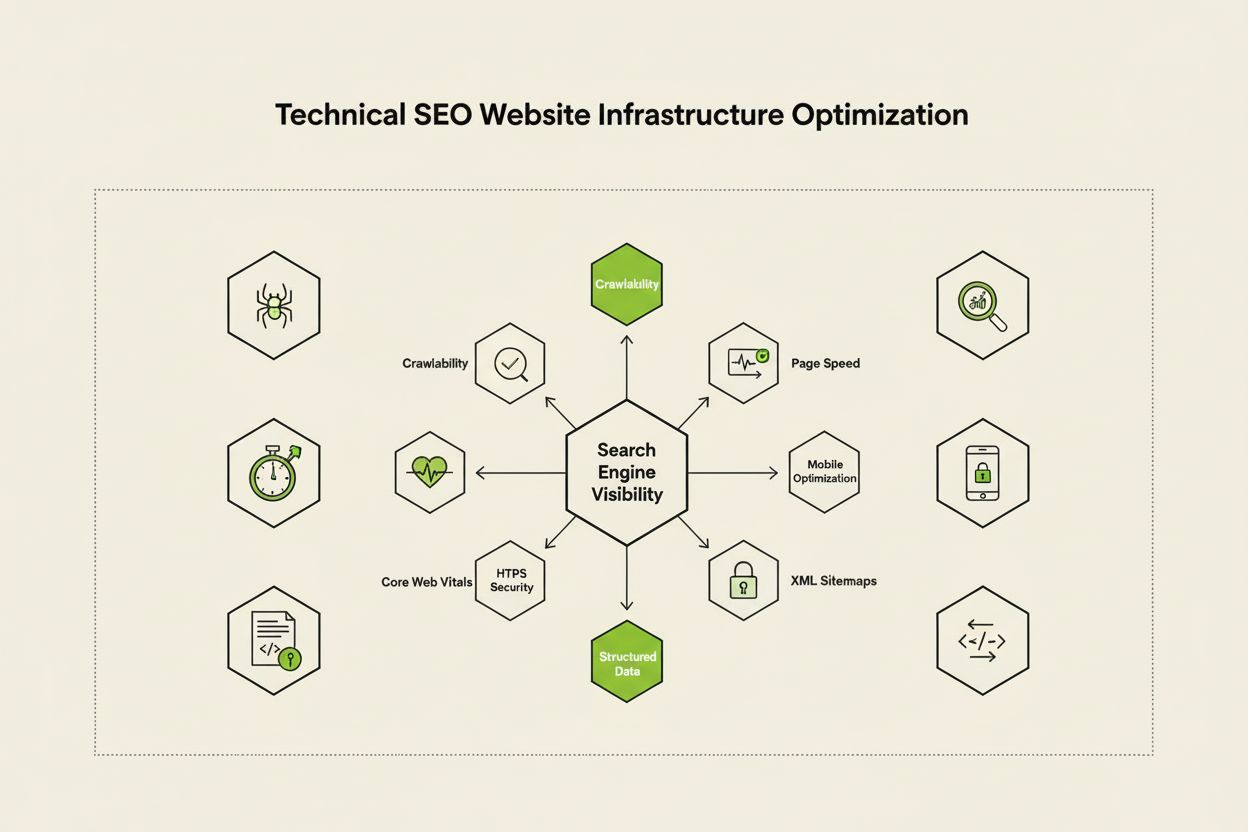

Technical SEO is the process of optimizing a website’s infrastructure so search engines can crawl, render, index, and serve content correctly and efficiently. It encompasses website speed, mobile-friendliness, site architecture, security, and structured data implementation to ensure search engines can discover and rank your pages.

Unlike on-page SEO, which focuses on content quality and keyword optimization, technical SEO addresses the foundational elements that determine whether search engines can access and understand a website at all: site speed, mobile responsiveness, site architecture, security protocols, structured data, and crawlability. It is the invisible foundation that every other SEO effort depends on. If search engines cannot reach, render, or index a page, even the highest-quality content on it stays invisible to them and to the users searching for it. According to industry research, 91% of marketers reported that SEO improved website performance in 2024, with technical optimization playing a critical role in those results. Technical SEO has also grown more important as search engines become more sophisticated and AI-driven search platforms emerge, since both raise the technical bar a site must clear to be visible.

Technical SEO rests on four interconnected pillars. Crawlability determines whether Googlebot and other search engine crawlers can reach your pages through links, sitemaps, and internal navigation. Indexability determines whether crawled pages are actually stored in Google’s index and eligible to appear in search results. Performance, measured by Core Web Vitals, captures how quickly pages load and how quickly they respond to user interactions, which affects both rankings and user experience. Mobile optimization ensures your site works well on smartphones and tablets, which account for over 60% of all search traffic according to Sistrix research. The pillars are interdependent, so weakness in one can undermine the others: a fast desktop site that performs poorly on mobile will struggle to rank under Google’s mobile-first indexing, however crawlable it is. A comprehensive technical SEO strategy therefore has to address all four.

Crawlability and indexability are often confused, but they are two distinct stages in the search engine pipeline. Crawlability is a search engine’s ability to discover and fetch your pages by following internal links, external backlinks, and XML sitemaps. It answers the question: “Can Googlebot reach this page?” A page that isn’t crawlable, for example because robots.txt blocks it, because it is reachable only through JavaScript-driven navigation, or because it is orphaned with no internal links, never reaches the indexing stage. Indexability, by contrast, determines whether a crawled page is stored in Google’s index and eligible to appear in search results. A page can be perfectly crawlable and still not be indexed if it carries a noindex directive, duplicates another page, or falls below Google’s quality thresholds. According to Search Engine Land’s technical SEO guide, the distinction matters because the two kinds of problems have different fixes: crawlability problems typically involve site structure, robots.txt configuration, and internal linking, while indexability problems usually involve meta robots tags, canonical tags, content quality, and rendering. Both must be solved for a page to appear in search.
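The two stages can be sketched as a small Python check. This is a minimal illustration, assuming you already have the robots.txt text, the raw HTML, and the response headers for a URL; the function name check_page and its return labels are hypothetical. It uses the standard library's urllib.robotparser, which applies the first matching rule rather than Google's most-specific-match rule, so treat its verdict as an approximation for files that mix overlapping Allow and Disallow lines.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class RobotsMetaParser(HTMLParser):
    """Collects a noindex directive and the canonical URL from raw HTML."""

    def __init__(self):
        super().__init__()
        self.noindex = False
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        a = {name: (value or "") for name, value in attrs}
        if tag == "meta" and a.get("name", "").lower() in ("robots", "googlebot"):
            if "noindex" in a.get("content", "").lower():
                self.noindex = True
        elif tag == "link" and a.get("rel", "").lower() == "canonical":
            self.canonical = a.get("href")


def check_page(url, robots_txt, html, headers=None, user_agent="Googlebot"):
    """Classify a URL as 'blocked', 'noindex', 'canonicalized', or 'indexable'."""
    robots = RobotFileParser()
    robots.parse(robots_txt.splitlines())
    # Stage 1, crawlability: a disallowed URL is never fetched, so its tags are never read.
    if not robots.can_fetch(user_agent, url):
        return "blocked"
    # Stage 2, indexability: noindex in a meta robots tag or an X-Robots-Tag header.
    meta = RobotsMetaParser()
    meta.feed(html)
    # Header lookup is case-sensitive here; real HTTP header names are case-insensitive.
    header = (headers or {}).get("X-Robots-Tag", "").lower()
    if meta.noindex or "noindex" in header:
        return "noindex"
    # A canonical pointing elsewhere asks Google to index that other URL instead.
    if meta.canonical and meta.canonical != url:
        return "canonicalized"
    return "indexable"
```

The order of the checks matters: a URL blocked by robots.txt is reported as blocked even if its HTML contains noindex, because a crawler that obeys robots.txt never fetches the page and never sees the tag.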

Core Web Vitals are three metrics Google uses to measure real-world user experience, and they feed into its page experience ranking signals. Largest Contentful Paint (LCP) measures how long the largest image or text block in the viewport takes to render; Google recommends 2.5 seconds or less. Interaction to Next Paint (INP), which replaced First Input Delay as a Core Web Vital in March 2024, measures how quickly a page responds to interactions such as clicks, taps, and key presses throughout the visit; the target is 200 milliseconds or less. Cumulative Layout Shift (CLS) measures visual stability by tracking unexpected layout shifts; a score of 0.1 or less is considered good. Google assesses each metric at the 75th percentile of page loads, so a page passes only when at least 75% of visits have a “Good” experience on all three metrics, which is the threshold DebugBear identifies for receiving the maximum ranking benefit. Because it covers every interaction rather than only the first, INP reflects Google’s focus on responsiveness throughout a visit, not just during the initial load. Each metric calls for different fixes: improving LCP involves optimizing images, using CDNs, and deferring non-critical JavaScript; improving INP requires breaking up long JavaScript tasks and optimizing event handlers; and reducing CLS involves reserving space for images, ads, and other dynamic content. Poor scores can hold pages back against otherwise comparable competitors and tend to go along with higher bounce rates, which is why Core Web Vitals matter for competitive SEO.
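Because Google evaluates each Core Web Vital at the 75th percentile, the pass/fail logic can be sketched in Python from a list of field measurements. This is a simplified illustration, not how the Chrome UX Report computes its numbers: it assumes raw per-visit samples (LCP and INP in milliseconds, CLS unitless) and a nearest-rank percentile, and the function names are hypothetical. The thresholds are Google's published good/poor boundaries.

```python
import math

# Google's published boundaries: (good if <=, poor if >). LCP and INP in ms; CLS is unitless.
THRESHOLDS = {
    "LCP": (2500, 4000),
    "INP": (200, 500),
    "CLS": (0.1, 0.25),
}


def p75(samples):
    """75th percentile using the nearest-rank method."""
    ordered = sorted(samples)
    rank = math.ceil(0.75 * len(ordered))
    return ordered[rank - 1]


def rate(metric, samples):
    good, poor = THRESHOLDS[metric]
    value = p75(samples)
    if value <= good:
        return value, "good"
    if value <= poor:
        return value, "needs improvement"
    return value, "poor"


def assess(field_data):
    """Rate each metric; the page passes only if all three are 'good' at p75."""
    ratings = {metric: rate(metric, samples) for metric, samples in field_data.items()}
    passed = all(label == "good" for _, label in ratings.values())
    return ratings, passed
```

For LCP samples of 1800, 2100, 2400, and 3900 ms, the p75 value is 2400 ms, so the page is rated good even though one visit took 3.9 seconds: only the 75th-percentile value is compared with the threshold.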

Site architecture refers to how your website’s pages are organized, structured, and connected through internal links. A well-designed architecture serves several functions: it helps search engines understand your content hierarchy, distributes link equity (ranking power) throughout the site, keeps important pages easy to discover, and improves user navigation. The ideal structure is a clear hierarchy, with the homepage at the top, category pages in the second tier, and individual content pages below them. A common guideline is that every important page should be reachable within three clicks of the homepage, so that Googlebot does not have to spend crawl budget working its way down to deep, isolated pages. Internal links are the connective tissue of this structure, guiding both users and search engines through your content. Strategic internal linking consolidates ranking signals on priority pages, establishes topical relationships between related content, and tells search engines which pages matter most. In a hub-and-spoke model, for example, a comprehensive pillar page links to several related subtopic pages, which link back to the pillar; this structure signals topical authority to search engines. Poor architecture, marked by orphaned pages, inconsistent navigation, and deep nesting, makes search engines spend crawl budget on less important content and makes topical authority harder to establish. Restructuring a poorly organized site can noticeably speed up indexation and improve rankings.
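Click depth can be measured with a breadth-first search over the internal link graph, which also exposes orphaned pages. The sketch below assumes you have already crawled the site into a mapping from each URL path to the paths it links to, plus a list of all known pages (for example, from your sitemap or CMS); the function names and the max_depth=3 default, taken from the three-click guideline, are illustrative.

```python
from collections import deque


def click_depths(links, home):
    """Breadth-first search from the homepage: fewest clicks needed to reach each page."""
    depth = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:  # first visit in BFS order is the shortest path
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


def audit_architecture(links, home, all_pages, max_depth=3):
    """Return (pages deeper than max_depth, pages unreachable from the homepage)."""
    depth = click_depths(links, home)
    too_deep = sorted(page for page, d in depth.items() if d > max_depth)
    orphans = sorted(page for page in all_pages if page not in depth)
    return too_deep, orphans
```

With links from / to /blog and /shop, and a chain /blog to /blog/a to /blog/a/b to /blog/a/b/c, the last page sits four clicks deep and is flagged; a page such as /old-landing that appears in the sitemap but in no internal link is reported as an orphan.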

Mobile-first indexing means Google primarily uses the mobile version of your website, not the desktop version, for crawling, indexing, and ranking. The shift reflects the fact that over 60% of searches now originate from mobile devices. For technical SEO, this means the mobile experience has to be solid: responsive design that adapts to all screen sizes, touch-friendly navigation with adequate spacing between tap targets, text that is readable without zooming, and fast loading on mobile networks. It also means that content, links, and structured data present on desktop but missing from the mobile version may not be indexed at all. Responsive design uses fluid layouts and flexible images to adjust automatically to different screen sizes, keeping functionality consistent across devices. Common mobile mistakes include intrusive interstitials (pop-ups) that cover the main content, text too small to read without zooming, buttons too close together to tap accurately, and pages that load slowly on mobile networks. Google retired its Mobile-Friendly Test tool in December 2023; Lighthouse audits can still identify these issues, but testing on real mobile devices remains essential. Because Google ranks the mobile version of each page, a poor mobile experience directly limits visibility, while well-optimized mobile pages are better positioned to rank. For e-commerce sites, SaaS platforms, and content publishers, mobile optimization is fundamental to competitive search visibility, not optional.
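Responsive design starts with a viewport meta tag: without width=device-width, mobile browsers lay the page out at a desktop width and shrink it, which produces exactly the tiny-text problem described above. The hypothetical helper below is a minimal Python sketch that checks that tag in a page's HTML. Its zoom check is modeled, as an assumption, on the Lighthouse accessibility audit that flags user-scalable=no or a maximum-scale below 5; it does not replace testing on real devices.

```python
from html.parser import HTMLParser


class ViewportParser(HTMLParser):
    """Captures the content attribute of <meta name="viewport">."""

    def __init__(self):
        super().__init__()
        self.content = None

    def handle_starttag(self, tag, attrs):
        a = {name: (value or "") for name, value in attrs}
        if tag == "meta" and a.get("name", "").lower() == "viewport":
            self.content = a.get("content", "")


def viewport_issues(html):
    parser = ViewportParser()
    parser.feed(html)
    if parser.content is None:
        return ["missing viewport meta tag"]
    # Parse "width=device-width, initial-scale=1" into {"width": "device-width", ...}.
    settings = {}
    for part in parser.content.split(","):
        if "=" in part:
            key, value = part.split("=", 1)
            settings[key.strip().lower()] = value.strip().lower()
    issues = []
    if settings.get("width") != "device-width":
        issues.append("width is not device-width")
    try:
        max_scale = float(settings.get("maximum-scale", "5"))
    except ValueError:
        max_scale = 5.0  # unparseable values are ignored in this sketch
    if settings.get("user-scalable") in ("no", "0") or max_scale < 5:
        issues.append("pinch-zoom is disabled or restricted")
    return issues
```

A tag of the form content="width=device-width, initial-scale=1" produces no issues; a fixed width such as width=1024 is flagged.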

XML sitemaps act as a roadmap for search engines, listing the URLs you want indexed along with optional metadata such as last-modified dates. Google uses the lastmod value when it is consistently accurate and ignores the priority and changefreq fields. A well-maintained sitemap should include only canonical, indexable URLs, excluding redirects, 404 pages, and duplicate content. Robots.txt is a text file in your site’s root directory that gives crawling instructions to search engine bots, specifying which paths they may and may not fetch. Robots.txt controls crawling, not indexing: a blocked URL can still be indexed, usually without a description, if other pages link to it. To keep a page out of the index, use a noindex meta tag or X-Robots-Tag header instead, and leave the page crawlable so search engines can actually see the directive. Crawl budget is the number of URLs Googlebot can and wants to crawl on your site within a given timeframe, a finite resource that must be managed deliberately. Sites with millions of pages need Googlebot to focus on high-value content rather than on low-priority pages, duplicate content, or faceted navigation variations. Common sources of waste include URL parameters that generate endless variations, staging or development environments exposed to crawlers, and thin pages that receive excessive internal links. According to Google’s official guidance, managing crawl budget matters mainly for large sites, where inefficient crawling can delay the discovery and indexing of new or updated content. Google Search Console’s Crawl Stats report and server log analysis show which pages Googlebot is visiting and how often, which makes crawl budget optimization data-driven.
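A minimal sitemap generator in Python, assuming your CMS or crawler can report each URL's status code, noindex flag, canonical URL, and last-modified date (the page dictionary shape and the build_sitemap name are hypothetical). It writes only canonical, indexable URLs and omits priority and changefreq, which Google ignores; lastmod is included because Google uses it when it is consistently accurate.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """pages: dicts with url, status, noindex, canonical, and lastmod (YYYY-MM-DD)."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page in pages:
        # Skip redirects and errors, noindexed pages, and non-canonical duplicates.
        if page["status"] != 200 or page["noindex"] or page["canonical"] != page["url"]:
            continue
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = page["url"]
        ET.SubElement(entry, "lastmod").text = page["lastmod"]
    return ET.tostring(urlset, encoding="unicode")
```

ElementTree escapes characters such as & in URLs automatically. Under the sitemaps.org protocol a single sitemap file may hold at most 50,000 URLs and 50 MB uncompressed, so larger sites split their URLs across several files listed in a sitemap index.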

Structured data, implemented through schema markup, helps search engines understand the meaning and context of your content beyond what plain text analysis reveals. Schema markup uses a standardized vocabulary from schema.org to label content types such as products, articles, recipes, events, and local businesses. When properly implemented, it makes pages eligible for rich results, where Google displays enhanced information like star ratings, prices, cooking times, or event dates directly in search results. This added visibility can significantly improve click-through rates and engagement. Different content types call for different schema types: Article for blog posts and news, Product for e-commerce pages, FAQPage for frequently asked questions, LocalBusiness for brick-and-mortar locations, and Event for upcoming events. Since August 2023, however, Google shows FAQ rich results only for well-known, authoritative government and health websites. Implementation typically uses JSON-LD, the format Google recommends. Schema markup must accurately reflect the visible content of the page; misleading or fabricated markup can lead to a manual action. According to Search Engine Land’s research, only pages with valid, relevant schema markup qualify for rich results, and even valid markup does not guarantee that they will appear, so implementation accuracy is critical. Organizations that implement comprehensive schema markup across their most important pages often see improved visibility in both traditional search results and AI-driven search platforms, which can use structured data to understand and cite content accurately.
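JSON-LD markup is plain JSON inside a script element, so it can be generated from your CMS fields. The Python sketch below builds schema.org Article markup; the function name and sample values are hypothetical, and the properties shown (headline, author, datePublished, dateModified, image) are a common subset rather than a complete list. Validate the output with Google's Rich Results Test before deploying it.

```python
import json


def article_jsonld(headline, author, published, modified, image_url):
    """Return a <script> element containing schema.org Article markup as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": published,  # ISO 8601 date or date-time
        "dateModified": modified,
        "image": [image_url],
    }
    body = json.dumps(data, ensure_ascii=False, indent=2)
    # "<\/" is a valid JSON escape for "</", so a value can never close the script element early.
    body = body.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'
```

Generating the markup from the same fields that render the visible page helps keep the two in sync, which is what Google's requirement that markup reflect on-page content asks for.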

HTTPS (Hypertext Transfer Protocol Secure) encrypts data transmitted between users’ browsers and your web server, protecting sensitive information and signaling trustworthiness to both users and search engines. Google has confirmed that HTTPS is a ranking signal, albeit a lightweight one; sites without it also trigger “Not secure” warnings in Chrome that discourage users from engaging. Implementing HTTPS requires obtaining an SSL/TLS certificate from a trusted Certificate Authority, configuring your server to serve every page over HTTPS, and permanently (301) redirecting HTTP URLs to their HTTPS equivalents. Beyond basic HTTPS, security headers such as Content-Security-Policy, Strict-Transport-Security, and X-Content-Type-Options provide additional protection against common web vulnerabilities. Mixed content errors, where an HTTPS page loads resources over HTTP, undermine security; browsers block or upgrade many such requests and may flag the page as not fully secure. Security also affects SEO indirectly: secure sites build user trust, which can reduce bounce rates and improve conversion rates. For e-commerce sites handling payments, SaaS platforms storing user data, and any site collecting personal information, security is non-negotiable. Regular audits using Google Search Console’s Security Issues report and third-party vulnerability scanners help find and fix security problems before they affect rankings or user trust.
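A quick audit of the points above can be sketched in Python: it checks that the page URL uses HTTPS, that the three security headers named above are present, and that no subresources (scripts, images, iframes, media, stylesheets) load over plain HTTP. It assumes you have already fetched the response headers and HTML; the function name is hypothetical, it only checks that the headers exist rather than whether their values are sensible, and ordinary links to http:// pages are deliberately ignored because navigation links are not mixed content.

```python
from html.parser import HTMLParser

SECURITY_HEADERS = ("Strict-Transport-Security", "Content-Security-Policy", "X-Content-Type-Options")


class SubresourceParser(HTMLParser):
    """Collects subresource URLs that would be fetched over plain HTTP."""

    def __init__(self):
        super().__init__()
        self.insecure = []

    def handle_starttag(self, tag, attrs):
        a = {name: (value or "") for name, value in attrs}
        if tag == "link" and "stylesheet" in a.get("rel", "").lower():
            url = a.get("href", "")
        elif tag in ("script", "img", "iframe", "audio", "video", "source"):
            url = a.get("src", "")
        else:
            return  # <a href> and other navigation links are not mixed content
        if url.lower().startswith("http://"):
            self.insecure.append(url)


def security_audit(page_url, headers, html):
    findings = []
    if not page_url.lower().startswith("https://"):
        findings.append("page is not served over HTTPS")
    present = {name.lower() for name in headers}  # header names are case-insensitive
    for name in SECURITY_HEADERS:
        if name.lower() not in present:
            findings.append(f"missing header: {name}")
    parser = SubresourceParser()
    parser.feed(html)
    findings.extend(f"mixed content: {url}" for url in parser.insecure)
    return findings
```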

| Aspect | Technical SEO | On-Page SEO | Off-Page SEO |
|---|---|---|---|
| Primary Focus | Website infrastructure, speed, crawlability, indexability | Content quality, keywords, meta tags, headings | Backlinks, brand mentions, social signals |
| Search Engine Access | Ensures search engines can crawl and index pages | Helps search engines understand page relevance | Builds authority and trust signals |
| Key Elements | Site speed, mobile optimization, Core Web Vitals, site architecture, HTTPS, structured data | Keyword placement, content depth, internal linking, meta descriptions | Backlink profile, domain authority, brand mentions |
| Tools Used | PageSpeed Insights, Google Search Console, Screaming Frog, Lighthouse | Yoast SEO, Surfer SEO, Content Genius | Ahrefs, Semrush, Moz, Majestic |
| Impact on Rankings | Foundational: without it, pages can’t rank at all | Direct: improves relevance for target keywords | Significant: builds authority and trust |
| User Experience Impact | High: affects page speed, mobile usability, accessibility | Medium: affects readability and engagement | Low: indirect, through brand perception |
| Implementation Timeline | Ongoing: requires continuous monitoring and optimization | Ongoing: requires content updates and optimization | Long-term: requires sustained link-building efforts |
| ROI Measurement | Crawl efficiency, indexation rate, Core Web Vitals scores, rankings | Keyword rankings, organic traffic, click-through rates | Backlink growth, domain authority, referral traffic |

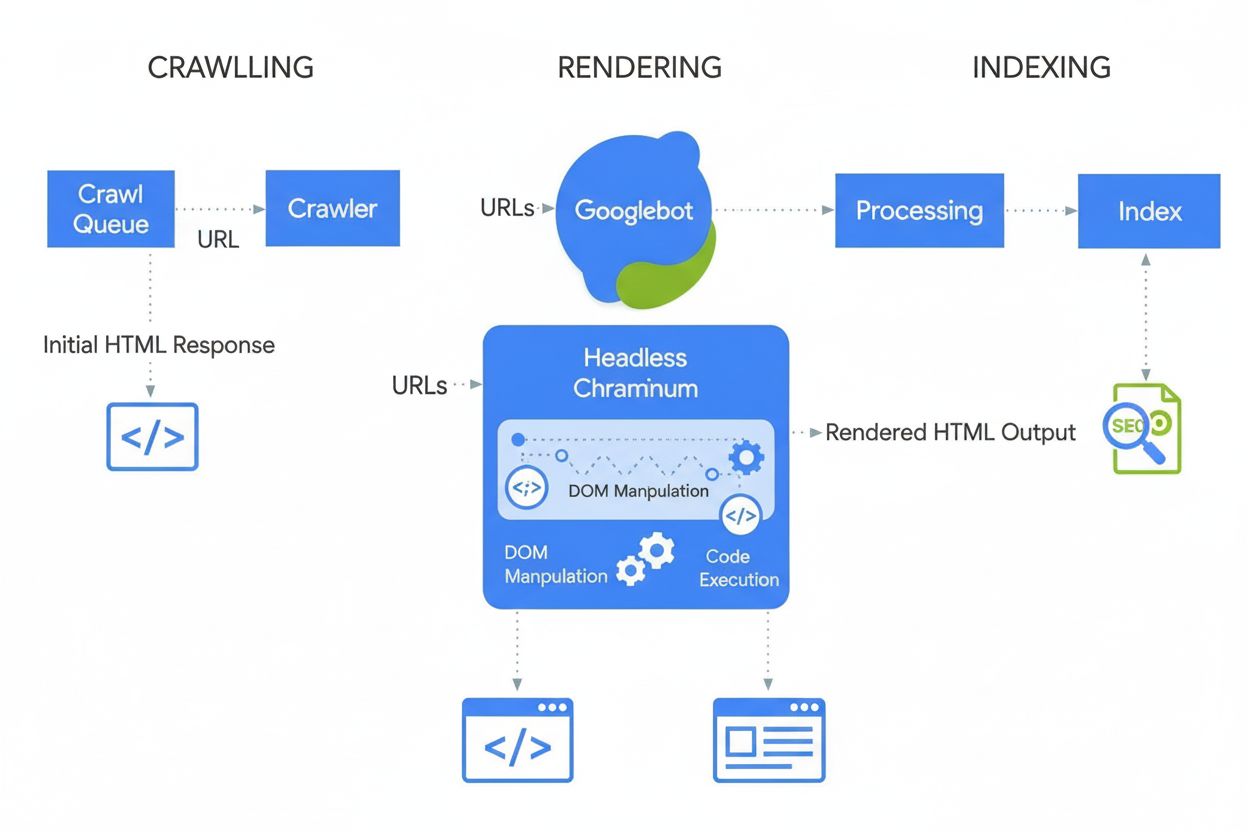

JavaScript SEO addresses the challenges posed by JavaScript-heavy websites, single-page applications (SPAs), and dynamically rendered content. Historically, Googlebot struggled with JavaScript because indexing it required rendering, that is, executing the JavaScript to build the final page, which added complexity and delay to crawling. Googlebot now renders pages with an up-to-date (evergreen) version of Chromium, but rendering can be queued behind the initial crawl, which introduces its own technical SEO considerations. Server-side rendering (SSR) generates complete HTML on the server before sending it to the browser, so search engines see all content immediately without waiting for JavaScript to run. Static site generation (SSG) pre-renders pages at build time into static HTML files that load quickly. Dynamic rendering serves pre-rendered HTML to search engine bots and the JavaScript version to users, but Google describes it as a workaround rather than a recommended long-term solution. The guiding principle is that essential content, meta tags, and structured data should be present in the initial HTML rather than added only after JavaScript runs; pages that rely on JavaScript for critical content risk incomplete or delayed indexing. Sites built with React, Vue, or Angular need particular attention, which is why many teams adopt frameworks such as Next.js (for React) or Nuxt.js (for Vue) to handle server-side rendering. To see how Googlebot renders a page, run a live test in Google Search Console’s URL Inspection tool, which shows the rendered HTML and a screenshot and reveals whether JavaScript-dependent content is being picked up.
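One way to apply this principle is to inspect the HTML the server returns before any JavaScript runs. Python's html.parser does not execute scripts, so the sketch below sees roughly what a non-rendering crawler sees. The helper name, the list of required elements, and the 200-character body-text threshold are illustrative assumptions; compare the result with the rendered HTML from the URL Inspection tool rather than treating it as a verdict.

```python
from html.parser import HTMLParser

REQUIRED = ("title", "meta description", "canonical", "structured data")


class InitialHTMLParser(HTMLParser):
    """Records SEO-critical elements and visible text present before JavaScript runs."""

    def __init__(self):
        super().__init__()
        self.found = set()
        self.text_chars = 0
        self._in_title = False
        self._in_script_or_style = 0  # script/style text is not visible page content

    def handle_starttag(self, tag, attrs):
        a = {name: (value or "") for name, value in attrs}
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and a.get("name", "").lower() == "description":
            self.found.add("meta description")
        elif tag == "link" and a.get("rel", "").lower() == "canonical":
            self.found.add("canonical")
        elif tag == "script" and a.get("type", "").lower() == "application/ld+json":
            self.found.add("structured data")
        if tag in ("script", "style"):
            self._in_script_or_style += 1

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False
        elif tag in ("script", "style") and self._in_script_or_style:
            self._in_script_or_style -= 1

    def handle_data(self, data):
        if self._in_title:
            if data.strip():
                self.found.add("title")
        elif not self._in_script_or_style:
            self.text_chars += len(data.strip())


def missing_from_initial_html(html, min_text_chars=200):
    parser = InitialHTMLParser()
    parser.feed(html)
    missing = [item for item in REQUIRED if item not in parser.found]
    if parser.text_chars < min_text_chars:
        missing.append("body content")
    return missing
```

A typical client-rendered shell, with only a title, a script bundle, and an empty root div, reports everything except the title as missing; the same page rendered on the server reports nothing missing.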

Implementing technical SEO effectively requires a systematic approach that prioritizes high-impact work. Start with the foundations: confirm your site is crawlable by checking robots.txt and internal linking, verify that important pages are indexable by checking for noindex tags and canonical issues, and record a Core Web Vitals baseline. Next, address performance: compress and optimize images, lazy-load below-the-fold images (but not the main above-the-fold image, which is often the LCP element), defer non-critical JavaScript, and serve assets from a Content Delivery Network (CDN). Then optimize site structure: keep important pages within three clicks of the homepage, add breadcrumb navigation with matching schema markup, and create clear internal linking patterns that guide both users and search engines. Add structured data for your most important content types, such as products, articles, local business information, or events, using JSON-LD, and validate it with Google’s Rich Results Test. Monitor continuously with Google Search Console for indexation issues, PageSpeed Insights for Core Web Vitals, and server logs for crawl patterns. Establish accountability by tracking key metrics such as indexed page count, Core Web Vitals scores, and crawl efficiency over time. Organizations that treat technical SEO as an ongoing process rather than a one-time audit tend to sustain stronger search visibility and user experience than those that audit once and stop.
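Two of the performance steps above, lazy loading and reserving space for images, can be audited straight from a page's HTML. This Python sketch assumes that the first above_fold_count images are the ones visible without scrolling, a crude stand-in for real layout measurement; the helper names are hypothetical. It also ignores CSS-based sizing such as aspect-ratio, so an image flagged for missing width and height may already have its space reserved in CSS.

```python
from html.parser import HTMLParser


class ImageCollector(HTMLParser):
    """Collects the attributes of every <img> element in document order."""

    def __init__(self):
        super().__init__()
        self.images = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            self.images.append({name: (value or "") for name, value in attrs})


def image_issues(html, above_fold_count=1):
    """Flag images that risk layout shift or are loaded with the wrong priority."""
    collector = ImageCollector()
    collector.feed(html)
    issues = []
    for index, img in enumerate(collector.images):
        src = img.get("src", "?")
        lazy = img.get("loading", "").lower() == "lazy"
        if "width" not in img or "height" not in img:
            issues.append(f"{src}: no width/height, so no space is reserved (CLS risk)")
        if index < above_fold_count and lazy:
            # Lazy-loading a likely LCP image delays the main content.
            issues.append(f"{src}: above-the-fold image is lazy-loaded (LCP risk)")
        elif index >= above_fold_count and not lazy:
            issues.append(f"{src}: below-the-fold image is not lazy-loaded")
    return issues
```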

As AI-driven search platforms like Google AI Overviews, Perplexity, ChatGPT, and Claude become more prominent, technical SEO matters beyond traditional Google search. These systems generate answers and citations from content that has been crawled and indexed, whether by a search engine such as Google or by the platform’s own crawlers, and they favor well-structured content. Technical SEO helps AI systems discover and correctly interpret your content in several ways: proper indexing makes content available for AI retrieval and training, structured data helps AI systems understand its context and meaning, and semantic richness helps them recognize it as authoritative and relevant. According to research from Conductor and Botify, pages that appear in AI Overviews typically come from well-indexed, technically sound websites with strong semantic signals. The two paradigms differ: traditional SEO focuses on ranking for specific keywords, while AI search assembles comprehensive answers that may cite multiple sources. Technical SEO therefore has to support both, keeping pages indexed for traditional search while making them semantically rich enough for AI systems to recognize and cite. Organizations that monitor their visibility across AI platforms with tools like AmICited can identify which technical optimizations most improve citation rates and visibility in generative search results.
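Being discoverable also means not blocking the crawlers these platforms use. Several operators publish user-agent tokens for robots.txt: OpenAI uses GPTBot and OAI-SearchBot, Perplexity uses PerplexityBot, and Anthropic uses ClaudeBot. Google's AI Overviews draw on the regular Search index, so Googlebot access is what matters there. The Python sketch below, with a hypothetical function name, reports which of these tokens a robots.txt allows for a given URL. Check each operator's documentation for current tokens, since the list changes, and note that urllib.robotparser uses first-match rather than Google's most-specific-match rule.

```python
from urllib.robotparser import RobotFileParser

# Published robots.txt tokens; verify against each operator's current documentation.
AI_CRAWLERS = ("GPTBot", "OAI-SearchBot", "PerplexityBot", "ClaudeBot")


def ai_crawler_access(robots_txt, url):
    """Map each AI crawler token to whether robots.txt lets it fetch the URL."""
    robots = RobotFileParser()
    robots.parse(robots_txt.splitlines())
    return {agent: robots.can_fetch(agent, url) for agent in AI_CRAWLERS}
```

A robots.txt with a "User-agent: GPTBot / Disallow: /" group blocks only that token; the other crawlers fall through to the catch-all group.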

Effective technical SEO requires tracking specific metrics that indicate website health and search engine accessibility. Indexed page count shows how many of your intended pages are actually stored in Google’s index; dividing it by your total number of indexable pages gives your index efficiency ratio. Crawl efficiency measures how effectively Googlebot uses its crawl budget, calculated by dividing the number of pages crawled by the total number of pages on your site. Core Web Vitals scores indicate user experience quality across loading speed (LCP), responsiveness (INP), and visual stability (CLS). Mobile usability problems such as small tap targets, viewport configuration errors, or intrusive interstitials can be caught with Lighthouse, since Google Search Console retired its Mobile Usability report in December 2023. Redirect chains should be kept short to preserve link equity and reduce crawl delays. Structured data coverage shows what percentage of your pages implement valid schema markup. Lab metrics such as First Contentful Paint (FCP) and Total Blocking Time (TBT) provide more detailed performance insight; Time to Interactive (TTI), once reported alongside them, was dropped from Lighthouse reports in version 10. Organizations should establish baseline measurements for these metrics, set improvement targets, and track progress monthly or quarterly. Sudden changes often signal technical problems before they affect rankings, leaving time to fix them. Google Search Console, PageSpeed Insights, Lighthouse, and enterprise platforms such as Semrush provide dashboards for monitoring these metrics across an entire website.
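Crawl efficiency and the index efficiency ratio are simple ratios, but the crawl side needs server logs. The Python sketch below assumes access logs in the common Combined Log Format, a list of your site's known page paths, and indexed and indexable counts taken from Google Search Console; all function names are hypothetical. It trusts the user-agent string, which anyone can spoof. Google recommends verifying Googlebot with a reverse DNS lookup, a step this sketch skips.

```python
import re

# Combined Log Format request section: "GET /path HTTP/1.1" status bytes "referer" "user-agent"
LOG_LINE = re.compile(r'"(?:GET|HEAD) (?P<path>\S+) HTTP/[\d.]+" \d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"')


def googlebot_paths(log_lines):
    """Unique request paths from clients whose user agent claims to be Googlebot."""
    paths = set()
    for line in log_lines:
        match = LOG_LINE.search(line)
        if match and "Googlebot" in match.group("agent"):
            paths.add(match.group("path"))
    return paths


def crawl_metrics(log_lines, site_pages, indexed_count, indexable_count):
    crawled = googlebot_paths(log_lines)
    known = set(site_pages)
    return {
        # Share of the site's pages that Googlebot fetched at least once in the log window.
        "crawl_efficiency": len(crawled & known) / len(known),
        # Share of indexable pages that Google reports as indexed.
        "index_efficiency": indexed_count / indexable_count,
        # Crawled paths that are not known pages: parameter variants, old URLs, and so on.
        "unlisted_crawls": sorted(crawled - known),
    }
```

The unlisted_crawls list is often the most actionable output, since it shows where crawl budget goes to URLs you never meant to expose, such as search or filter parameters.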

The technical SEO landscape continues to evolve in response to search engine algorithm changes, emerging technologies, and shifting user behaviors. AI-driven indexing and ranking will increasingly influence which pages get indexed and how they’re ranked, requiring technical SEO to support semantic understanding and entity recognition. Edge computing and serverless architectures enable faster content delivery and real-time optimization at the network edge, reducing latency and improving Core Web Vitals. Increased focus on E-E-A-T signals (Experience, Expertise, Authoritativeness, Trustworthiness) means technical SEO must support content credibility through proper author markup, publication dates, and trust signals. Multi-surface optimization will become standard practice as AI search platforms proliferate, requiring websites to optimize for visibility across Google, Perplexity, ChatGPT, Claude, and other emerging platforms. Privacy-first analytics and first-party data collection will reshape how organizations measure technical SEO impact, moving away from third-party cookies toward server-side tracking and consent-based measurement. JavaScript framework maturation will continue improving SEO capabilities, with frameworks like Next.js, Nuxt.js, and Remix becoming standard for building SEO-friendly applications. Automated technical SEO powered by AI will enable faster issue detection and remediation, with platforms automatically identifying and suggesting fixes for crawlability, indexability, and performance problems. Organizations that stay ahead of these trends by continuously updating their technical infrastructure and monitoring emerging best practices will maintain competitive advantages in organic search visibility.

Technical SEO focuses on optimizing a website's backend infrastructure—such as site speed, crawlability, indexability, and server configuration—to help search engines discover and process content. On-page SEO, by contrast, concentrates on optimizing individual page elements like keyword placement, meta tags, headings, and content quality to improve relevance for specific search queries. While technical SEO ensures search engines can access your site, on-page SEO ensures they understand what your content is about and why it matters to users.

Core Web Vitals (Largest Contentful Paint, Interaction to Next Paint, and Cumulative Layout Shift) are confirmed ranking factors that measure real-world user experience. Google evaluates them at the 75th percentile of page loads, so, as DebugBear research notes, at least 75% of users need a 'Good' experience on all three metrics for a page to receive the maximum ranking boost. Poor Core Web Vitals can hold back rankings, reduce click-through rates, and raise bounce rates, affecting both organic visibility and user engagement.

Crawlability determines whether search engine bots can access and follow links throughout your website. If Googlebot cannot crawl your pages due to blocked resources, poor site structure, or robots.txt restrictions, those pages cannot be indexed or ranked. Without proper crawlability, even high-quality content remains invisible to search engines, making it impossible to achieve organic visibility regardless of content quality or backlink profile.

As AI-driven search platforms like Google AI Overviews and Perplexity become more prominent, technical SEO remains foundational. These systems generate answers from properly indexed, well-structured content: Google’s AI Overviews draw on Google’s own index, while other platforms rely on their own crawlers or third-party search indexes. Technical SEO ensures your content is discoverable, correctly rendered, and semantically rich enough for AI systems to cite and reference. Without solid technical foundations, your content is unlikely to appear in AI Overviews or other generative search results.

For most websites, conducting a comprehensive technical SEO audit quarterly is recommended, with monthly monitoring for critical issues. Larger enterprise sites with frequent updates should audit monthly or implement continuous monitoring systems. After major site changes, migrations, or redesigns, immediate audits are essential. Regular audits help catch issues early before they impact rankings, ensuring your site maintains optimal crawlability, indexability, and performance.

Site architecture determines how search engines navigate and understand your website's content hierarchy. A well-optimized architecture ensures important pages are within three clicks of the homepage, distributes link equity effectively, and helps search engines prioritize crawling high-value content. Poor site architecture can lead to orphaned pages, wasted crawl budget, and difficulty in establishing topical authority, all of which negatively impact rankings and visibility.

Page speed directly affects both search rankings and user behavior. Google has confirmed that Core Web Vitals, which measure loading speed, responsiveness, and visual stability, are ranking factors. Slow pages see higher bounce rates, lower engagement, and fewer conversions. Google’s “good” threshold for Largest Contentful Paint is 2.5 seconds, and pages whose main content loads within that window generally retain users better and perform better in rankings than slower pages, which makes speed optimization critical for SEO success.
