How Do I Optimize Support Content for AI?

Learn essential strategies to optimize your support content for AI systems like ChatGPT, Perplexity, and Google AI Overviews. Discover best practices for clarit...

I’ve been trying to understand the technical side of how AI actually processes our content. Not the marketing implications - the actual technical process.

What I’m trying to understand:

Why this matters: If we understand the technical process, we can optimize more effectively. I keep seeing advice like “use clear headers” without understanding WHY that helps technically.

Anyone with ML/AI background who can explain this in practical terms?

Great question! Let me break down the technical pipeline:

The AI content processing pipeline:

Step 1: Tokenization Text is broken into “tokens” - typically words or subwords. “Understanding” might become [“Under”, “stand”, “ing”]. This is crucial because AI doesn’t see words like humans do.

Step 2: Embeddings Each token is converted to a vector (list of numbers) that represents its meaning. Similar meanings = similar vectors. “King” and “Queen” would have similar vectors, as would “King” and “Monarch.”

Step 3: Attention Mechanism The model looks at ALL tokens and weighs which ones are related to each other. In “The bank was flooded,” attention lets “flooded” inform the reading of “bank,” nudging it toward riverbank rather than financial institution.

Step 4: Transformer Processing Multiple layers of processing where the model builds understanding of relationships between all parts of the text.

Step 5: Output Generation The model predicts the most likely next token, one token at a time, based on the input it was given and the patterns it learned during training.
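To make Step 1 concrete, here is a minimal sketch of subword splitting. It uses a greedy longest-match rule against a tiny hand-picked vocabulary. Both the `VOCAB` set and the `tokenize` function are hypothetical; real tokenizers learn their vocabularies from large corpora and will split words differently.

```python
# Toy greedy longest-match subword tokenizer.
# VOCAB is a hypothetical, hand-picked vocabulary for illustration only.
VOCAB = {"under", "stand", "ing", "pre", "author", "ization"}


def tokenize(word, vocab=VOCAB):
    """Split a word into the longest vocabulary pieces, left to right."""
    word = word.lower()
    pieces = []
    start = 0
    while start < len(word):
        # Try the longest candidate piece first, then shrink it.
        for end in range(len(word), start, -1):
            if word[start:end] in vocab:
                pieces.append(word[start:end])
                start = end
                break
        else:
            # Nothing in the vocabulary matches: emit a single character.
            pieces.append(word[start])
            start += 1
    return pieces


print(tokenize("Understanding"))
print(tokenize("Preauthorization"))
```

The point for content writers: the model never sees a word as one unit, only the pieces, so rare terms get their meaning from the surrounding context.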

Why this matters for content:

Let me add some practical implications:

Token limits and content optimization:

| Model | Token Limit | Practical Implication |
|---|---|---|
| GPT-4 | ~128,000 | Can process very long content |
| Claude | ~200,000 | Excellent for comprehensive docs |
| Most RAG systems | ~2,000-8,000 per chunk | Content is chunked for retrieval |

Why chunking matters: When AI retrieves your content, it typically grabs chunks (200-500 words). If your key information is split across chunk boundaries, it may not be retrieved properly.
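Here is a rough sketch of how a key fact can end up split across chunk boundaries. The `chunk_words` function and the sample sentence are made up for illustration, and the tiny chunk size is only there to keep the output readable. The `overlap` parameter is an assumption on my part: overlapping chunks are a common mitigation, but not every retrieval system uses them.

```python
def chunk_words(text, chunk_size, overlap=0):
    """Split text into chunks of chunk_size words, sharing `overlap` words."""
    step = chunk_size - overlap
    if step <= 0:
        raise ValueError("overlap must be smaller than chunk_size")
    words = text.split()
    chunks = []
    start = 0
    while start < len(words):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last chunk already reaches the end of the text
        start += step
    return chunks


text = ("Refunds are processed within five business days "
        "after approval by the billing team")

# Without overlap, "five business days" straddles two chunks.
for chunk in chunk_words(text, chunk_size=6):
    print(repr(chunk))

# With overlap, one chunk contains the whole phrase.
for chunk in chunk_words(text, chunk_size=6, overlap=3):
    print(repr(chunk))
```

You can't control how a given system chunks your page, which is why keeping each key statement short and self-contained is the safer bet.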

Optimization based on this:

The embedding space: Your content lives in a “vector space” where similar content is close together. If your content is semantically scattered (covering many unrelated topics), it becomes harder to retrieve for specific queries.

Focus tip: Topically focused content creates tighter embedding clusters, making retrieval more precise.
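One way to picture “tight clusters” is to measure how closely a page’s chunk vectors point in the same direction. The sketch below uses made-up 2-dimensional vectors and a hypothetical `cohesion` score (mean cosine similarity to the centroid). Real embeddings have hundreds or thousands of dimensions and come from a trained model, so treat this purely as an illustration.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def cohesion(vectors):
    """Mean cosine similarity between each vector and the centroid."""
    dims = len(vectors[0])
    centroid = [sum(v[d] for v in vectors) / len(vectors) for d in range(dims)]
    return sum(cosine(v, centroid) for v in vectors) / len(vectors)


# Hypothetical chunk vectors for two pages.
focused_page = [[0.9, 0.1], [0.8, 0.2], [0.85, 0.15]]
scattered_page = [[0.9, 0.1], [0.1, 0.9], [0.5, -0.5]]

print(round(cohesion(focused_page), 3))
print(round(cohesion(scattered_page), 3))
```

The focused page scores close to 1, while the scattered page scores noticeably lower.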

Let me translate the technical concepts into practical content advice:

Structure based on technical understanding:

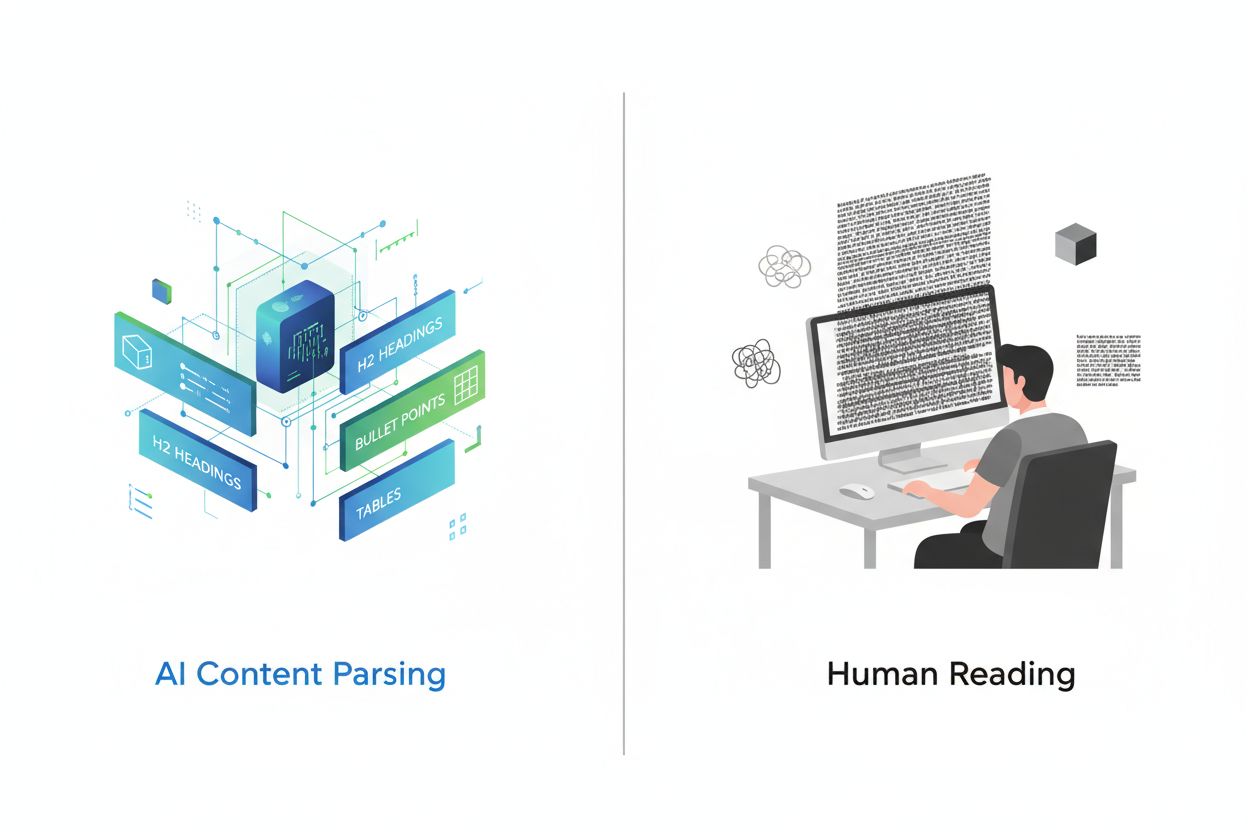

Why headers matter technically: Headers mark explicit semantic boundaries. Retrieval pipelines often split documents at headings, so each section can become its own chunk, and the heading text tells the model what the following section is about. They’re not just visual - they’re structural signals that AI uses to understand content organization.
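As an illustration of headers acting as boundaries, here is a minimal sketch of a heading-based splitter for Markdown. The `split_on_headings` function and the sample document are hypothetical, and real pipelines vary a lot: some split on HTML headings, some on fixed sizes, some on both.

```python
import re

HEADING = re.compile(r"^(#{1,6})\s+(.*)$")


def split_on_headings(markdown_text):
    """Return (heading, body) pairs, one per Markdown heading section."""
    sections = []
    heading = None
    body = []
    for line in markdown_text.splitlines():
        match = HEADING.match(line)
        if match:
            # A new heading closes the section collected so far.
            text = "\n".join(body).strip()
            if heading is not None or text:
                sections.append((heading, text))
            heading = match.group(2).strip()
            body = []
        else:
            body.append(line)
    text = "\n".join(body).strip()
    if heading is not None or text:
        sections.append((heading, text))
    return sections


doc = """# Refund policy
Refunds take five business days.

## Eligibility
Orders under 30 days old qualify.
"""

for heading, body in split_on_headings(doc):
    print(heading, "->", body)
```

Each section comes out as a self-contained unit with its heading attached, which is what you want a retrieved chunk to look like.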

Optimal structure:

H1: Main Topic (establishes overall context)

Opening paragraph: Core concept (40-60 words)

H2: Subtopic 1 (signals new semantic unit)

Direct answer (becomes self-contained chunk)

Supporting details

H2: Subtopic 2

[Same pattern]

Why bullet points work:

Why tables excel: Tables present information in a highly structured form that AI can parse reliably. The row/column structure makes the relationship between each value and its labels explicit, so the model doesn’t have to infer it from prose.

The semantic signal: Every formatting choice is a signal about content organization. Make those signals explicit and consistent.

This is exactly what I needed. The chunking explanation especially - I hadn’t considered that AI systems break content into pieces for retrieval.

Follow-up: What about domain-specific terminology? We have a lot of technical terms that might not be in common usage. How does AI handle that?

Great question! Domain-specific terminology is a real challenge.

How tokenizers handle specialized terms:

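The problem: Standard tokenizers trained on general English tend to split specialized jargon into generic pieces. “Preauthorization” might become [“Pre”, “author”, “ization”]. None of those pieces carries the healthcare meaning on its own, so the model has to recover it from the surrounding context.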

What this means:

Mitigation strategies:

Context reinforcement - When you use a technical term, provide context that helps AI understand it. “Preauthorization, the process of getting insurance approval before treatment…”

Synonyms and explanations - Include common terms alongside jargon. This creates embedding connections between your term and related concepts AI understands.

Consistent terminology - Use the same term consistently. If you switch between “preauth,” “preauthorization,” and “prior authorization,” you fragment the semantic signal.

Define on first use - Especially for uncommon terms, brief definitions help AI map them to the right concepts.
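For the consistent-terminology point, a small check like the one below can flag variant spellings before you publish. This is only a sketch: the `PREFERRED` mapping and the `find_variants` helper are hypothetical, and you would fill the mapping from your own style guide.

```python
import re

# Hypothetical style-guide mapping: variant -> preferred term.
PREFERRED = {
    "preauth": "preauthorization",
    "prior authorization": "preauthorization",
}


def find_variants(text, preferred=PREFERRED):
    """Count occurrences of each non-preferred variant in the text."""
    found = {}
    for variant in preferred:
        # Word boundaries keep "preauth" from matching "preauthorization".
        pattern = r"\b" + re.escape(variant) + r"\b"
        count = len(re.findall(pattern, text, flags=re.IGNORECASE))
        if count:
            found[variant] = count
    return found


draft = ("Preauth is required. Submit the preauthorization form. "
         "Prior authorization takes two days.")

for variant, count in find_variants(draft).items():
    print(f"{variant!r} x{count}: consider {PREFERRED[variant]!r}")
```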

Schema can help: FAQ schema that defines your terms creates explicit semantic connections AI can use.
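Here is a minimal sketch of generating FAQ markup for a glossary. The `FAQPage`, `Question`, and `Answer` types and the `mainEntity` / `acceptedAnswer` properties come from schema.org; the `faq_jsonld` helper and the question wording are my own assumptions.

```python
import json


def faq_jsonld(terms):
    """Build a schema.org FAQPage dict from a {term: definition} mapping."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": f"What is {term}?",
                "acceptedAnswer": {"@type": "Answer", "text": definition},
            }
            for term, definition in terms.items()
        ],
    }


glossary = {
    "preauthorization": (
        "The process of getting insurance approval before treatment."
    ),
}

print(json.dumps(faq_jsonld(glossary), indent=2))
```

The printed JSON would typically go inside a `<script type="application/ld+json">` tag on the page that defines the terms.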

Adding to the embedding discussion:

How embeddings create “semantic neighborhoods”:

Think of your content as living in a multi-dimensional space. Semantically similar content is clustered together.

When users query AI: Their query is converted to a vector in the same space. AI retrieves content from the “nearest neighbors” in that space.
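The nearest-neighbor step can be sketched in a few lines. To keep it self-contained, the `embed` function below is just a word-count vector, which is a crude stand-in: real systems use learned dense embeddings that also match on meaning, not only on shared words. The function names and sample chunks are hypothetical.

```python
import math
from collections import Counter


def embed(text):
    """Toy 'embedding': a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[word] * b[word] for word in a if word in b)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def nearest(query, chunks, k=1):
    """Return the k chunks most similar to the query."""
    query_vec = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(query_vec, embed(c)),
                    reverse=True)
    return ranked[:k]


chunks = [
    "how to reset your password",
    "refund policy for annual plans",
    "export analytics reports as csv",
]

print(nearest("reset my password", chunks))
```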

Implications:

Topical focus - Content that stays focused on a topic creates a tight cluster. Broad, unfocused content scatters across the space.

Related content linking - When you link to related content on your site, you’re creating semantic connections that can strengthen your cluster.

Keyword variations - Using natural variations of key terms (synonyms, related phrases) makes your cluster “larger” and easier to retrieve from multiple query angles.

Practical test: Take your target keywords and think about all the ways users might phrase queries. Your content should have semantic connections to all those phrasings, not just exact matches.
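A rough way to run that test is to list the phrasings and see which of their terms never appear in your content. Note that this sketch only checks word overlap, which is a crude proxy: embeddings can match synonyms that share no words. The `missing_terms` helper, the stopword list, and the sample text are all hypothetical.

```python
import re

# Small hypothetical stopword list; extend it for real use.
STOPWORDS = frozenset(
    {"how", "do", "i", "a", "an", "the", "to", "my", "for", "what", "is"}
)


def words(text):
    """Lowercase the text and return its alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def missing_terms(phrasings, content):
    """Map each phrasing to the non-stopword terms absent from content."""
    content_words = set(words(content))
    report = {}
    for phrase in phrasings:
        terms = [w for w in words(phrase) if w not in STOPWORDS]
        report[phrase] = [w for w in terms if w not in content_words]
    return report


content = "To reset your password, open Settings and choose Security."
phrasings = ["how do I reset my password", "change login credentials"]

for phrase, missing in missing_terms(phrasings, content).items():
    print(f"{phrase!r}: missing {missing}")
```

A phrasing with every term missing is a hint that the page may need a sentence that connects it to that way of asking.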

This is why “semantic SEO” works - it’s not about keywords, it’s about creating the right embedding neighborhoods.

Let me explain attention mechanism implications:

What attention does: For every token, attention calculates which other tokens are most relevant. This is how AI understands context and relationships.
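Here is a stripped-down sketch of that calculation for the “bank” example from earlier in the thread. It computes scaled dot-product scores and turns them into weights with softmax. The 2-dimensional vectors are invented for illustration, and real models first pass each token through learned query and key projections, which this sketch skips.

```python
import math


def softmax(scores):
    """Convert raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(query, keys):
    """Scaled dot-product attention weights of one query over all keys."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    return softmax(scores)


tokens = ["the", "bank", "was", "flooded"]
# Hypothetical token vectors; real ones are learned and much larger.
vectors = {
    "the": [0.1, 0.1],
    "bank": [1.0, 0.5],
    "was": [0.1, 0.0],
    "flooded": [0.9, 0.8],
}

weights = attention_weights(vectors["bank"], [vectors[t] for t in tokens])
for token, weight in zip(tokens, weights):
    print(f"{token:8s} {weight:.2f}")
```

With these made-up vectors, “bank” puts its largest weight on “flooded,” which is the kind of signal that lets the model pick the riverbank sense.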

Multi-head attention: AI runs multiple attention calculations in parallel, each capturing different types of relationships:

Why this matters for content:

Clear referents - When you use pronouns or references, make them unambiguous. “The software helps users. It also provides analytics.” - What is “it”? The software? Something else?

Logical flow - Attention works better when ideas flow logically. Random topic jumps confuse the attention mechanism.

Explicit connections - “This approach improves conversion because…” is better than leaving relationships implicit.

The readability connection: Content that’s easy for humans to follow is often easier for attention mechanisms too. Logical organization, clear references, explicit relationships.

Exactly! There’s a strong correlation:

AI-friendly content = Human-friendly content:

| Human Best Practice | Technical AI Benefit |
|---|---|
| Clear, simple sentences | Easier tokenization, clearer attention patterns |
| Logical structure | Better chunk boundaries, coherent embeddings |
| Explicit transitions | Clearer semantic relationships |
| Defined terms | Proper concept mapping |
| Focused topics | Tighter embedding clusters |

The misconception: Some think “AI optimization” means gaming systems with hidden tricks. In reality, it means creating well-organized, clear, comprehensive content.

Why the correlation exists: AI models are trained on large amounts of human writing, much of it well edited. In that data, clear structure and clear wording tend to go together with useful content, so the patterns of “good content” are baked into their training.

The takeaway: Don’t think about “writing for AI.” Think about writing clearly for humans, then ensure it’s technically accessible (proper HTML, schema, fast loading). The rest follows.

This has been incredibly enlightening. Key takeaways:

Technical understanding:

Practical implications:

What I’ll change:

Thanks everyone for the technical depth!
