What Tools Check AI Crawlability? Top Monitoring Solutions

Discover the best tools for checking AI crawlability. Learn how to monitor GPTBot, ClaudeBot, and PerplexityBot access to your website with free and enterprise ...

Crawlability refers to the ability of search engine crawlers and AI bots to access, navigate, and understand website content. It is a foundational technical SEO factor that determines whether search engines can discover and index pages for ranking in search results and AI-powered answer engines.

Crawlability is the ability of search engine crawlers and AI bots to access, navigate, and understand the content on your website. It is a foundational technical SEO factor that determines whether search engines like Google and Bing, and AI-powered answer engines like ChatGPT and Perplexity, can discover your pages, read their content, and ultimately include them in their indexes for ranking and citation. Without crawlability, even the highest-quality content remains invisible to search engines and AI systems, so your brand cannot appear in search results or be cited as an authoritative source. Crawlability is the first critical step in search engine optimization: if a page cannot be crawled, it cannot be indexed, and if it cannot be indexed, it cannot rank or be recommended by AI systems.

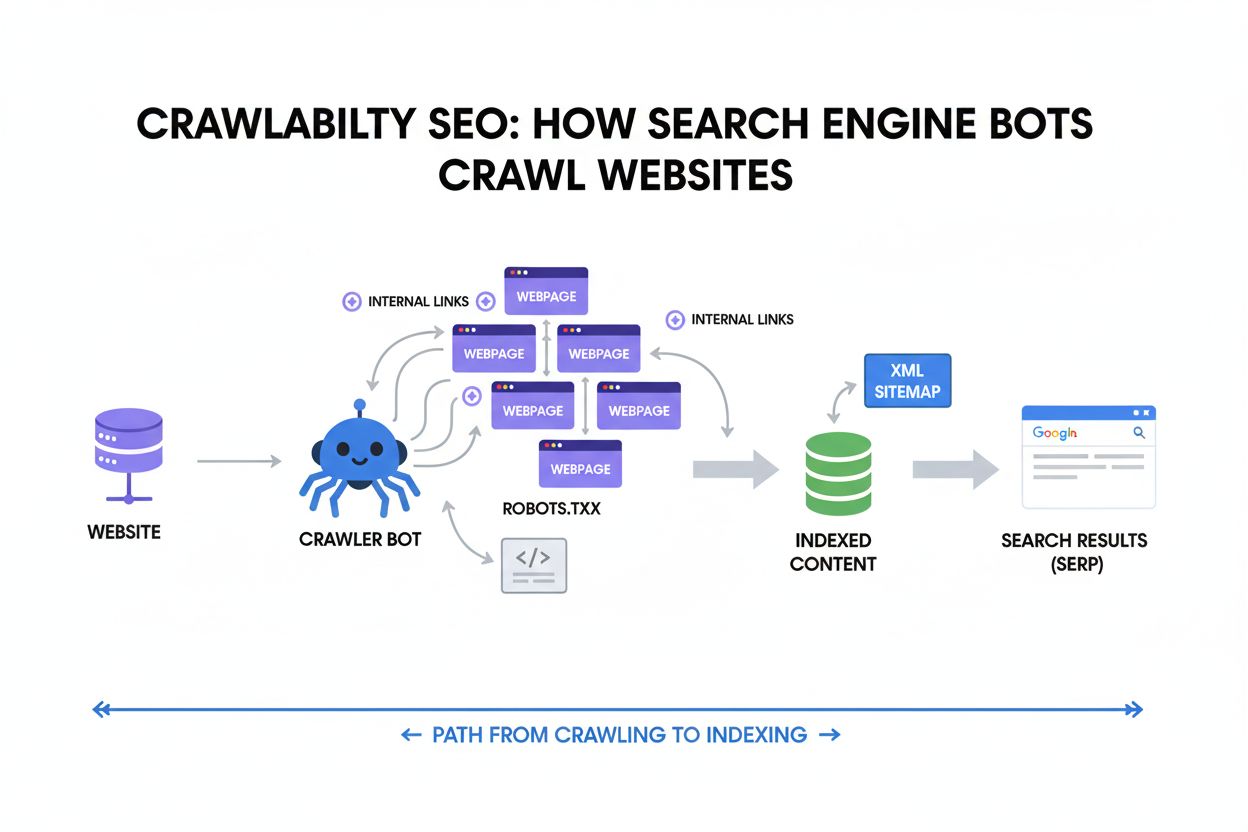

Search engines deploy automated programs called crawlers (also known as bots, spiders, or robots) to systematically explore the web and discover content. These crawlers start from known URLs and follow internal links from one page to another, building a comprehensive map of your website’s structure and content. When a crawler visits your site, it downloads the HTML code of each page, analyzes the content, and stores information about what it finds in a massive database called the search engine index. This process, called crawling, is continuous—crawlers return to websites regularly to discover new pages and identify updates to existing content. The frequency of crawls depends on several factors, including how important the search engine deems your site, how often you publish new content, and the overall health of your site’s technical infrastructure. Google’s crawler, known as Googlebot, is the most widely recognized crawler, but search engines like Bing, DuckDuckGo, and AI systems like OpenAI’s crawler and Perplexity’s bot all operate similarly, though with important differences in how they process content.
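The link-following step described above can be sketched in a few lines. This is a minimal illustration using only Python's standard library, not a description of how any particular search engine implements discovery; `LinkExtractor`, `internal_links`, and the example.com URLs are hypothetical names chosen for the example.

```python
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse


class LinkExtractor(HTMLParser):
    """Collects absolute URLs from <a href="..."> tags on one page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if href:
            # Resolve relative links and drop "#fragment" parts.
            absolute, _fragment = urldefrag(urljoin(self.base_url, href))
            self.links.append(absolute)


def internal_links(html, page_url):
    """Return unique same-host links found in html, in document order."""
    extractor = LinkExtractor(page_url)
    extractor.feed(html)
    host = urlparse(page_url).netloc
    unique = []
    for link in extractor.links:
        if urlparse(link).netloc == host and link not in unique:
            unique.append(link)
    return unique


if __name__ == "__main__":
    sample = '<a href="/about">About</a> <a href="post.html#top">Post</a>'
    print(internal_links(sample, "https://example.com/blog/"))
```

A crawler would repeat this for every newly discovered URL, which is why a page that no other page links to tends to stay undiscovered.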

Crawlability has been a cornerstone of SEO since the early days of search engines in the 1990s. As the web grew exponentially, search engines needed a systematic way to discover and organize billions of pages. Crawlability emerged as a critical factor: if a page was not crawlable, it simply did not exist in the eyes of search engines. Over the past two decades, crawlability has evolved from a simple question (can the crawler access the page?) into a complex technical discipline involving site architecture, server performance, JavaScript rendering, and structured data. According to research from Search Engine Journal, approximately 65.88% of websites have severe duplicate content issues, and 93.72% of webpages have a low text-to-HTML ratio, both of which negatively impact crawlability. The rise of JavaScript-heavy websites and single-page applications (SPAs) in the 2010s introduced new crawlability challenges, as traditional crawlers struggled to render dynamic content. More recently, the emergence of AI-powered search engines and large language models (LLMs) has fundamentally changed the crawlability landscape. Research from Conductor shows that the crawlers behind AI systems like ChatGPT and Perplexity visit pages significantly more frequently than Google (sometimes over 100 times more often) and do not render JavaScript, making crawlability optimization even more critical for brands seeking visibility in AI search results.

While crawlability and indexability are often used interchangeably, they represent two distinct stages in the search engine process. Crawlability is about access—can the crawler reach and read your page? Indexability is about inclusion—is the page allowed to be stored in the search engine’s index and shown in results? A page can be highly crawlable but not indexable if it contains a noindex meta tag, which explicitly tells search engines not to include it in their index. Conversely, a page might be blocked from crawling via robots.txt but still be discovered and indexed if it is linked from external websites. Understanding this distinction is crucial because it affects your optimization strategy. If a page is not crawlable, you must fix the technical issues preventing access. If a page is crawlable but not indexable, you need to remove the indexing restrictions. Both factors are essential for SEO success, but crawlability is the prerequisite—without it, indexability becomes irrelevant.
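To make the two stages concrete, the sketch below checks them separately: `is_crawlable` asks whether robots.txt rules permit a given user agent to fetch a URL, and `is_indexable` looks for a noindex directive in the page's robots meta tag. It uses Python's standard `urllib.robotparser` and `html.parser`; the function names, the sample rules, and the example.com URLs are assumptions made for illustration, and the check ignores the `X-Robots-Tag` HTTP header.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from any <meta name="robots" content="..."> tag."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "meta" and (attr.get("name") or "").lower() == "robots":
            content = attr.get("content") or ""
            self.directives.update(d.strip().lower() for d in content.split(","))


def is_crawlable(robots_txt, user_agent, url):
    """Crawlability: do the robots.txt rules allow this agent to fetch the URL?"""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


def is_indexable(html):
    """Indexability: the page carries no noindex directive in its robots meta tag."""
    meta = RobotsMetaParser()
    meta.feed(html)
    return "noindex" not in meta.directives


if __name__ == "__main__":
    rules = "User-agent: *\nDisallow: /private/\n"
    page = '<head><meta name="robots" content="noindex, follow"></head>'
    print(is_crawlable(rules, "GPTBot", "https://example.com/blog/post.html"))
    print(is_indexable(page))
```

Note that a crawler can only read a noindex tag on a page it is allowed to fetch, which is one reason the two checks are kept apart here.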

Several technical and structural factors directly influence how effectively search engines can crawl your website. Internal linking is perhaps the most important factor—crawlers follow links from one page to another, so pages without internal links pointing to them (called orphaned pages) are difficult or impossible to discover. A well-organized site structure with important pages within two to three clicks of the homepage ensures that crawlers can reach all critical content efficiently. XML sitemaps serve as a roadmap for crawlers, explicitly listing the pages you want indexed and helping search engines prioritize their crawling efforts. The robots.txt file controls which parts of your site crawlers can access, and misconfiguring it can accidentally block important pages from being crawled. Page load speed affects crawlability because slow pages waste crawl budget and may be skipped by crawlers. Server health and HTTP status codes are critical—pages that return error codes (like 404 or 500) signal to crawlers that content is unavailable. JavaScript rendering presents a unique challenge: while Googlebot can process JavaScript, most AI crawlers cannot, meaning critical content loaded via JavaScript may be invisible to AI systems. Finally, duplicate content and improper use of canonical tags can confuse crawlers about which version of a page to prioritize, wasting crawl budget on redundant content.
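Click depth and orphaned pages can both be computed from a site's internal link graph with a breadth-first search. The sketch below assumes the graph has already been collected as a dictionary mapping each page to the pages it links to; the function names and paths are hypothetical.

```python
from collections import deque


def click_depths(links, home):
    """Breadth-first search: minimum number of clicks from home to each page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def orphaned_pages(links, home, known_pages):
    """Known pages that cannot be reached by following links from home."""
    reachable = click_depths(links, home)
    return sorted(set(known_pages) - set(reachable))


if __name__ == "__main__":
    site = {
        "/": ["/blog", "/products"],
        "/blog": ["/blog/post-1"],
        "/products": [],
        "/blog/post-1": ["/"],
        "/old-landing": ["/"],  # links out, but nothing links to it
    }
    print(click_depths(site, "/"))
    print(orphaned_pages(site, "/", site.keys()))
```

Pages with a depth above three, or pages that appear in the orphan list, are candidates for additional internal links.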

| Factor | Googlebot | Bing Bot | AI Crawlers (ChatGPT, Perplexity) | Traditional SEO Tools |
|---|---|---|---|---|
| JavaScript Rendering | Yes (after initial crawl) | Limited | No (raw HTML only) | Simulated crawling |
| Crawl Frequency | Varies by site importance | Varies by site importance | Very high (100x+ more than Google) | Scheduled (weekly/monthly) |
| Crawl Budget | Yes, limited | Yes, limited | Appears unlimited | N/A |
| Respects robots.txt | Yes | Yes | Varies by crawler | N/A |
| Respects noindex | Yes | Yes | Varies by crawler | N/A |
| Crawl Speed | Moderate | Moderate | Very fast | N/A |
| Content Requirements | HTML + JavaScript | HTML + Limited JS | HTML only (critical) | HTML + JavaScript |
| Monitoring Availability | Google Search Console | Bing Webmaster Tools | Limited (requires specialized tools) | Multiple tools available |

Understanding what prevents crawlers from accessing your content is essential for maintaining good crawlability. Broken internal links are among the most common issues: when a link points to a page that no longer exists (returning a 404 error), crawlers encounter a dead end and cannot continue exploring that path. Redirect chains and loops confuse crawlers and waste crawl budget; for example, if Page A redirects to Page B, which redirects to Page C, which redirects back to Page A, the crawler gets stuck in a loop and never reaches a final destination. Server errors (5xx status codes) indicate that your server is overloaded or misconfigured, causing crawlers to back off and visit less frequently. Slow page load times are particularly problematic because crawlers have limited time and resources; if pages take too long to load, crawlers may skip them entirely or reduce crawl frequency. JavaScript rendering issues are increasingly important: if your site relies on JavaScript to load critical content like product information, pricing, or navigation, AI crawlers that do not execute JavaScript will not see this content. Misconfigured robots.txt files can accidentally block entire sections of your site; for example, a Disallow: / rule under User-agent: * tells every compliant crawler not to access any page. Misused noindex tags can prevent pages from being indexed even if they are crawlable. Poor site structure with pages buried too deep (more than 3-4 clicks from the homepage) makes it harder for crawlers to discover and prioritize content. Duplicate content without proper canonical tags forces crawlers to spend resources on multiple versions of the same page instead of focusing on unique content.
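The redirect loop in the Page A, B, C example can be detected mechanically. The sketch below assumes the site's redirects have been exported as a dictionary mapping source path to target path; `trace_redirects`, the status labels, and the `max_hops` threshold are illustrative choices rather than any crawler's documented behavior.

```python
def trace_redirects(redirects, start, max_hops=5):
    """Follow redirects from start and classify the result.

    Returns (path, status) where status is one of:
      "ok"       - zero or one redirect
      "chain"    - two or more redirects before the final page
      "loop"     - a URL repeats, so there is no final page
      "too_long" - more than max_hops redirects were followed
    """
    path = [start]
    while path[-1] in redirects:
        nxt = redirects[path[-1]]
        if nxt in path:
            return path + [nxt], "loop"
        path.append(nxt)
        if len(path) - 1 > max_hops:
            return path, "too_long"
    return path, ("ok" if len(path) <= 2 else "chain")


if __name__ == "__main__":
    loop = {"/a": "/b", "/b": "/c", "/c": "/a"}
    print(trace_redirects(loop, "/a"))
```

Chains are usually fixed by pointing the first URL directly at the final destination; loops need at least one redirect removed.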

The emergence of AI-powered search engines and large language models has elevated the importance of crawlability to a new level. Unlike traditional search engines, which have sophisticated systems for handling JavaScript and complex site structures, most AI crawlers operate with significant limitations. AI crawlers do not render JavaScript, meaning they only see the raw HTML served by your website. This is a critical distinction because many modern websites rely heavily on JavaScript to load content dynamically. If your product pages, blog content, or key information is loaded via JavaScript, AI crawlers will see a blank page or incomplete content, making it impossible for them to cite or recommend your brand in AI search results. Additionally, research from Conductor reveals that AI crawlers visit pages far more frequently than traditional search engines—sometimes over 100 times more often in the first few days after publication. This means your content must be technically perfect from the moment it is published; you may not get a second chance to fix crawlability issues before AI systems form their initial assessment of your content’s quality and authority. The stakes are higher with AI because there is no equivalent to Google Search Console’s recrawl request feature—you cannot ask an AI crawler to come back and re-evaluate a page after you fix issues. This makes proactive crawlability optimization essential for brands seeking visibility in AI search results.
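One way to approximate what a non-rendering crawler sees is to take the HTML exactly as the server returns it, ignore everything inside script and style tags, and check whether the phrases that matter are present. The sketch below does this with Python's standard `html.parser`; the function name, the sample page, and the phrases are hypothetical, and real crawlers may extract text differently.

```python
from html.parser import HTMLParser


class RawTextExtractor(HTMLParser):
    """Collects visible text from raw HTML, skipping <script> and <style>."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.chunks.append(data.strip())


def missing_from_raw_html(html, required_phrases):
    """Return the phrases that do not appear in the page's raw-HTML text."""
    extractor = RawTextExtractor()
    extractor.feed(html)
    text = " ".join(extractor.chunks).lower()
    return [p for p in required_phrases if p.lower() not in text]


if __name__ == "__main__":
    page = (
        "<html><body><h1>Acme Widget</h1><div id='price'></div>"
        "<script>document.getElementById('price').textContent = '$49.00';</script>"
        "</body></html>"
    )
    # The price is only injected by JavaScript, so it is reported as missing.
    print(missing_from_raw_html(page, ["Acme Widget", "$49.00"]))
```

Any phrase reported as missing is content that exists only after JavaScript runs and is a candidate for server-side rendering.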

Improving your website’s crawlability requires a systematic approach to technical SEO. First, create a flat site structure where important pages are accessible within two to three clicks from the homepage. This ensures that crawlers can discover and prioritize your most valuable content. Second, build a strong internal linking strategy by linking to important pages from multiple locations within your site, including navigation menus, footers, and contextual links within content. Third, create and submit an XML sitemap to search engines via Google Search Console; this explicitly tells crawlers which pages you want indexed and helps them prioritize their crawling efforts. Fourth, audit and optimize your robots.txt file to ensure it is not accidentally blocking important pages or sections of your site. Fifth, fix all broken links and eliminate orphaned pages by either linking to them from other pages or removing them entirely. Sixth, optimize page load speed by compressing images, minifying code, and leveraging content delivery networks (CDNs). Seventh, serve critical content in HTML rather than relying on JavaScript to load important information; this ensures that both traditional crawlers and AI bots can access your content. Eighth, implement structured data markup (schema) to help crawlers understand the context and meaning of your content. Ninth, monitor Core Web Vitals to ensure your site provides a good user experience, which indirectly affects crawlability. Finally, regularly audit your site using tools like Google Search Console, Screaming Frog, or Semrush Site Audit to identify and fix crawlability issues before they impact your visibility.
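As an illustration of the sitemap step, the sketch below builds a minimal XML sitemap with Python's standard `xml.etree.ElementTree`. The namespace is the one defined by the sitemaps.org protocol; `build_sitemap`, the URLs, and the dates are placeholders, and a real site would usually generate the URL list from its CMS or router.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Build sitemap XML (as UTF-8 bytes) from (loc, lastmod) pairs.

    lastmod may be None, in which case the element is omitted.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in urls:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


if __name__ == "__main__":
    pages = [
        ("https://example.com/", "2025-01-10"),
        ("https://example.com/pricing", None),
    ]
    print(build_sitemap(pages).decode("utf-8"))
```

The resulting file is typically served at the site root and referenced from robots.txt with a Sitemap: line, in addition to being submitted through Google Search Console.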

Traditional approaches to crawlability monitoring are no longer sufficient in the age of AI search. Scheduled crawls that run weekly or monthly create significant blind spots because AI crawlers visit pages far more frequently and can discover issues that go undetected for days. Real-time monitoring platforms that track crawler activity 24/7 are now essential for maintaining optimal crawlability. These platforms can identify when AI crawlers visit your pages, detect technical issues as they occur, and alert you to problems before they impact your visibility. Research from Conductor demonstrates the value of real-time monitoring: one enterprise customer with over 1 million webpages was able to reduce technical issues by 50% and improve AI search discoverability by implementing real-time monitoring. Real-time monitoring provides visibility into AI crawler activity, showing which pages are being crawled by ChatGPT, Perplexity, and other AI systems, and how frequently. It can also track crawl frequency segments, alerting you when pages haven’t been visited by AI crawlers in hours or days, which may indicate underlying technical or content issues. Additionally, real-time monitoring can verify schema implementation, ensuring that high-priority pages have proper structured data markup, and monitor Core Web Vitals to ensure pages load quickly and provide good user experience. By investing in real-time monitoring, brands can shift from reactive problem-solving to proactive optimization, ensuring their content remains crawlable and visible to both traditional search engines and AI systems.
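Short of a dedicated platform, server access logs already record AI crawler visits. The sketch below counts hits per bot, path, and status code by matching user-agent tokens in log lines. It assumes the common "combined" access log format and matches on the tokens GPTBot, ClaudeBot, and PerplexityBot; the function name and sample lines are hypothetical, and because user-agent strings can be spoofed, a production setup would also need to verify the requesting IP addresses.

```python
import re
from collections import Counter

# User-agent tokens to look for (assumed list; extend as needed).
AI_BOT_TOKENS = ("GPTBot", "ClaudeBot", "PerplexityBot")

# Matches the request, status, size, referer, and user-agent fields
# of a combined-format access log line.
LOG_LINE = re.compile(
    r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def ai_crawler_hits(log_lines):
    """Count requests keyed by (bot token, path, HTTP status)."""
    hits = Counter()
    for line in log_lines:
        match = LOG_LINE.search(line)
        if not match:
            continue
        agent = match.group("agent").lower()
        for token in AI_BOT_TOKENS:
            if token.lower() in agent:
                key = (token, match.group("path"), int(match.group("status")))
                hits[key] += 1
    return hits


if __name__ == "__main__":
    sample = [
        '203.0.113.5 - - [10/Jan/2025:10:00:00 +0000] "GET /pricing HTTP/1.1" '
        '200 5120 "-" "Mozilla/5.0 (compatible; GPTBot/1.2)"',
    ]
    for key, count in ai_crawler_hits(sample).items():
        print(key, count)
```

Keys with 4xx or 5xx status codes point to pages where AI crawlers are hitting errors, and important paths that never appear in the counts may not be getting visited at all.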

The definition and importance of crawlability are evolving rapidly as AI search becomes increasingly prominent. In the near future, crawlability optimization will become as fundamental as traditional SEO, with brands needing to optimize for both Googlebot and AI crawlers simultaneously. The key difference is that AI crawlers have stricter requirements: they do not render JavaScript, they visit more frequently, and they do not provide the same level of transparency through tools like Google Search Console. This means brands will need to treat AI crawlability the way they once treated “mobile-first” design, as a default rather than an afterthought, ensuring that critical content is accessible in raw HTML without relying on JavaScript. We can expect to see specialized AI crawlability tools become standard in the SEO toolkit, similar to how Google Search Console is essential today. These tools will provide real-time insights into how AI systems are crawling and understanding your content, enabling brands to optimize specifically for AI visibility. Additionally, structured data and schema markup will become even more critical, as AI systems rely on explicit semantic information to understand content context and authority. The concept of crawl budget may evolve differently for AI systems compared to traditional search engines, potentially requiring new optimization strategies. Finally, as AI search becomes more competitive, brands that master crawlability optimization early will gain significant advantages in establishing authority and visibility in AI-powered answer engines. The future of crawlability is not just about being discoverable; it is about being understood, trusted, and cited by AI systems that increasingly influence how people find information online.

Crawlability refers to whether search engines can access and read your website pages, while indexability refers to whether those pages are allowed to be included in search results. A page can be crawlable but not indexable if it contains a noindex tag or canonical tag pointing elsewhere. Both are essential for SEO success, but crawlability is the first step—without it, indexing cannot occur.

AI crawlers like those from OpenAI and Perplexity do not render JavaScript, meaning they only see raw HTML content. Googlebot can process JavaScript after its initial visit. Additionally, research shows AI crawlers visit pages more frequently than traditional search engines—sometimes over 100 times more often. This means your content must be technically sound from the moment of publication, as you may not get a second chance to make a good impression with AI bots.

Common crawlability blockers include broken internal links, orphaned pages with no internal links pointing to them, incorrect robots.txt directives that block important sections, misused noindex or canonical tags, pages buried too deep in site structure (more than 3-4 clicks from homepage), server errors (5xx codes), slow page speed, JavaScript rendering issues, and redirect chains or loops. Each of these can prevent crawlers from efficiently accessing and understanding your content.

To improve crawlability, create a flat site structure with important pages within 2-3 clicks of the homepage, implement an XML sitemap and submit it to Google Search Console, build a strong internal linking structure, ensure your robots.txt file doesn't accidentally block important pages, fix broken links and orphaned pages, optimize page load speed, serve critical content in HTML rather than JavaScript, and regularly audit your site for technical issues using tools like Google Search Console or Semrush Site Audit.

Crawlability is critical for AI search because answer engines like ChatGPT and Perplexity must be able to access and understand your content to cite or mention your brand. If your site has crawlability issues, AI bots may not visit frequently or may miss important pages entirely. Since AI crawlers visit more frequently than traditional search engines but don't render JavaScript, ensuring clean HTML, proper site structure, and technical health is essential for establishing authority in AI-powered search results.

Key tools for monitoring crawlability include Google Search Console (free, shows indexation status), Screaming Frog (simulates crawler behavior), Semrush Site Audit (detects crawlability issues), server log analysis tools, and specialized AI monitoring platforms like Conductor Monitoring that track AI crawler activity in real-time. For comprehensive insights into both traditional and AI crawlability, real-time monitoring solutions are increasingly important as they can catch issues before they impact your visibility.

Crawl budget is the number of pages a search engine will crawl on your site within a given period. If your site has crawlability issues like duplicate content, broken links, or poor structure, crawlers waste their budget on low-value pages and may miss important content. By improving crawlability through clean site structure, fixing technical issues, and eliminating unnecessary pages, you ensure that crawlers use their budget efficiently on pages that matter most for your business.
