What is Source Selection Bias in AI? Definition and Impact

Learn about source selection bias in AI, how it affects machine learning models, real-world examples, and strategies to detect and mitigate this critical fairne...

Recency bias is AI systems’ tendency to prioritize recently published or updated content over older information. This bias occurs when machine learning models give disproportionate weight to newer data points in their training or decision-making processes, potentially leading to conclusions based on temporary trends rather than long-term patterns.

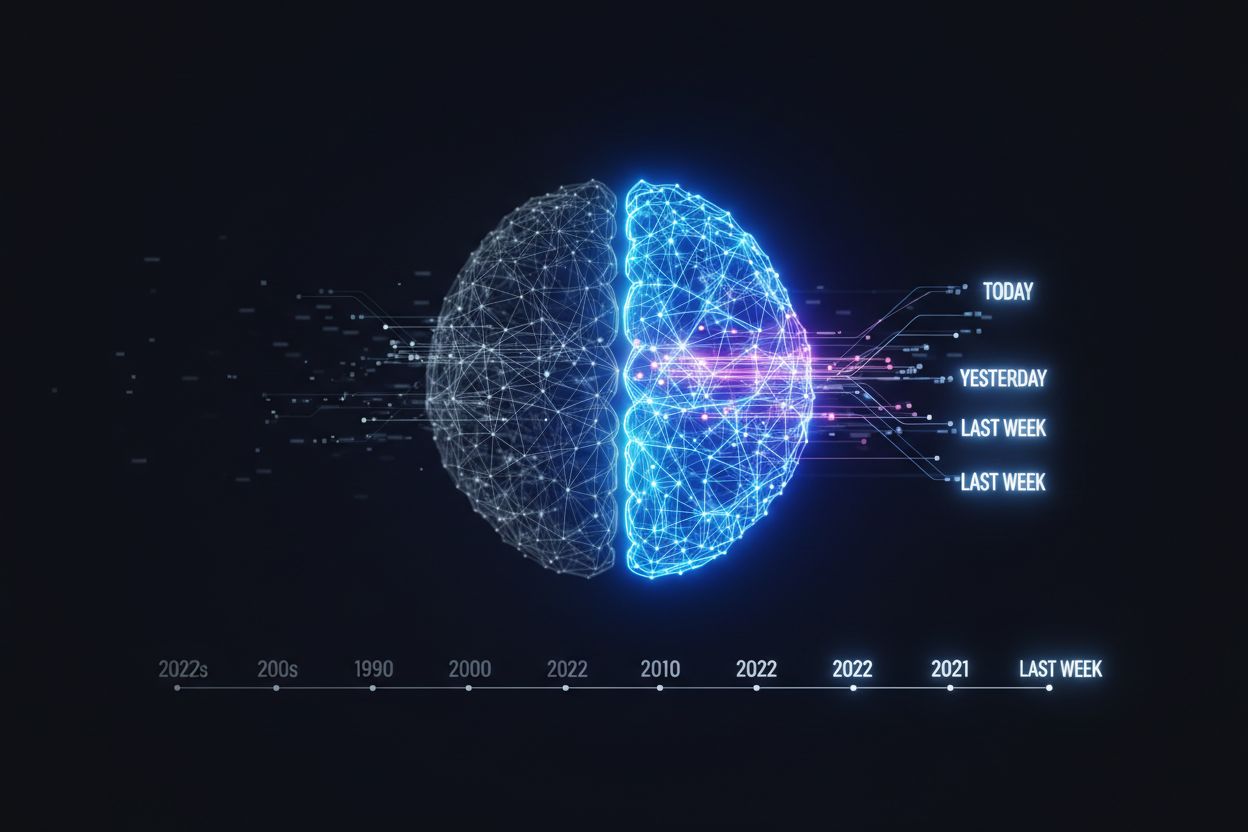

Recency bias in AI refers to the systematic tendency of machine learning models to disproportionately weight and prioritize recent data points, events, or information when making predictions or generating responses. Unlike human recency bias, which is a cognitive limitation rooted in memory accessibility, AI recency bias emerges from architectural choices, training data composition, and training methodologies, many of them deliberately designed to capture current trends and patterns. A common mechanism is a temporal weighting function that assigns higher importance to recent data during training and inference, which changes how the system judges the relevance of information. The effect reaches across domains: models overemphasize recent patterns and can discard valuable historical context and long-term trends. It’s important to distinguish recency bias from temporal bias. Temporal bias is the broader category, covering any systematic error related to time-dependent data, whereas recency bias specifically concerns the overvaluation of recent information. In practice, recency bias appears when AI systems recommend products based solely on trending items, when financial models predict market movements from recent volatility alone, or when search engines rank newly published content above more authoritative older sources. Understanding this distinction helps organizations identify when their AI systems are making decisions based on fleeting trends rather than substantive, enduring patterns.
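
To make the idea of a temporal weighting function concrete, here is a minimal Python sketch of one common form, exponential decay with a half-life. The function names, the half-life values, and the sample data are illustrative assumptions, not taken from any particular system.

```python
def exponential_decay_weights(ages_in_days, half_life_days):
    """Weight each observation by 0.5 ** (age / half_life).

    An observation made today (age 0) gets weight 1.0, one that is one
    half-life old gets 0.5, two half-lives old gets 0.25, and so on.
    """
    if half_life_days <= 0:
        raise ValueError("half_life_days must be positive")
    return [0.5 ** (age / half_life_days) for age in ages_in_days]


def weighted_mean(values, weights):
    """Weighted average of `values`; the weights need not sum to 1."""
    total = sum(weights)
    if total == 0:
        raise ValueError("weights must not all be zero")
    return sum(v * w for v, w in zip(values, weights)) / total


if __name__ == "__main__":
    # Hypothetical history: a year of daily values at 100, where only the
    # last 7 days (ages 0-6) jumped to 150.
    ages = list(range(365))
    values = [150 if age < 7 else 100 for age in ages]

    for half_life in (7, 180):
        weights = exponential_decay_weights(ages, half_life)
        print(half_life, round(weighted_mean(values, weights), 2))
```

With a 7-day half-life, the recent jump pulls the weighted average to about 125 even though it covers only 7 of 365 days; with a 180-day half-life the estimate stays close to the long-run level of 100. The half-life is the knob that decides how strongly the model favors recent data.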

Recency bias operates distinctly across various AI architectures, each with unique manifestations and business consequences. The following table illustrates how this bias appears across major AI system categories:

| AI System Type | Manifestation | Impact | Example |
|---|---|---|---|
| RAG Systems | Recent documents ranked higher in retrieval; older authoritative sources deprioritized | Newer but less authoritative information prioritized over established knowledge | ChatGPT citing recent blog posts over foundational research papers |
| Recommendation Systems | Sequential models favor items trending in the last 7-30 days | Users receive trending products instead of personalized matches | E-commerce platforms recommending viral items over a user's preference history |
| Time-Series Models | Recent data points weighted 5-10x higher in forecasting | Overreaction to short-term fluctuations; poor long-term predictions | Stock price models reacting dramatically to daily volatility |
| Search Ranking | Publication date used as the primary ranking signal after relevance | Newer content ranks above more comprehensive older articles | Google Search prioritizing recent news over definitive guides |
| Content Ranking | Engagement metrics from the last 30 days dominate ranking algorithms | Viral but low-quality content outranks established quality content | Social media feeds showing trending posts over consistently valuable creators |

RAG-enabled systems like ChatGPT, Gemini, and Claude demonstrate this bias when retrieving documents—they often surface recently published content even when older, more authoritative sources contain superior information. Sequential recommendation systems in e-commerce platforms exhibit recency bias by suggesting items that have gained traction in recent weeks rather than matching user historical preferences and behavior patterns. Time-series forecasting models used in financial services and demand planning frequently overweight recent data points, causing them to chase short-term noise rather than identifying genuine long-term trends. Search ranking algorithms incorporate publication dates as quality signals, inadvertently penalizing comprehensive, evergreen content that remains relevant years after publication. Content ranking systems on social platforms amplify recency bias by prioritizing engagement metrics from the most recent period, creating a feedback loop where older content becomes invisible regardless of its lasting value.
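
The content-ranking feedback loop can be illustrated with a small sketch that ranks items by engagement inside a trailing window. The 30-day window, the item names, and the engagement counts are hypothetical; real feed-ranking systems combine many more signals.

```python
from datetime import date, timedelta


def windowed_engagement(events, items, today, window_days):
    """Count engagement events per item inside the last `window_days` days.

    `events` is an iterable of (item_id, event_date) pairs. Every item in
    `items` appears in the result, with a count of 0 if it had no recent events.
    """
    cutoff = today - timedelta(days=window_days)
    counts = {item: 0 for item in items}
    for item, when in events:
        if when > cutoff:
            counts[item] += 1
    return counts


def rank_by_engagement(counts):
    """Highest count first; ties broken alphabetically for determinism."""
    return sorted(counts, key=lambda item: (-counts[item], item))


if __name__ == "__main__":
    today = date(2024, 6, 1)
    # Hypothetical data: a guide read once a day for two years, and a post
    # that received 50 engagements within the last 10 days.
    events = [("evergreen_guide", today - timedelta(days=d)) for d in range(730)]
    events += [("viral_post", today - timedelta(days=d % 10)) for d in range(50)]
    items = ["evergreen_guide", "viral_post"]

    print(rank_by_engagement(windowed_engagement(events, items, today, 30)))
    print(rank_by_engagement(windowed_engagement(events, items, today, 10_000)))
```

With a 30-day window, the viral post (50 recent engagements) outranks the guide (30 engagements in the window, 730 over two years); counting all-time engagement reverses the order. Once an item stops receiving engagement, its windowed count drops to zero, which is how older content can become invisible.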

Recency bias in AI systems stems from several interconnected technical and business factors rather than a single root cause. Training data composition heavily influences it: many machine learning datasets contain disproportionately more recent examples than historical ones, either because older data is discarded during preprocessing or because data collection naturally accumulates more recent samples. Model architecture also contributes. Some designs deliberately incorporate temporal weighting mechanisms, and sequence models can favor recent inputs: LSTMs tend to retain recent inputs more strongly than distant ones, and some transformer position schemes (ALiBi, for example) explicitly penalize attention to distant tokens. Search index algorithms and ranking functions explicitly use publication dates and freshness signals as quality indicators, on the reasonable assumption that recent information is more likely to be accurate and relevant. Training objectives often reward models for capturing recent trends: recommendation systems are optimized for immediate user engagement, time-series models for short-term forecast accuracy, and search systems for user satisfaction with current results. The assumption that fresher data is higher-quality data pervades AI development; engineers and data scientists often treat newer data as inherently superior without checking whether this holds for their use case and domain. Together, these architectural, methodological, and business choices create a systematic bias toward recency that becomes embedded in model behavior.
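
One quick way to check for the accumulation effect described above is to look at how training examples are distributed over time. The sketch below assumes each example carries a year label; the function names and the yearly counts are made up to mimic a collection process that grows every year.

```python
from collections import Counter


def share_by_year(years):
    """Fraction of training examples that come from each year, oldest first."""
    counts = Counter(years)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no examples")
    return {year: counts[year] / total for year in sorted(counts)}


def recent_share(years, since_year):
    """Fraction of examples dated `since_year` or later."""
    years = list(years)
    if not years:
        raise ValueError("no examples")
    return sum(1 for y in years if y >= since_year) / len(years)


if __name__ == "__main__":
    # Hypothetical dataset whose collection volume grows each year.
    years = [2019] * 100 + [2020] * 150 + [2021] * 225 + [2022] * 340 + [2023] * 510
    print(share_by_year(years))
    print(round(recent_share(years, 2022), 3))
```

In this hypothetical dataset the two most recent years supply about 64% of all examples, so a model trained on it sees mostly recent patterns unless the data is rebalanced.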

Recency bias in AI systems creates tangible, measurable business consequences across multiple industries and functions.

These consequences extend beyond individual transactions—they compound over time, creating systematic disadvantages for established brands, proven solutions, and historical knowledge while artificially amplifying the visibility and perceived value of recent but potentially inferior alternatives.

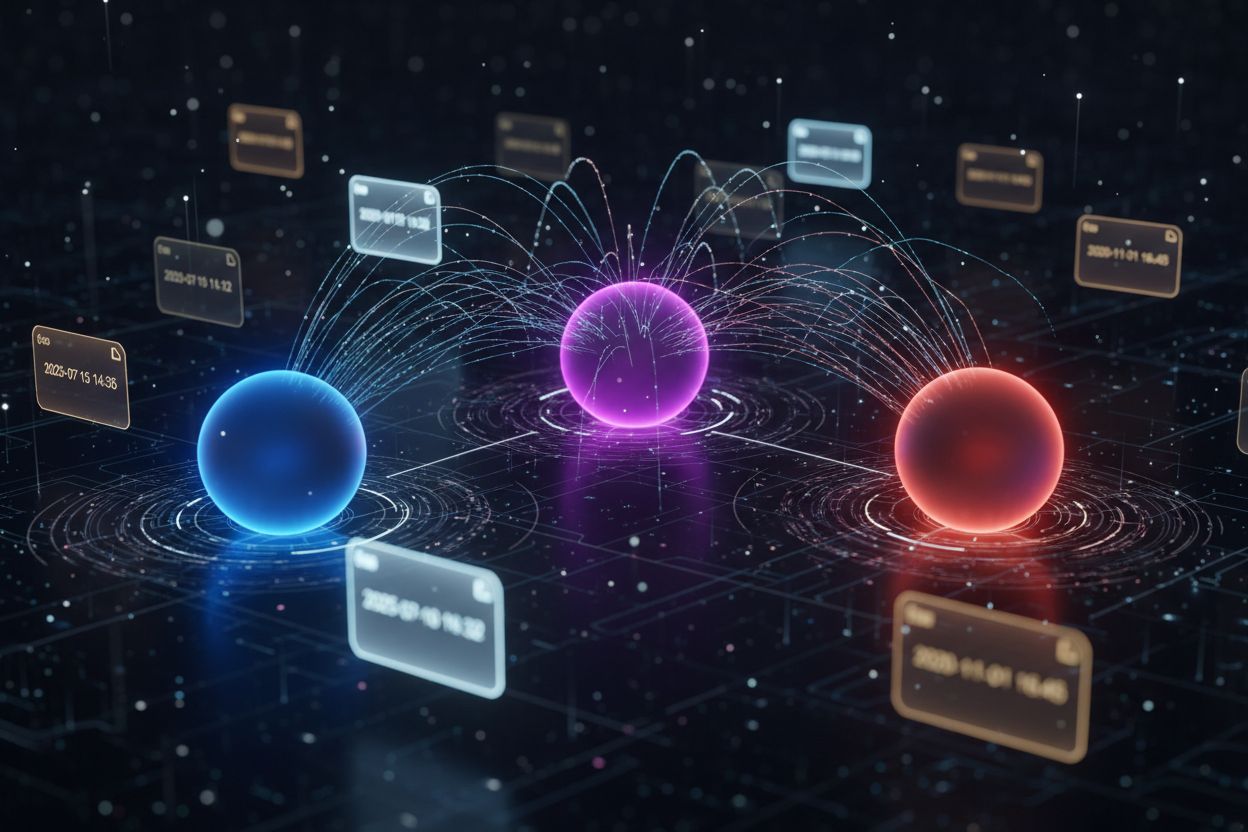

Retrieval-Augmented Generation (RAG) systems are a critical area where recency bias affects AI response quality and business outcomes. A RAG architecture combines a retrieval component that searches external knowledge bases with a generation component that synthesizes the retrieved information into a response, so recency bias introduced at the retrieval stage carries through into the generated answer. Research from Evertune indicates that approximately 62% of ChatGPT responses rely on foundational knowledge embedded during training, while 38% trigger RAG mechanisms to retrieve external documents; recency bias in the retrieval stage therefore directly affects over one-third of these AI-generated responses. Retrieval components commonly use content freshness as a ranking signal, weighting publication dates alongside relevance scores, which causes the system to surface recently published content even when older sources are more authoritative or comprehensive. Publication dates act as implicit quality indicators in many RAG systems, on the assumption that recent information is more accurate and relevant. That assumption breaks down for evergreen content, foundational knowledge, and domains where established principles remain constant. The result is a strategic challenge for content creators: staying visible in AI responses requires not just publishing high-quality content once, but refreshing and republishing it to signal freshness to RAG systems. Organizations should understand that their content’s visibility in AI-generated responses depends partly on temporal signals independent of actual quality or relevance, which shifts content strategy from “publish once, benefit forever” to “continuous refresh cycles.”
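
The sketch below illustrates how blending a freshness term into retrieval scores can reorder results. It assumes a linear blend, `(1 - alpha) * relevance + alpha * freshness`, with freshness halving every `half_life_days`; this scoring formula, the `Doc` type, and all numbers are hypothetical and are not the ranking function of ChatGPT or any other product.

```python
from dataclasses import dataclass


@dataclass
class Doc:
    doc_id: str
    relevance: float  # hypothetical retriever score in [0, 1]
    age_days: float   # days since publication or last update


def freshness(age_days, half_life_days):
    """1.0 for a brand-new document, halving every `half_life_days`."""
    return 0.5 ** (age_days / half_life_days)


def blended_score(doc, alpha, half_life_days):
    """Linear blend of relevance and freshness; alpha=0 ignores dates entirely."""
    return (1 - alpha) * doc.relevance + alpha * freshness(doc.age_days, half_life_days)


def rerank(docs, alpha, half_life_days=180.0):
    """Return documents sorted by blended score, best first."""
    return sorted(docs, key=lambda d: blended_score(d, alpha, half_life_days), reverse=True)


if __name__ == "__main__":
    docs = [
        Doc("foundational_paper", relevance=0.90, age_days=5 * 365),
        Doc("recent_blog_post", relevance=0.75, age_days=7),
    ]
    for alpha in (0.0, 0.3):
        print(alpha, [d.doc_id for d in rerank(docs, alpha)])
```

With `alpha = 0` the more relevant five-year-old paper ranks first; with `alpha = 0.3` and a 180-day half-life, the week-old blog post overtakes it. A modest freshness weight is enough to flip the order when relevance scores are close.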

Identifying recency bias requires both quantitative metrics and qualitative diagnostic approaches that reveal when AI systems are overweighting recent information. The HRLI metric (Hit Rate of Last Item) provides a quantitative measure specifically designed for sequential recommendation systems: it calculates the percentage of recommendations that are the most recent item in a user’s history, with elevated HRLI scores indicating problematic recency bias. In recommendation systems, practitioners also measure recency bias by comparing recommendations across time periods: systems with strong recency bias produce dramatically different recommendations when the same user is evaluated at different time points, whereas robust systems stay consistent while still incorporating appropriate temporal signals. Performance metrics affected by recency bias include declining accuracy on historical prediction tasks, poor performance during periods that differ from recent training data, and systematic underperformance on long-tail items that haven’t been recently active. Warning signs of problematic recency bias include sudden ranking changes when content ages despite unchanged quality, recommendation lists dominated by items from the last 7-30 days, and forecast models that consistently overreact to short-term fluctuations. Diagnostic approaches include temporal holdout validation, where models are tested on data from different time periods to reveal whether performance degrades significantly when predicting older patterns, and comparative analysis of model behavior across different temporal windows. Organizations should monitor temporal bias indicators continuously rather than treating recency bias as a one-time detection problem, because model behavior changes as new data accumulates.
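
A minimal sketch of HRLI follows. It assumes one reading of the definition above: for each user, check whether the most recently interacted item appears in that user's top-k recommendations, then average over users. The function name, data structures, and sample users are hypothetical, and published definitions may differ in detail.

```python
def hit_rate_of_last_item(histories, recommendations, k):
    """Share of users whose most recent item appears in their top-k list.

    histories: dict user -> list of item ids, oldest first.
    recommendations: dict user -> ranked list of recommended item ids.
    Users with an empty history are skipped.
    """
    if k < 1:
        raise ValueError("k must be at least 1")
    users = [u for u, items in histories.items() if items]
    if not users:
        raise ValueError("no users with a non-empty history")
    hits = sum(
        1 for u in users if histories[u][-1] in recommendations.get(u, [])[:k]
    )
    return hits / len(users)


if __name__ == "__main__":
    histories = {"alice": ["a", "b", "c"], "bob": ["x", "y"], "carol": ["m"]}
    recommendations = {
        "alice": ["c", "d", "e"],  # repeats alice's last item at rank 1
        "bob": ["z", "w", "y"],    # bob's last item is ranked third
        "carol": ["n", "o", "p"],  # carol's last item is absent
    }
    print(hit_rate_of_last_item(histories, recommendations, k=3))
    print(hit_rate_of_last_item(histories, recommendations, k=1))
```

For these three hypothetical users the score is 2/3 at k = 3 and 1/3 at k = 1. Tracking the value over time, or comparing it against a baseline model, is more informative than any single reading.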

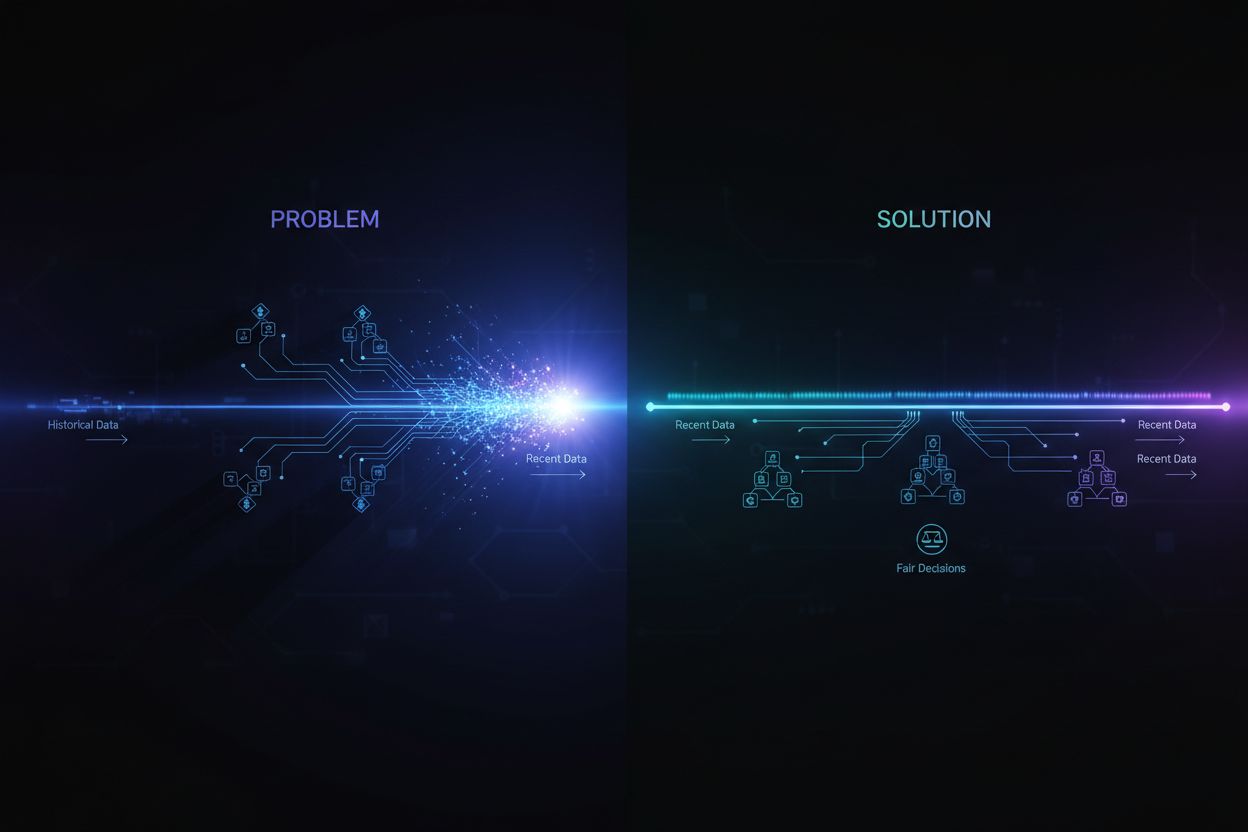

Effective mitigation of recency bias requires multi-layered strategies addressing training methodology, model architecture, and operational practices. Time-weighted models that explicitly balance recent and historical data through carefully calibrated decay functions can reduce recency bias while preserving the ability to capture genuine trend shifts—these models assign decreasing weights to older data points according to a decay schedule rather than treating all historical data equally. Balanced training data composition involves deliberately oversampling historical data and undersampling recent data during training to counteract the natural accumulation bias in datasets, ensuring models learn patterns across full temporal ranges rather than optimizing primarily for recent periods. Adversarial testing specifically designed to evaluate model behavior across different temporal windows reveals whether recency bias causes performance degradation and helps quantify the bias magnitude before deployment. Explainable AI techniques that surface which temporal features and data points most influence model decisions enable practitioners to identify when recency bias is driving predictions and adjust accordingly. Content refresh strategies acknowledge that some recency bias is inevitable and work within that constraint by ensuring important content receives periodic updates and republication to maintain visibility signals. Historical pattern integration involves explicitly encoding known seasonal patterns, cyclical trends, and long-term relationships into models as features or constraints, preventing the model from ignoring these patterns simply because they’re not prominent in recent data. Organizations should implement temporal validation frameworks that test model performance across multiple time periods and explicitly penalize models that show strong recency bias, making bias reduction a formal objective rather than an afterthought.
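
Instead of physically oversampling old data and undersampling new data, a similar balance can be reached by reweighting samples so that each time period contributes the same total weight. This swaps in a related technique, inverse-frequency reweighting, shown as a minimal sketch; the function name and period labels are hypothetical.

```python
from collections import Counter


def period_balanced_weights(periods):
    """Per-sample weights that give every period the same total weight.

    weight_i = N / (K * count[period_i]), where N is the number of samples
    and K the number of distinct periods. The weights sum to N, so the
    overall scale of a weighted loss is unchanged.
    """
    if not periods:
        raise ValueError("periods must not be empty")
    counts = Counter(periods)
    n, k = len(periods), len(counts)
    return [n / (k * counts[p]) for p in periods]


if __name__ == "__main__":
    # Hypothetical: 2 samples from 2021, 6 from 2023.
    periods = ["2021"] * 2 + ["2023"] * 6
    print(period_balanced_weights(periods))
```

Each 2021 sample gets weight 2.0 and each 2023 sample about 0.67, so both years contribute a total weight of 4. Many scikit-learn estimators accept such per-sample weights through the `sample_weight` argument of `fit`. Reweighting keeps every example, but it can increase variance when an old period has only a handful of samples.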

Recency bias fundamentally shapes how brand content appears in AI-generated responses, creating a visibility challenge distinct from traditional search engine optimization. When AI systems retrieve information to answer user queries, recency bias affects brand visibility by causing older brand content—even if more authoritative or comprehensive—to be deprioritized in favor of recently published competitor content or newer brand publications. Content refresh importance has shifted from a nice-to-have practice to a strategic necessity; brands must now continuously update and republish content to maintain visibility signals in AI systems, even when the core information hasn’t changed. Monitoring tools that track how frequently brand content appears in AI responses, which queries trigger brand citations, and how brand visibility changes over time have become essential for understanding AI-driven visibility trends. AmICited.com addresses this critical gap by providing comprehensive monitoring of how brands are cited and referenced across AI systems—the platform tracks when and how your content appears in AI-generated responses, reveals which queries surface your brand, and identifies visibility gaps where competitors are cited instead. This monitoring capability is essential because recency bias creates a hidden visibility problem: brands may not realize their content is being deprioritized until they systematically track AI citations and discover declining mention rates despite unchanged content quality. Tracking brand mentions in AI reveals patterns that traditional analytics miss—you can identify which content types maintain visibility longest, which topics require more frequent updates, and how your citation rate compares to competitors across different AI systems. 
Strategic implications include recognizing that content strategy must now account for AI visibility requirements alongside human reader needs, requiring organizations to balance evergreen content creation with strategic refresh cycles that signal freshness to AI systems.

Recency bias in AI systems raises significant ethical concerns that extend beyond technical performance to fundamental questions of fairness, equity, and access to information. Fairness implications emerge because recency bias systematically disadvantages established, reliable information sources in favor of recent content, creating a bias against historical knowledge and proven solutions that may be more valuable than recent alternatives. Disadvantaging older reliable information means that well-established medical treatments, proven business practices, and foundational scientific knowledge become less visible in AI responses simply because they’re not recent, potentially causing users to overlook superior options in favor of newer but less validated alternatives. Healthcare ethics concerns are particularly acute: clinical decision support systems exhibiting recency bias may recommend recently published but insufficiently validated treatments over established protocols with decades of safety data, potentially compromising patient outcomes and violating principles of evidence-based medicine. Credit scoring discrimination can emerge when AI systems trained on recent economic data make lending decisions that overweight recent financial behavior while ignoring longer-term creditworthiness patterns, potentially disadvantaging individuals recovering from temporary hardship or those with limited recent credit history. Criminal justice implications arise when risk assessment algorithms overweight recent behavior, potentially recommending harsher sentences for individuals whose recent actions don’t reflect their overall pattern or rehabilitation trajectory. Accessibility of historical knowledge becomes compromised when AI systems systematically deprioritize older information, effectively erasing institutional memory and making it harder for users to access the full context needed for informed decision-making. 
These ethical considerations suggest that addressing recency bias isn’t merely a technical optimization problem but a responsibility to ensure AI systems provide fair access to information across temporal dimensions and don’t systematically disadvantage reliable historical knowledge in favor of recent but potentially inferior alternatives.

Human recency bias is a cognitive limitation rooted in memory accessibility, while AI recency bias emerges from algorithmic design choices and training methodologies. Both prioritize recent information, but AI bias stems from temporal weighting functions, model architecture, and ranking algorithms rather than psychological shortcuts.

If your content isn't regularly updated, it can lose visibility in RAG-enabled AI responses like ChatGPT and Gemini. Brands that publish fresh content tend to see higher mention rates in AI-generated responses, while stale content can become effectively invisible regardless of its quality or relevance.

Complete elimination is impractical, but significant mitigation is possible through time-weighted models, balanced training data spanning multiple business cycles, and careful algorithm design that considers multiple time horizons rather than optimizing solely for recent patterns.

Sequential recommendation models often overemphasize recent user interactions to predict next items, missing long-term preferences and reducing recommendation diversity. This happens because models are optimized for immediate engagement rather than capturing the full spectrum of user interests.

Use metrics like HRLI (Hit Rate of Last Item) for recommendation systems, analyze temporal distribution in training data, monitor whether recent items consistently rank higher than appropriate, and conduct temporal holdout validation to test performance across different time periods.
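
Temporal holdout validation can be summarized by scoring held-out predictions separately for each time period. The sketch below assumes predictions have already been produced for several periods and uses accuracy as the metric; the function names, the metric choice, and the sample records are hypothetical.

```python
from collections import defaultdict


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def per_period_scores(records, score_fn=accuracy):
    """Score held-out predictions separately for each time period.

    `records` is an iterable of (period, y_true, y_pred) tuples.
    Returns {period: score}, ordered by period.
    """
    grouped = defaultdict(lambda: ([], []))
    for period, y_true, y_pred in records:
        grouped[period][0].append(y_true)
        grouped[period][1].append(y_pred)
    return {period: score_fn(t, p) for period, (t, p) in sorted(grouped.items())}


def temporal_gap(scores):
    """Best-minus-worst score across periods; a large gap is a warning sign."""
    return max(scores.values()) - min(scores.values())


if __name__ == "__main__":
    records = [
        ("2022", 1, 0), ("2022", 0, 0), ("2022", 1, 0), ("2022", 0, 1),
        ("2024", 1, 1), ("2024", 0, 0), ("2024", 1, 1), ("2024", 0, 0),
    ]
    scores = per_period_scores(records)
    print(scores, temporal_gap(scores))
```

Here the model scores 1.0 on 2024 data but only 0.25 on 2022 data, a gap of 0.75. A large gap alone does not prove recency bias, since the older period may simply be harder, but it shows where to look next.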

Content freshness signals (publication dates, update timestamps) help search indexes and AI systems identify recent content. While useful for timeliness, they can amplify recency bias if not balanced with content quality metrics, causing older authoritative sources to be deprioritized.

AI models may overweight recent market data, missing historical patterns and cycles. This leads to poor predictions during market anomalies, overreaction to short-term volatility, and failure to recognize long-term trends, resulting in procyclical lending and investment decisions.
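
Simple exponential smoothing shows how heavily weighting recent data makes a forecast overreact. In the sketch below, `alpha` is the weight on the newest observation; the series (a flat 100 followed by a one-day spike to 130) is hypothetical, and exponential smoothing stands in for the more complex models used in practice.

```python
def ewma_forecasts(series, alpha):
    """One-step-ahead forecasts from simple exponential smoothing.

    level_t = alpha * x_t + (1 - alpha) * level_{t-1}; the level after
    observing x_t is the forecast for t + 1. A larger alpha lets the newest
    observation dominate the forecast.
    """
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    if not series:
        raise ValueError("series must not be empty")
    level = series[0]  # initialise the level with the first observation
    forecasts = []
    for x in series:
        level = alpha * x + (1 - alpha) * level
        forecasts.append(level)
    return forecasts


if __name__ == "__main__":
    series = [100.0] * 20 + [130.0]
    for alpha in (0.9, 0.1):
        print(alpha, round(ewma_forecasts(series, alpha)[-1], 2))
```

With `alpha = 0.9` the next forecast jumps to about 127 after a single unusual day; with `alpha = 0.1` it moves only to about 103.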

AmICited monitors how brands appear in AI-generated responses across platforms, helping track whether content freshness strategies effectively improve visibility in AI search. The platform reveals which queries surface your brand, identifies visibility gaps, and tracks citation rate changes over time.
