How to Build Domain Authority for AI Search Engines

Learn how to build domain authority that AI search engines recognize. Discover strategies for entity optimization, citations, topical authority, and E-E-A-T sig...

Source credibility assessment is the AI-driven evaluation of content trustworthiness based on author credentials, citations, and verification. It systematically analyzes multiple dimensions, including author expertise, publisher reputation, citation patterns, and fact-checking results, to determine whether information sources merit inclusion in research, knowledge bases, or AI-generated summaries. Automating this process lets platforms evaluate credibility across millions of sources with a consistency that human reviewers alone cannot achieve.


Source credibility assessment is the systematic evaluation of information sources to determine their trustworthiness, reliability, and authority in providing accurate information. In AI-powered systems, it means analyzing multiple dimensions of a source to decide whether its content merits inclusion in research, citations, or knowledge bases. AI systems examine author credentials (educational background, professional experience, and subject-matter expertise) alongside citation patterns, which show how often and how favorably other authoritative sources reference the work. They also evaluate verification mechanisms such as peer-review status, institutional affiliation, and publication-venue reputation to establish baseline trustworthiness.

Credibility signals are the measurable indicators that AI systems detect and weigh, ranging from explicit markers such as author qualifications to implicit signals derived from textual analysis and metadata patterns. Credibility is also multidimensional: a source can be highly credible in one domain while lacking authority in another, so evaluation has to be context-aware. Assessment has become increasingly critical as the volume of information grows and misinformation spreads rapidly across digital platforms, and automation lets platforms evaluate millions of sources with a consistency that human reviewers alone cannot achieve. Understanding how these systems work helps content creators, researchers, and publishers optimize their sources for credibility recognition, and helps consumers make informed decisions about which information to trust.

AI systems evaluate source credibility through multi-signal analysis that combines natural language processing (NLP), machine learning models, and structured data evaluation. Credibility signal detection identifies specific markers in text, metadata, and network patterns that correlate with reliable information, and each signal is weighted by how well it predicts accuracy. NLP analysis examines linguistic patterns, citation density, claim specificity, and language certainty to judge whether content shows the hallmarks of rigorous research or the characteristics common to unreliable sources. Machine learning models trained on large datasets of sources labeled credible or non-credible learn complex patterns that humans might miss, enabling real-time evaluation at scale. Fact-checking integration cross-references claims against verified databases and established facts, and contradictions or unsupported assertions lower a source's credibility score. Because no single signal predicts credibility perfectly, these systems use ensemble methods that combine multiple evaluation approaches. The following table summarizes the primary signal categories that AI systems analyze:

| Signal Type | What It Measures | Examples |
|---|---|---|
| Academic Signals | Peer review status, publication venue, institutional affiliation | Journal impact factor, conference ranking, university reputation |
| Textual Signals | Writing quality, citation density, claim specificity, language patterns | Proper citations, technical terminology, evidence-based assertions |
| Metadata Signals | Author credentials, publication date, update frequency, source history | Author degrees, publication timeline, revision history |
| Social Signals | Citation counts, sharing patterns, expert endorsements, community engagement | Google Scholar citations, academic network mentions, peer recommendations |
| Verification Signals | Fact-check results, claim corroboration, source transparency | Snopes verification, multiple independent confirmations, methodology disclosure |
| Structural Signals | Content organization, methodology clarity, conflict of interest disclosure | Clear sections, transparent methods, funding source transparency |
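One simple way to combine the six signal categories is a weighted linear combination of per-category scores. The sketch below assumes each category has already been reduced to a score between 0 and 1. The category keys and the weights in `SIGNAL_WEIGHTS` are hypothetical, since platforms do not publish theirs, and a production system would more likely learn its weights or use a trained ensemble than hard-code them.

```python
"""Minimal sketch: combine per-category credibility signals into one score.

The category names and weights are hypothetical placeholders, not any
platform's published values.
"""

# Hypothetical weights for the six signal categories.
SIGNAL_WEIGHTS: dict[str, float] = {
    "academic": 0.25,
    "textual": 0.15,
    "metadata": 0.15,
    "social": 0.10,
    "verification": 0.25,
    "structural": 0.10,
}


def credibility_score(
    signals: dict[str, float],
    weights: dict[str, float] = SIGNAL_WEIGHTS,
) -> float:
    """Return the weighted mean of the supplied category scores (each in [0, 1]).

    Categories absent from `signals` are skipped and the remaining weights are
    renormalized, so a missing signal neither raises nor lowers the score.
    """
    for name, value in signals.items():
        if name not in weights:
            raise KeyError(f"unknown signal category: {name!r}")
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} score must be in [0, 1], got {value}")

    total_weight = sum(weights[name] for name in signals)
    if total_weight == 0:
        raise ValueError("no weighted signal categories supplied")
    return sum(weights[name] * value for name, value in signals.items()) / total_weight


if __name__ == "__main__":
    # A hypothetical peer-reviewed paper with no social or structural data yet.
    paper = {"academic": 0.9, "textual": 0.8, "verification": 0.7}
    print(round(credibility_score(paper), 3))  # 0.8
```

Renormalizing over the categories that are present means a source with a single strong signal can score as high as one with many; a real system might instead treat missing signals as a penalty or as added uncertainty.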

Several interconnected factors carry the most weight when AI systems evaluate source reliability. Author reputation is a foundational marker: established experts in a field carry significantly more weight than unknown contributors. Publisher reputation extends the assessment to the institutional context, since sources published in peer-reviewed journals or by established academic presses start from higher baseline credibility. Citation patterns reveal how the broader scholarly community has engaged with a source; highly cited works in reputable venues indicate that the community has validated the research. Recency matters in context: recent publications demonstrate current knowledge, while older foundational works retain credibility through their historical impact and continued relevance. Bias detection algorithms check whether sources disclose potential conflicts of interest, funding sources, or ideological positions that might influence their conclusions. Engagement from the academic and professional community, including citations and peer discussion, provides external validation. The following factors represent the most critical elements that AI systems prioritize:

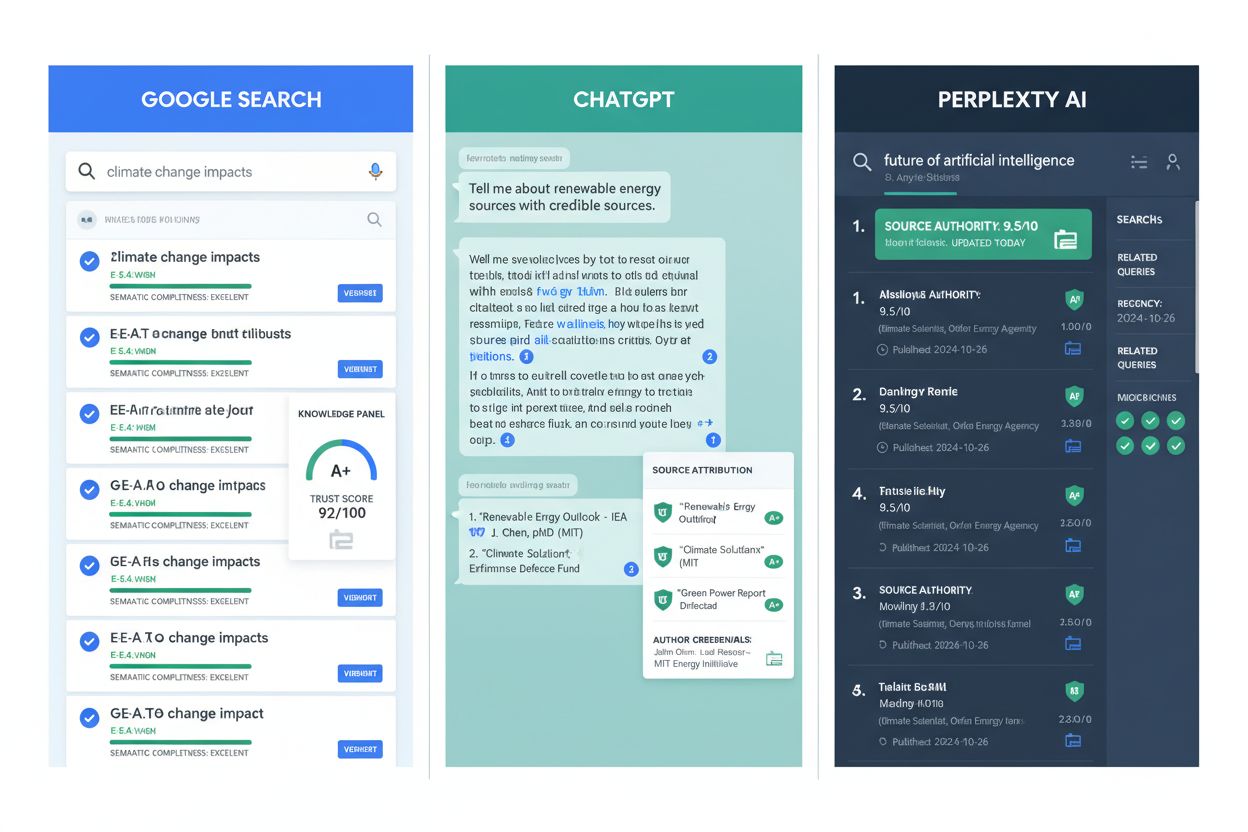
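The recency factor, where freshness matters but foundational works keep their standing, can be expressed as exponential decay with a citation-based floor. This is a minimal sketch under stated assumptions: the five-year half-life, the log-scaled citation floor, and its 0.8 cap are hypothetical parameters chosen only for illustration.

```python
import math


def recency_weight(
    age_years: float,
    citations: int,
    half_life_years: float = 5.0,  # hypothetical: freshness halves every 5 years
    citation_scale: int = 1000,    # hypothetical: citation count at which the floor saturates
    max_floor: float = 0.8,        # hypothetical: keeps the floor below a brand-new source's 1.0
) -> float:
    """Return a recency weight in [0, 1].

    Freshness decays exponentially with age. A floor that grows with the
    logarithm of the citation count keeps heavily cited foundational works
    from decaying toward zero.
    """
    if age_years < 0 or citations < 0:
        raise ValueError("age_years and citations must be non-negative")
    freshness = 0.5 ** (age_years / half_life_years)
    impact = min(1.0, math.log1p(citations) / math.log1p(citation_scale))
    return max(freshness, max_floor * impact)


if __name__ == "__main__":
    print(recency_weight(0, 0))      # brand-new, uncited: 1.0
    print(recency_weight(10, 0))     # ten years old, uncited: 0.25
    print(recency_weight(30, 5000))  # thirty years old, highly cited: 0.8
```

Using `max` rather than a sum means citations only rescue old work from decay; they never push a source's recency weight above what a fresh publication would receive.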

AI-powered credibility assessment has become integral to major information platforms and research infrastructure. Google AI Overviews use credibility signals to decide which sources appear in AI-generated summaries, favoring content from established publishers and verified experts. ChatGPT and similar language models apply credibility assessment during training to weight sources, though they struggle to evaluate novel claims in real time. Perplexity AI explicitly incorporates source credibility into its citation methodology, displaying source reputation alongside search results so users can judge information quality. In academic research, credibility assessment tools help researchers find high-quality sources faster, shortening literature review while strengthening the sources a study builds on. Content preservation initiatives use credibility assessment to prioritize archiving authoritative sources, so future researchers can access reliable historical information. AmICited.com is a monitoring solution that tracks how sources are cited and evaluated across platforms, helping publishers understand their credibility standing and identify opportunities for improvement. Fact-checking organizations use automated credibility assessment to prioritize claims for manual verification, focusing human effort on high-impact misinformation. Educational institutions increasingly use credibility assessment tools to teach source evaluation, making implicit criteria explicit and measurable. Together, these applications show that credibility assessment has moved from a theoretical framework to practical infrastructure supporting information quality across digital ecosystems.
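Prioritizing claims for manual fact-checking can be framed as a ranking problem. The sketch below assumes a simple heuristic that is not taken from any named organization: expected harm is approximated as estimated reach multiplied by one minus the source's credibility score, and the highest-harm claims fill a fixed review budget. The claim texts and numbers are hypothetical.

```python
import heapq


def triage_claims(
    claims: list[tuple[str, float, int]],
    budget: int,
) -> list[str]:
    """Pick up to `budget` claims for human fact-checkers.

    Each claim is (text, source_credibility in [0, 1], estimated_reach).
    Priority = reach * (1 - credibility): widely seen claims from
    low-credibility sources are reviewed first.
    """
    scored = []
    for text, credibility, reach in claims:
        if not 0.0 <= credibility <= 1.0:
            raise ValueError(f"credibility must be in [0, 1]: {text!r}")
        scored.append((reach * (1.0 - credibility), text))
    top = heapq.nlargest(budget, scored, key=lambda item: item[0])
    return [text for _, text in top]


if __name__ == "__main__":
    queue = [
        ("claim A", 0.9, 100_000),  # credible source, huge reach
        ("claim B", 0.2, 50_000),   # weak source, large reach
        ("claim C", 0.5, 1_000),    # middling source, small reach
    ]
    print(triage_claims(queue, budget=2))  # ['claim B', 'claim A']
```

`heapq.nlargest` avoids sorting the whole queue when the review budget is much smaller than the number of incoming claims.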

Despite significant advances, automated credibility assessment faces substantial limitations that require human oversight and contextual judgment. Engagement signal bias presents a fundamental challenge; popular sources may receive high credibility scores based on social signals despite containing inaccurate information, as engagement metrics correlate imperfectly with accuracy. False positives and false negatives occur when credibility algorithms misclassify sources—established experts in emerging fields may lack sufficient citation history while prolific misinformation creators develop sophisticated credibility signals. Evolving misinformation tactics deliberately exploit credibility assessment systems by mimicking legitimate sources, creating fake author credentials, and manufacturing false citations that fool automated systems. Domain-specific credibility variation means that a source credible in one field may lack authority in another, yet systems sometimes apply uniform credibility scores across domains. Temporal dynamics complicate assessment; sources credible at publication may become outdated or discredited as new evidence emerges, requiring continuous re-evaluation rather than static scoring. Cultural and linguistic bias in training data means that credibility assessment systems may undervalue sources from non-English-speaking regions or underrepresented communities, perpetuating existing information hierarchies. Transparency challenges arise because many credibility assessment algorithms operate as black boxes, making it difficult for sources to understand how to improve their credibility signals or for users to understand why certain sources received particular scores. These limitations underscore that automated credibility assessment should complement rather than replace human critical evaluation.
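To avoid applying one uniform score across domains, credibility can be keyed by source and topic domain together. In this minimal sketch, the class name, the source and domain labels, and the neutral 0.5 prior for unscored domains are all hypothetical; the point is only that authority earned in one field is not silently transferred to another.

```python
class DomainCredibility:
    """Credibility scores keyed by (source, domain) rather than by source alone."""

    def __init__(self, prior: float = 0.5) -> None:
        # Hypothetical neutral prior used when a source has no score in a domain.
        self.prior = prior
        self._scores: dict[tuple[str, str], float] = {}

    def set_score(self, source: str, domain: str, score: float) -> None:
        if not 0.0 <= score <= 1.0:
            raise ValueError("score must be in [0, 1]")
        self._scores[(source, domain)] = score

    def score(self, source: str, domain: str) -> float:
        # Fall back to the prior, not to the source's score in some other domain.
        return self._scores.get((source, domain), self.prior)


if __name__ == "__main__":
    registry = DomainCredibility()
    registry.set_score("example-cardiology-journal", "medicine", 0.92)
    print(registry.score("example-cardiology-journal", "medicine"))   # 0.92
    print(registry.score("example-cardiology-journal", "economics"))  # 0.5
```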

Content creators and publishers can significantly strengthen their credibility signals by adopting evidence-based practices that align with how AI systems evaluate trustworthiness:

- Apply E-E-A-T principles (Experience, Expertise, Authoritativeness, and Trustworthiness) by clearly displaying author credentials, professional affiliations, and relevant qualifications on content pages.
- Cite properly: link to high-quality sources, use a consistent citation format, and make sure every claim references verifiable evidence. This signals that content is built on established knowledge rather than speculation.
- Be transparent about methodology so AI systems can recognize rigorous research practices: explain data sources, research methods, limitations, and any potential conflicts of interest.
- Maintain author profiles with detailed biographical information, publication history, and professional credentials that AI systems can verify and evaluate.
- Update content regularly to show a commitment to accuracy. Outdated information lowers credibility scores, while regular revisions signal that you monitor new developments in your field.
- Disclose funding sources and affiliations explicitly. Transparency about potential biases increases credibility rather than decreasing it, because AI systems treat disclosed conflicts as less problematic than hidden ones.
- Build citation authority by publishing in reputable venues, pursuing peer review, and earning citations from other credible sources; this creates a positive feedback loop in which credibility begets credibility.
- Engage with the professional community through conferences, collaborations, and peer discussion. These engagement signals validate expertise and make your work more visible to credibility assessment systems.
- Implement structured data markup using schema.org and similar standards so AI systems can automatically extract and verify author information, publication dates, and other credibility signals.
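As an illustration of the structured-data recommendation, the sketch below emits schema.org JSON-LD for an article using real schema.org types and properties (`Article`, `Person`, `Organization`, `author`, `jobTitle`, `affiliation`, `sameAs`, `datePublished`, `dateModified`, `citation`). The function name, author, URLs, and dates are hypothetical placeholders, and how heavily any particular AI system weighs this markup is an assumption, not something documented here. The output would typically be embedded in a `<script type="application/ld+json">` element in the page.

```python
import json
from datetime import date


def article_jsonld(
    headline: str,
    author_name: str,
    job_title: str,
    affiliation: str,
    profile_urls: list[str],
    published: date,
    modified: date,
    cited_urls: list[str],
) -> str:
    """Build schema.org Article markup that exposes author and citation signals."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {
            "@type": "Person",
            "name": author_name,
            "jobTitle": job_title,
            "affiliation": {"@type": "Organization", "name": affiliation},
            # Profile pages that let a crawler corroborate the author's identity.
            "sameAs": profile_urls,
        },
        "datePublished": published.isoformat(),
        "dateModified": modified.isoformat(),
        # Sources the article cites, expressed as plain URLs.
        "citation": cited_urls,
    }
    return json.dumps(data, indent=2)


if __name__ == "__main__":
    # All values below are hypothetical placeholders.
    markup = article_jsonld(
        headline="Example Article Title",
        author_name="Jane Doe",
        job_title="Research Scientist",
        affiliation="Example University",
        profile_urls=["https://example.org/people/jane-doe"],
        published=date(2024, 1, 15),
        modified=date(2024, 6, 1),
        cited_urls=["https://example.org/papers/123"],
    )
    print('<script type="application/ld+json">')
    print(markup)
    print("</script>")
```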

The evolution of source credibility assessment will increasingly incorporate multimodal evaluation that analyzes text, images, video, and audio together to detect sophisticated misinformation that exploits single-modality analysis. Real-time verification systems will integrate with content creation platforms, providing immediate credibility feedback as creators publish, enabling course correction before misinformation spreads. Blockchain-based credibility tracking may enable immutable records of source history, citations, and corrections, creating transparent credibility provenance that AI systems can evaluate with greater confidence. Personalized credibility assessment will move beyond one-size-fits-all scoring to evaluate sources relative to individual user expertise and needs, recognizing that credibility is partially subjective and context-dependent. Integration with knowledge graphs will enable AI systems to evaluate sources not just in isolation but within networks of related information, identifying sources that contradict established knowledge or fill important gaps. Explainable AI credibility systems will become standard, providing transparent explanations of why sources received particular credibility scores, enabling creators to improve and users to understand evaluation rationale. Continuous learning systems will adapt to emerging misinformation tactics in real-time, updating credibility assessment models as new manipulation techniques appear rather than relying on static training data. Cross-platform credibility tracking will create unified credibility profiles that follow sources across the internet, making it harder for bad actors to maintain different reputations on different platforms. These developments will make credibility assessment increasingly sophisticated, transparent, and integrated into the information infrastructure that billions of people rely on daily.
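The tamper-evident record behind blockchain-based credibility tracking can be illustrated with a plain hash chain, the primitive that blockchains build on. This sketch has no distribution or consensus, so it is not a blockchain. Each entry's SHA-256 digest covers the previous digest, so editing any past event (for example, quietly rewriting a correction) breaks verification. The class name and event fields are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, event: dict) -> str:
    # Canonical JSON (sorted keys) so identical events always hash identically.
    payload = json.dumps({"prev": prev_hash, "event": event}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class ProvenanceLog:
    """Append-only log of source events (publication, citation, correction)."""

    def __init__(self) -> None:
        self.entries: list[tuple[dict, str]] = []

    def append(self, event: dict) -> str:
        prev = self.entries[-1][1] if self.entries else GENESIS
        entry_hash = _digest(prev, event)
        self.entries.append((dict(event), entry_hash))
        return entry_hash

    def verify(self) -> bool:
        """Recompute every digest; False means some entry was altered."""
        prev = GENESIS
        for event, entry_hash in self.entries:
            if _digest(prev, event) != entry_hash:
                return False
            prev = entry_hash
        return True


if __name__ == "__main__":
    log = ProvenanceLog()
    log.append({"type": "published", "url": "https://example.org/a"})
    log.append({"type": "correction", "note": "fixed sample size"})
    print(log.verify())  # True
    log.entries[1][0]["note"] = "no change"
    print(log.verify())  # False
```

Because anyone holding the log could recompute every hash after editing it, the latest digest has to be published or anchored somewhere independent for tampering to be detectable by third parties.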

Source credibility assessment is the systematic evaluation of information sources to determine their trustworthiness and reliability. AI systems analyze multiple dimensions including author credentials, publisher reputation, citation patterns, and fact-checking results to establish whether sources merit inclusion in research, knowledge bases, or AI-generated summaries. This automated process enables platforms to evaluate millions of sources consistently.

AI systems detect credibility signals through natural language processing, machine learning models, and structured data analysis. They examine academic signals (peer review status, institutional affiliation), textual signals (citation density, claim specificity), metadata signals (author credentials, publication dates), social signals (citation counts, expert endorsements), and verification signals (fact-check results, claim corroboration). These signals are weighted based on their predictive value for accuracy.

The most critical credibility factors include author reputation and expertise, publisher reputation, citation count and quality, recency and timeliness, bias and conflict disclosure, engagement signals from the professional community, source verification through fact-checking, background knowledge integration, creator association with reputable institutions, and update frequency. These factors collectively establish source reliability and trustworthiness.

Publishers can improve credibility by implementing E-E-A-T principles (Experience, Expertise, Authoritativeness, Trustworthiness), using proper citation practices, maintaining transparent methodology, displaying detailed author profiles with credentials, updating content regularly, disclosing funding sources and affiliations, building citation authority through peer review, engaging with the professional community, and implementing structured data markup to help AI systems extract credibility information.

Automated credibility assessment faces challenges including engagement signal bias (popular sources may score high despite inaccuracy), false positives and negatives, evolving misinformation tactics that mimic legitimate sources, domain-specific credibility variation, temporal dynamics as sources become outdated, cultural and linguistic bias in training data, and transparency challenges with black-box algorithms. These limitations mean automated assessment should complement rather than replace human critical evaluation.

Google AI Overviews prioritizes sources with established publishers and verified experts for AI-generated summaries. ChatGPT weights sources during training based on credibility signals. Perplexity explicitly displays source reputation alongside search results. AmICited.com monitors how sources are cited across all major AI platforms, helping publishers understand their credibility standing and identify improvement opportunities.

Future developments include multimodal evaluation analyzing text, images, video, and audio together; real-time verification systems providing immediate credibility feedback; blockchain-based credibility tracking; personalized credibility assessment relative to user expertise; integration with knowledge graphs; explainable AI systems providing transparent scoring rationale; continuous learning systems adapting to new misinformation tactics; and cross-platform credibility tracking creating unified profiles.

Source credibility assessment is critical because it determines which sources appear in AI-generated summaries, influence AI training data, and shape what information billions of people encounter. Accurate credibility assessment helps prevent misinformation spread, ensures AI systems provide reliable information, supports academic research quality, and maintains trust in AI-powered information systems. As AI becomes more influential in information discovery, credibility assessment becomes increasingly important.

Track how your sources are cited and evaluated across Google AI Overviews, ChatGPT, Perplexity, and Gemini. AmICited.com helps you understand your credibility standing and identify opportunities for improvement.
