The Truth About LLMs.txt: Overhyped or Essential?

Critical analysis of LLMs.txt effectiveness. Discover whether this AI content standard is essential for your site or just hype. Real data on adoption, platform ...

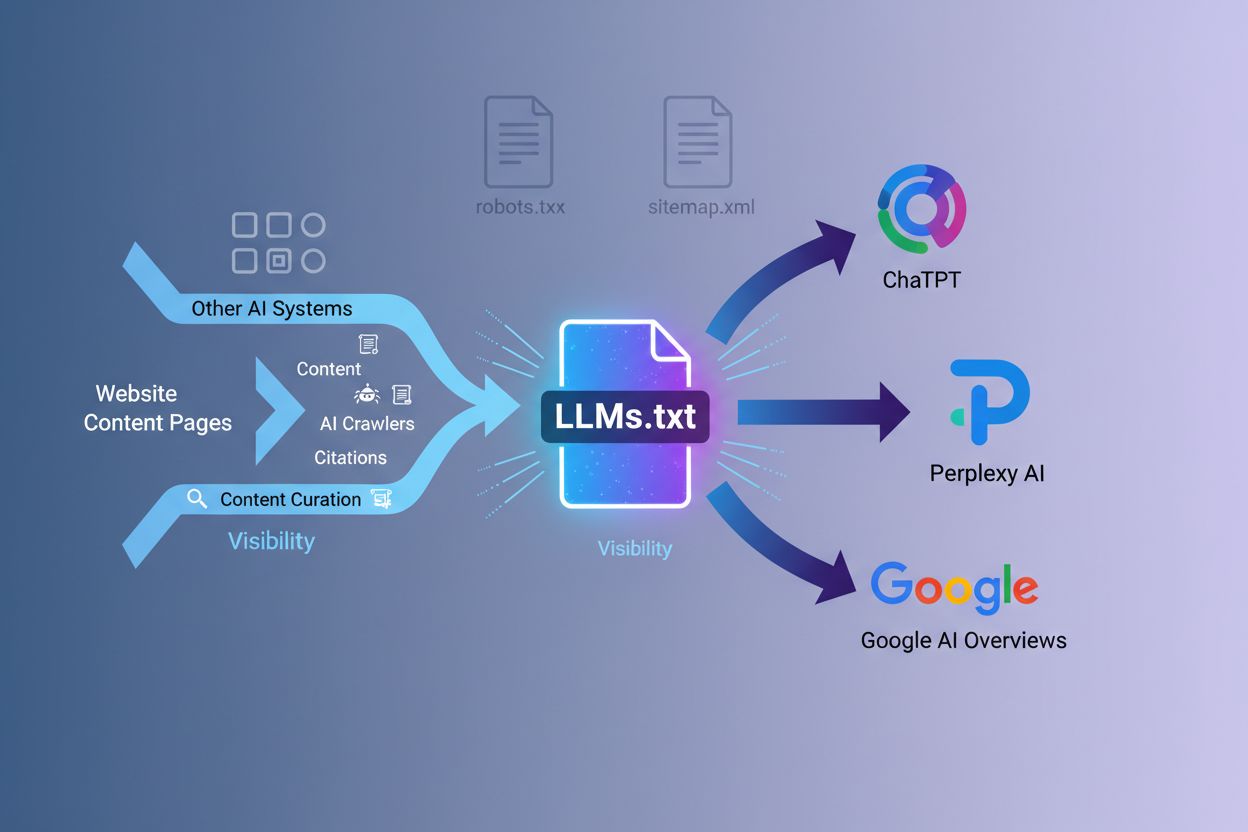

A proposed standard file placed at a website’s root domain that communicates with AI crawlers and large language models about high-quality, citable content. Similar to robots.txt but designed for inference-time guidance rather than access control. Helps AI systems discover and prioritize authoritative content when generating responses. Becoming increasingly adopted by major AI platforms like OpenAI, Anthropic, Perplexity, and Google.

A proposed standard file placed at a website's root domain that communicates with AI crawlers and large language models about high-quality, citable content. Similar to robots.txt but designed for inference-time guidance rather than access control. Helps AI systems discover and prioritize authoritative content when generating responses. Becoming increasingly adopted by major AI platforms like OpenAI, Anthropic, Perplexity, and Google.

The LLMs.txt file is a plain text markdown file placed at the root domain of a website that serves as a curated guide for large language models during inference time. Unlike traditional SEO tools, LLMs.txt is designed to help AI crawlers and language models discover and prioritize high-quality content on your website when they’re generating responses or searching for information. This proposed standard represents a shift in how websites communicate with artificial intelligence systems, moving beyond the blocking mechanisms of robots.txt to instead provide intelligent content curation. The file functions as a content roadmap that tells AI systems which pages, articles, and resources are most valuable, authoritative, and relevant for their purposes. It’s important to understand that LLMs.txt is not about blocking or allowing AI training—it’s specifically about inference-time ingestion, helping AI systems find the right content when answering user questions. The file is written in markdown format and stored as plain text, making it simple to create and maintain. By implementing LLMs.txt, websites can ensure that when AI systems reference their content, they’re pulling from the most accurate, well-structured, and authoritative sources available.

While robots.txt and sitemap.xml have served websites well for traditional search engines, LLMs.txt addresses a fundamentally different need in the age of artificial intelligence. The key distinction lies in their primary functions and timing: robots.txt controls crawling behavior and what search engines can access, sitemap.xml helps search engines discover and index pages, while LLMs.txt guides AI systems during inference time when they’re actively generating responses. It’s crucial to understand that LLMs.txt doesn’t block or allow AI training—it simply curates which content AI systems should prioritize when answering questions or retrieving information. The three files serve complementary purposes and can absolutely coexist on the same domain without conflict. Where robots.txt is about access control and sitemap.xml is about discoverability, LLMs.txt is about content quality and relevance. Think of it this way: robots.txt says “what you can crawl,” sitemap.xml says “here’s what exists,” and LLMs.txt says “here’s what matters most.” This distinction is particularly important because AI systems need different signals than traditional search engines—they need to understand which content is authoritative, well-structured, and suitable for citation.

| File | Primary Function | Main Purpose | Use Case |

|---|---|---|---|

| robots.txt | Access Control | Prevent/allow crawler access | Blocking sensitive pages from search engines |

| sitemap.xml | Discoverability | Help search engines find pages | Improving indexation of new or deep content |

| LLMs.txt | Content Curation | Guide AI inference-time retrieval | Directing AI systems to authoritative sources |

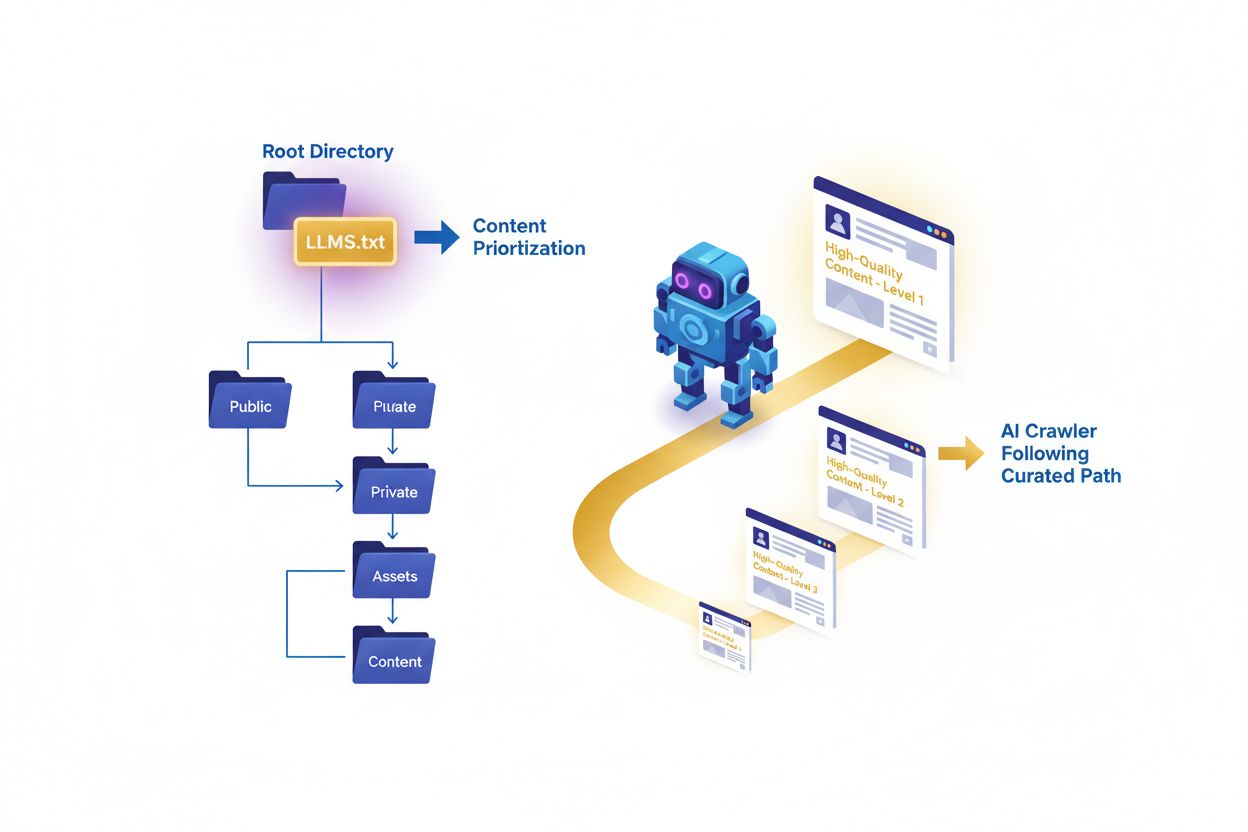

The LLMs.txt file follows a markdown-based structure that’s both human-readable and machine-parseable, making it accessible to both content creators and AI systems. The file typically begins with an H1 title (using #) that identifies the website and its purpose, followed by an introductory blockquote that provides context about the site’s mission or focus. The core structure includes organized sections using H2 headings (##) that categorize different types of content—such as “Core Resources,” “Guides,” “Documentation,” or “Best Practices”—each containing a curated list of URLs with brief descriptions. An “Optional” section at the end allows websites to include additional resources that might be valuable but aren’t part of the primary curation. The file uses plain text UTF-8 encoding to ensure compatibility across all systems and AI platforms. Each URL entry typically includes the full path and a short description explaining why that content is valuable or what it covers. The recommended file size is generally kept under 100KB to ensure efficient processing by AI systems, though there’s no hard limit. The markdown format allows for flexible organization while maintaining clarity, and the structure should reflect your site’s actual content hierarchy and importance.

# Example Website - LLMs.txt

> This is Example Website, a comprehensive resource for learning about [your topic].

> We provide authoritative guides, tutorials, and documentation for [your domain].

## Core Resources

- https://example.com/about - Overview of our mission and expertise

- https://example.com/getting-started - Essential starting point for new users

## Comprehensive Guides

- https://example.com/guide/advanced-techniques - In-depth exploration of advanced methods

- https://example.com/guide/best-practices - Industry standards and recommendations

## Documentation

- https://example.com/docs/api-reference - Complete API documentation

- https://example.com/docs/installation - Setup and installation instructions

## Optional

- https://example.com/blog/latest-trends - Recent industry insights

- https://example.com/case-studies - Real-world implementation examples

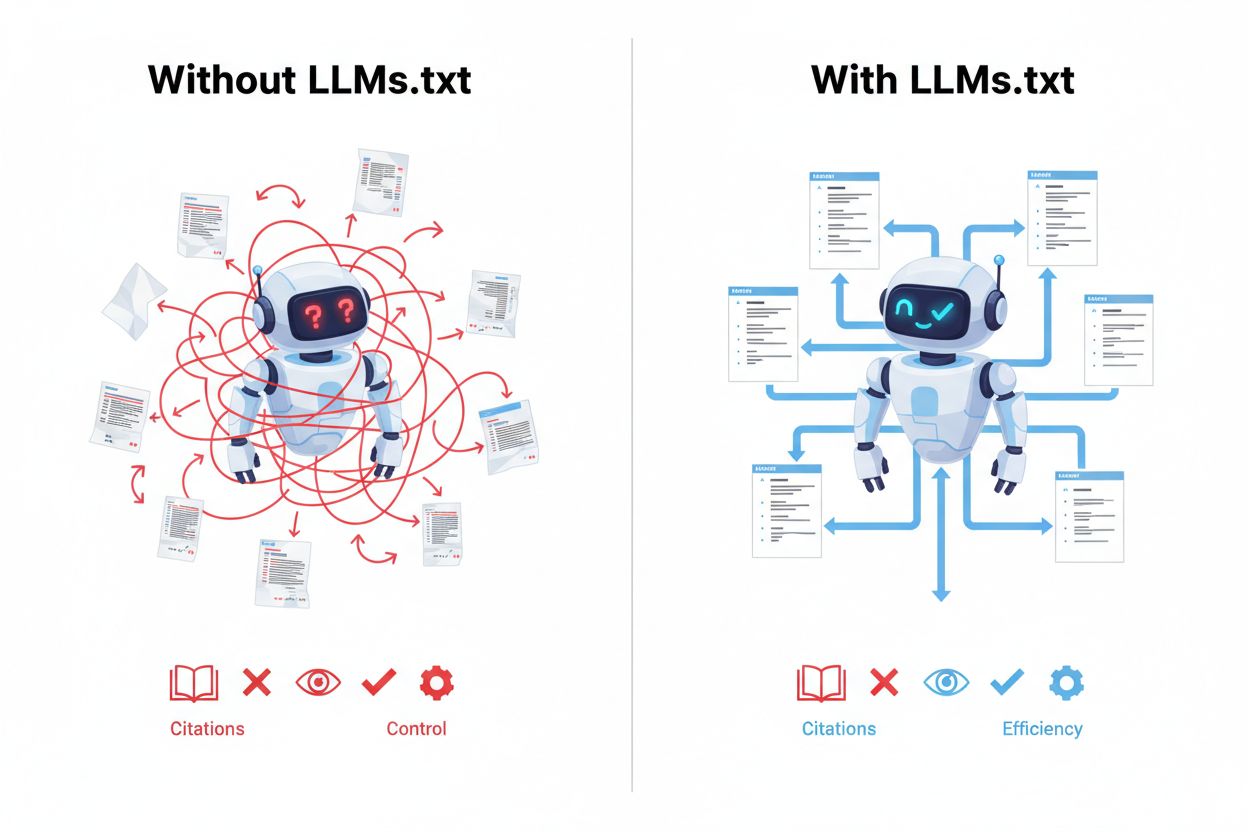

Implementing LLMs.txt provides significant advantages in the emerging landscape of AI-powered search and content discovery. The primary benefit is inference-time ingestion, which means your curated content gets prioritized when AI systems are actively answering user questions rather than during training phases. This leads to better AI understanding of your content’s context, authority, and relevance, resulting in more accurate citations and references when AI systems mention your work. By implementing LLMs.txt, you gain direct control over discovery, ensuring that AI systems find your best content first rather than potentially lower-quality pages. The file enhances your visibility in AI search results and AI-powered applications, creating a new channel for traffic and attribution that complements traditional SEO. Organizations that adopt LLMs.txt early gain a competitive advantage by establishing themselves as authoritative sources in their field before the standard becomes ubiquitous. The implementation also serves as future-proofing, preparing your website for the inevitable shift toward AI-driven content discovery.

Key use cases include:

LLM-friendly content possesses specific characteristics that make it more valuable and usable for artificial intelligence systems during inference. The most important feature is clear structure with proper heading hierarchy, using H1, H2, and H3 tags to organize information logically so AI systems can understand the content’s flow and relationships. Short paragraphs (typically 2-4 sentences) are preferred because they allow AI systems to extract discrete concepts and ideas more effectively than dense blocks of text. Content should include lists, tables, and bullet points that break down complex information into digestible components, making it easier for AI to parse and reference specific points. Minimal distractions like auto-playing videos, pop-ups, or excessive advertisements should be avoided, as they don’t contribute to the core content value. Semantic clarity is essential—using clear language, defining technical terms, and avoiding ambiguity helps AI systems understand your meaning accurately. The content should be self-contained and contextual, meaning it makes sense even when extracted and used outside its original page context. This approach directly supports AI SEO and improves the likelihood that your content will be cited accurately and completely when AI systems reference your work.

Proper implementation of LLMs.txt requires strategic thinking about which content truly deserves inclusion and how to organize it for maximum value. The file must be placed at the root domain (e.g., example.com/llms.txt) to be easily discoverable by AI systems and crawlers. Rather than dumping your entire sitemap into LLMs.txt, focus on quality over quantity—include only your most authoritative, evergreen, and valuable content that you’d want AI systems to reference. Prioritize high-value resources like comprehensive guides, documentation, tutorials, and original research that demonstrate expertise and provide genuine value. Consider including your homepage or about page to help AI systems understand your organization’s mission and credibility. The content you select should be well-maintained and regularly updated, as outdated information can harm your credibility with AI systems. Organize content logically using clear section headings that reflect your site’s structure and content categories. Avoid including authentication-required content, paywalled articles, or pages that require user accounts, as AI systems won’t be able to access them. Regularly audit and update your LLMs.txt file to reflect changes in your content strategy, remove broken links, and add new authoritative resources as they’re created.

LLMs.txt adoption is accelerating rapidly among major AI platforms and companies that recognize the value of curated content sources. OpenAI, Anthropic, Perplexity, and Google have all indicated support for or interest in the LLMs.txt standard, with some platforms actively using it to improve their retrieval and citation systems. The standard is still emerging and not yet mandatory, but it’s becoming increasingly recognized as a best practice for websites that want to optimize their visibility in AI-powered applications. Several directories and registries have emerged to catalog websites that implement LLMs.txt, making it easier for AI systems to discover and prioritize curated content sources. Early adopters are gaining a significant advantage by establishing themselves as authoritative sources before the standard becomes ubiquitous across all AI platforms. Real-world examples show that websites implementing LLMs.txt are seeing improved citation rates and better representation in AI-generated content. The adoption trajectory suggests that LLMs.txt will become as standard as robots.txt and sitemap.xml within the next few years, making implementation a prudent investment for forward-thinking organizations.

The distinction between llms.txt and llms-full.txt represents two complementary approaches to guiding AI systems through your content. LLMs.txt is the curated, human-selected version that contains only your most important, authoritative, and valuable content—typically 20-100 URLs organized by category with descriptions. LLMs-full.txt, by contrast, is a complete, machine-readable version that includes every page on your website in a structured format, often generated automatically from your sitemap or content management system. The primary difference is intentionality: llms.txt requires human judgment and curation, while llms-full.txt is comprehensive and exhaustive. LLMs.txt should be used when you want to guide AI systems toward your best content and establish clear authority signals, while llms-full.txt serves as a fallback for AI systems that want complete coverage of your site. Both files use markdown formatting but with different organizational philosophies—llms.txt is selective and strategic, while llms-full.txt is inclusive and complete. Many organizations implement both files together, allowing AI systems to choose between curated guidance (llms.txt) or comprehensive coverage (llms-full.txt). For example, AIOSEO provides tools to generate both versions automatically, with llms.txt highlighting premium content and llms-full.txt providing complete site coverage.

Several common mistakes can undermine the effectiveness of your LLMs.txt implementation and should be carefully avoided. The most critical error is placing the file in the wrong location—it must be at the root domain (example.com/llms.txt), not in subdirectories or with different naming conventions. Missing required elements like the H1 title and introductory blockquote can confuse AI systems about your site’s purpose and authority. Including broken or outdated URLs damages your credibility and wastes AI system resources trying to access non-existent content. Over-inclusion is another common mistake—adding too many URLs (hundreds or thousands) defeats the purpose of curation and makes it harder for AI systems to identify truly important content. Poor or missing descriptions for each URL means AI systems can’t understand why that content is valuable or what it covers. Neglecting to update your LLMs.txt file regularly allows it to become stale, with outdated links and irrelevant content that no longer reflects your site’s focus. Including authentication-required content or paywalled articles that AI systems can’t actually access creates frustration and reduces trust. Finally, ensure you’re using the correct MIME type (text/plain or text/markdown) when serving the file, as incorrect configuration can prevent proper parsing by AI systems.

Several tools and resources have emerged to simplify the creation and maintenance of LLMs.txt files. AIOSEO offers a dedicated plugin that automatically generates both llms.txt and llms-full.txt files, making implementation accessible even for non-technical users. For those preferring manual creation, the process is straightforward—simply create a text file with markdown formatting and upload it to your root domain. Validation tools are available online to check your LLMs.txt file for proper formatting, broken links, and compliance with the standard. The GitHub community has created numerous repositories with templates, examples, and best practices for LLMs.txt implementation. Official documentation at llmstxt.org provides comprehensive guidance on file structure, formatting requirements, and implementation strategies. Many AI platform documentation pages now include sections on LLMs.txt support, helping you understand how different systems use your curated content. These resources collectively make it easier than ever to implement LLMs.txt and ensure your content is properly optimized for AI-driven discovery and citation.

LLMs.txt guides AI systems to your best content for inference-time use, while robots.txt controls what search engine crawlers can access. They serve different purposes and can coexist on the same domain. LLMs.txt is about curation and guidance, while robots.txt is about access control.

No, it's not mandatory, but it's becoming a best practice. Implementing LLMs.txt gives you a competitive advantage in AI-powered search results and ensures your content gets proper attribution when cited by AI systems.

The file must be placed at the root of your domain (e.g., yoursite.com/llms.txt) to be discoverable by AI systems and crawlers. It should be publicly accessible without authentication.

No, llms.txt is not designed for blocking or controlling training. It's specifically for guiding AI systems during inference (when generating responses). Use robots.txt or other mechanisms if you want to control training access.

Review and update quarterly or whenever you make significant changes to your website structure, add new important content, or change URLs. Regular maintenance ensures your file remains accurate and valuable.

OpenAI, Anthropic, Perplexity, and Google have started implementing llms.txt support. Adoption is growing as the standard becomes more established and recognized as a best practice.

LLMs.txt is a curated list of your best content (typically 20-100 URLs), while llms-full.txt contains a complete machine-readable version of all your content in Markdown format. Both can be used together for maximum flexibility.

Focus on quality over quantity. Include 10-20 of your most important, authoritative pages that best represent your expertise and content value. Avoid dumping your entire sitemap into the file.

AmICited tracks how AI systems reference your brand across ChatGPT, Perplexity, Google AI Overviews, and more. Ensure your content gets proper attribution and visibility in AI-generated responses.

Critical analysis of LLMs.txt effectiveness. Discover whether this AI content standard is essential for your site or just hype. Real data on adoption, platform ...

Learn how to implement LLMs.txt on your website to help AI systems understand your content better. Complete step-by-step guide for all platforms including WordP...

Learn what LLMs.txt is, whether it actually works, and if you should implement it on your website. Honest analysis of this emerging AI SEO standard.

Cookie Consent

We use cookies to enhance your browsing experience and analyze our traffic. See our privacy policy.